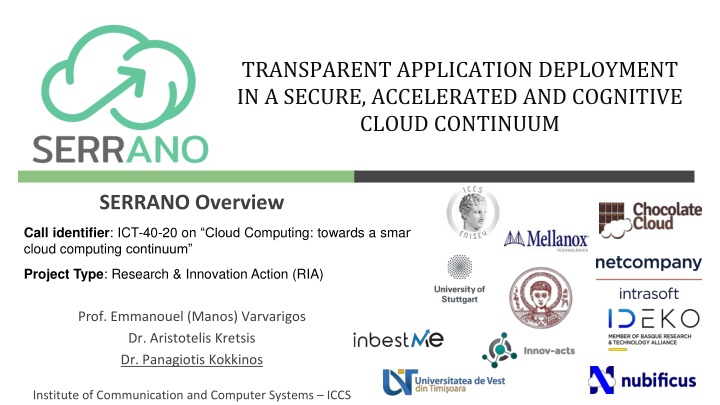

Accelerated Cloud Continuum: SERRANO Platform for Transparent Application Deployment

Explore the innovative SERRANO platform, designed for transparent application deployment in a secure, accelerated, and cognitive cloud continuum. This research project focuses on creating a smart cloud computing continuum that integrates edge, cloud, and HPC resources. The platform adopts a lifecycle methodology for seamless application deployment and cognitive resource orchestration across the continuum. Through AI-enhanced service orchestrators and autonomous adaptation mechanisms, SERRANO enables transparent deployment and continuous allocation of resources, paving the way for efficient and secure cloud operations.


Presentation Transcript

TRANSPARENT APPLICATION DEPLOYMENT IN A SECURE, ACCELERATED AND COGNITIVE CLOUD CONTINUUM

SERRANO Overview

Call identifier: ICT-40-20 on Cloud Computing: towards a smart cloud computing continuum

Project Type: Research & Innovation Action (RIA)

Prof. Emmanouel (Manos) Varvarigos, Dr. Aristotelis Kretsis, Dr. Panagiotis Kokkinos

Institute of Communication and Computer Systems (ICCS)

SERRANO Platform

Targets infrastructures consisting of edge, cloud and HPC resources and builds a continuum across them.

SERRANO lifecycle

The SERRANO platform adopts a lifecycle methodology to facilitate application deployment and cognitive resource orchestration in the edge-cloud-HPC continuum.

Lifecycle:

- Steps a, b: Transparent application deployment and the translation from generic intents to specific objectives
- Steps c, d: Continuous and autonomous allocation and adaptation of resources and applications

SERRANO Platform

Enables transparent application deployment through an abstraction layer. It implements:

- UI-based mechanisms for describing application deployments and for specifying infrastructure-agnostic intents
- An Orchestrator Plugin that translates intents from the TOSCA specification to the SERRANO-specific Application and Resource models and to Kubernetes descriptors (YAML)
- An AI-Enhanced Service Orchestrator (AISO) that uses these models and creates deployment objectives based on the intents
- AI/ML algorithms that translate intents to infrastructure-aware objectives and policies
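To make the intent-translation step more concrete, the following is a minimal sketch of how an infrastructure-agnostic intent could be turned into a Kubernetes Deployment descriptor. The intent fields (`name`, `image`, `performance`), the `PERFORMANCE_PROFILES` table and the function `intent_to_deployment` are hypothetical and chosen only for illustration; they are not the SERRANO Application and Resource models or the Orchestrator Plugin's actual logic. The descriptor is printed as JSON, which Kubernetes accepts alongside YAML.

```python
import json

# Hypothetical mapping from an abstract "performance" intent to concrete
# settings. The names and values are assumptions made for this sketch.
PERFORMANCE_PROFILES = {
    "low": {"replicas": 1, "cpu": "250m", "memory": "256Mi"},
    "high": {"replicas": 3, "cpu": "2", "memory": "2Gi"},
}


def intent_to_deployment(intent):
    """Translate an abstract intent into a Kubernetes Deployment descriptor."""
    profile = PERFORMANCE_PROFILES[intent["performance"]]
    labels = {"app": intent["name"]}
    container = {
        "name": intent["name"],
        "image": intent["image"],
        "resources": {
            "requests": {"cpu": profile["cpu"], "memory": profile["memory"]}
        },
    }
    return {
        "apiVersion": "apps/v1",
        "kind": "Deployment",
        "metadata": {"name": intent["name"], "labels": labels},
        "spec": {
            "replicas": profile["replicas"],
            "selector": {"matchLabels": labels},
            "template": {
                "metadata": {"labels": labels},
                "spec": {"containers": [container]},
            },
        },
    }


# Example intent: the user states what is wanted, not where or how it runs.
example_intent = {
    "name": "portfolio-optimizer",
    "image": "example.org/portfolio-optimizer:1.0",  # placeholder image name
    "performance": "high",
}
print(json.dumps(intent_to_deployment(example_intent), indent=2))
```

In this sketch the mapping is a static lookup table; the slides indicate that SERRANO derives the infrastructure-aware objectives with AI/ML algorithms instead.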

SERRANO platform

Adapts the continuum continuously and autonomously.

- The Resource Orchestrator, the Resource Optimisation Toolkit (ROT) and the Telemetry Framework enable the orchestration of the continuum using AI-based mechanisms
- Two-stage approach for the resource orchestration: the SERRANO Resource Orchestrator, and local orchestration mechanisms based on Kubernetes (K8s)
- The SERRANO HPC Gateway service interacts with HPC systems
- The Service Assurance and Remediation Service (SAR) and the Event Detection Engine (EDE) enable the detection of events and the re-optimization of the infrastructure
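The two-stage idea can be sketched as follows: a global stage chooses a platform (edge, cloud or HPC) from telemetry data, and a local stage then chooses a node inside that platform. All names here (`select_platform`, `select_node`, `place`, and the `latency_ms` and `free_cpu` fields) are hypothetical, and the selection rules are deliberately simple stand-ins. In SERRANO the global decision is described as AI-based and the local stage relies on K8s mechanisms, neither of which this sketch reproduces.

```python
def select_platform(platforms, cpu_needed):
    """Stage 1 (global): pick the lowest-latency platform that can host the workload."""
    candidates = [
        p for p in platforms
        if any(node["free_cpu"] >= cpu_needed for node in p["nodes"])
    ]
    if not candidates:
        return None
    return min(candidates, key=lambda p: p["latency_ms"])


def select_node(platform, cpu_needed):
    """Stage 2 (local): pick the fitting node with the most free CPU."""
    fitting = [n for n in platform["nodes"] if n["free_cpu"] >= cpu_needed]
    return max(fitting, key=lambda n: n["free_cpu"])


def place(platforms, cpu_needed):
    """Run both stages and return (platform name, node name), or None if nothing fits."""
    platform = select_platform(platforms, cpu_needed)
    if platform is None:
        return None
    node = select_node(platform, cpu_needed)
    return platform["name"], node["name"]


# Illustrative telemetry snapshot; the values are made up for this example.
telemetry = [
    {"name": "edge", "latency_ms": 5,
     "nodes": [{"name": "edge-1", "free_cpu": 2}]},
    {"name": "cloud", "latency_ms": 40,
     "nodes": [{"name": "cloud-1", "free_cpu": 8},
               {"name": "cloud-2", "free_cpu": 16}]},
]

print(place(telemetry, cpu_needed=1))   # small job fits on the edge
print(place(telemetry, cpu_needed=12))  # larger job falls back to the cloud
```

Splitting the decision this way keeps the global stage independent of per-node details, which only the local orchestrator needs to know.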

SERRANO platform

The SERRANO platform introduces and integrates a number of key innovations:

- The SERRANO Storage Service enables secure storage at the edge and in the cloud, using erasure coding
- NVIDIA's commercial off-the-shelf Data Processing Units (DPUs) were used for accelerated data processing, reducing CPU overhead through TLS offloading
- A lightweight hypervisor that runs unikernels as functions was developed, so as to isolate instances and to reduce the attack surface of the running applications
- HW- and SW-accelerated kernels (for FPGA, GPU, HPC) were developed, based on the UC applications, utilizing transprecision and approximate computing
- The vAccel framework was extended, facilitating access to hardware accelerators by applications that run as containerized functions
- The OpenFaaS serverless framework was integrated into the platform
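As a reminder of what erasure coding provides, here is a minimal sketch of the simplest possible scheme: the data is split into k fragments plus one XOR parity fragment, so any single lost fragment can be rebuilt from the others. The slides do not say which code the SERRANO Storage Service uses, so this single-parity scheme and the function names `encode` and `recover` are assumptions for illustration only; production systems typically use codes that tolerate more than one loss.

```python
def xor_bytes(a, b):
    """XOR two equal-length byte strings."""
    return bytes(x ^ y for x, y in zip(a, b))


def encode(data, k):
    """Split data into k equal-size fragments and append one XOR parity fragment."""
    size = -(-len(data) // k)  # ceiling division
    padded = data.ljust(size * k, b"\0")
    fragments = [padded[i * size:(i + 1) * size] for i in range(k)]
    parity = bytes(size)
    for fragment in fragments:
        parity = xor_bytes(parity, fragment)
    return fragments + [parity]


def recover(fragments, length):
    """Rebuild the original data when at most one fragment is missing (None)."""
    missing = [i for i, f in enumerate(fragments) if f is None]
    if len(missing) > 1:
        raise ValueError("single-parity coding tolerates only one lost fragment")
    fragments = list(fragments)
    if missing:
        present = [f for f in fragments if f is not None]
        rebuilt = bytes(len(present[0]))
        for fragment in present:
            rebuilt = xor_bytes(rebuilt, fragment)
        fragments[missing[0]] = rebuilt
    # Drop the parity fragment and the zero padding.
    return b"".join(fragments[:-1])[:length]


original = b"SERRANO secure storage"
stored = encode(original, k=4)   # 4 data fragments + 1 parity fragment
stored[2] = None                 # simulate losing one storage location
assert recover(stored, len(original)) == original
```

Each fragment could be placed on a different edge or cloud location, so that no single location holds the whole file and the loss of one location does not lose data.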

Use Case Demonstrations

UC1 - Secure Storage Use Case:

- Secure, high-performance storage of files
- HW-assisted TLS offloading reduces the total number of CPU cycles
- Serve a large number of parallel client requests with high throughput

UC2 - The Fintech Use Case:

- Dynamic Portfolio Optimization (DPO) application
- FPGA and GPU hardware accelerators and HPC resources are utilized
- Increase the number of generated portfolios that have a higher expected return and maintain the lowest possible risk
- Reduce cloud costs by using a hybrid edge-cloud infrastructure

UC3 - Anomaly Detection in Manufacturing Settings:

- Detect machine anomalies without stopping the machines
- Timely processing of streaming data, leveraging SERRANO's kernel acceleration (FPGA, GPU) mechanisms
- Perform continuous health assessment
- Predict imminent failures with enough time to avoid severe machine failures
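To illustrate what detecting anomalies on streaming data without stopping the machines can look like, the sketch below flags readings that deviate strongly from a rolling baseline. The slides do not describe the detection algorithm used in UC3, so the rolling z-score rule, the function name `detect_anomalies` and the `window` and `threshold` parameters are assumptions made purely for illustration.

```python
from collections import deque
from statistics import mean, pstdev


def detect_anomalies(stream, window=20, threshold=3.0):
    """Yield (index, value) for readings far from the rolling mean.

    A reading is flagged when it lies more than `threshold` standard
    deviations from the mean of the previous `window` normal readings.
    """
    history = deque(maxlen=window)
    for index, value in enumerate(stream):
        if len(history) == window:
            mu = mean(history)
            sigma = pstdev(history)
            if sigma > 0 and abs(value - mu) > threshold * sigma:
                yield index, value
                continue  # keep the outlier out of the baseline
        history.append(value)


# Made-up sensor readings: a steady signal with one spike.
readings = [10.0, 10.1, 9.9, 10.0] * 5 + [25.0, 10.0, 10.1]
for index, value in detect_anomalies(readings):
    print(f"anomaly at sample {index}: {value}")
```

Because the detector processes one reading at a time and keeps only a fixed-size window, it can run continuously alongside the machine; the per-sample statistics are the kind of kernel that could be offloaded to an accelerator.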

Thank you!

Prof. Emmanouel (Manos) Varvarigos, Dr. Aristotelis Kretsis, Dr. Panagiotis Kokkinos

Institute of Communication and Computer Systems (ICCS)

Visit our website! https://ict-serrano.eu

The research leading to these results has received funding from the EC HORIZON 2020 SERRANO project, under grant agreement number 101017168.
