Accelerator-CPU Integration in Heterogeneous SoC Modeling

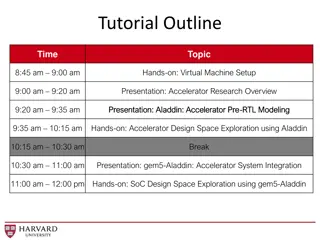



Presentation Transcript

Integration for Heterogeneous SoC Modeling
Yakun Sophia Shao, Sam Xi, Gu-Yeon Wei, David Brooks
Harvard University

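Today's Accelerator-CPU Integration
Accelerators have a simple interface: DMA. This makes it easy to integrate lots of IP, but hard to program and to share data.
[Figure: two CPU cores with L1 caches, an L2 cache, accelerators Acc #1 through Acc #n with scratchpads (SPAD), the on-chip system bus, a DMA engine, and DRAM.]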


Typical DMA Flow
1. Flush and invalidate input data from the CPU caches.
2. Invalidate the region of memory that will receive the accelerator's output.
3. Program a buffer descriptor describing the transfer (start, length, source, destination). When the data is large, program multiple descriptors.
4. Initiate the accelerator.
5. Initiate the data transfer.
6. Wait for the accelerator to complete.

DMA can be very expensive
For a 16-way parallel md-knn accelerator, computation accounts for only 20% of total execution time; the rest is spent on DMA-related data movement.

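Co-Design vs. Isolated Design
When the accelerator is designed together with its system interface rather than in isolation, there is no need to build such an aggressively parallel design.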

Features
End-to-end simulation of accelerated workloads.
Models hardware-managed caches and DMA + scratchpad memory systems.
Supports multiple accelerators.
Enables system-level studies of accelerator-centric platforms.
Xenon: a powerful design sweep system.
Highly configurable and extensible.

DMA Engine
gem5's existing DMA engine is extended to serve accelerators.
Special dmaLoad and dmaStore functions are inserted into the accelerated kernel; the trace captures them, and gem5-Aladdin handles them during simulation.
The DMA engine is currently a timing model only.
Cache flush and invalidation latency are estimated with an analytical model.

DMA Engine
/* Code representing the accelerator */
void fft1D_512(TYPE work_x[512], TYPE work_y[512]) {
    int tid, hi, lo, stride;
    /* more setup */
    /* Load both input arrays into the accelerator. */
    dmaLoad(&work_x[0], 0, 512 * sizeof(TYPE));
    dmaLoad(&work_y[0], 0, 512 * sizeof(TYPE));
    /* Run FFT here ... */
    /* Write the results back. */
    dmaStore(&work_x[0], 0, 512 * sizeof(TYPE));
    dmaStore(&work_y[0], 0, 512 * sizeof(TYPE));
}

Caches and Virtual Memory
Gaining traction on multiple platforms:
Intel QuickAssist QPI-Based FPGA Accelerator Platform (QAP)
IBM POWER8's Coherent Accelerator Processor Interface (CAPI)
System vendors provide a Host Service Layer with virtual memory and cache coherence support. The Host Service Layer communicates with the CPUs through an agent.
[Figure: processors (cores with L1 caches and an L2 cache) linked over QPI/PCIe to an FPGA; components shown include the accelerator, an accelerator agent, and the Host Service Layer.]

Caches and Virtual Memory
Accelerator caches connect directly to the system bus.
Multi-level cache hierarchies are supported.
Hybrid memory system: an accelerator can use both caches and scratchpads.
Basic MOESI coherence protocol.
A special Aladdin TLB model maps the trace address space to the simulated address space.

Demo: DMA
Exercise: change the system bus width and see its effect on accelerator performance.
Open your VM and go to ~/gem5-aladdin/sweeps/tutorial/dma/stencil-stencil2d/0
Examine these files: stencil-stencil2d.cfg, ../inputs/dynamic_trace.gz, gem5.cfg, run.sh

Demo: DMA
1. Run the accelerator with DMA simulation.
2. Change the system bus width to 32 bits by setting xbar_width=4 (bytes) in run.sh.
3. Run again and compare the results.

Demo: Caches
Exercise: see the effect of cache size on accelerator performance.
Go to ~/gem5-aladdin/sweeps/tutorial/cache/stencil-stencil2d/0
Examine these files: ../inputs/dynamic_trace.gz, stencil-stencil2d.cfg, gem5.cfg

Demo: Caches
1. Run the accelerator with cache simulation.
2. Change the cache size to 1kB by setting cache_size = 1kB in gem5.cfg.
3. Run again and compare the results.
4. Experiment with other parameters (associativity, line size, etc.).

CPU-Accelerator Cosimulation
The CPU can invoke an attached accelerator using the ioctl system call.
The CPU and accelerator communicate status through shared memory.
The CPU can spin-wait for the accelerator or do something else in the meantime (e.g., start another accelerator).


Code example
mapArrayToAccelerator establishes a mapping from the simulated address space to the trace address space. Its arguments are, in order: the ioctl request code (here MACHSUITE_FFT_TRANSPOSE); the array name (e.g., "work_x"), which associates this array with the addresses of memory accesses in the trace; and the starting address and length of one memory region that the accelerator can access.


Code example
/* Code running on the CPU. */
void run_benchmark(TYPE work_x[512], TYPE work_y[512]) {
    /* Establish a mapping from simulated to trace
     * address space. Lengths are in bytes; sizeof(work_x) would
     * only give the size of a pointer here. */
    mapArrayToAccelerator(MACHSUITE_FFT_TRANSPOSE, "work_x", work_x, 512 * sizeof(TYPE));
    mapArrayToAccelerator(MACHSUITE_FFT_TRANSPOSE, "work_y", work_y, 512 * sizeof(TYPE));
    // Start the accelerator and spin until it finishes.
    invokeAcceleratorAndBlock(MACHSUITE_FFT_TRANSPOSE);
}

Demo: disparity
You can just watch this one. If you want to follow along, go to ~/gem5-aladdin/sweeps/tutorial/cortexsuite_sweep/0
This is a multi-kernel, CPU + accelerator cosimulation.

How can I use gem5-Aladdin?
Investigate optimizations to the DMA flow.
Study cache-based accelerators.
Study the impact of system-level effects on accelerator design.
Multi-accelerator systems.
Near-data processing.
All of these will require design sweeps!

Xenon: Design Sweep System
Why Xenon?
A small declarative command language for generating design sweep configurations.
Implemented as an embedded DSL in Python.
Highly extensible.
Not gem5-Aladdin specific.
Not limited to sweeping parameters on benchmarks.

Xenon: Generation Procedure
1. Read the sweep configuration file.
2. Execute the sweep commands.
3. Generate all configurations.
4. Export the configurations to JSON.
5. Backend: read the JSON and rewrite it into the desired format.
6. Backend: generate any additional outputs.

Xenon: Data Structures
Example of a Python data structure that Xenon will operate on. A benchmark suite would contain many of these.
[Figure: tree for the md-knn benchmark. Nodes include md_kernel, the arrays force_x, force_y, and force_z, and the loops loop_i and loop_j; parameters shown include cycle_time, pipelining, partition_type, partition_factor, memory_type, and unrolling.]

Xenon: Commands
set unrolling 4
set partition_type cyclic
set unrolling for md_knn.* 8
set partition_type for md_knn.force_x block
sweep cycle_time from 1 to 5
sweep partition_factor from 1 to 8 expstep 2
set partition_factor for md_knn.force_x 8
generate configs
generate trace

Xenon: Execute
"Benchmark(\"md-knn\")": {
  "Array(\"NL\")": {
    "memory_type": "cache",
    "name": "NL",
    "partition_factor": 1,
    "partition_type": "cyclic",
    "size": 4096,
    "type": "Array",
    "word_length": 8
  },
  "Array(\"force_x\")": {
    "memory_type": "cache",
    "name": "force_x",
    "partition_factor": 1,
    "partition_type": "cyclic",
    "size": 256,
    "type": "Array",
    "word_length": 8
  },
  "Array(\"force_y\")": {
    "memory_type": "cache",
    "name": "force_y",
    "partition_factor": 1,
    "partition_type": "cyclic",
    "size": 256,
    "type": "Array",
    "word_length": 8
  }
  ...
Every configuration is written to a JSON file. A backend is then invoked to load this JSON object and write application-specific configuration files.

Demo: Design Sweeps with Xenon
Exercise: sweep some parameters.
Go to ~/gem5-aladdin/sweeps/tutorial/
Examine these files: ../inputs/dynamic_trace.gz, stencil-stencil2d.cfg, gem5.cfg


Validation
Implemented the accelerators in Vivado HLS.
Designed the complete system in Vivado Design Suite 2015.1.

DMA Optimization Results
Overlap of flush and data transfer.
Overlap of data transfer and compute.
md-knn is able to completely overlap compute with data transfer.

DMA vs Caches
DMA strengths: push-based data access; bulk transfer efficiency; simple hardware, lower power.
DMA weaknesses: manual coherence management; manual optimizations; coarse-grained communication.
Cache strengths: on-demand data access; automatic coherence handling; fine-grained communication; automatic eviction.
Cache weaknesses: larger, higher power cost; less efficient at bulk data movement.

DMA vs Caches
DMA is faster and lower power than caches here: the access patterns are regular and the input data is small, so caches suffer from cold misses.

DMA vs Caches
DMA and caches perform approximately equally. Power is dominated by the floating-point units.
