ACF.AI Activation Toolkit Phase 2 Design Checklist

The Development and Deployment Checklist is a vital component of the ACF.AI Activation Toolkit Phase 2. It ensures the effective deployment of AI systems, focusing on avoiding bias and unintended consequences. Learn about the checklist's phases, actions, and domains to successfully design and deliver AI solutions.

Presentation Transcript

ACF AI Activation Toolkit Phase 2: Design and Delivery Checklist

Last updated Jan 31, 2025 | Email ai@acf.hhs.gov with feedback and/or questions on this resource

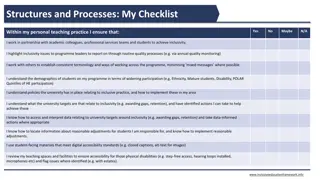

Introduction

The Development and Deployment Checklist is one component of the broader toolkit.[1] The toolkit has three components:

Phase 1: Proposal for Integrating AI. Use to think through the problem you're trying to solve and why an AI-enabled system makes sense.

Phase 2: Design and Delivery Checklist (this deck's focus). Use to ensure you are taking necessary steps to deploy and operate an effective system, avoiding and mitigating risks of bias and other negative unintended consequences. The checklist has multiple sub-components, each of which aligns to a stage in the Design and Delivery process: Design, Pilot, Scaled Deployment, and Ongoing Monitoring.

Tools for Measuring Success. Use to define how you will measure whether your AI-enabled system achieves the intended goals.

The Development and Deployment Checklist should be used after completing the Phase 1 Proposal for Integrating AI. The checklist summarizes actions the system owner and development team should take when designing and delivering an AI solution. Some actions may be dependent on the results of the ACF AI Activation Toolkit Team review.

[1] Details on when and how to use the broader toolkit can be found in ACF AI Activation Toolkit - 0 When and How to Use the Toolkit.

How the checklist is organized

Within each phase of the checklist, there are individual actions aligned to a given domain that should be completed by a system owner or other actor.

Phases of the Design and Delivery Checklist: Design, Pilot, Scaled Deployment, Ongoing Monitoring.

Domains covered across all phases: AI Use Case Inventory; Authority to Operate (ATO); Bias; Data Set Size; Data Sources and Quality; Disclosure; Fairness; Monitoring and Oversight; Opt Out; Performance Metrics; Prohibited Uses; Publicly Available Data; Sensitive Data; Sharing and Releasing AI Code and Models; Transparency.

Each of the following four slides lists the actions across all relevant domains for a single phase of the checklist.

Design Checklist

AI Project Inventory. Update your project's record in the ACF AI Project Inventory with any major changes since the initial proposal / submission.

Bias. Establish a bias testing plan based on industry measures of bias, including training data analysis and outcome evaluation. Learn more about bias identification.

Data Set Size. Establish a minimum acceptable data volume based on industry standards. Learn more about dataset sizes.

Training Data Sources and Quality. Document intended training data sources and validate data quality (e.g., completeness, accuracy, relevance).

Disclosure. Include a system feature to properly identify AI-generated content (e.g., using a watermark or footnote).

Fairness. Ensure that representative data with equal selection rates across demographic groups is used, and that end-users are involved in designing the AI solution to reduce bias and maximize fairness.

Monitoring and Oversight. Include appropriate monitoring of user activity by system owners, such as usage statistics and user logs.

Opt Out. Include an option to opt out of the use of AI and design the pathways needed to support alternatives (e.g., talking to a person rather than interfacing with an AI system).

Performance Metrics. Establish performance metrics for the solution. View examples of AI system performance metrics.

Prohibited Uses. Determine and document prohibited uses for the AI solution (e.g., denial of benefits using AI).

Publicly Available Data. If the AI system uses publicly available data, explore the possible utilities of and legal authorities supporting the use of publicly available information and ensure that ACF has legal authority to use this data.

Sensitive Data. Ensure necessary security protocols, such as FedRAMP certification or on-premises deployment, are incorporated into the design to protect sensitive information [e.g., Personally Identifiable Information (PII), Protected Health Information (PHI), Business Identifiable Information (BII)] from data leakage or misuse.

Transparency. Include notifications for affected individuals in the system design. Affected individuals may include those who are impacted by a decision made by AI (e.g., grantee selection, benefits determination). Notifications should be in writing, visible to all affected individuals, and available in the most common languages of potentially impacted individuals.

Note: this toolkit only covers the AI-specific responsibilities throughout design and delivery. For more information on broader responsibilities for the teams owning a technology system, refer to HHS's Policy for Information Security and Privacy Protection (IS2P).

Pilot Checklist

AI Project Inventory. Update your project's record in the ACF AI Project Inventory with any major changes since the design phase.

Authority to Operate (ATO). Secure an ATO for the AI system, including cybersecurity and privacy assessments.

Bias. Check system outputs for unintended bias using established measures of bias. Learn more about bias identification.

Data Sources and Quality. Establish secure connections to data sources (if necessary), protect data privacy, and address data quality as established in the design phase.

Data Set Size. Ensure that the training data set is of sufficient size (as established in the design phase) and that it has an appropriate level of real-world context to produce relevant and useful results.

Disclosure. Confirm that any AI-generated information has been properly identified as AI-generated (e.g., using a watermark or footnote).

Fairness. Where AI may affect fair treatment across the groups the AI solution impacts, you must mitigate disparities leading to unlawful discrimination and harmful bias. If disparities cannot be mitigated, discontinue the use of the AI for agency decision making.

Monitoring and Oversight. Assess monitoring logs (e.g., log review) to ensure that pilot participants are not using the solution for prohibited uses.

Opt Out. Assess the frequency of opt out to understand how often people are opting out of the use of AI and to confirm that the opt out feature is working as expected.

Performance Metrics. Collect and assess system performance data against the established performance metrics. Adjust performance targets as needed based on pilot data.

Prohibited Uses. Communicate prohibited uses for the AI solution (e.g., denial of benefits using AI) to all pilot participants.

Sensitive Data. Communicate policies on the types of data that can and cannot be ingested into the AI model (e.g., social security numbers) to all pilot participants.

Sharing and Releasing AI Code and Models. If the AI system includes custom developed code, including models and weights in active use, ensure that the code has been shared with the ACF AI Team via AI@ACF.HHS.gov so that it can be appropriately released in a public repository.

Transparency. Notify directly affected individuals about the use of AI (or ensure that they will be notified prior to sharing their data).

Scaled Deployment Checklist

AI Project Inventory. Update your project's record in the ACF AI Project Inventory with any major changes since the pilot phase.

Authority to Operate (ATO). If not completed during the pilot (e.g., because the pilot used only public data), complete an ATO for the AI system, including cybersecurity and privacy assessments. Ensure the ATO is kept up to date as the AI system changes.

Bias. Continuously check system outputs for unintended bias using established measures of bias. Learn more about bias identification.

Data Sources and Quality. Scale secure connections to data sources, including adding connections to data sources not included in the pilot, if necessary.

Disclosure. Continue identifying any AI-generated information as AI-generated (e.g., using a watermark or footnote).

Fairness. Continue monitoring for places where AI may impact fairness, and regularly mitigate disparities that lead to unlawful discrimination and harmful bias. If disparities cannot be mitigated, discontinue the use of the AI for agency decision making.

Monitoring and Oversight. Continue assessing monitoring logs (e.g., log review) to ensure system users are not using the solution for prohibited uses.

Opt Out. Continue assessing the frequency of opt out to understand how often people are opting out of the use of AI and to confirm that the opt out feature is working as expected at scale. If the opt out frequency differs from that observed in the pilot phase, explore what is driving the variation and explain the implications.

Performance Metrics. Collect and assess system performance data against the established performance metrics. Include an off-switch or other process for discontinuing the use of the system if it is not performing as intended.

Prohibited Uses. Communicate prohibited uses for the AI solution (e.g., denial of benefits using AI) to all users of the system.

Sensitive Data. Communicate policies on the types of data that can and cannot be ingested into the AI model (e.g., social security numbers) to all users of the system.

Sharing and Releasing AI Code and Models. If the model includes custom developed code, including models and weights in active use, ensure that the code has been shared with the ACF AI Team via AI@ACF.HHS.gov so that it can be appropriately released in a public repository.

Transparency. Notify people directly affected about the use of AI, including any additional individuals who were not impacted during the pilot (e.g., if new use cases are being added during scaled deployment).
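The Opt Out comparison between pilot and scaled deployment could be sketched as a two-proportion z-test. This is a minimal illustration, not toolkit guidance: the function name `opt_out_rate_change`, the counts, and the conventional 1.96 cutoff are all assumptions.

```python
import math

def opt_out_rate_change(pilot_opt_outs, pilot_users, scaled_opt_outs, scaled_users):
    """Return (pilot_rate, scaled_rate, z) for a two-proportion z-test.

    |z| above roughly 1.96 is a common (assumed) signal that the rates
    differ by more than chance and the cause is worth exploring.
    """
    p1 = pilot_opt_outs / pilot_users
    p2 = scaled_opt_outs / scaled_users
    # Pooled rate under the assumption that both phases share one true rate.
    pooled = (pilot_opt_outs + scaled_opt_outs) / (pilot_users + scaled_users)
    se = math.sqrt(pooled * (1 - pooled) * (1 / pilot_users + 1 / scaled_users))
    z = (p2 - p1) / se if se > 0 else 0.0
    return p1, p2, z

# Hypothetical counts, for illustration only.
pilot_rate, scaled_rate, z = opt_out_rate_change(12, 200, 450, 5000)
needs_review = abs(z) > 1.96
```

A statistical flag only says that the rates differ; explaining why (new user groups, interface changes, a broken opt out feature) still requires reviewing the deployment itself.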

Ongoing Monitoring Checklist

AI Project Inventory. Update your project's record in the ACF AI Project Inventory with any major changes, such as if the AI-enabled system is sunset.

Bias. Perform regular checks of system bias, including data analysis and outcome evaluation. Create and implement plans for addressing bias, if found. Learn more about bias identification.

Data Sources and Quality. Continuously monitor data quality (e.g., accuracy, completeness, relevance), especially for new data.

Data Set Size. Routinely validate the volume of training data to ensure it is of sufficient size and has balanced representation across groups. Learn more about dataset sizes.

Fairness. If new disparities arise that lead to unlawful discrimination or harmful bias, mitigate these disparities. If these disparities cannot be mitigated, discontinue use of the AI system.

Performance Metrics. Conduct ongoing assessments of system performance against established metrics to inform the ongoing use of the AI-enabled system (including decisions to renew licenses for the system, update use cases, etc.).

Monitoring and Oversight. System owners consistently monitor AI use to ensure that it is consistent with the system's intended purpose and that system users are not using the tool for prohibited uses.

Sensitive Data. Make policies on the types of data that can and cannot be ingested available and accessible to all users of the system (e.g., in system documentation or within the tool itself).
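Two of the recurring checks above, data completeness and balanced representation across groups, can be approximated with a few lines of code. The sketch below assumes records arrive as dictionaries; the field names and sample records are hypothetical.

```python
from collections import Counter

def completeness(records, required_fields):
    """Share of records with a non-empty value for each required field."""
    if not records:
        raise ValueError("no records to check")
    return {
        field: sum(1 for r in records if r.get(field) not in (None, "")) / len(records)
        for field in required_fields
    }

def group_shares(records, group_field):
    """Share of records falling in each value of group_field."""
    counts = Counter(r.get(group_field) for r in records)
    total = sum(counts.values())
    return {group: n / total for group, n in counts.items()}

# Hypothetical records, for illustration only.
records = [
    {"age": 34, "region": "urban"},
    {"age": None, "region": "rural"},
    {"age": 51, "region": "urban"},
    {"age": 29, "region": ""},
]
field_completeness = completeness(records, ["age", "region"])
region_shares = group_shares(records, "region")
```

Running the same checks on each new batch of data and comparing against the design-phase baseline gives a simple, repeatable monitoring signal.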

Appendix A. Definitions and Concepts

Artificial Intelligence (AI) [1]: The term "artificial intelligence" has the meaning provided in Section 238(g) of the John S. McCain National Defense Authorization Act for Fiscal Year 2019 (10 U.S.C. note prec. 4061; Public Law 115-232), which states that "the term 'artificial intelligence' includes the following":
1. Any artificial system that performs tasks under varying and unpredictable circumstances without significant human oversight, or that can learn from experience and improve performance when exposed to data sets.
2. An artificial system developed in computer software, physical hardware, or other context that solves tasks requiring human-like perception, cognition, planning, learning, communication, or physical action.
3. An artificial system designed to think or act like a human, including cognitive architectures and neural networks.
4. A set of techniques, including machine learning, that is designed to approximate a cognitive task.
5. An artificial system designed to act rationally, including an intelligent software agent or embodied robot that achieves goals using perception, planning, reasoning, learning, communicating, decision making, and acting.

Generative Artificial Intelligence (AI) [2]: The class of AI models that emulate the structure and characteristics of input data in order to generate derived synthetic content. This can include images, videos, audio, text, and other digital content.

AI Model [2]: A component of an information system that implements AI technology and uses computational, statistical, or machine-learning techniques to produce outputs from a given set of inputs.

Data Drift: Changes in the distribution of underlying training or target data that lead to poor model performance.

AI Hallucinations: A limitation of generative AI in which the model may produce incorrect or misleading results. Hallucinations can be caused by insufficient training data, inaccurate assumptions, and other factors.

Rights-Impacting AI [1]: The term "rights-impacting AI" refers to AI whose output serves as a principal basis for a decision or action concerning a specific individual or entity that has a legal, material, binding, or similarly significant effect on that individual's or entity's:
1. Civil rights, civil liberties, or privacy, including but not limited to freedom of speech, voting, human autonomy, and protections from discrimination, excessive punishment, and unlawful surveillance;
2. Equal opportunities, including equitable access to education, housing, insurance, credit, employment, and other programs where civil rights and equal opportunity protections apply; or
3. Access to or the ability to apply for critical government resources or services, including healthcare, financial services, public housing, social services, transportation, and essential goods and services.

[1] Source: OMB Memo M-24-10, Advancing Governance, Innovation, and Risk Management for Agency Use of Artificial Intelligence
[2] Source: Executive Order 14110, Safe, Secure, and Trustworthy Development and Use of Artificial Intelligence
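Data drift, as defined in this appendix, can be quantified by comparing how values fall into bins in the training data versus recent data. The sketch below uses the Population Stability Index, a common drift measure that the toolkit does not itself prescribe; the function names, bin edges, and sample values are hypothetical.

```python
import math

def bin_shares(values, edges, floor=1e-6):
    """Share of values in each bin defined by ascending edges.

    Empty bins are floored at a small value so the logarithm below is defined.
    """
    counts = [0] * (len(edges) + 1)
    for v in values:
        counts[sum(1 for e in edges if v >= e)] += 1
    return [max(c / len(values), floor) for c in counts]

def population_stability_index(baseline, recent, edges):
    """Sum over bins of (recent - baseline) * ln(recent / baseline)."""
    b = bin_shares(baseline, edges)
    r = bin_shares(recent, edges)
    return sum((ri - bi) * math.log(ri / bi) for bi, ri in zip(b, r))

# Hypothetical feature values, for illustration only.
baseline = [1, 2, 3, 4, 5, 6, 7, 8]
recent = [5, 6, 7, 8, 9, 10, 11, 12]
edges = [3, 6, 9]
psi_same = population_stability_index(baseline, baseline, edges)
psi_shifted = population_stability_index(baseline, recent, edges)
```

Identical distributions give an index of zero, and larger values indicate a bigger shift. Any alert threshold is a team decision rather than something this sketch defines.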

Appendix A. Definitions and Concepts, cont.

Safety-Impacting AI [1]: The term "safety-impacting AI" refers to AI whose output produces an action or serves as a principal basis for a decision that has the potential to significantly impact the safety of:
1. Human life or well-being, including loss of life, serious injury, bodily harm, biological or chemical harms, occupational hazards, harassment or abuse, or mental health, including both individual and community aspects of these harms;
2. Climate or environment, including irreversible or significant environmental damage;
3. Critical infrastructure, including the critical infrastructure sectors defined in Presidential Policy Directive 21 or any successor directive and the infrastructure for voting and protecting the integrity of elections; or
4. Strategic assets or resources, including high-value property and information marked as sensitive or classified by the Federal Government.

Risks from the use of AI [1]: Risks related to efficacy, safety, fairness, transparency, accountability, appropriateness, or lawfulness of a decision or action resulting from the use of AI to inform, influence, decide, or execute that decision or action. This includes such risks regardless of whether:
1. the AI merely informs the decision or action, partially automates it, or fully automates it;
2. there is or is not human oversight for the decision or action;
3. it is or is not easily apparent that a decision or action took place, such as when an AI application performs a background task or silently declines to take an action;
4. the humans involved in making the decision or action or that are affected by it are or are not aware of how or to what extent the AI influenced or automated the decision or action.

While the particular forms of these risks continue to evolve, at least the following factors can create, contribute to, or exacerbate these risks:
1. AI outputs that are inaccurate or misleading;
2. AI outputs that are unreliable, ineffective, or not robust;
3. AI outputs that are discriminatory or have a discriminatory effect;
4. AI outputs that contribute to actions or decisions resulting in harmful or unsafe outcomes, including AI outputs that lower the barrier for people to take intentional and harmful actions;
5. AI being used for tasks to which it is poorly suited or being inappropriately repurposed in a context for which it was not intended;
6. AI being used in a context in which affected people have a reasonable expectation that a human is or should be primarily responsible for a decision or action;
7. the adversarial evasion or manipulation of AI, such as an entity purposefully inducing AI to misclassify an input.

[1] Source: OMB Memo M-24-10, Advancing Governance, Innovation, and Risk Management for Agency Use of Artificial Intelligence
[2] Source: Executive Order 14110, Safe, Secure, and Trustworthy Development and Use of Artificial Intelligence

Appendix B. Bias overview

A predictive or generative AI model may be biased due to its training data or algorithm(s). Bias is prejudice in favor of or against one thing, person, or group compared with another, usually in a way considered to be unfair. Bias may be unknowingly or unintentionally introduced based on historical patterns. Most commercially available models have been fine tuned to reduce bias, but bias often still appears in model outputs.

Sample Tests for Bias

There are multiple simple ways to test a model for bias:

Training data analysis: Review historical training data for patterns of inequality, such as over- or underrepresented groups. For example, if a facial recognition algorithm's training data over-represents people of one race, it may not work well for people of another race.

Algorithm analysis: Examine the model's decision-making process to see if it weighs certain features too heavily. This could be tested by examining intermediary outputs for bias. An intermediary output in a predictive AI model refers to any intermediate data or results generated during the process of making a prediction.

Outcome evaluation: Regularly assess the model's decisions to see if certain clusters or groups of people are consistently disadvantaged.

Stakeholder feedback: Engage with affected groups for insights and provide feedback channels for them to share their experiences.

Evaluation metrics: Compare your model's predictions with the actual or expected outcomes to quantify error, accuracy, precision, recall, or fairness.

Statistical tests and visualization techniques: Use commercially available tools (e.g., Tableau, IBM SPSS Statistics, PowerBI, Stata) to identify disparities in predictions across different demographic groups.

For more rigorous testing of bias, system owners may choose to use the bias review metrics found in the HHS Trustworthy AI Playbook (slide 83).

Sample Questions for Vendors

Below are sample questions that can be asked of vendors throughout design and delivery of an AI solution.

Data and Training: What sources were used to train the AI model? How diverse is the training data in terms of race, gender, income, geography, and other demographics?

Model Development: What methods were used to detect and mitigate bias during the training process? What fairness metrics were used to evaluate the model? What mechanisms can be used to explain model responses in a transparent way?

Testing and Validation: What mechanisms are in place for ongoing monitoring of the model's performance and bias? How does the tool report potential biases or similar issues?

Deployment and Monitoring: What mechanisms are in place for ongoing monitoring of the model's performance and bias? How do users report potential bias or similar issues with the solution?

Compliance and Ethics: How does the solution comply with the Civil Rights Act of 1964, the Americans with Disabilities Act of 1990, and other anti-discrimination laws?
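The outcome evaluation test described in this appendix can be illustrated by comparing selection rates across groups. This is a minimal sketch under stated assumptions: the function names and sample decisions are hypothetical, and the 0.8 cutoff mirrors the widely cited "four-fifths" rule of thumb rather than anything the toolkit prescribes.

```python
from collections import defaultdict

def selection_rates(decisions):
    """decisions: iterable of (group, selected) pairs, where selected is a bool."""
    selected = defaultdict(int)
    total = defaultdict(int)
    for group, was_selected in decisions:
        total[group] += 1
        selected[group] += int(was_selected)
    return {group: selected[group] / total[group] for group in total}

def min_max_rate_ratio(rates):
    """Lowest group rate divided by the highest; 1.0 means equal selection rates."""
    highest = max(rates.values())
    return min(rates.values()) / highest if highest > 0 else 1.0

# Hypothetical model decisions, for illustration only.
decisions = (
    [("A", True)] * 40 + [("A", False)] * 60
    + [("B", True)] * 30 + [("B", False)] * 70
)
rates = selection_rates(decisions)
ratio = min_max_rate_ratio(rates)
flag_for_review = ratio < 0.8  # assumed threshold, not toolkit policy
```

A low ratio is a prompt for further investigation, not proof of unlawful discrimination; the more rigorous metrics referenced above remain the better basis for a formal review.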

Appendix C. AI model performance metrics overview: Predictive Models

Several common metrics and measures are used to assess the overall performance of an AI model. Below are sample generalized metrics for evaluating predictive AI models, as well as sample questions to ask vendors related to the model's performance. Note that the type of metrics used to evaluate model performance depends on the type of predictive model.

Sample Performance Metrics for Predictive AI Models

Classification Models
Accuracy: The proportion of correct predictions to total predictions.
Precision: The proportion of true positive predictions to the total predicted positives.
Recall (Sensitivity): The proportion of true positive predictions to the actual positives, which include true positives and false negatives.
F1 Score: The harmonic mean of precision and recall, useful for imbalanced datasets.
ROC-AUC: The area under the receiver operating characteristic curve, measuring the trade-off between true positive rate and false positive rate.

Regression Models
Mean Absolute Error (MAE): The average of absolute differences between predicted and actual values.
Mean Squared Error (MSE): The average of squared differences between predicted and actual values, penalizing larger errors.
Root Mean Squared Error (RMSE): The square root of MSE, providing error in the same units as the original data.
R-squared (Coefficient of Determination): Indicates the proportion of variance in the dependent variable that is predictable from the independent variables.

Clustering
Silhouette Score: Measures how similar an object is to its own cluster compared to other clusters.
Davies-Bouldin Index: A ratio of within-cluster to between-cluster distances, where lower values indicate better clustering.
Adjusted Rand Index: Measures the similarity between two data clusterings, adjusting for chance.

Sample Questions for Vendors

Model Performance: What is the accuracy of the predictive AI model? What are the precision and recall metrics for the model? What is the F1 score of the model? What is the ROC-AUC score of the model?

Model Robustness and Generalization: Was cross-validation used to evaluate the model's performance? How did you address overfitting [1] and underfitting during model development? How does the model perform across different demographic subgroups (especially race, gender, and geography)?

Model Evaluation and Testing: What test data was used to evaluate the model's performance? Has the model been stress-tested under various conditions?

Model Deployment and Monitoring: How does the model perform in real-time or near-real-time scenarios? How scalable is the model? What mechanisms are in place for monitoring the model's performance post-deployment?

In addition to the generalized performance metrics above, the AI system should be evaluated against the success criteria established in the Proposal for Integrating AI.

[1] Overfitting is a common problem in predictive AI models where the model learns the training data too well, including its noise and outliers, to the extent that it performs poorly on new, unseen data.
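The classification and regression metrics defined in this appendix follow directly from their definitions. The sketch below computes them with the standard library only; the function names and sample labels and values are hypothetical, and a production system would more likely rely on an established metrics library.

```python
import math

def classification_metrics(y_true, y_pred):
    """Accuracy, precision, recall, and F1 for binary labels (1 = positive)."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if t == 1 and p == 1)
    tn = sum(1 for t, p in pairs if t == 0 and p == 0)
    fp = sum(1 for t, p in pairs if t == 0 and p == 1)
    fn = sum(1 for t, p in pairs if t == 1 and p == 0)
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return {"accuracy": (tp + tn) / len(pairs), "precision": precision,
            "recall": recall, "f1": f1}

def regression_metrics(actual, predicted):
    """MAE, MSE, RMSE, and R-squared for paired numeric values."""
    n = len(actual)
    errors = [p - a for a, p in zip(actual, predicted)]
    mse = sum(e * e for e in errors) / n
    mean_actual = sum(actual) / n
    ss_tot = sum((a - mean_actual) ** 2 for a in actual)
    return {"mae": sum(abs(e) for e in errors) / n, "mse": mse,
            "rmse": math.sqrt(mse),
            "r2": 1 - (mse * n) / ss_tot if ss_tot else 0.0}

# Hypothetical labels and values, for illustration only.
cls = classification_metrics([1, 0, 1, 1, 0, 0, 1, 0], [1, 0, 0, 1, 0, 1, 1, 0])
reg = regression_metrics([3.0, 5.0, 7.0, 9.0], [2.5, 5.0, 8.0, 9.5])
```

Computing the same metrics separately for each demographic subgroup is one way to answer the vendor question above about subgroup performance.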

Appendix C. AI model performance metrics overview: Generative AI Models

Several common metrics and measures are used to assess the overall performance of an AI model. Below are sample generalized metrics for evaluating generative AI models, as well as sample questions to ask vendors related to the model's performance.

Sample Performance Metrics for Generative AI Models

Quantitative Metrics
Inception Score (IS): Evaluates the quality of generated images based on how confidently a pretrained image classifier recognizes them and how diverse the generated outputs are. Higher values indicate better performance.
Fréchet Inception Distance (FID): Compares feature distributions of generated samples and real samples, providing a sense of how similar the generated images are to real images. Lower values indicate better performance.
Perplexity: Commonly used in language models, it measures how well a probability distribution predicts a sample, where lower values indicate better performance.
BLEU Score: Used for evaluating text generation, this metric compares generated text to reference text.
ROUGE Score: Measures the overlap of generated text and reference text, useful for summarization tasks.
Mean Opinion Score (MOS): A subjective measure where human evaluators rate the quality of generated samples, typically on a scale (e.g., 1 to 5).

Qualitative Assessments
Human Evaluation: Human judges assess the quality, relevance, and creativity of the generated outputs, providing insights that metrics alone may miss.
Diversity Metrics: Assessing how varied the generated outputs are, such as measuring the number of unique samples or the range of topics covered.
Adversarial Testing: Using adversarial examples to see how well the generative model can withstand or respond to challenges, providing insights into robustness.

Sample Questions for Vendors

Model Performance: How do you measure the quality of the content generated by the AI tool? How consistent and coherent is the generated content over longer outputs? How does the model balance creativity and diversity in its outputs? What are the error rates for the model in generating content?

Model Evaluation and Testing: How has the generated content been evaluated by human reviewers? Has the model been stress-tested under various conditions? How explainable are the model's outputs?

Model Deployment and Monitoring: How does the model perform in real-time or near-real-time scenarios? How scalable is the model? What mechanisms are in place for monitoring the model's performance post-deployment?

Compliance and Ethics: What ethical guidelines were followed during the development of the AI tool? How does the AI tool comply with relevant regulations and standards for fairness and non-discrimination?

In addition to the generalized performance metrics above, the AI system should be evaluated against the success criteria established in the Proposal for Integrating AI.
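Two of the measures above are simple enough to sketch directly: perplexity, computed from the probabilities a language model assigned to the tokens it was asked to predict, and a basic diversity metric that counts unique word n-grams. The function names and inputs are hypothetical; the "distinct n-gram" ratio is one common way to measure diversity and is an assumption here, not a metric the toolkit names.

```python
import math

def perplexity(token_probs):
    """exp of the mean negative log-probability assigned to each observed token."""
    mean_nll = -sum(math.log(p) for p in token_probs) / len(token_probs)
    return math.exp(mean_nll)

def distinct_n(texts, n=2):
    """Share of word n-grams across all outputs that are unique (0 to 1)."""
    ngrams = []
    for text in texts:
        tokens = text.lower().split()
        ngrams.extend(tuple(tokens[i:i + n]) for i in range(len(tokens) - n + 1))
    return len(set(ngrams)) / len(ngrams) if ngrams else 0.0

# Hypothetical inputs, for illustration only.
ppl = perplexity([0.25, 0.25, 0.25, 0.25])
diversity = distinct_n(["the cat sat", "the cat ran"], n=2)
```

A model that assigns probability 0.25 to every token is as uncertain as a uniform choice among four options, which is why the perplexity in this example is about 4.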

Appendix D. Training Data Size for Predictive AI Models

There is no magic amount of training data that is considered universally sufficient for an AI model. Larger data sets tend to produce more accurate and useful outputs, with better outputs resulting from more and higher quality training data. Below are some considerations to weigh when determining whether the training data set for your predictive AI model is large enough.

Training Data Size Considerations for Predictive AI Models

Determining if a dataset is large enough for a predictive AI model can involve several considerations:

Model Complexity: More complex models (like deep learning) typically require larger datasets to perform well, while simpler models (like linear regression) may suffice with smaller datasets.

Feature Count: The number of variables in your dataset influences the required size. A rule of thumb is to have at least 10 times as many samples as variables.

Task Type: Different tasks (classification, regression, etc.) have different data requirements. Classification tasks, especially with many classes, usually need more data.

Data Variability: High variability in the data (e.g., many different classes or a wide range of values) often requires more samples to ensure the model generalizes well.

Overfitting [1] Risk: If the model performs well on training data but poorly on validation/testing data, this might indicate that the dataset is too small or not representative.

Cross-Validation: Using techniques like k-fold cross-validation can help evaluate model performance and indicate whether more data might improve results.

Performance Metrics: Monitor key metrics (accuracy, precision, recall, etc.) to assess whether the model's performance plateaus, which might suggest you have enough data.

Learning Curves: Plot learning curves to visualize how performance improves with more data. If the curve flattens, you may have sufficient data.

Simulations or Synthetic Data [2]: In some cases, simulating data or augmenting existing datasets can help test whether more data would improve performance.

Sample Questions for Vendors

How much data was used to train the AI model? What is the level of granularity of the training data? How diverse is the training data in terms of demographics, geography, and other factors? How representative is the training data of the real-world scenarios where the model will be deployed? What are the sources of the training data? Were any data augmentation techniques used to increase the size of the training dataset? Was any synthetic data [2] used in the training process?

[1] Overfitting is a common problem in predictive AI models where the model learns the training data too well, including its noise and outliers, to the extent that it performs poorly on new, unseen data.
[2] Synthetic data is artificially generated data that mimics the characteristics and statistical properties of real-world data. It is created using algorithms and models rather than being collected from actual events or observations. Synthetic data can be used for a variety of purposes, including training machine learning models, testing software, and conducting simulations.
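Two of the considerations above lend themselves to quick checks: the 10-samples-per-variable rule of thumb and the learning-curve plateau. The sketch below is illustrative only; the function names, the `min_gain` threshold, and the sample scores are assumptions.

```python
def meets_samples_per_feature_rule(n_samples, n_features, ratio=10):
    """True if there are at least `ratio` samples per variable (rule of thumb)."""
    return n_samples >= ratio * n_features

def has_plateaued(scores, min_gain=0.01):
    """scores: validation scores measured at increasing training-set sizes.

    Returns True when the most recent increase in data improved the score
    by less than min_gain, suggesting the learning curve has flattened.
    """
    if len(scores) < 2:
        return False
    return (scores[-1] - scores[-2]) < min_gain

# Hypothetical numbers, for illustration only.
enough_rows = meets_samples_per_feature_rule(n_samples=500, n_features=40)
too_few_rows = meets_samples_per_feature_rule(n_samples=300, n_features=40)
flat_curve = has_plateaued([0.70, 0.78, 0.82, 0.825])
still_improving = has_plateaued([0.70, 0.78])
```

Neither check is conclusive on its own. A dataset can pass the rule of thumb and still be unrepresentative, so these signals are best read alongside the diversity and representativeness questions above.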

Appendix D. Training Data Size for Generative AI Models

There is no magic amount of training data that is considered universally sufficient for an AI model. Larger data sets tend to produce more accurate and useful outputs, with better outputs resulting from more and higher quality training data. Below are some considerations to weigh when determining whether your training data set is large enough.

Training Data Size Considerations for Generative AI Models

Generative AI models typically consist of foundational models (e.g., GPT-4) that have been fine tuned to a specific purpose. When acquiring a genAI solution, you should seek to understand more about the size of the data used to train the model, as well as the size of the data used to fine tune the model. Determining if a dataset is large enough for a generative AI model can involve several key factors:

Model Architecture: The complexity of the generative model (e.g., GANs, VAEs, diffusion models) influences data needs. More complex architectures generally require more data to learn effectively.

Data Diversity: Generative models benefit from diverse data to capture various patterns. If your dataset lacks diversity (e.g., similar images), it may not be sufficient, regardless of size.

Dimensionality: High-dimensional data typically requires larger datasets to avoid overfitting. The complexity of the data space means more examples are needed to learn effectively.

Quality of Data: The quality of the data matters. Noisy or low-quality data can hinder training, necessitating a larger dataset to mitigate these issues.

Training Stability: Monitor stability during model training. If the model is unstable or doesn't converge, it might indicate insufficient data.

Evaluation Metrics: Use metrics like Inception Score (IS) or Fréchet Inception Distance (FID) to evaluate the generative quality. Consistently poor scores (a low IS or a high FID) may suggest more data is needed.

Learning Curves: Analyze learning curves to see how performance improves with additional data. A plateau may indicate that you have reached a sufficient dataset size.

Sample Questions for Vendors

Foundational Model: Is there a model card [1] available? How much data was used to train the generative AI model? How diverse is the training data in terms of content, style, and context? What are the sources of the training data? Were any data augmentation techniques used to increase the size of the training dataset? Was any synthetic data used in the training process?

Model Fine Tuning or RAG: How much data was used to fine tune the generative AI model? How diverse is the training data in terms of content, style, and context? What are the sources of the training data?

[1] A model card is a standardized documentation format that provides detailed information about a generative AI model.