Achieving Low-Latency Serializability with Transaction Chains

Explore the concept of transaction chains in geo-distributed storage systems to achieve low-latency and serializable transactions. Understand the challenges of geo-distribution and how transaction chains offer a solution for efficient data management across multiple data centers.

Download Presentation

Please find below an Image/Link to download the presentation.

The content on the website is provided AS IS for your information and personal use only. It may not be sold, licensed, or shared on other websites without obtaining consent from the author. If you encounter any issues during the download, it is possible that the publisher has removed the file from their server.

You are allowed to download the files provided on this website for personal or commercial use, subject to the condition that they are used lawfully. All files are the property of their respective owners.

The content on the website is provided AS IS for your information and personal use only. It may not be sold, licensed, or shared on other websites without obtaining consent from the author.

E N D

Presentation Transcript

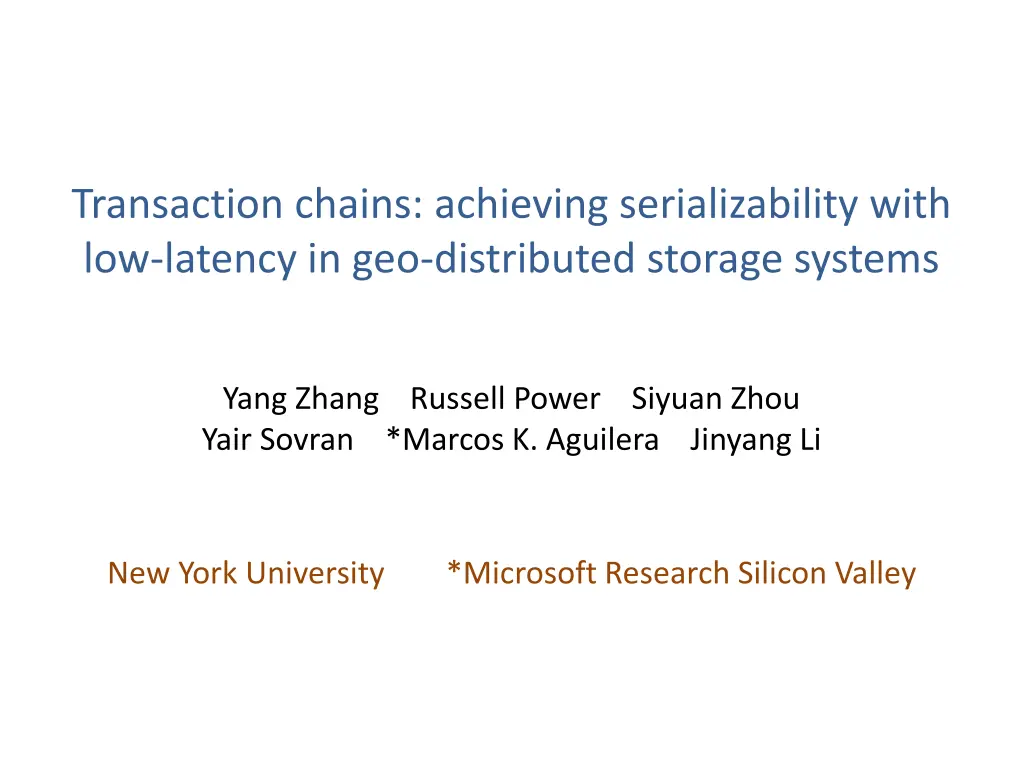

Transaction chains: achieving serializability with low-latency in geo-distributed storage systems Yang Zhang Russell Power Siyuan Zhou Yair Sovran *Marcos K. Aguilera Jinyang Li New York University *Microsoft Research Silicon Valley

Why geo-distributed storage? Large-scale Web applications Geo-distributed storage Replication

Geo-distribution is hard Strong semantics: relational tables w/ transactions Low latency: O(Intra-datacenter RTT)

Prior work Strict serializable High latency Spanner [OSDI 12] Serializable Provably high latency according to CAP Our work ? Various non-serializable Walter [SOSP 11] COPS [SOSP 11] Eiger [NSDI 13] Low latency Dynamo [SOSP 07] Eventual General transaction Key/value only Limited forms of transaction

Our contributions 1. A new primitive: transaction chain Allow for low latency, serializable transactions 1. Lynx geo-storage system: built with chains Relational tables Secondary indices, materialized join views

Talk Outline Motivation Transaction chains Lynx Evaluation

Why transaction chains? Auction service Items Bids Seller Item Highest bid Bidder Alice Item Book Price $100 Alice iPhone $20 Bob Camera $100 Bob Book $20 Bob Alice Datacenter-1 Datacenter-2

Why transaction chains? Operation: Alice bids on Bob s camera 1. Insert bid to Alice s Bids 1. Insert bid to Alice s Bids 2. Update highest bid on Bob s Items Alice s Bids Alice Book $100 Bob Bob s Items Alice Bob Camera $100 Datacenter-1 Datacenter-2

Why transaction chains? Operation: Alice bids on Bob s camera 1. Insert bid to Alice s Bids 2. Update highest bid on Bob s Items Alice s Bids Alice Book $100 Bob Bob s Items Alice Bob Camera $100 Datacenter-1 Datacenter-2

Low latency with first-hop return Alice bid on Bob s camera Alice s Bids Alice Book $100 Bob Alice Camera $500 Bob s Items Bob Camera $100 $500 Datacenter-1 Datacenter-2

Problem: what if chains fail? 1. What if servers fail after executing first-hop? 2. What if a chain is aborted in the middle?

Solution: provide all-or-nothing atomicity 1. Chains are durably logged at first-hop Logs are replicated to another closest data center Chains are re-executed upon recovery 2. Chains allow user-aborts only at first hop Guarantee: First hop commits all hops eventually commit

Problem: non-serializable interleaving Concurrent chains ordered inconsistently at different hops Not serializable! T1 Server-Y: T2 < T1 Server-X: T1 < T2 X=1 Y=1 T2 T2 T1 X=2 Y=2 Time Traditional 2PL+2PC prevents non-serializable interleaving at the cost of high latency

Solution: detect non-serializable interleaving via static analysis Statically analyze all chains to be executed Web applications invoke fixed set of operations T1 X=1 Y=1 Conflict? T2 A SC-cycle has both red and blue edges X=2 Y=2 Serializable if no SC-cycle [Shasha et. al TODS 95]

Outline Motivation Transaction chains Lynx s design Evaluation

How Lynx uses chains User chains: used by programmers to implement application logic System chains: used internally to maintain Secondary indexes Materialized join views Geo-replicas

Example: secondary index Bids (secondary index) Bids (base table) Bidder Item Price Bidder Item Price Alice Camera $100 Alice Camera $100 Bob iPhone $20 Bob Car $20 Alice Book $20 Bob Car $20 Bob Camera $100 Alice iPhone $100

Example user and system chain Alice bid on Bob s camera Alice Book $100 Alice Camera $100 Bob Bob Camera $100 Datacenter-1 Datacenter-2

Lynx statically analyzes all chains beforehand Read Bids table Read-bids Update Items table Insert to Bids table Put-bid Insert to Bids table Update Items table Put-bid SC-cycle One solution: execute chain as a distributed transaction Read Bids table Read-bids

SC-cycle source #1: false conflicts in user chains Update Items table Insert to Bids table Put-bid False conflict because max(bid, current_price) commutes Update Items table Insert to Bids table Put-bid

Solution: users annotate commutativity Update Items table Insert to Bids table Put-bid commutes Update Items table Insert to Bids table Put-bid

SC-cycle source #2: system chains Insert to Bids-secondary Insert to Bids table Put-bid Insert to Bids table Insert to Bids-secondary Put-bid SC-cycle

Solution: chains provide origin-ordering Observation: conflicting system chains originate at the same first hop server. T1 Insert to Bids-secondary Insert to Bids table Both write the same row of Bids table T2 Insert to Bids table Insert to Bids-secondary Origin-ordering: if chains T1 < T2 at same first hop, then T1 < T2 at all subsequent overlapping hops. Can be implemented cheaply sequence number vectors

Limitations of Lynx/chains 1. Chains are not strictly serializable, only serializable. 2. Programmers can abort only at first hop Our application experience: limitations are managable

Outline Motivation Transaction chains Lynx s design Evaluation

Simple Twitter Clone on Lynx Tweets Geo-replicated Author Tweet Tweets JOIN Follow-Graph (Timeline) Alice New York rocks Bob Time to sleep Author (=to) From Tweet Eve Hi there Bob Alice Time to sleep Eve Alice Hi there Follow-Graph Geo-replicated Follow-Graph (secondary) From To To From Alice Bob Bob Alice Alice Eve Bob Clark

Experimental setup europe Lynx protoype: In-memory database Local disk logging only. us-east us-west

Returning on first-hop allows low latency Chain completion 300 252 250 Latency (ms) 200 174 150 100 First hop return 50 3.2 3.1 3.1 0 Follow-user Post-tweet Follow-user Post-tweet Read-timeline

Applications achieve good throughput 1.6 1.35 1.4 1.2 Million ops/sec 1 0.8 0.6 0.4 0.184 0.173 0.2 0 Follow-User Post-Tweet Read-Timeline

Related work Transaction decomposition SAGAS [SIGMOD 96], step-decomposed transactions Incremental view maintenance Views for PNUTS [SIGMOD 09] Various geo-distributed/replicated storage Spanner[OSDI 12], MDCC[Eurosys 13], Megastore[CIDR 11], COPS [SOSP 11], Eiger[NSDI 13], RedBlue[OSDI 12].

Conclusion Chains support serializability at low latency With static analysis of SC-cycles Key techniques to reduce SC-cycles Origin ordering Commutative annotation Chains are useful Performing application logic Maintaining indices/join views/geo-replicas

Limitations of Lynx/chains 1. Chains are not strict serializable Time Serializable Strict serializable Remedies: Programmers can wait for chain completion Lynx provides read-your-own-writes 2. Programmers can only abort at first hop Our application experience shows the limitations are managable

2PC and chains The easy way R(A) T1 T1 R(A) T2 W(A) W(B) T2 2PC-W(AB) T2 W(A) W(B) R(A) T1 R(A) T1

2PC and chains The hard way R(A) R(B) T1 T1 R(A) R(B) T2 W(A) W(B) T2 2PC-W(AB) T2 W(A) W(B) T1 R(A) R(B) R(A) R(B) T1

2PC and chains The hard way B Chain A C D DC2 DC4 DC1 DC3 Parallel unlock retry 2PC

Lynx is scalable 3000 2770 2500 2000 QPS (K/s) Follow 1500 1350 Tweet 1000 Timeline 586 500 374 356 265 184 173 93 86 48 42 0 1 2 4 8 #Servers per DC

Challenge of static analysis: false conflict T1 1. Insert bid into bid history 2. Update max price on item Conflict on bid history Conflict on item T2 1. Insert bid into bid history 2. Update max price on item SC-cycle Not serializable

Solution: communitivity annotations T1 1. Insert bid into bid history 2. Update max price on item Conflict on bid history operation Commutative operation item Conflict on Commutative No real conflict because bid ids are unique Updating max commutes T2 1. Insert bid into bid history 2. Update max price on item No SC-cycle Serializable

ACID: all-or-nothing atomicity Chain s failure guarantee: If the first hop of a chain commits, then all hops eventually commit Users are only allowed to abort a chain in the first hop Achievable with low latency: Log chains durably at the first hop Logs replicated to a nearby datacenter Re-execute stalled chains upon failure recovery

ACID: serializability Serializability Execution result appears as if obey a serial order for all transactions No restrictions on the serial order Transactions Ordering 1 Ordering 2

Problem #2: unsafe interleaving Serializability Execution result appears as if obey a serial order for all transactions No restrictions on the serial order Transactions Ordering 1 Ordering 2

Chains are not linearizable Serializability Linearability a total ordering of chains a total ordering of chains & total order obeys the issue order Transactions Time Ordering 1 Ordering 2 Linearizable

Transaction chains: recap Chains provide all-or-nothing atomicity Chains ensure serializability via static analysis Practical challenges: How to use chains? How to avoid SC-cycles?

Example user chain Items Bids Seller Item Highest Bidder Item Price Bob Bob Camera Camera 100 Alice Camera 100 Alice Bob 2. Update max price on Bob s camera 1. Insert bid into Alice s bid history

Lynx implementation 5000 lines C++ and 3500 lines RPC library Uses an in-memory key/value store Support user chains in Javascript (via V8)

Geo-distributed storage is hard Applications demand simplicity & performance Friendly programming model Relational tables Transactions Fast response Ideally, operation latency = O(intra-datacenter RTT) Geo-distribution leads to high latency Coordinate data access across datacenters Operation latency = O(inter-datacenter RTT) = O(100ms)