Advanced Compiler Optimization Techniques - ECE.454 Programming Course Recap

"Explore advanced compiler optimization techniques discussed in ECE.454 Computer Systems Programming course by Ding Yuan at the University of Toronto, covering manual optimizations, parallel unrolling, and performance metrics. Learn about the impact of compiler optimization on code efficiency and understand optimization blockers. Discover how compiler optimizations like scheduling and register allocation can significantly improve code performance."

Download Presentation

Please find below an Image/Link to download the presentation.

The content on the website is provided AS IS for your information and personal use only. It may not be sold, licensed, or shared on other websites without obtaining consent from the author. If you encounter any issues during the download, it is possible that the publisher has removed the file from their server.

You are allowed to download the files provided on this website for personal or commercial use, subject to the condition that they are used lawfully. All files are the property of their respective owners.

The content on the website is provided AS IS for your information and personal use only. It may not be sold, licensed, or shared on other websites without obtaining consent from the author.

E N D

Presentation Transcript

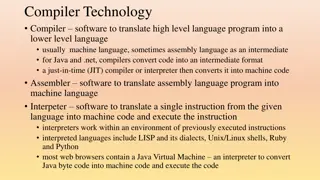

ECE 454 Computer Systems Programming Compiler and Optimization (II) Ding Yuan ECE Dept., University of Toronto http://www.eecg.toronto.edu/~yuan

Content Compiler Basics (last lec.) Understanding Compiler Optimization (last lec.) Manual Optimization Advanced Optimizations Parallel Unrolling Profile-Directed Feedback 2 Ding Yuan, ECE454 2025-05-27

Manual Optimization Example: Vector Sum Function 3 Ding Yuan, ECE454 2025-05-27

Vector Procedures vec_ptr: 0 1 2 length 1 length data Procedures: vec_ptr new_vec(int len) Create vector of specified length int get_vec_element(vec_ptr v, int index, int *dest) Retrieve vector element, store at *dest Return 0 if out of bounds, 1 if successful int *get_vec_start(vec_ptr v) Return pointer to start of vector data 4 Ding Yuan, ECE454 2025-05-27

Vector Sum Function: Original Code void vsum(vec_ptr v, int *dest) { int i; *dest = 0; for (i=0; i < vec_length(v); i++) { int val; get_vec_element(v, i, &val); *dest += val; } } What it does: Add all the vector elements together Store the resulting sum at destination location *dest Performance Metric: CPU Cycles per Element (CPE) CPE = 42.06 (Compiled -g) 5 Ding Yuan, ECE454 2025-05-27

After Compiler Optimization // same as vsum void vsum1(vec_ptr v, int *dest) { int i; *dest = 0; for (i=0; i<vec_length(v); i++) { int val; get_vec_element(v, i, &val); *dest += val; } } void vsum(vec_ptr v, int *dest) { int i; *dest = 0; for (i=0; i<vec_length(v); i++) { int val; get_vec_element(v, i, &val); *dest += val; } } Impact of compiler optimization vsum: CPE = 42.06 (Compiled -g) vsum1: (a C-code view of the resulting assembly instructions) CPE = 31.25 (Compiled -O2, will compile O2 from now on) Result: better scheduling & reg alloc, lots of missed opportunities 2025-05-27 6 Ding Yuan, ECE454

Optimization Blocker: Procedure Calls Why didn t compiler move vec_len out of the inner loop? Procedure may have side effects Function may not return same value for given arguments Depends on other parts of global state Why doesn t compiler look at code for vec_len? Linker may overload with different version Unless declared static Interprocedural optimization is not used extensively due to cost Warning: Compiler treats procedure call as a black box Weak optimizations in and around them 2025-05-27 7 Ding Yuan, ECE454

Manual LICM void vsum2(vec_ptr v, int *dest) { int i; int length = vec_length(v); *dest = 0; for (i = 0; i < length; i++) { int val; get_vec_element(v, i, &val); *dest += val; } } void vsum1(vec_ptr v, int *dest) { int i; *dest = 0; for (i=0; i<vec_length(v); i++) { int val; get_vec_element(v, i, &val); *dest += val; } } CPE = 20.66 Next Opportunity: Inlining get_vec_element()? compiler may not have thought worth it O2 will prioritize code size increase rather than performance 8 Ding Yuan, ECE454 2025-05-27

Announcement Bonus mark in HW1 I looked at your reports, bonus mark question is not well answered To encourage you to think more (especially after learning the knowledge in this lecture), you still can submit your answer to Q22 before Oct/14, 11:59PM Send an email to me (yuan@eecg.toronto.edu), with the Names, Student numbers of all group members Q17 & Q18 now are 1 mark each (previously 2 marks), and are bonus questions Because we haven t covered them in our lectures by the due date 9 Ding Yuan, ECE454 2025-05-27

Manual Inlining void vsum3i(vec_ptr v, int *dest) { int i; int length = vec_length(v); *dest = 0; for (i = 0; i < length; i++) { int val; int *data = get_vec_start(v); val = data[i]; *dest += val; } } void vsum2(vec_ptr v, int *dest) { int i; int length = vec_length(v); *dest = 0; for (i = 0; i < length; i++) { int val; get_vec_element(v, i, &val); *dest += val; } } Inlining: replace a function call with its body shouldn t normally have to do this manually could also convince the compiler to do it via directives Inlining enables further optimizations 10 Ding Yuan, ECE454 2025-05-27

Further LICM, val unnecessary void vsum3(vec_ptr v, int *dest) { int i; int length = vec_length(v); int *data = get_vec_start(v); *dest = 0; for (i = 0; i < length; i++) { *dest += data[i]; } } void vsum3i(vec_ptr v, int *dest) { int i; int length = vec_length(v); *dest = 0; for (i = 0; i < length; i++) { int val; int *data = get_vec_start(v); val = data[i]; *dest += val; } } CPE = 6.00 Compiler may not always inline when it should It may not know how important a certain loop is It may be blocked by missing function definition 11 Ding Yuan, ECE454 2025-05-27

Reduce Unnecessary Memory Refs void vsum4(vec_ptr v, int *dest) { int i; int length = vec_length(v); int *data = get_vec_start(v); int sum = 0; for (i = 0; i < length; i++) for (i = 0; i < length; i++) { *dest += data[i]; } } void vsum3(vec_ptr v, int *dest) { int i; int length = vec_length(v); int *data = get_vec_start(v); *dest = 0; sum += data[i]; *dest = sum; } Don t need to store in destination until end Can use local variable sum instead It is more likely to be allocated to a register! Avoids 1 memory read, 1 memory write per iteration CPE = 2.00 Difficult for the compiler to promote pointer de-refs to registers 12 Ding Yuan, ECE454 2025-05-27

Why are pointers so hard for compilers? Pointer Aliasing Two different pointers might point to a single location Example: int x = 5, y = 10; int *p = &x; int *q = &y; if (one_in_a_million){ q = p; } // compiler must assume q==p = &x from here on, though unlikely 13 Ding Yuan, ECE454 2025-05-27

Minimizing the impact of Pointers Easy to over-use pointers in C/C++ Since allowed to do address arithmetic Direct access to storage structures Get in habit of introducing local variables Eg., when accumulating within loops Your way of telling compiler not to worry about aliasing 14 Ding Yuan, ECE454 2025-05-27

Understanding Instruction-Level Parallelism: Unrolling and Software Pipelining 15 Ding Yuan, ECE454 2025-05-27

Superscalar CPU Design Instruction Control Address Fetch Control Retirement Unit Instruction Cache Instrs. Register File Instruction Decode Operations Register Updates Prediction OK? Integer/ Branch General Integer FP Add FP Mult/Div Functional Load Store Units Operation Results Addr. Addr. Data Data Data Cache Execution 16 Ding Yuan, ECE454 2025-05-27

Assumed CPU Capabilities Multiple Instructions Can Execute in Parallel 1 load 1 store 2 integer (one may be branch) 1 FP Addition 1 FP Multiplication or Division Some Instructions Take > 1 Cycle, but Can be Pipelined Instruction Latency Load / Store Integer Multiply Double/Single FP Multiply Double/Single FP Add Integer Divide Double/Single FP Divide Cycles/Issue 3 4 5 3 1 1 2 1 36 38 36 38 17 Ding Yuan, ECE454 2025-05-27

Intel Core i7 18 Ding Yuan, ECE454 2025-05-27

Zooming into x86 assembly void vsum4(vec_ptr v, int *dest) { int i; // i => %edx int length = vec_length(v); // length => %esi int *data = get_vec_start(v); // data => %eax int sum = 0; // sum => %ecx for (i = 0; i < length; i++) sum += data[i]; *dest = sum; } .L24: addl (%eax,%edx,4),%ecx # sum += data[i] // data+i*4 incl %edx # i++ cmpl %esi,%edx # compare i and length jl .L24 # if i < length goto L24 # Loop code only: 19 Ding Yuan, ECE454 2025-05-27

Note: addl includes a load! addl (%eax,%edx,4),%ecx %edx(i) load incl %edx.1 3 cycles load %ecx.i +1 cmpl addl cc.1 %ecx.0 (sum) jl t.1 1 cycle addl %ecx.1 20 Ding Yuan, ECE454 2025-05-27

Visualizing the vsum Loop .L24: addl (%eax,%edx,4),%ecx # sum += data[i] // data+i*4 incl %edx # i++ cmpl %esi,%edx # compare i and length jl .L24 # if i < length goto L24 # Loop code only: Operations Vertical position denotes time at which executed Cannot begin operation until operands available Height denotes latency addl is split into a load and addl Eg., X86 converts to micro-ops %edx.0 (i) load incl %edx.1 load %ecx.i +1 cmpl cc.1 %ecx.0 (sum) jl t.1 Time addl %ecx.1 21 Ding Yuan, ECE454 2025-05-27

4 Iterations of vsum %edx.0 %edx.0 incl incl 1 1 %edx.1 %edx.1 load load %ecx.i +1 cmpl %ecx.i +1 cmpl incl incl 2 2 %edx.2 %edx.2 cc.1 cc.1 jl jl load load %ecx.i +1 cmpl %ecx.i +1 cmpl incl incl 3 3 %ecx.0 %edx.3 %edx.3 t.1 t.1 cc.2 cc.2 4 integer ops i=0 i=0 addl %ecx.1 %ecx.1 Iteration 1 addl jl jl load load %ecx.i +1 cmpl %ecx.i +1 cmpl incl incl 4 4 %edx.4 t.2 t.2 cc.3 cc.3 i=1 i=1 addl %ecx.2 %ecx.2 Iteration 2 addl jl jl load load %ecx.i +1 cmpl %ecx.i +1 cmpl 5 5 t.3 t.3 cc.4 cc.4 i=2 i=2 addl %ecx.3 %ecx.3 Iteration 3 addl jl jl 6 6 Cycle Cycle t.4 t.4 i=3 i=3 addl %ecx.4 %ecx.4 Iteration 4 addl 7 7 Unlimited Resource Analysis Assume operation can start as soon as operands available Operations for multiple iterations overlap in time CPE = 1.0 But needs to be able to issue 4 integer ops per cycle Performance on assumed CPU: CPE = 2.0 Number of parallel integer ops (2) is the bottleneck 22 Ding Yuan, ECE454 2025-05-27

Loop Unrolling: vsum void vsum5(vec_ptr v, int *dest) { int length = vec_length(v); int limit = length-2; int *data = get_vec_start(v); int sum = 0; int i; for (i = 0; i < limit; i+=3){ sum += data[i]; sum += data[i+1]; sum += data[i+2]; } for ( ; i < length; i++){ sum += data[i] *dest = sum; } Optimization Combine multiple iterations into single loop body Amortizes loop overhead across multiple iterations Finish extras at end Measured CPE = 1.33 23 Ding Yuan, ECE454 2025-05-27

Visualizing Unrolled Loop: vsum %edx.0 Loads can pipeline, since don t have dependences Only one set of loop control operations addl %edx.1 load %ecx.i +1 cmpl cc.1 load jl %ecx.0c t.1a addl load Time t.1b %ecx.1a addl t.1c %ecx.1b addl %ecx.1c 24 Ding Yuan, ECE454 2025-05-27

Executing with Loop Unrolling: vsum %edx.2 5 5 6 6 addl 7 7 %edx.3 load %ecx.i +1 cmpl 8 8 cc.3 load jl 9 9 %ecx.2c t.3a addl load addl addl 10 10 %edx.4 t.3b %ecx.3a addl load load %ecx.i +1 cmpl %ecx.i +1 cmpl 11 11 t.3c cc.4 cc.4 %ecx.3b i=6 addl load load jl jl 12 12 %ecx.3c t.4a t.4a addl addl load load 13 13 Iteration 3 t.4b t.4b %ecx.4a %ecx.4a addl addl Predicted Performance Can complete iteration in 3 cycles Should give CPE of 1.0 14 14 Cycle Cycle t.4c t.4c %ecx.4b %ecx.4b i=9 addl addl 15 15 %ecx.4c %ecx.4c Iteration 4 25 Ding Yuan, ECE454 2025-05-27

Why 3 times? %edx.0 addl %edx.1 load %ecx.i +1 cmpl %edx.0 cc.1 load jl addl %edx.1 t.1a addl load %ecx.i +1 cmpl %edx.0 t.1b %ecx.1a cc.1 addl load jl addl %edx.1 %ecx.0c t.1a %ecx.1b addl load %ecx.i +1 cmpl t.1b %ecx.1a cc.1 addl load jl %ecx.0c t.1a %ecx.1b addl t.1b Unroll 2 times: what is the problem? %ecx.1a addl %ecx.1b 26 Ding Yuan, ECE454 2025-05-27

Vector multiply function: vprod .L24: imull (%eax,%edx,4),%ecx # prod *= data[i] incl %edx cmpl %esi,%edx jl .L24 # Loop: # i++ # i:length # if < goto Loop %edx.0 incl %edx.1 load cmpl void vprod4(vec_ptr v, int *dest) { int i; int length = vec_length(v); int *data = get_vec_start(v); int prod = 1; for (i = 0; i < length; i++) prod *= data[i]; *dest = prod; } cc.1 jl %ecx.0 t.1 Time imull %ecx.1 27 Ding Yuan, ECE454 2025-05-27

3 Iterations of vprod Unlimited Resource Analysis Assume operation can start as soon as operands available Operations for multiple iterations overlap in time %edx.0 %edx.0 %edx.0 incl incl incl 1 1 1 %edx.1 %edx.1 %edx.1 load load load cmpl cmpl cmpl incl incl incl 2 2 2 %edx.2 %edx.2 cc.1 cc.1 cc.1 cc.1 jl jl jl load load load cmpl cmpl cmpl incl incl 3 3 3 %ecx.0 %edx.3 %edx.3 t.1 t.1 t.1 cc.2 cc.2 cc.2 cc.2 %ecx.0 4 4 4 jl jl jl load load cmpl cmpl i=0 i=0 i=0 t.2 t.2 t.2 cc.3 cc.3 jl jl 5 5 5 imull imull imull t.3 t.3 6 6 6 7 7 7 %ecx.1 %ecx.1 %ecx.1 Iteration 1 Iteration 1 8 8 8 Performance on CPU: Limiting factor becomes latency of integer multiplier Data hazard Gives CPE of 4.0 9 9 9 imull imull imull i=1 i=1 i=1 10 10 10 Cycle Cycle Cycle 11 11 11 %ecx.2 %ecx.2 %ecx.2 Iteration 2 Iteration 2 12 12 12 13 13 13 imull imull i=2 i=2 14 14 14 15 15 15 %ecx.3 %ecx.3 28 Ding Yuan, ECE454 2025-05-27 Iteration 3 Iteration 3

Unrolling: Performance Summary Unrolling Degree vsum Relevant FU Latency 1 cycle 1 2 3 4 8 16 2.00 1.50 1.33 1.50 1.25 1.06 vprod 4 cycles 4.00 vFPsum 3 cycles 3.00 vFPprod 5 cycles 5.00 Only helps integer sum for our examples Other cases constrained by functional unit latencies Effect is nonlinear with degree of unrolling Many subtle effects determine exact scheduling of operations can we do better for vprod? 2025-05-27 29 Ding Yuan, ECE454

Visualizing vprod Computation ((((((((((((1 * x0) * x1) * x2) * x3) * x4) * x5) * x6) * x7) * x8) * x9) * x10) * x11) 1x0 * x1 * x2 * x3 Performance N elements, D cycles/operation N*D cycles = N * 4 * x4 * x5 * x6 * x7 * x8 * x9 * x10 * x11 * 30 Ding Yuan, ECE454 2025-05-27

Parallel Unrolling Method1: vprod void vprod5pu1(vec_ptr v, int *dest) { int length = vec_length(v); int limit = length-1; int *data = get_vec_start(v); int x = 1; int i; Code Version Integer product Optimization Multiply pairs of elements together And then update product for (i = 0; i < limit; i+=2) { x = x * (data[i] * data[i+1]); } if (i < length) // fix-up x0 *= data[i]; *dest = x; } Performance CPE = 2 Assume unlimited hardware resources from now on 31 Ding Yuan, ECE454 2025-05-27

%edx.0 addl %edx.1 load cmpl addl load jl load cmpl load jl Still have the data dependency btw. multiplications, but it s one dep. every two elements tmp=data[i] * data[i+1] mull mull x=x*tmp mull mull 32 Ding Yuan, ECE454 2025-05-27

1 1 x x0 0 1 1 x x0 0 Order of Operations Can Matter! * * x x1 1 x x1 1 * * x x2 2 x x2 2 * * x x3 3 x x3 3 * * x x4 4 x x4 4 /* Combine 2 elements at a time */ for (i = 0; i < limit; i+=2) { x = (x * data[i]) * data[i+1]; } * * x x5 5 x x5 5 * * x x6 6 x x6 6 * * x x7 7 x x7 7 * * x x8 8 x x8 8 * * x x9 9 x x9 9 CPE = 4.00 All multiplies performed in sequence * * x x10 10 x x10 10 Compiler can do it for you * * x x11 11 x x11 11 * * /* Combine 2 elements at a time */ for (i = 0; i < limit; i+=2) { x = x * (data[i] * data[i+1]); } x x0 0 x x0 0 x x0 0 x x1 1 x x1 1 x x1 1 * * * 1 1 1 1 x x2 2 x x2 2 x x2 2 x x3 3 x x3 3 x x3 3 * * * * * x x4 4 x x4 4 x x4 4 x x5 5 x x5 5 x x5 5 * * * * * x x6 6 x x6 6 x x6 6 x x7 7 x x7 7 x x7 7 CPE = 2 Multiplies overlap * * * * * x x8 8 x x8 8 x x8 8 x x9 9 x x9 9 x x9 9 * * * * * x x10 10 x x10 10 x x10 10 x x11 11 x x11 11 x x11 11 * * * * * * * 33 Ding Yuan, ECE454 2025-05-27

Parallel Unrolling Method2: vprod void vprod6pu2(vec_ptr v, int *dest) { int length = vec_length(v); int limit = length-1; int *data = get_vec_start(v); int x0 = 1; int x1 = 1; int i; for (i = 0; i < limit; i+=2) x0 *= data[i]; x1 *= data[i+1] } if (i < length) // fix-up x0 *= data[i]; *dest = x0 * x1; } Code Version Integer product Optimization Accumulate in two different products Can be performed simultaneously Combine at end Performance CPE = 2.0 2X performance Compiler won t do it for you 34 Ding Yuan, ECE454 2025-05-27

Method2: vprod6pu2 visualized 1 1 x x0 0 1 1 x x0 0 1 1 x x0 0 1 1 x x0 0 * * * * x x1 1 x x1 1 x x1 1 x x1 1 * * * * x x2 2 x x2 2 x x2 2 x x2 2 * * * * x x3 3 x x3 3 x x3 3 x x3 3 for (i = 0; i < limit; i+=2) x0 *= data[i]; x1 *= data[i+1] } *dest = x0 * x1; * * * * x x4 4 x x4 4 x x4 4 x x4 4 * * * * x x5 5 x x5 5 x x5 5 x x5 5 * * * * x x6 6 x x6 6 x x6 6 x x6 6 * * * * x x7 7 x x7 7 x x7 7 x x7 7 * * * * x x8 8 x x8 8 x x8 8 x x8 8 * * * * x x9 9 x x9 9 x x9 9 x x9 9 * * * * x x10 10 x x10 10 x x10 10 x x10 10 * * * * x x11 11 x x11 11 x x11 11 x x11 11 * * * * 35 Ding Yuan, ECE454 2025-05-27

Trade-off for loop unrolling Benefits Reduce the loop operations (e.g., condition check & loop variable increment) Reason for the integer add (vsum5) to achieve a CPE of 1 Enhance parallelism (often require manually rewrite the loop body) Harm Increased code size Register pressure Lots of Registers to hold sums/products IA32: Only 6 usable integer registers, 8 FP registers When not enough registers, must spill temporaries onto stack Wipes out any performance gains 36 Ding Yuan, ECE454 2025-05-27

Needed for Effective Parallel Unrolling: Mathematical Combining operation must be associative & commutative OK for integer multiplication Not strictly true for floating point OK for most applications Hardware Pipelined functional units, superscalar execution Lots of Registers to hold sums/products 37 Ding Yuan, ECE454 2025-05-27

Summary 38 Ding Yuan, ECE454 2025-05-27

Summary of Optimizations Version vsum vsum1 Vsum2 vsum3 vsum4 vsum5 vprod4 vprod5pu1 vprod5pu2 Optimization -g -O2 LICM Inlining + LICM mem-ref reduction unrolling (3x) (same as vsum4) par. unrolling 1 par. unrolling 2 Applied to Manual or GCC CPE 42.06 31.25 20.66 6.00 2.00 1.33 4.00 GCC manual manual manual -funroll-loops* vec_length() get_vec_element() *dest for loop for loop for loop manual manual 2.0 2.0 39 Ding Yuan, ECE454 2025-05-27

Punchlines Before you start: will optimization be worth the effort? profile to estimate potential impact Get the most out of your compiler before going manual trade-off: manual optimization vs readable/maintainable Exploit compiler opts for readable/maintainable code have lots of functions/modularity debugging/tracing code (enabled by static flags) Limit use of pointers pointer-based arrays and pointer arithmetic function pointers and virtual functions (unfortunately) For super-performance-critical code: zoom into assembly to look for optimization opportunities consider the instruction-parallelism capabilities of CPU 40 Ding Yuan, ECE454 2025-05-27

How to know what the compiler did? run: objdump d a.out prints a listing of instructions , with inst address and encoding void main(){ int i = 5; int x = 10; inst encoding 08048394 <main>: inst address 8048394: 55 push %ebp 8048395: 89 e5 mov %esp,%ebp 8048397: 83 ec 10 sub $0x10,%esp 804839a: c7 45 f8 05 00 00 00 movl $0x5,-0x8(%ebp) 80483a1: c7 45 fc 0a 00 00 00 movl $0xa,-0x4(%ebp) 41 Ding Yuan, ECE454 2025-05-27

Advanced Topics Profile-Directed Feedback (PDF) Basically feeding gprof-like measurements into compiler Allows compiler to make smarter choices/optimizations Eg., handle the if (one-in-a-million) case well Just-in-Time Compilation and Optimization (JIT) Performs simple version of many of the basic optimizations Does them dynamically at run-time, exploits online PDF Eg., Java JIT Current hot topics in compilation (more later): Automatic parallelization (exploiting multicores) 42 Ding Yuan, ECE454 2025-05-27