Advanced Forecasting Models with ARIMA Errors

Learn how to incorporate ARIMA errors into regression models for improved forecasting accuracy. Explore the modeling procedure and the challenges associated with autocorrelated errors in regression analysis.

Presentation Transcript

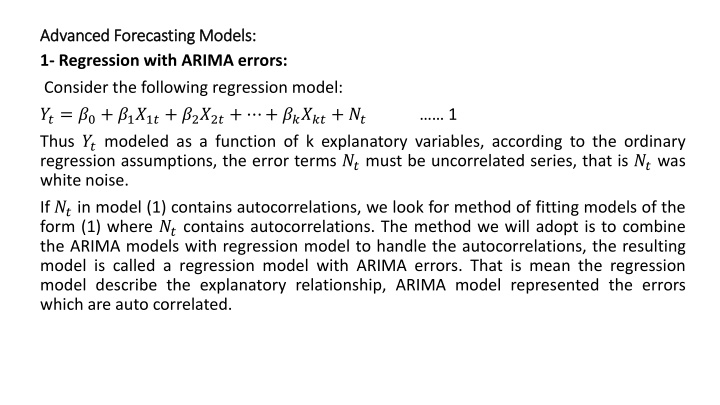

Advanced Forecasting Models: 1- Regression with ARIMA errors: Consider the following regression model:

$$Y_t = \beta_0 + \beta_1 X_{1,t} + \beta_2 X_{2,t} + \dots + \beta_k X_{k,t} + N_t \qquad (1)$$

Thus $Y_t$ is modeled as a function of $k$ explanatory variables. Under the ordinary regression assumptions, the error terms $N_t$ must form an uncorrelated series, that is, $N_t$ is white noise. If $N_t$ in model (1) is autocorrelated, we need a method for fitting models of the form (1) that allows for this. The method we adopt is to combine ARIMA models with the regression model to handle the autocorrelation; the resulting model is called a regression model with ARIMA errors. That is, the regression part describes the explanatory relationship, and the ARIMA part represents the autocorrelated errors.

For example, if the errors $N_t$ can be modeled as ARIMA(1,1,1), model (1) can be written as follows:

$$Y_t = \beta_0 + \beta_1 X_{1,t} + \beta_2 X_{2,t} + \dots + \beta_k X_{k,t} + N_t \qquad (2)$$

where

$$(1 - \phi_1 B)(1 - B) N_t = (1 - \theta_1 B) e_t \qquad (3)$$

and $e_t$ is a white noise series, that is, $e_t \sim \mathrm{WN}(0, \sigma^2)$. Note that $B$ is the backshift operator ($B Y_t = Y_{t-1}$). We must distinguish between $N_t$ and $e_t$: in model (2), $N_t$ are called the errors and $e_t$ the residuals. Under the assumptions of ordinary regression, $N_t$ in model (1) is white noise, so $N_t = e_t$. In model (2) we assume that the errors $N_t$ are nonstationary, so we model them as ARIMA(1,1,1). If we now difference all the variables in model (2), we obtain a regression model with ARMA(1,1) errors:

$$Y'_t = \beta_1 X'_{1,t} + \beta_2 X'_{2,t} + \dots + \beta_k X'_{k,t} + N'_t \qquad (4)$$

That is, after differencing all the variables, the errors $N'_t$ become a stationary series. The intercept $\beta_0$ drops out because it is constant over time.

where

$$Y'_t = (1 - B) Y_t = Y_t - Y_{t-1}, \qquad X'_{i,t} = (1 - B) X_{i,t} = X_{i,t} - X_{i,t-1}, \qquad N'_t = (1 - B) N_t = N_t - N_{t-1}.$$

In general, any regression model with ARIMA errors can be rewritten as a regression with ARMA errors by differencing all the variables in the model with the same differencing operator as in the ARIMA model.
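The differencing step can be checked numerically. The following is a minimal sketch, not part of the original slides: it assumes a single explanatory variable, illustrative parameter values, and hypothetical helper names (`difference`, `simulate_arma11`). It builds an error series that is ARIMA(1,1,1) by integrating an ARMA(1,1) series once, then confirms that differencing every variable removes the intercept and leaves a regression whose errors are the stationary ARMA(1,1) series.

```python
import random

def difference(series):
    """Apply (1 - B): return series[t] - series[t-1] for t = 1..n-1."""
    return [series[t] - series[t - 1] for t in range(1, len(series))]

def simulate_arma11(n, phi, theta, rng):
    """Simulate (1 - phi B) u_t = (1 - theta B) e_t with Gaussian white noise e_t."""
    u, prev_u, prev_e = [], 0.0, 0.0
    for _ in range(n):
        e = rng.gauss(0.0, 1.0)
        cur = phi * prev_u + e - theta * prev_e
        u.append(cur)
        prev_u, prev_e = cur, e
    return u

rng = random.Random(0)
n, b0, b1 = 200, 5.0, 2.0            # illustrative values, not from the slides
x = [rng.gauss(0.0, 1.0) for _ in range(n)]
arma = simulate_arma11(n, phi=0.6, theta=0.3, rng=rng)

# Integrate the ARMA(1,1) series once so that the error series is ARIMA(1,1,1).
errors, total = [], 0.0
for u in arma:
    total += u
    errors.append(total)

y = [b0 + b1 * x[t] + errors[t] for t in range(n)]

# Difference every variable with the same operator (1 - B).
y_d, x_d, err_d = difference(y), difference(x), difference(errors)

# The intercept drops out: y'_t - b1 * x'_t should equal the differenced errors,
# which in turn should equal the stationary ARMA(1,1) series.
max_gap = max(abs(y_d[t] - b1 * x_d[t] - err_d[t]) for t in range(n - 1))
max_gap_arma = max(abs(err_d[t] - arma[t + 1]) for t in range(n - 1))
print(max_gap, max_gap_arma)
```

Both gaps are zero up to floating-point rounding, which is the numerical counterpart of rewriting the ARIMA-error model as an ARMA-error model in the differenced variables.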

Modeling procedure: There are two main problems with applying OLS estimation to a regression problem with autocorrelated errors:
1- The resulting estimates are no longer the best way to compute the coefficients, as they do not take account of the time relationships in the data.
2- The standard errors of the coefficients are incorrect when the errors are autocorrelated. This also invalidates the t-tests, F-tests, and prediction intervals.

The second problem is more serious than the first because it can lead to misleading results. If the standard errors obtained using OLS are smaller than they should be, some explanatory variables may appear to be significant when in fact they are not; this is known as spurious regression. So regression with autocorrelated errors needs a different approach from OLS. Instead of OLS estimation we can use either generalized least squares (GLS) estimation or maximum likelihood estimation.
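The second problem can be illustrated with a small simulation. This is a sketch under assumptions that are not in the original slides: one regressor, a regressor that is itself autocorrelated, an AR(1) coefficient of 0.9, and the hypothetical helper names `ar1_series`, `ols_slope_t`, and `false_positive_rate`. The response is generated independently of the regressor, so any "significant" slope is a false positive, and the share of such cases shows how far the OLS standard errors are off.

```python
import math
import random

def ar1_series(n, phi, rng):
    """Simulate an AR(1) series s_t = phi * s_{t-1} + Gaussian white noise."""
    series, prev = [], 0.0
    for _ in range(n):
        prev = phi * prev + rng.gauss(0.0, 1.0)
        series.append(prev)
    return series

def ols_slope_t(x, y):
    """t-statistic of the OLS slope in y = b0 + b1 * x + error."""
    n = len(x)
    xbar, ybar = sum(x) / n, sum(y) / n
    sxx = sum((xi - xbar) ** 2 for xi in x)
    sxy = sum((xi - xbar) * (yi - ybar) for xi, yi in zip(x, y))
    b1 = sxy / sxx
    b0 = ybar - b1 * xbar
    rss = sum((yi - b0 - b1 * xi) ** 2 for xi, yi in zip(x, y))
    se_b1 = math.sqrt(rss / (n - 2) / sxx)   # usual OLS standard error
    return b1 / se_b1

def false_positive_rate(phi, n=200, reps=300, seed=1):
    """Share of replications where an unrelated x looks significant (|t| > 1.96)."""
    rng = random.Random(seed)
    hits = 0
    for _ in range(reps):
        x = ar1_series(n, phi, rng)   # regressor
        y = ar1_series(n, phi, rng)   # response, generated independently of x
        if abs(ols_slope_t(x, y)) > 1.96:
            hits += 1
    return hits / reps

rate_white = false_positive_rate(phi=0.0)   # uncorrelated errors
rate_ar = false_positive_rate(phi=0.9)      # strongly autocorrelated errors
print(rate_white, rate_ar)
```

With uncorrelated series the false-positive share stays near the nominal 5% level, while with strongly autocorrelated series it is far higher, even though the true slope is zero in both cases.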

Generalized least squares estimators are obtained by minimizing

$$G = \sum_{i} \sum_{j} w_i w_j N_i N_j \qquad (5)$$

where $w_i$ and $w_j$ are weights based on the pattern of autocorrelations. Note that instead of only summing the squared errors, we also sum the cross-products of the errors. Generalized least squares estimation only works for stationary errors, that is, $N_t$ must follow an ARMA model. If a nonstationary model is required for the errors, the model can be estimated by first differencing all the variables and then fitting a regression with an ARMA model for the errors.

The modeling procedure (based on Pankratz, 1991) is as follows:
1- Fit the regression model with a proxy AR(1) or AR(2) model for the errors, and then estimate $N_t = Y_t - \hat{Y}_t$, where $\hat{Y}_t$ is the fitted regression part.
2- Study $N_t$ as a separate time series. If $N_t$ is stationary:
a- Determine the order of the ARMA model according to the Box-Jenkins procedure.

b- Suppose that $N_t$ follows an AR(1) model, that is,

$$N_t - \phi_1 N_{t-1} = e_t \quad \text{or} \quad N_t = \phi_1 N_{t-1} + e_t,$$

so the suggested new model becomes

$$Y_t = \beta_0 + \beta_1 X_{1,t} + \beta_2 X_{2,t} + \dots + \beta_k X_{k,t} + \phi_1 N_{t-1} + e_t \qquad (6)$$

c- Use GLS to estimate the parameters of the new model (6), and use the Box-Jenkins procedure to check that $e_t$ (the residual series) looks like white noise.
3- If $N_t$ appears to be nonstationary:
a- Difference all the variables.
b- Fit the model using the differenced variables, then estimate the errors of the differenced model, and follow the same steps as when $N_t$ is stationary.
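The stationary branch of the procedure can be sketched in code for the AR(1) case. This sketch makes several assumptions that are not in the original slides: a single explanatory variable, simulated data with illustrative parameter values, and a Cochrane-Orcutt-style iteration (repeated quasi-differencing, one form of feasible GLS) swapped in for a full GLS or maximum likelihood fit. The helper names `ols`, `lag1_autocorr`, and `fit_regression_ar1` are hypothetical.

```python
import random

def ols(x, y):
    """Least squares of y on x with an intercept; returns (intercept, slope)."""
    n = len(x)
    xbar, ybar = sum(x) / n, sum(y) / n
    sxx = sum((xi - xbar) ** 2 for xi in x)
    sxy = sum((xi - xbar) * (yi - ybar) for xi, yi in zip(x, y))
    slope = sxy / sxx
    return ybar - slope * xbar, slope

def lag1_autocorr(series):
    """Sample autocorrelation at lag 1, a quick white-noise check."""
    n = len(series)
    mean = sum(series) / n
    dev = [s - mean for s in series]
    return sum(dev[t] * dev[t - 1] for t in range(1, n)) / sum(d * d for d in dev)

def fit_regression_ar1(x, y, n_iter=10):
    """Regression with AR(1) errors by repeated quasi-differencing."""
    b0, b1 = ols(x, y)                       # starting values from plain OLS
    phi = 0.0
    for _ in range(n_iter):
        # Estimated error series: observed value minus fitted regression part.
        err = [yi - b0 - b1 * xi for xi, yi in zip(x, y)]
        # AR(1) coefficient from regressing err_t on err_{t-1} (no intercept).
        phi = (sum(err[t] * err[t - 1] for t in range(1, len(err)))
               / sum(v * v for v in err[:-1]))
        # Quasi-difference both variables so the transformed errors are white noise.
        xs = [x[t] - phi * x[t - 1] for t in range(1, len(x))]
        ys = [y[t] - phi * y[t - 1] for t in range(1, len(y))]
        a, b1 = ols(xs, ys)
        b0 = a / (1.0 - phi)                 # transformed intercept is b0 * (1 - phi)
    err = [yi - b0 - b1 * xi for xi, yi in zip(x, y)]
    resid = [err[t] - phi * err[t - 1] for t in range(1, len(err))]
    return b0, b1, phi, resid

# Simulated data: y_t = 1 + 2 * x_t + error, with AR(1) errors (coefficient 0.7).
rng = random.Random(42)
n = 500
x = [rng.gauss(0.0, 1.0) for _ in range(n)]
true_err, prev = [], 0.0
for _ in range(n):
    prev = 0.7 * prev + rng.gauss(0.0, 1.0)
    true_err.append(prev)
y = [1.0 + 2.0 * x[t] + true_err[t] for t in range(n)]

b0_hat, b1_hat, phi_hat, resid = fit_regression_ar1(x, y)
print(b0_hat, b1_hat, phi_hat, lag1_autocorr(resid))
```

A lag-1 autocorrelation of the final residuals close to zero is only a rough stand-in for the Box-Jenkins diagnostic checks named in step c. In practice a higher-order ARMA model for the errors would need a general-purpose estimation routine rather than this hand-rolled iteration.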

Forecasting using regression with ARIMA errors: Forecasting with a regression model with ARIMA errors requires estimating the parameters using GLS to obtain the estimated model, which gives the forecast $h$ steps ahead:

$$\hat{Y}_{t+h} = \hat{\beta}_1 X_{1,t+h} + \hat{\beta}_2 X_{2,t+h} + \dots + \hat{\beta}_k X_{k,t+h} + \hat{N}_{t+h} \qquad (7)$$

(plus the estimated intercept $\hat{\beta}_0$ when the model includes one). To find the forecast $\hat{Y}_{t+h}$ we need the forecast of the regression part and the forecast of the ARMA part, the latter obtained using the Box-Jenkins procedure. The explanatory variables (the $X$'s) may be known or unknown over the forecast period. If they are unknown, we must forecast them by building a separate forecasting model for each explanatory variable, using either time series models or cross-sectional models that depend on other variables. If they are known, no such forecasts are needed.

The prediction intervals are calculated by combining the effects of the regression and ARIMA parts of the model. There are four sources of variation which ought to be accounted for in the prediction intervals:
1- The variation due to the error series $N_t$.
2- The variation due to the error in forecasting the explanatory variables (where necessary).
3- The variation due to estimating the regression part of the model.
4- The variation due to estimating the ARIMA part of the model.
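How the two parts of the forecast combine can be sketched for the simplest case. The assumptions here are not from the original slides: one explanatory variable with known future values, an intercept, AR(1) errors, already-estimated parameters passed in as plain numbers, and the hypothetical function names `forecast_regression_ar1` and `error_series_variance`. The variance function covers only the first of the four sources of variation (the error series itself), so intervals built from it alone would be too narrow.

```python
def forecast_regression_ar1(b0, b1, phi, last_error, x_future):
    """Point forecasts: regression part plus the AR(1) error forecast."""
    forecasts = []
    error_forecast = last_error
    for x_h in x_future:
        # AR(1) error forecast: each step ahead multiplies by phi once more.
        error_forecast = phi * error_forecast
        forecasts.append(b0 + b1 * x_h + error_forecast)
    return forecasts

def error_series_variance(phi, sigma2, horizon):
    """Forecast-error variance from the AR(1) error series alone, h = 1..horizon."""
    variances, total = [], 0.0
    for j in range(horizon):
        total += phi ** (2 * j)      # squared weight of the shock j steps back
        variances.append(sigma2 * total)
    return variances

# Illustrative numbers: the last estimated error is 4.0 and x stays at 1.0.
point = forecast_regression_ar1(b0=1.0, b1=2.0, phi=0.5, last_error=4.0,
                                x_future=[1.0, 1.0, 1.0])
var_h = error_series_variance(phi=0.5, sigma2=1.0, horizon=3)
print(point)   # [5.0, 4.0, 3.5]
print(var_h)   # [1.0, 1.25, 1.3125]
```

The error forecast decays toward zero as the horizon grows, so the forecasts approach the regression part alone, while the variance contributed by the error series increases toward its stationary level.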