Advanced Representation Learning Techniques and Theoretical Insights

Explore advanced methods in representation learning such as neural networks, deep convolutional networks, and linear discriminant analysis. Dive into the theoretical aspects of creating suitable linear spaces for classification, along with the concept of random hyperplanes and their applications in data representation.

Presentation Transcript

Representation learning
Usman Roshan

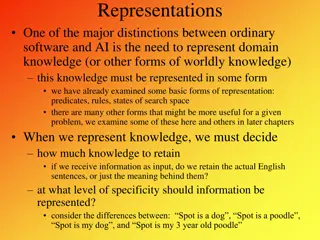

Representation learning

- Given input data X of dimensions n x d, we want to learn a new data matrix X' of dimensions n x d'.
- The objective is to achieve better classification.
- Most methods attempt to find a new representation in which the data are linearly separable.
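
To make the idea of a new representation concrete, here is a minimal sketch assuming NumPy and a made-up toy dataset (two concentric rings, which no line separates in the original 2-D space). Appending one derived feature, the squared norm, gives X' with d' = 3, where a single hyperplane separates the classes. The radii, threshold, and variable names are illustrative choices, not part of the slides.

```python
import numpy as np

rng = np.random.default_rng(1)
n_per_class = 100
# Inner class: radius in [0, 1); outer class: radius in [2, 3). Not linearly separable in 2-D.
r = np.concatenate([rng.uniform(0, 1, n_per_class), rng.uniform(2, 3, n_per_class)])
t = rng.uniform(0, 2 * np.pi, 2 * n_per_class)
X = np.column_stack([r * np.cos(t), r * np.sin(t)])   # original data, d = 2 columns
y = np.concatenate([-np.ones(n_per_class), np.ones(n_per_class)])

# New representation X' with d' = 3: append the squared norm as an extra feature.
X_new = np.column_stack([X, (X ** 2).sum(axis=1)])

# In X', the hyperplane x1^2 + x2^2 = 2.25 (radius 1.5) separates the two rings.
w, b = np.array([0.0, 0.0, 1.0]), -2.25
pred = np.sign(X_new @ w + b)
print("training accuracy:", (pred == y).mean())   # 1.0
```

Here the new feature is hand-designed; the methods on the following slides aim to learn such representations from data instead.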

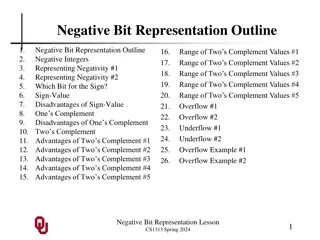

Methods

- Dimensionality reduction
  - Linear discriminant analysis (LDA) aims to maximize a signal-to-noise ratio (separation of the class means relative to the within-class variance)
- Feature selection
  - Univariate methods, such as the signal-to-noise score
  - Multivariate, classification-based methods
- Kernels
  - Implicit dot products in a different feature space
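
The three families above can be sketched in a few lines each. This is a minimal illustration assuming NumPy and two classes labeled 0/1: the two-class Fisher LDA direction S_w^{-1}(m1 - m0), a per-feature signal-to-noise score of the form |mu1 - mu0| / (sd1 + sd0) (one common definition; the slides do not fix the exact formula), and a check that the degree-2 polynomial kernel (x . z)^2 equals a dot product in an explicit 3-D feature space. The toy data and function names are hypothetical.

```python
import numpy as np

def fisher_lda_direction(X, y):
    """Two-class Fisher LDA: w proportional to S_w^{-1} (m1 - m0), returned with unit length."""
    X0, X1 = X[y == 0], X[y == 1]
    m0, m1 = X0.mean(axis=0), X1.mean(axis=0)
    # Within-class scatter: sum of the two per-class scatter matrices.
    Sw = (X0 - m0).T @ (X0 - m0) + (X1 - m1).T @ (X1 - m1)
    w = np.linalg.solve(Sw, m1 - m0)
    return w / np.linalg.norm(w)

def snr_scores(X, y):
    """Univariate signal-to-noise score per feature: |mu1 - mu0| / (sd1 + sd0)."""
    X0, X1 = X[y == 0], X[y == 1]
    return np.abs(X1.mean(axis=0) - X0.mean(axis=0)) / (X1.std(axis=0) + X0.std(axis=0))

def poly2_features(x):
    """Explicit 2-D feature map whose dot product equals the kernel (x . z)^2."""
    return np.array([x[0] ** 2, x[1] ** 2, np.sqrt(2) * x[0] * x[1]])

rng = np.random.default_rng(0)
# Toy data: feature 0 carries the class signal, feature 1 is pure noise.
n = 200
y = np.repeat([0, 1], n // 2)
X = np.column_stack([y * 3.0 + rng.normal(size=n), rng.normal(size=n)])

w = fisher_lda_direction(X, y)
scores = snr_scores(X, y)
print("LDA direction:", w)     # dominated by feature 0
print("SNR scores:", scores)   # feature 0 scores far higher than feature 1

# Kernel trick: the kernel value matches the explicit dot product (up to rounding).
x, z = np.array([1.0, 2.0]), np.array([3.0, -1.0])
print((x @ z) ** 2, poly2_features(x) @ poly2_features(z))
```

The kernel check is the point of the "implicit dot products" bullet: a kernel method only ever evaluates (x . z)^2 and never builds the feature vectors.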

Methods

- Neural networks
  - Solving XOR with one hidden layer
  - In fact, a network with a single hidden layer of sufficiently many units can approximate any continuous function on a compact domain to arbitrary accuracy: the universal approximation theorem of Cybenko (1989) and Hornik (1991)
- K-means based feature learning
  - Applied to image recognition (Coates et al., 2011)
  - Protein sequence classification (Melman and Roshan, 2017)
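
Both ideas on this slide can be sketched with NumPy. The first part solves XOR with one hidden layer of two step units; the weights are hand-set for illustration (one hidden unit computes OR, the other AND, and the output fires for "OR and not AND"), not learned. The second part is a k-means feature map: plain Lloyd's k-means followed by a "triangle" encoding, max(0, mean distance - distance to centroid k), which is how we recall the encoding in Coates et al. (2011); treat that formula, and all names and sizes here, as assumptions.

```python
import numpy as np

def step(v):
    """Heaviside step activation: 1 where v > 0, else 0."""
    return (v > 0).astype(float)

def xor_net(X):
    """2-2-1 network with hand-set weights: hidden units compute OR and AND."""
    W1 = np.array([[1.0, 1.0], [1.0, 1.0]])   # both hidden units sum the two inputs
    b1 = np.array([-0.5, -1.5])                # OR fires when the sum is >= 1, AND when it is 2
    h = step(X @ W1 + b1)
    w2, b2 = np.array([1.0, -1.0]), -0.5       # output: OR and not AND
    return step(h @ w2 + b2)

X = np.array([[0, 0], [0, 1], [1, 0], [1, 1]], dtype=float)
print(xor_net(X))  # [0. 1. 1. 0.]

def kmeans(data, k, iters=20, seed=0):
    """Plain Lloyd's k-means; returns the k x d matrix of centroids."""
    rng = np.random.default_rng(seed)
    C = data[rng.choice(len(data), size=k, replace=False)].copy()
    for _ in range(iters):
        dist = np.linalg.norm(data[:, None, :] - C[None, :, :], axis=2)
        labels = dist.argmin(axis=1)
        for j in range(k):
            if np.any(labels == j):            # leave an empty cluster's centroid unchanged
                C[j] = data[labels == j].mean(axis=0)
    return C

def triangle_features(data, C):
    """k features per row: max(0, mean distance to all centroids - distance to centroid k)."""
    z = np.linalg.norm(data[:, None, :] - C[None, :, :], axis=2)   # n x k distances
    return np.maximum(0.0, z.mean(axis=1, keepdims=True) - z)

rng = np.random.default_rng(0)
data = rng.normal(size=(300, 5))
C = kmeans(data, k=8)
F = triangle_features(data, C)   # new 300 x 8 representation
print(F.shape)                   # (300, 8)
```

A linear classifier would then be trained on F rather than on the raw rows.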

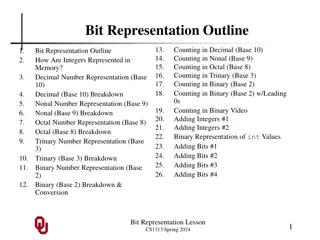

Methods

- Deep convolutional networks (CNNs)
  - Several layers of convolution, pooling, and dense layers (dense layers usually at the end)
  - ImageNet classification with deep CNNs (Krizhevsky et al., 2012)
- Random hyperplanes
  - Extreme learning machine
  - Random bit regression
  - Deep networks with random weights
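
The random-hyperplane idea behind an extreme learning machine can be sketched as follows, assuming NumPy and labels in {-1, +1}: the hidden layer's hyperplanes are drawn at random and never trained, and only the output weights are fitted, here by least squares. The tanh activation, 50 hidden units, and function names are illustrative assumptions, not a reference implementation.

```python
import numpy as np

def elm_fit(X, y, n_hidden=50, seed=0):
    """Random, untrained hidden layer plus least-squares output weights."""
    rng = np.random.default_rng(seed)
    W = rng.normal(size=(X.shape[1], n_hidden))   # random hyperplanes, never updated
    b = rng.normal(size=n_hidden)
    H = np.tanh(X @ W + b)                         # n x n_hidden random features
    beta, *_ = np.linalg.lstsq(H, y, rcond=None)   # only the output layer is fitted
    return W, b, beta

def elm_predict(X, W, b, beta):
    return np.sign(np.tanh(X @ W + b) @ beta)

X = np.array([[0.0, 0.0], [0.0, 1.0], [1.0, 0.0], [1.0, 1.0]])
y = np.array([-1.0, 1.0, 1.0, -1.0])   # XOR labels
W, b, beta = elm_fit(X, y)
print(elm_predict(X, W, b, beta))      # [-1.  1.  1. -1.]
```

With more hidden units than training points, the least-squares fit interpolates the training labels exactly, so this toy run says nothing about generalization; that gap is the question raised on the next slide.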

Theory

What do we know theoretically about creating a linear space from input data?

- Thomas Cover (1965): every set of n points has a trivial n-dimensional linear representation. Simply assign each point to a distinct vertex of an (n-1)-dimensional simplex; any labeling of the vertices is then linearly separable.
- But what about creating a new linear space suitable for classification? (We want generalization to test examples, not just linear separability of the training data.)
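
The simplex construction can be checked directly. In this sketch (assuming NumPy; n = 4 is an arbitrary choice), point i is mapped to the standard basis vector e_i in R^n; these n vectors are the vertices of an (n-1)-dimensional simplex. For any labeling y, the weight vector w = y with zero bias gives w . e_i = y_i, so every one of the 2^n labelings is separated.

```python
import itertools

import numpy as np

n = 4
# Point i is represented by the standard basis vector e_i (row i of the identity).
E = np.eye(n)

# Check every labeling in {-1, +1}^n: w = y, bias 0, separates the n points.
all_separable = all(
    np.array_equal(np.sign(E @ np.array(y)), np.array(y))
    for y in itertools.product([-1.0, 1.0], repeat=n)
)
print(all_separable)  # True
```

The construction also shows why it is trivial: a new test point has no vertex of its own, so the representation carries no information for generalization.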

Random hyperplanes: what do we know?

- Johnson-Lindenstrauss lemma: under a random projection to a lower-dimensional space, distances between vectors are preserved up to a factor of 1 ± ε.
- Is the margin preserved?
- First, what can we say about the probability that a random hyperplane places two points on opposite sides of the plane? In other words, that their predictions will be opposite.
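
A quick empirical look at the Johnson-Lindenstrauss behavior, as a minimal sketch assuming NumPy: project 20 random points from 1000 to 400 dimensions with a Gaussian matrix scaled by 1/sqrt(k), so squared lengths are preserved in expectation, and inspect the ratio of projected to original pairwise distances. The sizes are arbitrary illustrative choices.

```python
import numpy as np

rng = np.random.default_rng(0)
n, d, k = 20, 1000, 400
X = rng.normal(size=(n, d))

# Gaussian random projection, scaled so that E[||R^T v||^2] = ||v||^2.
R = rng.normal(size=(d, k)) / np.sqrt(k)
Y = X @ R

# Ratio of projected to original distance for every pair of points.
ratios = np.array([
    np.linalg.norm(Y[i] - Y[j]) / np.linalg.norm(X[i] - X[j])
    for i in range(n)
    for j in range(i + 1, n)
])
print(ratios.min(), ratios.max())   # both close to 1
```

Smaller k widens the spread of these ratios; the lemma bounds how small k can be, roughly O(log n / ε^2), for all pairs to stay within 1 ± ε with high probability.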

Random hyperplanes

- Pick a random vector $w$ from $\mathbb{R}^d$ of length 1 (each coordinate drawn independently from a Gaussian, then the vector normalized).
- Consider points $x_i$ and $x_j$ on the unit hypersphere (data normalized row-wise to length 1). Let $\theta$ be the angle between them (in radians). Then

$$\Pr\left[\operatorname{sign}(w^T x_i) \neq \operatorname{sign}(w^T x_j)\right] = \frac{\theta}{\pi}$$

(Goemans and Williamson, 1995, Journal of the ACM)
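
The θ/π identity is easy to check by Monte Carlo. This sketch assumes NumPy and picks θ = 1 radian in d = 10 dimensions (both arbitrary): it builds two unit vectors at that angle, draws many random unit vectors w, and counts how often the two points fall on opposite sides.

```python
import numpy as np

rng = np.random.default_rng(0)
d, theta, trials = 10, 1.0, 200_000

# Two unit vectors with angle theta (radians) between them.
xi = np.zeros(d)
xi[0] = 1.0
xj = np.zeros(d)
xj[0], xj[1] = np.cos(theta), np.sin(theta)

# Random hyperplanes through the origin: Gaussian coordinates, normalized to length 1.
W = rng.normal(size=(trials, d))
W /= np.linalg.norm(W, axis=1, keepdims=True)

# Fraction of hyperplanes that put xi and xj on opposite sides.
estimate = np.mean(np.sign(W @ xi) != np.sign(W @ xj))
print(estimate, theta / np.pi)   # both approximately 0.318
```

The normalization of w does not change any sign, so the same estimate results without it; it is kept only to match the statement above.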

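Random hyperplanes

- Based on the previous theorem: normalized vectors with a small angle between them will have short Hamming distances between their bit vectors of random-hyperplane signs (the expected fraction of differing bits is θ/π).
- Balcan et al. (2006) and Shi et al. (2012) show that random projections preserve the margin to some extent.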
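
The Hamming-distance consequence can be illustrated with a sketch, assuming NumPy: encode each vector as bits recording which side of each of many random hyperplanes it falls on, then compare a nearby pair with a roughly orthogonal pair. The helper names, 4000 bits, and toy vectors are hypothetical choices for illustration.

```python
import numpy as np

def sign_codes(X, n_bits, seed=0):
    """One bit per random hyperplane: True where the row lies on its positive side."""
    rng = np.random.default_rng(seed)
    W = rng.normal(size=(X.shape[1], n_bits))   # scale of W does not affect the signs
    return X @ W > 0

def unit(v):
    return v / np.linalg.norm(v)

def angle(u, v):
    """Angle in radians between unit vectors u and v."""
    return np.arccos(np.clip(u @ v, -1.0, 1.0))

d = 50
rng = np.random.default_rng(1)
a = unit(rng.normal(size=d))
near = unit(a + 0.1 * unit(rng.normal(size=d)))   # small angle to a
far = unit(rng.normal(size=d))                    # roughly orthogonal to a

codes = sign_codes(np.vstack([a, near, far]), n_bits=4000)
ham_near = np.mean(codes[0] != codes[1])   # normalized Hamming distance
ham_far = np.mean(codes[0] != codes[2])
print(ham_near, angle(a, near) / np.pi)    # both small
print(ham_far, angle(a, far) / np.pi)      # both near 0.5
```

The normalized Hamming distance tracks θ/π for each pair, so the small-angle pair gets the short code distance, as the slide states.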