Analysis of Scalability Metrics and Speedup in Computer Systems

Explore the factors influencing the scalability of computer systems, including metrics like machine size, CPU time, and memory demand. Learn about speedup and efficiency, essential for optimizing performance and cost in parallel computing architectures.


Presentation Transcript

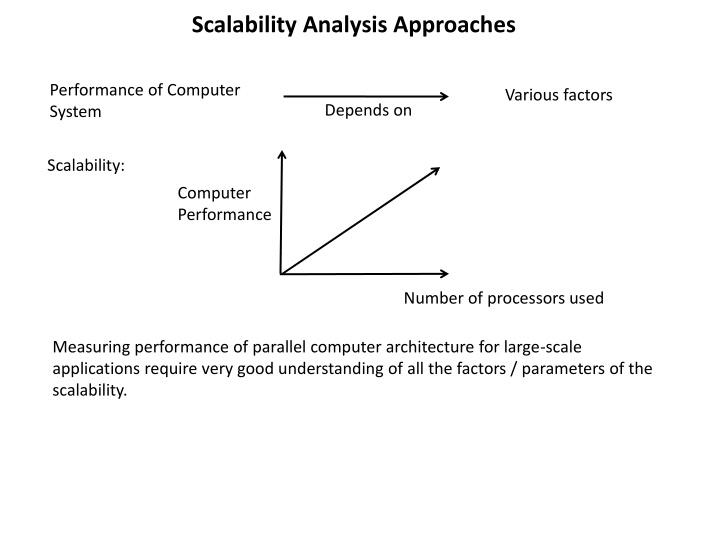

Scalability Analysis Approaches

The performance of a computer system depends on various factors. Scalability describes how computer performance changes with the number of processors used. Measuring the performance of a parallel computer architecture for large-scale applications requires a good understanding of all the factors (parameters) that affect scalability.

Scalability Metrics

The scalability of an (architecture, algorithm) combination is characterized by the following metrics: machine size, clock rate, problem size, CPU time, I/O demand, memory demand, communication overhead, computer cost, and programming cost.

- Machine size (n): the number of processors. More processors provide more computing power.
- Clock rate (f = 1/τ, in MHz, where τ is the clock cycle time): the shorter the clock cycle, the more cycles per second, so more operations can be performed per unit time.
- Problem size (s): the amount of computational workload. It is directly proportional to the sequential execution time T(s, 1) on a single-processor system.
- CPU time (T): the time consumed by the program executing on a parallel machine with n processors, i.e. the parallel execution time T(s, n).
- I/O demand (d): the amount of movement of program, data, and results between the components of a system during program execution. I/O operations may overlap CPU operations.
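As a quick numeric check on the clock-rate relation f = 1/τ, the Python sketch below converts a cycle time in nanoseconds to a clock rate in MHz and counts how many cycles fit in a fixed interval. The function names and sample cycle times are hypothetical, chosen only to illustrate the relation.

```python
def clock_rate_mhz(cycle_time_ns: float) -> float:
    """Clock rate f = 1/tau, with tau given in nanoseconds; returns MHz."""
    if cycle_time_ns <= 0:
        raise ValueError("cycle time must be positive")
    # 1 / (1 ns) = 1e9 Hz = 1000 MHz
    return 1000.0 / cycle_time_ns


def cycles_in_interval(interval_ns: float, cycle_time_ns: float) -> int:
    """Number of complete clock cycles that fit in the given interval."""
    return int(interval_ns // cycle_time_ns)


# Hypothetical cycle times: halving tau doubles f and the cycles per interval.
for tau in (2.5, 1.25):
    print(f"tau = {tau} ns -> f = {clock_rate_mhz(tau)} MHz, "
          f"{cycles_in_interval(1000.0, tau)} cycles per microsecond")
```

A shorter cycle time only yields more operations per unit time if each operation still takes the same number of cycles; that is an assumption of this sketch, not something the metric itself guarantees.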

- Memory capacity (m): the memory demanded dynamically during program execution.
- Communication overhead (h): the time spent on inter-processor communication, synchronization, remote memory access, and similar operations. The overhead h(s, n) is not part of T(s, n).
- Computer cost (c): the hardware and software resources required to execute the parallel program.
- Programming overhead (p): the cost of developing the application program, which can affect software productivity.

Note: computer cost and programming cost can be ignored in scalability analysis. The overall objective is to use resources so as to obtain the highest performance at the lowest cost.

Speedup

For a given architecture, algorithm, and problem size s, the asymptotic speedup S(s, n) is the best speedup that can be obtained by varying only the number of processors n. Let

- T(s, 1) be the sequential execution time on one processor,
- T(s, n) be the parallel execution time with n processors,
- h(s, n) be the lump sum of communication and I/O overhead.

Then the asymptotic speedup is defined as

$$S(s,n) = \frac{T(s,1)}{T(s,n) + h(s,n)}$$

Efficiency

The system efficiency is defined as

$$E(s,n) = \frac{S(s,n)}{n}$$

The best possible speedup is linear, S(s, n) = n, so the ideal efficiency is E(s, n) = n/n = 1. Ideally, a system is scalable if its efficiency is 1 for all algorithms, for any number of processors n, and for any problem size s.
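The speedup and efficiency formulas can be evaluated directly from measured times. The minimal Python sketch below assumes T(s, 1), T(s, n), and h(s, n) are already known in the same time unit; the function names and the sample timings are hypothetical.

```python
def speedup(t_seq: float, t_par: float, overhead: float = 0.0) -> float:
    """Asymptotic speedup S(s, n) = T(s, 1) / (T(s, n) + h(s, n))."""
    total = t_par + overhead
    if total <= 0:
        raise ValueError("T(s, n) + h(s, n) must be positive")
    return t_seq / total


def efficiency(t_seq: float, t_par: float, n: int, overhead: float = 0.0) -> float:
    """System efficiency E(s, n) = S(s, n) / n."""
    if n < 1:
        raise ValueError("n must be at least 1")
    return speedup(t_seq, t_par, overhead) / n


# Hypothetical run: T(s, 1) = 100 s, n = 8, T(s, 8) = 15 s, h(s, 8) = 5 s.
print(speedup(100.0, 15.0, 5.0))        # 5.0
print(efficiency(100.0, 15.0, 8, 5.0))  # 0.625

# Linear speedup: T(s, 8) = 100/8 s with no overhead gives E = 1.
print(efficiency(100.0, 12.5, 8))       # 1.0
```

The second case reproduces the ideal situation described above: when S(s, n) = n, the efficiency is exactly 1, while any overhead h(s, n) pulls it below 1.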

Scalability

The scalability Φ(s, n) of a machine for a given algorithm is the ratio of the asymptotic speedup S(s, n) on the real machine to the asymptotic speedup S_I(s, n) on an ideal machine:

$$\Phi(s,n) = \frac{S(s,n)}{S_I(s,n)}, \qquad S(s,n) = \frac{T(s,1)}{T(s,n) + h(s,n)}, \qquad S_I(s,n) = \frac{T(s,1)}{T_I(s,n)}$$

where T_I(s, n) is the parallel execution time on the ideal machine. If all communication overheads h(s, n) are ignored, the scalability becomes

$$\Phi(s,n) = \frac{S(s,n)}{S_I(s,n)} = \frac{T(s,1)/T(s,n)}{T(s,1)/T_I(s,n)} = \frac{T_I(s,n)}{T(s,n)}$$

In the ideal case S_I(s, n) = n, so scalability equals efficiency: Φ(s, n) = E(s, n) = S(s, n)/n. Since the best speedup is linear, S(s, n) = n, improving the speedup toward this bound also improves the scalability and the efficiency E, both of which equal 1 when S(s, n) = n.
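To see numerically why scalability reduces to efficiency when S_I(s, n) = n, the Python sketch below computes Φ(s, n) as the ratio of the real-machine speedup to the ideal-machine speedup. It assumes the ideal-machine time T_I(s, n) is known; setting T_I(s, n) = T(s, 1)/n models the ideal case S_I(s, n) = n. All function names and timings are hypothetical.

```python
def speedup(t_seq: float, t_par: float, overhead: float = 0.0) -> float:
    """Real-machine speedup S(s, n) = T(s, 1) / (T(s, n) + h(s, n))."""
    return t_seq / (t_par + overhead)


def ideal_speedup(t_seq: float, t_ideal: float) -> float:
    """Ideal-machine speedup S_I(s, n) = T(s, 1) / T_I(s, n)."""
    return t_seq / t_ideal


def scalability(t_seq: float, t_par: float, t_ideal: float,
                overhead: float = 0.0) -> float:
    """Phi(s, n) = S(s, n) / S_I(s, n); T(s, 1) cancels, leaving
    T_I(s, n) / (T(s, n) + h(s, n))."""
    return speedup(t_seq, t_par, overhead) / ideal_speedup(t_seq, t_ideal)


t_seq, n = 100.0, 8
t_ideal = t_seq / n          # ideal case: S_I(s, n) = n
t_par, h = 15.0, 5.0         # hypothetical measured time and overhead

phi = scalability(t_seq, t_par, t_ideal, h)
eff = speedup(t_seq, t_par, h) / n   # E(s, n) = S(s, n) / n
print(phi, eff)                      # 0.625 0.625

# Ignoring h(s, n): Phi reduces to T_I(s, n) / T(s, n).
print(scalability(t_seq, t_par, t_ideal), t_ideal / t_par)
```

Computing Φ as a ratio of two speedups, rather than from T_I/(T + h) directly, keeps the code close to the definition; the cancellation of T(s, 1) is what makes the two forms agree.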

Supporting Issues

Extended areas of attention:

- Software scalability: associating the right algorithm with the right architecture.
- Reducing communication overhead: synchronizing a large number of processors and coping with the growth of memory-access latency in very large systems.
- Enhancing programmability: choosing the memory organization, i.e. a central large address space versus distributed memory attached to individual processing units.
- Providing longevity and generality: longevity requires an architecture with a large address space; generality requires support for a wide variety of languages and binary migration of programs.