Automatic Differentiation and Backpropagation Explained

Dive into the world of automatic differentiation and backpropagation in the context of computer science, focusing on the three ways to perform AD and its relevance in machine learning. Understand the importance of forward AD and backward AD and their connection to backpropagation algorithms.

Download Presentation

Please find below an Image/Link to download the presentation.

The content on the website is provided AS IS for your information and personal use only. It may not be sold, licensed, or shared on other websites without obtaining consent from the author. If you encounter any issues during the download, it is possible that the publisher has removed the file from their server.

You are allowed to download the files provided on this website for personal or commercial use, subject to the condition that they are used lawfully. All files are the property of their respective owners.

The content on the website is provided AS IS for your information and personal use only. It may not be sold, licensed, or shared on other websites without obtaining consent from the author.

E N D

Presentation Transcript

Automatic Differentiation and Backpropagation Thomas Reps CS 703, Fall 2023

Goals of Todays Lecture Goals of Today s Lecture Last time: path problems (sum of products) Today: three ways to perform automatic differentiation (AD) Via a differentiation arithmetic Forward AD as a path problem Backward AD as a path problem Why is AD interesting? Backward AD is the engine behind back-propagation in machine learning Why is it interesting to understand it as a path problem? Gives a trivial explanation of why forward AD and backward AD compute the same quantities Forward AD is intuitive; backward AD as it is usually presented is a bit confusing 2

Automatic Differentiation TransformF F ?(?0) F(x0) Goals: method to evaluate F at x0 simple minimize round-off error Automatic Differentation ? ?? F (x0) ? (?0)

1995 vs. 1996: A Tale of Two Explanations June 1995: O Hare Airport, Chicago, IL It s actually pretty simple; it really boils down to why programmers notation is better than mathematicians notation Christian Bischof July 1996: a DARPA meeting in Lake Placid, NY Richard Zippel 4

Automatic Differentiation ?-ary version of ?(?) ?(?) = ?(?) ? (?) + ? (?) ?(?) ? ? ? = ??(?) ?=1 ? ?? ? ? (?) = ??(?) ?=1 ? ? + (?1 ?? 1?? ??+1 ??) +

Automatic Differentiation float F(float x) { int i; float ans = 1.0; } for(i = 1; i <= n; i++) { ans = ans * f[i](x); } return ans; float delta = . . .; /* small constant */ float F (float x) { return (F(x+delta) - F(x)) / delta; }

Automatic Differentiation float F (float x) { int i; float ans = 1.0; for(i = 1; i <= n; i++) { } } return ans ; ans = ans * f[i](x);

Automatic Differentiation float F (float x) { int i; float ans = 0.0; float ans = 1.0; } for(i = 1; i <= n; i++) { ans = ans * f [i](x) + ans * f[i](x); ans = ans * f[i](x); } return ans ;

Automatic Differentiation ? ?? ? ? (?) = ??(?) ?=1 ? ? ans = ans * f [i](x) + ans * f[i](x); ans = ans * f[i](x); Iter. ans ans 0 0 1 f1 (x) 2 f1*f2 + f1 *f2 3 f1*f2*f3 + f1*f2 *f3 + f1 *f2*f3 f1*f2*f3 ... 1 f1(x) f1*f2 ...

Differentiation Arithmetic class FloatD { public: float val'; float val; FloatD(float); }; FloatD operator+(FloatD a, FloatD b) { FloatD ans; ans.val'= a.val' + b.val'; ans.val = a.val + b.val; return ans; }

Differentiation Arithmetic class FloatD { public: float val'; float val; FloatD(float); }; FloatD operator*(FloatD a, FloatD b) { FloatD ans; ans.val'= a.val * b.val' + a.val' * b.val; ans.val = a.val * b.val; return ans; }

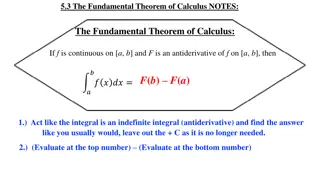

Laws for F c = 0 x = 1 (c + F) (x) = F (x) (c * F) (x) = c * F (x) (F + G) (x) = F (x) + G (x) (F * G) (x) = F (x) * G(x) + F(x) * G (x)

Differentiation Arithmetic float F(float x) { int i; float ans = 1.0; for(i = 1; i <= n; i++) { } } return ans; ans = ans * f[i](x);

Differentiation Arithmetic FloatD F(FloatD x) { int i; FloatD ans = 1.0; for(i = 1; i <= n; i++) { } } return ans; ans = ans * f[i](x);

Differentiation Arithmetic FloatD F(FloatD x) { int i; FloatD ans = 1.0; // ans.val = 0.0 // ans.val = 1.0 for(i = 1; i <= n; i++) { } } return ans; ans = ans * f[i](x);

Differentiation Arithmetic FloatD F(FloatD x) { int i; FloatD ans = 1.0; for(i = 1; i <= n; i++) { Similarly transformed version off[i] } } return ans; ans = ans * f[i](x);

Differentiation Arithmetic FloatD F(FloatD x) { int i; FloatD ans = 1.0; for(i = 1; i <= n; i++) { Overloaded operator* } } return ans; ans = ans * f[i](x);

Differentiation Arithmetic FloatD F(FloatD x) { int i; FloatD ans = 1.0; for(i = 1; i <= n; i++) { } } return ans; ans = ans * f[i](x); Overloaded operator=

Differentiation Arithmetic F v F(v) Automatic Differentiation v F(v) Differentiating version of F w F (v)*w

Chain Rule G F v G(v) F(G(v)) Automatic Differentiation Automatic Differentiation v Differentiating version of G Differentiating version of F G(v) G (v) F(G(v)) 1.0 F (G(v))*G (v)

Goals of Todays Lecture Goals of Today s Lecture Last time: path problems (sum of products) Today: three ways to perform automatic differentiation (AD) Via a differentiation arithmetic Forward AD as a path problem Backward AD as a path problem Why is AD interesting? Backward AD is the engine behind back-propagation in machine learning Why is it interesting to understand it as a path problem? Gives a trivial explanation of why forward AD and backward AD compute the same quantities Forward AD is intuitive; backward AD as it is usually presented is a bit confusing 24

Interface of the Path Interface of the Path- -Problem Framework Problem Framework Inputs: Space of weights ?, , , ,1,0 ? partially ordered under , with no infinite ascending chains ? closed under and associative; associative and commutative distributes over Identity elements 1 and 0, for and , respectively Graph, with a weight on each edge Source node ? Output: Solution to the equation system for the graph: ???? ???? ?, representing the sum-of-products over all paths from ? to each node Inputs Output 25

Declarative (global) and Equational (Local) Formulations Declarative global path formulation: ?1 ?2 ?? 1 ?? . . . Path ?: ?(?) = ??? ? ? ???= ?1 ?2 ?? 1 ?? ? ??? ?(?,?) ?(?) = ?? Equational formulation: ?(?) = ?(??) ?[??,?] ?? ? ? ??,? ????? s exit s exit m1 ?(?) = Id n mk 26

Convert MS/ST to SS/MT: Reverse Edges ?1 ? ?1 SS/MT: ?? ?? ?1 x2 x i + x2 x i + ?? y2 yj ?1 ?1 ?1 ? ? ?? ?? ?? 1 1 MS/ST SS/MT 27

Forward Solving vs. Backward Solving Forward Solving vs. Backward Solving Because is associative, it doesn t matter whether you solve in the forward or backward direction Backward solving is interesting for, e.g., demand dataflow analysis for property ?: What is the value of ?[?,?]? Don t care about any ?[?,?] for ? ?, so only find the ?[?,?] values that contribute to the value of ?[?,?] Same answer, although the value is associated with different nodes of the control-flow graph Forward: obtain ?[?,?] at node ? Backward: obtain ?[?,?] at node ? 28

Automatic Differentiation as a Path Problem Automatic Differentiation as a Path Problem Will use as a running example ?,? = = ?2? + 5??2+ 4?3 Thus ? ?? = 2?? + 5?2 ? ?? =?2+ 10?? + 12?2 Evaluated at the point ?0= (3,5) 3,5 = 920 ? + 4? ? + ? ? ? ??(3,5) = 155 ? ??(3,5) = 459 Key arithmetic properties is associative + is associative and commutative distributes over + 29

Expression DAG for DAG for ? + ?? Expression ? + ? ? (? + 4?) * ( ? + ? ?) 920 ( ? + ? ?) ? + 4? 40 23 + + ? + ? 8 4? 20 + + 4 ? ? 4 ? ? Symbolic Evaluated at ?0= (3,5) Used for evaluation

Computation Graph for Computation Graph for ? + ?? ? + ? ? (? + 4?) * ( ? + ? ?) 920 ( ? + ? ?) ? + 4? 40 23 + + 1 5 ? 1 ? + ? 8 4? 20 1 1 + + 1 1 4 4 ? 1 1 5 4 ? ? 4 ? ? Symbolic Evaluated at ?0= (3,5) Preparation for computing derivatives

Computation Graph for Computation Graph for ? + ?? Once the graph is labeled with the red items, these operators are irrelevant to the path problem to be solved ? + ? ? ? + ? ? 40 23 ? + 4? + + 5 ? 1 1 1 1 ? + ? 8 + + 1 1 4 4 1 1 ? 5 4 ? ? 4 ? ? Symbolic Evaluated at ?0= (3,5) Preparation for computing derivatives

Computation Graph for Computation Graph for ? + ?? Once the graph is labeled with the red items, these operators are irrelevant to the path problem to be solved ? + ? ? ? + ? ? 40 23 ? + 4? 5 ? 1 1 1 1 ? + ? 8 1 1 4 4 1 1 ? 5 4 ? To compute derivatives, we solve a path problem on this graph. (However, I will continue to show the irrelevant + and opererators.) ? 4 ? ? Symbolic Evaluated at ?0= (3,5) Preparation for computing derivatives

Edge Labeling for Sum of Products + ?? ?? 1 1 ?? ?? ?? ?? ? ? ? ?

? ?? = 1 40 + 1 5 23 = 155 (3,5) 40 23 Compute the single-source path-problem value for the root node, starting from leaf ?, with + + 5 1 1 8 + 1 4 1 5 4 ? ? ? ?? = 4 1 40 + (1 5 + 8) 23 = 459 (3,5) 40 23 + 5 1 1 8 + 1 4 1 5 4 ? ?

? ??= 1 ? + ? ? + 1 ? ? + 4? = 2?? + 5?2 ? + ? ? ? + 4? + ? 1 1 ? + ? + 1 4 1 ? 4 ? ? ? ??= 4 1 ? + ? ? + (1 ? + ? + ?) (? + 4?) = ?2+ 10?? + 12?2 ? + ? ? ? + 4? + ? 1 1 ? + ? + 1 4 1 ? 4 ? ?

Inductive Argument for Forward AD products up to here computes ? + ? Then the sum of ?? ?? 1 +?? + ?? 1 1 1 ?? ?? ?? ?? ? Assume that the sum of products up to here computes ? Assume that the sum of products up to here computes ?

Inductive Argument for Forward AD Then the sum of products up to here computes ? ? + ? ? ?? ?? ? +?? ?? ?? 1 +?? + ?? ? ?? 1 ? ? 1 1 ?? ?? ?? ?? ?? ?? ?? ?? ? ? Assume that the sum of products up to here computes ? Assume that the sum of products up to here computes ?

Forward Mode vs. Reverse Mode ? 1 ??3 ??3 . . . ? ? ??3 ? 2 ? ? ??3 . . . ? ? ??? ? 1 ??? ? 2 ??? ? ? ??? . . . . . . 1 2 . . . ? . . . ? ?? ?1 ?2 ?3 ?4 ?5 ?? . . . . . .

Forward Mode vs. Reverse Mode Because is associative, both forward and backward AD compute the same set of derivatives ? ? ???1 ? ?,1 ? ? 1 2 . . . ? . . . ? The derivatives themselves are associated with different nodes via the two methods: Forward mode: with the output nodes Reverse mode: with the input nodes ?? ? ? ??? ?1 ? ? ??1 ?2 ? ? ??2 ?3 ? ? ??3 ?4 ? ? ??4 ?5 ? ? ??5 ?? ? ? ??? . . . . . . . . . . . . ? 2 ??1 ? 2 ??2 ? 2 ??3 ? 2 ??4 ? 2 ??5 ? 2 ??? ? 2 ??? . . . . . .

? ?? = 1 40 + 1 5 23 = 155 (3,5) 40 23 40 23 + + 5 1 1 8 5 1 1 + 8 1 + 4 1 1 5 4 1 5 4 ? ? ? ?? = 40 1 + 23 5 1 = 155 4 ? ? ? ?? (3,5) = 4 1 40 + (1 5 + 8) 23 = 459 (3,5) 40 23 40 23 + + 5 1 1 8 5 1 1 + 8 + 1 4 1 1 4 5 1 5 4 ? ? ? ?? 4 ? ? = 40 1 4 + 23 5 1 + 23 8 = 459 (3,5)

Other Operators ??? ?1, ,??, ,?? ??? ?? ?1 ?? ?? ??,?.1 2? ?2 ? ? 1 ? ?(1 ?) ? ? 2? ? ? ? ?

Instantiation of the Framework for the AD Problem Instantiation of the Framework for the AD Problem 40 23 + ?, , , ,1,0 = { }, , ,+,1,0 5 1 1 8 + 1 Propagate sum-of-products over a DAG ? Input: Equation system E ? for ?, distinguished node ? Output: ???, where ??? = ?(???) 4 1 5 ??? ( , , , , ) ???? 1 for (each node ? reachable from ? in topological order) { ???? ?,? ?????? ???? ???? ? ?,? } 4 ? ? ? ?? = 40 1 + 23 5 1 = 155 (3,5) 43

Neural Network with One Hidden Layer ?1 ?2 ?3 Output layer Hidden layer Input layer . . . ?1 ?2 ??

Circuit for Training Neural Network ???? ? ??1,?2.1 ??1,?2.1 ??1,?2.1 Training layer 2 2 2 2?1 ?2 2?1 ?2 2?1 ?2 ?1 ?2 ?3 ?1 ?2 ?3 Output layer Hidden layer Input layer . . . ?1 ?2 ??

Node in a Neural Network . . . ? . . . ?1 ?2 ?? . . . ?1 ?2 ??

Neural Network ???? ?3 Desired output for ? Training Layer ?2 ?1 Output for ? ?1?2?3 ?? To know the relative amounts to adjust weights, we want the set of partial derivatives Large number of parameters Neural Network ????? ???1 ? ? ?2 ?1 ?1?2?3 ??????

Cost of Forward Mode vs. Reverse Mode ? 1 ??3 ??3 . . . ? ? ??3 ? 2 ? ? ??3 . . . ? ? ??? ? 1 ??? ? 2 ??? ? ? ??? . . . . . . 1 2 . . . ? . . . ? ?? ?1 ?2 ?3 ?4 ?5 ?? . . . . . .

Cost of Forward Mode vs. Reverse Mode 1 2 . . . ? . . . ? ?? ? ? ??? ?1 ? ? ??1 ?2 ? ? ??2 ?3 ? ? ??3 ?4 ? ? ??4 ?5 ? ? ??5 ?? ? ? ??? . . . . . . . . . . . . ? 2 ??1 ? 2 ??2 ? 2 ??3 ? 2 ??4 ? 2 ??5 ? 2 ??? ? 2 ??? . . . . . .

Forward Mode vs. Reverse Mode for a Neural Network ????? ??? ????? ??3 ???? ?? ?1 ?2 ?3 ?4 ?5 ?? . . . . . . ????? ??1 ????? ??2 ????? ??3 ????? ??4 ????? ??5 ????? ??? ????? ??? . . . . . .

???? Backpropagation ? 1 1 1 ??1,?2.1 ??1,?2.1 ??1,?2.1 2 2 2 2?1 ?2 2?1 ?2 2?1 ?2 ?2 ?2 ?1 ?1 ?1 ?2 ?3 ?3 ?3 ?2 ?1 ?3 ?1(1 ?1) ?3(1 ?3) ?2(1 ?2) ? 1 ? 1 ? 1 1 1 1 1 1 1 1 1 2 1 2 3 3 2 3 ?3 ?6 ?9 ?1 ?4 ?7 ?2 ?5 ?8 ?3 ?7 ?4 ?5 ?6 ?9 ?1 ?2 ?8 3 2 1 3(1 3) 2(1 2) 1(1 1) ? ? ? 1 1 1 1 1 1 ?1 ?2 ?1 ?1 ?2 ?2 ?10 ?12 ?14 ?11 ?13 ?15 ?11 ?12 ?13 ?15 ?14 ?10 ?1 ?2

???? Backpropagation ? 1 1 1 ??1,?2.1 ??1,?2.1 ??1,?2.1 2 2 2 2?1 ?2 2?1 ?2 2?1 ?2 ?2 ?2 ?1 ?1 ?1 ?2 ?3 ?3 ?3 ?2 ?1 ?3 ?1(1 ?1) ?3(1 ?3) ?2(1 ?2) ? 1 ? 1 ? 1 1 1 1 1 1 1 1 1 2 1 2 3 3 2 3 ?3 ?6 ?9 ?1 ?4 ?7 ?2 ?5 ?8 ?3 ?7 ?4 ?5 ?6 ?9 ?1 ?2 ?8 3 2 1 ????? ?4 = 1 ?2 ?2 ?21 ?2 1 1 3(1 3) 2(1 2) 1(1 1) ? ? ? 1 1 1 1 1 1 ?1 ?2 ?1 ?1 ?2 ?2 ?10 ?12 ?14 ?11 ?13 ?15 ?11 ?12 ?13 ?15 ?14 ?10 ?1 ?2

???? Backpropagation ? 1 1 1 ??1,?2.1 ??1,?2.1 ??1,?2.1 2 2 2 2?1 ?2 2?1 ?2 2?1 ?2 ?2 ?2 ?1 ?1 ?1 ?2 ?3 ?3 ?3 ?2 ?1 ?3 ?1(1 ?1) ?3(1 ?3) ?2(1 ?2) ? 1 ? 1 ? 1 1 1 1 1 1 1 1 1 2 1 2 3 3 2 3 ?3 ?6 ?9 ?1 ?4 ?7 ?2 ?5 ?8 ?3 ?7 ?4 ?5 ?6 ?9 ?1 ?2 ?8 3 2 1 3(1 3) 2(1 2) 1(1 1) ? ? ? 1 1 1 1 1 1 ?1 ?2 ?1 ?1 ?2 ?2 ?10 ?12 ?14 ?11 ?13 ?15 ?11 ?12 ?13 ?15 ?14 ?10 ?1 ?2