Automatic Topic Title Assignment Using Word Embedding

Discover how the TAWE method combines LDA with word embedding to automatically assign titles to topics inferred from text data, illustrated on a data set of tweets about climate change.

Presentation Transcript

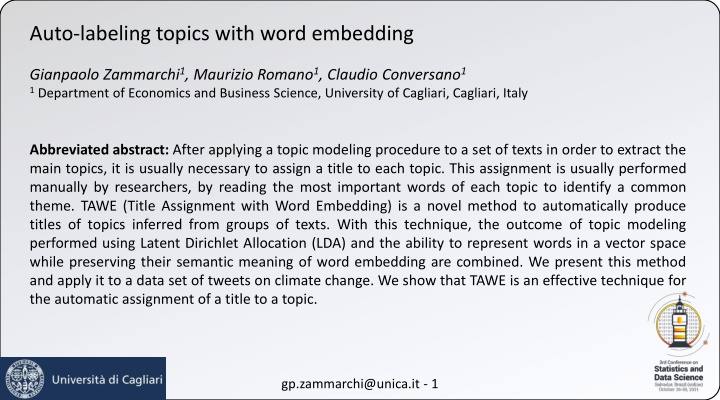

Auto-labeling topics with word embedding

Gianpaolo Zammarchi¹, Maurizio Romano¹, Claudio Conversano¹

¹Department of Economics and Business Science, University of Cagliari, Cagliari, Italy

Abbreviated abstract: After applying a topic modeling procedure to a set of texts in order to extract the main topics, it is usually necessary to assign a title to each topic. This assignment is usually performed manually by researchers, who read the most important words of each topic to identify a common theme. TAWE (Title Assignment with Word Embedding) is a novel method to automatically produce titles for topics inferred from groups of texts. The technique combines the outcome of topic modeling performed using Latent Dirichlet Allocation (LDA) with the ability of word embedding to represent words in a vector space while preserving their semantic meaning. We present this method and apply it to a data set of tweets on climate change. We show that TAWE is an effective technique for the automatic assignment of a title to a topic.

gp.zammarchi@unica.it

Problem, Data, Previous Works

Challenge: The amount of textual data produced every day by social media and other sources is enormous. Topic modeling helps to identify the topics in a collection of documents, giving a more precise idea of the documents' content. LDA is one of the most important techniques used for topic modeling. This method assumes that each document is generated as a random mixture over latent topics and that each topic is characterized by a distribution over words. Once a topic has been inferred, it is useful to assign it a title. This operation is often performed manually by the researcher.

Solution: To tackle the problem of the manual assignment of titles to topics, which can be time-consuming and biased, we propose a two-step approach:

1) Create topics using LDA
2) Assign titles using word embedding
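The generative assumption behind LDA described above can be written compactly as follows. This is the standard textbook formulation, not notation taken from the slides: the symbols $\theta_d$, $\phi_k$, $z_{d,n}$, $w_{d,n}$ and the hyperparameters $\alpha$, $\beta$ are introduced here for illustration.

```latex
\begin{align*}
\theta_d &\sim \mathrm{Dirichlet}(\alpha)
  && \text{topic distribution of document } d \\
\phi_k &\sim \mathrm{Dirichlet}(\beta)
  && \text{word distribution of topic } k \\
z_{d,n} \mid \theta_d &\sim \mathrm{Categorical}(\theta_d)
  && \text{topic assigned to the } n\text{-th word of document } d \\
w_{d,n} \mid z_{d,n}, \phi &\sim \mathrm{Categorical}(\phi_{z_{d,n}})
  && \text{the observed word}
\end{align*}
```

Fitting LDA amounts to inferring $\theta_d$ and $\phi_k$ from the observed words; the highest-probability entries of each $\phi_k$ are the "most important words" that a researcher would otherwise read to name the topic by hand.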

Methods

[Figure: LDA schematic linking documents (d), topics (k) and words; word-embedding illustration with the words king, man, queen, woman]

Word embedding preserves the semantic meaning of words. Each topic will have a different word distribution and each document a topic distribution.

Using LDA we are able to detect which are the main topics discussed in a collection of documents.

We used word embedding to map a selection of the most relevant words for each topic in a vector space, in order to be able to find the most representative word, which is chosen as the topic title.

We applied this method to a data set of tweets on climate change.
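As a minimal sketch of the selection step, the snippet below picks the highest-weighted words of one topic from a word-to-weight mapping. The function name `top_words`, the toy weights, and the cutoff `n` are all hypothetical; the slides do not say how many words were selected or which LDA implementation produced the weights.

```python
def top_words(topic_word_weights, n=5):
    """Return the n highest-weighted (word, weight) pairs of one topic.

    topic_word_weights: dict mapping each word to its weight in the topic,
    as an LDA implementation would typically expose it.
    """
    ranked = sorted(
        topic_word_weights.items(),
        key=lambda item: item[1],
        reverse=True,
    )
    return ranked[:n]


# Toy weights for a single topic (made-up numbers, for illustration only).
toy_topic = {
    "emissions": 0.12,
    "energy": 0.09,
    "reduce": 0.05,
    "countries": 0.04,
    "today": 0.01,
}

selection = top_words(toy_topic, n=3)
print(selection)
```

Keeping the weights alongside the words matters because the later title-assignment step can use them, for example to let more important words count more.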

Results and Conclusions

| Topic | Top words | Title |
|---|---|---|
| Topic 1 | report, president, administration, disasters, temperature | disasters |
| Topic 2 | president, trump, government, fight, long | government |
| Topic 3 | plan, part, president, fight, national | senator |
| Topic 4 | emissions, energy, greenhouse gas, reduce, countries | emissions |
| Topic 5 | public, earth, state, action, west | state |

Take-home message: Starting from an LDA model, we extracted the most important words for each topic and their weights. Using word embedding we transformed words into vectors and found the medoid. The word closest to the medoid was selected as the most representative word and proposed to be an automatically assigned title of the topic.
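The medoid step in the take-home message can be sketched as below. This is one possible reading, not the authors' implementation: the slides do not state the distance measure or how the LDA weights enter, so cosine distance and a weighted sum of distances are assumptions, and `medoid_title` and the two-dimensional toy vectors are hypothetical. In practice the vectors would come from a trained word-embedding model. Because a medoid is by definition one of the points in the set, in this sketch the word closest to the medoid is the medoid itself.

```python
import math


def cosine_distance(u, v):
    """Cosine distance between two non-zero vectors: 1 - cosine similarity."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    return 1.0 - dot / (norm_u * norm_v)


def medoid_title(vectors, weights):
    """Return the word minimizing the weighted sum of distances to all words.

    vectors: dict word -> embedding vector (assumed to come from a model)
    weights: dict word -> topic weight (assumed to come from LDA)
    """
    best_word, best_cost = None, float("inf")
    for candidate, candidate_vec in vectors.items():
        cost = sum(
            weights[other] * cosine_distance(candidate_vec, other_vec)
            for other, other_vec in vectors.items()
        )
        if cost < best_cost:
            best_word, best_cost = candidate, cost
    return best_word


# Toy 2-D embeddings and weights (made-up values, for illustration only).
toy_vectors = {
    "emissions": (1.0, 0.0),
    "energy": (1.0, 1.0),
    "countries": (0.0, 1.0),
}
toy_weights = {"emissions": 0.5, "energy": 0.3, "countries": 0.2}

title = medoid_title(toy_vectors, toy_weights)
print(title)
```

With these toy values the vector for "energy" lies between the other two, so it has the smallest weighted distance to the rest and is returned as the title. A variant that searches the whole embedding vocabulary for the word nearest to the medoid could return a title that is not among the topic's top words.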