Belief Propagation from a Constraint Perspective

Explore the power of belief propagation through a constraint propagation lens, encompassing concepts like distributed belief propagation, Bayesian networks, and arc consistency. Delve into the applications, benefits, and challenges of belief propagation in various problem domains.

Uploaded on | 0 Views

Download Presentation

Please find below an Image/Link to download the presentation.

The content on the website is provided AS IS for your information and personal use only. It may not be sold, licensed, or shared on other websites without obtaining consent from the author. If you encounter any issues during the download, it is possible that the publisher has removed the file from their server.

You are allowed to download the files provided on this website for personal or commercial use, subject to the condition that they are used lawfully. All files are the property of their respective owners.

The content on the website is provided AS IS for your information and personal use only. It may not be sold, licensed, or shared on other websites without obtaining consent from the author.

E N D

Presentation Transcript

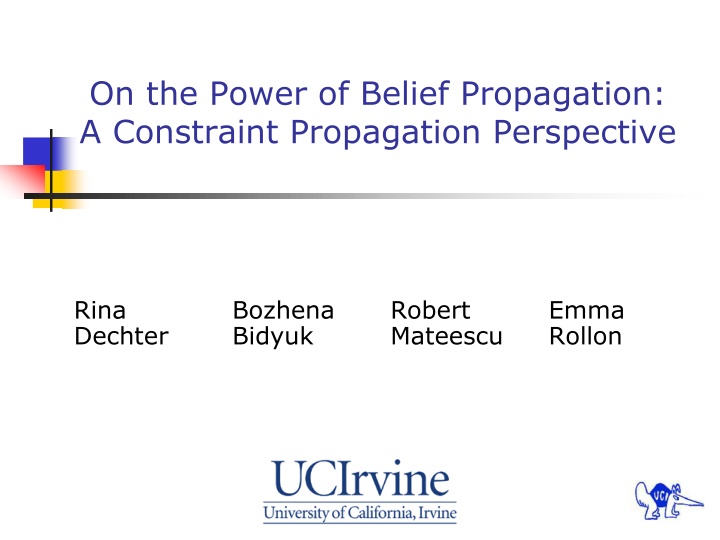

On the Power of Belief Propagation: A Constraint Propagation Perspective Rina Dechter Bozhena Bidyuk Robert Mateescu Emma Rollon search@UCI

Distributed Belief Propagation 5 5 5 4 3 2 1 5 5 1 2 3 4 How many people?

Distributed Belief Propagation Causal support Diagnostic support

BP on Loopy Graphs U U U 3 1 2 ( ) 3x ( ) 2x 1 U 1 U ( ) 1u 1 X ( ) 2u 1 X X X 1 2 Pearl (1988): use of BP to loopy networks McEliece, et. Al 1988: IBP s success on coding networks Lots of research into convergence and accuracy (?), but: Why IBP works well for coding networks Can we characterize other good problem classes Can we have any guarantees on accuracy (even if converges)

Arc-consistency A < B A B 1 2 1 1 2 3 2 2 < 3 3 A B Sound B = C A < D Incomplete 1 1 1 2 = < 2 2 2 3 Always converges (polynomial) 3 3 D C D C < 1 1 2 2 D < C 3 3 1 2 2 3

Flattening the Bayesian Network A P(A) 1 2 3 A 1 2 3 .2 .5 .3 0 A 1 1 2 2 3 3 B 2 3 1 3 1 2 P(B|A) .3 .7 .4 .6 .1 .9 0 A B 1 1 2 2 3 3 2 3 1 3 1 2 A C P(C|A) 1 2 3 2 1 1 0 A C 1 3 1 1 2 2 A A A A A A 2 2 3 3 AB AC AB AC B B A A B B C C A B D P(D|A,B) 1 2 3 1 3 2 2 1 3 2 3 1 3 1 2 3 2 1 A B D 1 2 1 3 2 1 2 3 3 1 3 2 4 4 5 5 3 2 3 1 2 1 1 1 1 1 1 1 0 B C F P(F|B,C) 1 2 3 3 2 1 B C F 1 2 3 2 ABD BCF ABD BCF 3 1 1 1 0 6 6 F F D D DFG DFG D F G P(G|D,F) 1 2 3 2 1 3 D F G 1 2 2 1 3 3 1 1 0 Flat constraint network Belief network

Belief Zero Propagation = Arc-Consistency elim(i, ne = = j i k j i k ( ( )) ( ( )) h R h h p h )} ( ) i l i k ne i i i ij j) { ( k i j 1 2 2 2 2 2 2 1h 4h 5h 1h 4 h B B 1 2 3 5h B B 1 3 A A A A A B B P(B|A) 1 2 1 3 2 1 2 3 3 1 3 2 P(B|A) >0 >0 >0 >0 >0 >0 >0 >0 >0 >0 >0 >0 0 0 A A h h1 12 2(A) 1 2 3 (A) >0 >0 >0 >0 >0 >0 0 0 B B h h1 12 2(B) 1 2 3 (B) >0 >0 >0 >0 >0 >0 0 0 B B h h1 12 2(B) 1 3 (B) >0 >0 >0 >0 0 0 A A B B 1 1 2 2 3 3 A A 1 2 3 2 3 2 3 1 3 1 2 AB AC A B C B 5 4 ABD BCF 6 F D DFG Updated relation: Updated belief: = = = = 2 2 4 2 5 2 2 4 2 5 ( , ) ( | ) ( , ) ( , ) Bel A B P B A h h h R A B R A B h h h 1 1 Bel Bel (A,B) (A,B) >0 >0 >0 >0 >0 >0 >0 >0 0 0 A A B B 1 2 2 3 A A B B 1 2 2 3 3 1 3 1 = = 3 1 3 1

Flat Network - Example A P(A) 1 R 1 .2 2 .5 R 3 .3 2 0 A B P(B|A) R 1 1 2 .3 3 1 3 .7 A C P(C|A) A 2 1 .4 1 2 1 A 3 A 2 2 3 .6 3 2 1 3 1 .1 AB AC 0 3 2 .9 0 B A C B R R 5 5 4 4 B C F P(F|B,C) A B D P(D|A,B) ABD BCF 1 2 3 1 1 2 3 1 6 1 3 2 1 F 3 2 1 1 D DFG 2 1 3 1 0 2 3 1 1 R 3 1 2 1 6 3 2 1 1 D F G P(G|D,F) 0 1 2 3 1 2 1 3 1 0

IBP Example Iteration 1 A P(A) 1 R 1 >0 3 >0 R 0 2 A B P(B|A) R 1 1 3 1 3 2 1 >0 A C P(C|A) A 2 3 >0 1 2 1 A 3 A 2 3 1 1 3 2 1 0 AB AC 0 B A C B R R 5 5 4 4 B C F P(F|B,C) A B D P(D|A,B) ABD BCF 1 3 2 1 1 2 3 1 6 2 3 1 1 F 3 2 1 1 D DFG 3 1 2 1 0 3 2 1 1 R 0 6 D F G P(G|D,F) 2 1 3 1 0

IBP Example Iteration 2 A P(A) 1 R 1 >0 3 >0 R 0 2 A B P(B|A) R 1 1 3 1 3 3 1 1 A C P(C|A) A 0 1 2 1 A 3 A 2 3 2 1 AB AC 0 B A C B R R 5 5 4 4 B C F P(F|B,C) A B D P(D|A,B) ABD BCF 1 3 2 1 3 2 1 1 6 3 1 2 1 F 0 D DFG 0 R 6 D F G P(G|D,F) 2 1 3 1 0

IBP Example Iteration 3 A P(A) 1 R 1 >0 3 >0 R 0 2 A B P(B|A) R 1 1 3 1 0 3 A C P(C|A) A 1 2 1 A 3 A 2 3 2 1 AB AC 0 B A C B R R 5 5 4 4 B C F P(F|B,C) A B D P(D|A,B) ABD BCF 1 3 2 1 3 2 1 1 6 3 1 2 1 F 0 D DFG 0 R 6 D F G P(G|D,F) 2 1 3 1 0

IBP Example Iteration 4 A P(A) 1 R 1 1 0 R 2 A B P(B|A) R 1 1 3 1 3 0 A C P(C|A) A 1 2 1 A 3 A 2 3 2 1 AB AC 0 B A C B R R 5 5 4 4 B C F P(F|B,C) A B D P(D|A,B) ABD BCF 1 3 2 1 3 2 1 1 6 0 F 0 D DFG R 6 D F G P(G|D,F) 2 1 3 1 0

IBP Example Iteration 5 A P(A) A B C D 1 3 F G 1 Belief 1 0 1 R 1 1 2 2 3 0 R 2 A B P(B|A) R 1 1 3 1 3 0 A C P(C|A) A 1 2 1 A 3 A 2 0 AB AC B A C B R R 5 5 4 4 B C F P(F|B,C) A B D P(D|A,B) ABD BCF 1 3 2 1 3 2 1 1 6 0 F 0 D DFG R 6 D F G P(G|D,F) 2 1 3 1 0

IBP Inference Power for Zero Beliefs Theorem: Iterative BP performs arc-consistency on the flat network. Soundness: Inference of zero beliefs by IBP converges All the inferred zero beliefs are correct Incompleteness: Iterative BP is as weak and as strong as arc-consistency Continuity Hypothesis: IBP is sound for zero - IBP is accurate for extreme beliefs? Tested empirically

Experimental Results We investigated empirically if the results for zero beliefs extend to -small beliefs ( > 0) Network types: Coding Linkage analysis* Grids* Two-layer noisy-OR* CPCS54, CPCS360 Algorithms: IBP IJGP Measures: Exact/IJGP histogram Recall absolute error Precision absolute error Have determinism? YES NO * Instances from the UAI08 competition

Networks with Determinism: Coding N=200, 1000 instances, w*=15

Nets w/o Determinism: bn2o w* = 24 w* = 27 w* = 26

Nets with Determinism: Linkage Exact Histogram IJGP Histogram Recall Abs. Error Precision Abs. Error pedigree1, w* = 21 Absolute Error Percentage pedigree37, w* = 30 Absolute Error Percentage i-bound = 3 i-bound = 7

The Cutset Phenomena & irrelevant nodes Observed variables break the flow of inference IBP is exact when evidence variables form a cycle-cutset Unobserved variables without observed descendents send zero- information to the parent variables it is irrelevant In a network without evidence, IBP converges in one iteration top-down X Y X

Nodes with extreme support Observed variables with xtreme priors or xtreme support can nearly-cut information flow: 0.0002 Root B1 C1 B2 C2 Sink A 0.00015 B1 C1 0.0001 EB EC B2 C2 0.00005 D EC EB 0 0.00001 0.01 0.2 0.5 0.8 0.99 0.99999 ED prior Average Error vs. Priors

Conclusion: For Networks with Determinism IBP converges & sound for zero beliefs IBP s power to infer zeros is as weak or as strong as arc-consistency However: inference of extreme beliefs can be wrong. Cutset property (Bidyuk and Dechter, 2000): Evidence and inferred singleton act like cutset If zeros are cycle-cutset, all beliefs are exact Extensions to epsilon-cutset were supported empirically.