Belief Update in Bayesian Networks

Explore the concept of belief update in Bayesian networks, including exact inference, Bayesian network definition, independence, trees, and more. Learn about updating beliefs in trees and interpreting Bayesian networks.

Download Presentation

Please find below an Image/Link to download the presentation.

The content on the website is provided AS IS for your information and personal use only. It may not be sold, licensed, or shared on other websites without obtaining consent from the author. If you encounter any issues during the download, it is possible that the publisher has removed the file from their server.

You are allowed to download the files provided on this website for personal or commercial use, subject to the condition that they are used lawfully. All files are the property of their respective owners.

The content on the website is provided AS IS for your information and personal use only. It may not be sold, licensed, or shared on other websites without obtaining consent from the author.

E N D

Presentation Transcript

Exact Inference Belief Update .

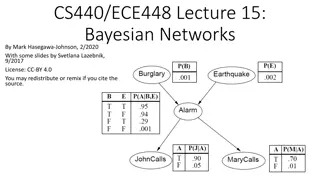

Bayesian Networks (Directed Acyclic Graphical Models) Definition: A Bayesian Network is a pair (P,D) where P is a Probability distribution over variables X1, , Xn and D is a Directed Acyclic Graph (DAG) over vertices X1, , Xn with the relationship: ? P(x1, , xn ) = ?=1 P(xi | pai) for all values xi of Xi and where paiis the set of parents of vertex xi in D. (When Xi is a source vertex then paiis the empty set of parents and P(xi | pai) reduces to P(xi).) DAG = directed graph without any directed cycles. 2

Independence in Bayesian networks n = i = ( , , ) ( | , ) p x x p x x x 1 1 1 n i i 1 = n = i = pa ( , , ) ( | ) (*) p x x p x 1 n i i 1 Implies for every topological order d ? Spoiler: Yes ? ?(? ?(1, ,? ?(? = ?(??(? |???(? ?=1 = ? ?(? ?(1, ,? ?(? = ?(??(? |?d(1 , ??(? 1 ?=1

Belief Update in Trees (Markov and Bayesian networks alike) P(x) = u,v,w,y,zP(u, ,z,x) U W V X Z Y P(x) = u,v,w,y,z P(u) P(x|u) P(v|u) P(w|u) P(y|x) P(z|x) P(x) = u,v,w,y,z g1(x,u) g2(v,u) g3(w,u) g4(y,x) g5(z,x) P(x) = u g1(x,u) v g2(v,u) w g3(w,u) y g4(y,x) z g5(z,x) 4

Belief Update in Trees P(x|e) = P(x,e) / x P(x,e) ; P(x,e) = u,v,w,y,z P(u, ,z,x,e) U W V X E1 Z Y E2 P(x,e) = u,v,w,y,z g1(x,u) g2(v,u) g3(w,u,e1) g4(y,x) g5(z,x,e2) P(x,e) = u g1(x,u) v g2(v,u) w g3(w,u,e1,) y g4(y,x) z g5(z,x,e2) 5

Belief Update in Trees ( Interpretation for Bayesian Networks) U W V X Z Y 6

Update all variables given the evidence Desired Query (update all beliefs): P(x|e), ,P(v|e) ? U W V X E1 Z Y E2 Solution: Keep all partial sums on the links in both directions Messages sent inwards first. 7

Now add a link from W to Z. What changes? U W V X E1 Z Y E2 P(x,e) = u g1(x,u) v g2(v,u) w g3(w,u,e1,) y g4(y,x) z g5(z,x,w,e2) 8

Variable Elimination Order T L We wish to compute P(l,do) for every value l of L given evidence D = do. A X D Good summation order (variable A is summed last): P(l, do) = a,l,t,xP(a,t,x,l,do) = a p(a) p(do|a) p(l|a) t p(t|a) x p(x|a) Bad summation order (variable A is summed first): P(l, do) = a,l,t,xP(a,t,x,l,do) = x t a p(a) p(l|a) p(do|a) p(t|a) p(x|a) Yields a high dimensional temporary table How to choose a reasonable order ? (generate Small Intermediate Tables) 9

Belief Update in General BNs Input: A Bayesian network, a set of nodes E with evidence E=e, an ordering x1, ,xm of all variables not in E. Output: P(x1,e) for every value x1 of X1. 2 x x m e = (1 x , ) ( | ) P P x pa The query: i i x i 1 m Set the evidence in all local probability tables that are defined over some variables from E. Iteratively (in some optimal or good order) Move all irrelevant terms outside of innermost sum Perform innermost sum, getting a new term Insert the new term into the product 10

Variable elimination in Bayes networks S V p(s) p(v) g1(t,v) g5(l,s) S V p(t|v) T p(l|s) L T g6(b,s) g2(a,t,l) L p(b|s) p(a|t,l) B A g3(d,a,b) B A g4(x,a) p(d|a,b) p(x|a) X D X D S S h1(t) g5(l,s) g5(l,s) T L T V,X g6(b,s) g6(b,s) L g2(a,t,l) h2(a,l) B A g3(d,a,b) B A h4(a) g3(d,a,b) 11

Update all variables in a general network Desired Query (update all N beliefs): P(A|D=d0), ,P(X|D=d0) ? Can we still compute them all N at the cost of computing one term twice? S V g1(t,v) g6(l,b,s)=p(b|s)p(l|s) g5(a,l,b)=1 L T g2(a,t,l) B A g3(d,a,b) g4(x,a) X D P(a, ,x) = K g1(t,v) g2(a,t,l) g3(d,a,b) g4(x,a)g5(a,l,b)g6(l,b,s) 12

Keep Partial Results in a Tree of Cliques S V g1(t,v) g6(l,b,s) g5(a,l,b) L T g2(a,t,l) B A g3(d,a,b) g4(x,a) X D T,V L,B,S D,A,B A,L,B A,T,L X,A 13

Keep Partial Results in a Tree of Cliques P(a, ,x) = g1(t,v) g2(a,t,l) g3(d,a,b) g4(x,a) g5(a,l,b) g6(l,b,s) d a T,V L,B,S s D,A,B A,L,B l b,d a,l t,l T A v A,T,L t,l x A X,A Solution: Keep all partial sums on the links in both directions Messages sent inwards first. 14

A Graph-Theoretic View Eliminating vertex v from an undirected graph G theprocess of making NG(v) a clique and removing v and its incident edges from G. NG(v) is the set of vertices that are adjacent to v in G. Elimination sequence of G an order of all vertices. 16

Treewidth The width w of an elimination sequence s is the size of the largest clique (minus 1) being formed in the elimination process, namely, ws = maximumv|NG(v)|. The treewidth tw of a graph G is the minimum width among all elimination sequences, namely, tw=minimums ws Examples. All trees have tw = 1, All graphs with isolated cycles have tw = 2, cliques of size n have tw=n-1. 17

Another Example x1 x2 x3 Order 1: Corners first Largest clique size 4 Width=3 x4 x5 x6 x7 x8 x9 Order 2: x2, x5, Largest clique size 5 Width=4 x1 x2 x3 x4 x5 x6 Exercise: Build clique trees. x7 x8 x9 18

Results about treewidth Sequence of Theorems: There are several algorithms that produce treewidth tw with a small constant factor error at time complexity of Poly(n)ctw, where c is a constant and n is the number of vertices. Main idea. Find a vertex minimum (A,B) cutset S (error by some factor). Make S a clique. Solve recursively G[A,S] and G[B,S]. Observation. The above theorem is practical if the constants and c are low enough because computing posterior belief also requires complexity of at most Poly(n)ktw where k is the size of the largest variable domain. 19

Observations Theorem. Finding an elimination sequence that produces the treewidth or more precisely just finding if tw = c is NP-hard. Simple Greedy heuristic. At each step eliminate a vertex v that produces the smallest clique, namely, minimizes |NG(v)|. 20

Summary so far Every elimination order creates a tree of cliques that helps store partial sums, if so desired. Update belief is time exponential in the largest clique in the undirected graph with the add-in edges. Update belief for all variables requires only twice the number of computations needed for one variable update. 21

Elimination Sequence with Weights There is a need for cost functions for optimizing time complexity that take the number of states into account. Elimination sequence of a weighted graph G an order of the vertices of G, writtenasX = (X (1) , ,X (n) ),where is a permutation on {1, ,n}. The cost of eliminating vertex v from a graph Gi is the product of weights of the vertices in NGi(v). 22

Elimination Sequence with Weights The residual graph Gi is the graph obtained from Gi-1 by eliminating vertex X (i-1). (G1 G). The cost of an elimination sequence X is the sum of costs of eliminating X (i) from Gi, for all i. = n = ( ) ( ) C X C G X i ( ) i 1 i 23

Example Original Bayes network. V S L T Weights of vertices (#of states): yellow nodes: w = 2 blue nodes: w = 4 A B D X V S L T A B Undirected graph Representation, called the moral graph. I-map of the original D X 24

Example Suppose the elimination sequence is X =(V,B,S, ): G2 G3 G1 S S V S L L L T T T A B A A B D D D X X X = = = = ( ) 2 * 4 . 8 ( ) 4 * 2 * 2 * 2 32 . CG V CG B 1 2 = + + + = + + + ( ) ( ) ( ) ( ) ... 8 32 4 ... C X C V C B C S G G G 1 2 3 25

Finding Good Elimination Order Optimal elimination sequence: one with minimal cost. NP-complete. Repeat until the graph becomes empty 1. Compute the elimination cost of each variable in the current graph. 2. Choose a vertex v among the k lowest at random (flip a coin according to their current elimination costs). 3. Eliminate vertex v from the graph (make its neighbors a clique) Repeat these steps until using 5% of the estimated time needed to solve the inference problem with the elimination order found so far (estimated by the sum of state space size of all cliques). 26

Claim 1: Each vertex Xi in a Bayesian Network is d- separated of all its non-descendants given its parents pai. Proof : Each vertex Xi is connected to its non-descendantsi via its parents or via its descendants. All paths via its parents are blocked because pai are given and all paths via descendants are blocked because they pass through converging edges Z were Z is not given. Hence by definition of d-separation the claim holds: ID(Xi, pai, non-descendantsi). 28

Claim 2: Each topological order d in a BN entails the same set of independence assumptions. Proof : By Claim 1: ID(Xi, pai, non-descendandsi) holds. For each topological order d on {1, ,n}, it follows IP(Xd(i), pad(i), non-descendsd(i)) holds as well. From soundness (Theorem 9) IP(Xd(i), pad(i), non-descendsd(i)) holds as well. By the decomposition property of conditional independence IP(Xd(i), pad(i), S) holds for every S that is a subset of non-descendsd(i) . Hence, Xi is independent given its parents also from S ={all variables before Xi in an arbitrary topological order d}. 29