Big-Oh in Algorithms: More on Efficiency and Complexity

Today's presentation covers more advanced concepts of Big-Oh notation beyond Chapter 1. Understanding linear and binary search algorithms, their efficiency, and how to apply them in code. Dive into the math and code aspects of asymptotic analysis with practical examples.

Uploaded on Feb 20, 2025 | 0 Views

Download Presentation

Please find below an Image/Link to download the presentation.

The content on the website is provided AS IS for your information and personal use only. It may not be sold, licensed, or shared on other websites without obtaining consent from the author.If you encounter any issues during the download, it is possible that the publisher has removed the file from their server.

You are allowed to download the files provided on this website for personal or commercial use, subject to the condition that they are used lawfully. All files are the property of their respective owners.

The content on the website is provided AS IS for your information and personal use only. It may not be sold, licensed, or shared on other websites without obtaining consent from the author.

E N D

Presentation Transcript

Big Oh part 2 CS 244 This presentation requires Sound Enabled Brent M. Dingle, Ph.D. Game Design and Development Program Department of Mathematics, Statistics, and Computer Science University of Wisconsin Stout 2014

Things to Note Homework 2 is Due Soon Homework 3 is Posted on D2L Do NOT delay in starting it

From Last Time Algorithms Review Big-Oh Introduction Linear Search Binary Search

For Today More on Big-Oh This extends beyond that presented in Chapter 1 of your book, but start there Side Comment There are many ways to approach Big-Oh You will see several of the possible approaches Some are math/logic based, some more human intuition based Some are faster, less error prone, and better than others NONE are randomly guessing

Marker Slide Any General Questions ? Next up Searching Arrays Summary Review from last time Big-Oh The Math side of things Asymptotic Analysis The Code side of things Examples, how to get the math function

Searching Arrays: Linear Search and Binary Search Searching Find an element in a large amount of data Typically checking if an array contains a valuematching the sought element s key value So far you have seen at least: Linear searching (sequential search) Binary searching

Sequential (Linear) Search Begins at the start of the list and continues until the item is found or the entire list has been searched Compare each array element withsearch key If search key found, return element index If search key not found, return 1 (invalid index) Works best for small or unsorted arrays Inefficient for larger arrays

Binary Search, on Ordered Lists Uses a divide-and-conquer strategy to find an element in a list (eliminates half for each pass) Compare middle array element to search key If element equals key, then return array index If element is less than key, then repeat search on first half of array If element is greater then key, then repeat search on second half of array Continue search until Element equals search key (success) Efficient for large, sorted arrays

Searching: Big-Oh Reference Linear Search O(n) Binary Search O(lg n) yet to be proven/shown how spoiler: (integer-wise) lg n is the number of times n can be divided by 2

Marker Slide Any Questions On: Searching Arrays Summary Review from last time Next up Big-Oh The Math side of things Asymptotic Analysis Growth Rate Comparisons (input growth versus time growth) The Code side of things Examples, how to get the math function

Definitions An algorithm is a sequence of steps to solve a problem. The performance of an algorithm when implemented on a computer may vary depending on the approach used to solve the problem and the actual steps taken. To compare the performance of algorithms, computer scientists use big-O notation. We will study several algorithms during this course and analyze their performance using big-O notation.

Measuring Algorithm Efficiency There are different mechanisms for measuring efficiency. Measure actual system characteristics (practical) processor time used, memory size used, and execution time. (practical) Measure Time to develop the program (developer time). Analyze the number of operations an algorithm performs to measure its complexity. (theoretical) You can analyze the space (memory or disk) that the algorithm uses to perform its computation. (theoretical) Often, algorithms make a time vs. space trade-off. That is, the algorithm may run faster if given more space. Most of this class will put focus here

Best, Worst, and Average Case Few algorithms have the exact same performance every time performance of an algorithm depends on the size of the inputs it processes The best case performance of the algorithm is the most efficient execution of the algorithm on the "best" data inputs. The worst case performance of the algorithm is the least efficient execution of the algorithm on the "worst" data inputs. Most of this class will put focus here on worst case The average case performance of the algorithm is the average efficiency of the algorithm on the set of all data inputs. The analysis of all cases typically expresses efficiency in terms of the input size, n, of the data.

Big-Oh: Used for What (again) Big-O notation is a mechanism for quickly communicating the efficiency of an algorithm. Big-O notation measures the worst case performance of the algorithm by bounding the formula expressing the efficiency. cg(n) bounds f(n)

Asymptotic Execution Time Suppose f(n) is a formula describing the exact execution run time of some algorithm for an input size of n. That algorithm is then O( g(n) ) If there exist constants c and n0 such that: f(n) c*g(n), for all n > n0 cg(n) bounds f(n) For Example Searching a dictionary is O( log n ) Searching an unordered list is O( n )

Big-Oh Notation Given functions f(n) and g(n), we say that f(n) is O(g(n)) if there are positive constants c and n0 such that f(n) cg(n) for n n0 In other words: a function f(n) is O(g(n)) if f(n) is bounded above by some constant multiple of g(n) for all large n. So, f(n) cg(n) for all n > no .

Big-Oh Notation Given functions f(n) and g(n), we say that f(n) is O(g(n)) if there are positive constants c and n0 such that f(n) cg(n) for n n0 10,000 3n 2n+10 1,000 n Example: 2n+10 is O(n) 2n+10 cn (c 2) n 10 n 10 (c 2) Pick c = 3 and n0 = 10 100 10 1 1 10 100 1,000 n

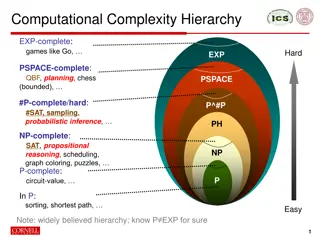

7 Functions Seven functions commonly used to examine the complexity of an algorithm are: constant, log2n, n, n log2n, n2, n3, 2n These are listed in lowest complexity to highest complexity More details follow In this class the subscript 2 may be omitted: log2n log n but in the context of this class log n should be taken to mean log base 2 of n, unless explicitly stated otherwise. Base Conversion Reminder: ???10(?) ???10(2) You may also see log2n abbreviated lg n Note your calculator will not understand this ???2(?) =

Common Big-Oh Functions Seven functions that often appear in algorithm analysis: Constant 1 Logarithmic log n Linear n N-Log-N n log n Quadratic n2 Cubic n3 Exponential 2n (n ) n Log n (n ) Log 1

Common Big-Oh Functions Seven functions that often appear in algorithm analysis: Constant 1 Logarithmic log n Linear n N-Log-N n log n Quadratic n2 Cubic n3 Exponential 2n n 2 3 n 2 n (n ) n Log

Running Time Comparisons * just too big of a number to calculate

Ranking of Execution Times fast ok kinda slow way too long function common name n! factorial 2n exponential nd , d > 3 polynomial n3 cubic n2 quadratic n ? n log n n linear ? square root log n logarithmic 1 constant

Constant Factors and Big Oh With regard to Big Oh Analysis The growth rate is not affected by constant factors, c, or lower-order terms Examples 100 n+ +105 is a linear function O(n) constant factor lower order term constant factors lower order term 40 n2+ + 108n is a quadratic function O(n2)

Big Oh Examples: Applying Definition 7n 2 7n-2 is O(n) need c > 0 and n0 1 such that 7n-2 c n for n n0 this is true for c = 7 and n0 = 1 3n3 + 20n2 + 5 3n3 + 20n2 + 5 is O(n3) need c > 0 and n0 1 such that 3n3 + 20n2 + 5 c n3 for n n0 this is true for c = 4 and n0 = 21 3 (log n) + 5 3 log n + 5 is O(log n) need c > 0 and n0 1 such that 3 log n + 5 c log n for n n0 this is true for c = 8 and n0 = 2

Marker Slide Any Questions On: Searching Arrays Big-Oh The Math side of things Asymptotic Analysis Next up Big-Oh The Math side of things Growth Rate Comparisons The Code side of things Nested For-Loops, Sigma Notation Practice Exercises Book Example

Big-Oh: Relates to Growth Rate As Big-Oh notation is based on asymptotic analysis Big-Oh notation gives an upper bound on the growth rate of a function The statement f(n) is O(g(n)) means that the growth rate of f(n) is no more than the growth rate of g(n) (f(n) cg(n) for n n0 Thus We can use the big-Oh notation to rank functions according to their growth rate f (n) c g (n)) Are we allowed to use derivatives in a Computer Science Class? YES! f(n) is O(g(n)) g(n) is O(f(n)) g(n) grows more f(n) grows more Same growth Yes No Yes No Yes Yes

Summarizing: Why Growth Rate Matters Aside c is just a constant Pretend it is 1 and make it go away if it helps if runtime is... time for n + 1 time for 2 n time for 4 n c lg n c lg (n + 1) c (lg n + 1) c(lg n + 2) c n c (n + 1) 2c n 4c n ~ c n lg n + c n 2c n lg n + 2cn 4c n lg n + 4cn consider O(n^2) c n lg n c n2 ~ c n2 + 2c n 16c n2 4 cn2 c n3 ~ c n3 + 3c n2 8c n3 64c n3 c 2n c 2 n+1 c 2 2n c 2 4n

Summarizing: Why Growth Rate Matters where input size doubles Aside c is just a constant Pretend it is 1 and make it go away if it helps if runtime is... time for n + 1 time for 2 n time for 4 n c lg n c lg (n + 1) c (lg n + 1) c(lg n + 2) c n c (n + 1) 2c n 4c n ~ c n lg n + c n 2c n lg n + 2cn 4c n lg n + 4cn consider O(n^2) c n lg n then runtime quadruples c n2 ~ c n2 + 2c n 16c n2 4 cn2 c n3 ~ c n3 + 3c n2 8c n3 64c n3 c 2n c 2 n+1 c 2 2n c 2 4n

Summarizing: Why Growth Rate Matters if runtime is... time for n + 1 time for 2 n time for 4 n c lg n c lg (n + 1) c(lg n + 2) c (lg n + 1) consider O(lg n) c n c (n + 1) 2c n 4c n ~ c n lg n + c n 2c n lg n + 2cn 4c n lg n + 4cn c n lg n c n2 ~ c n2 + 2c n 4 cn2 16c n2 c n3 ~ c n3 + 3c n2 8c n3 64c n3 c 2n c 2 n+1 c 2 2n c 2 4n

Summarizing: Why Growth Rate Matters where input size doubles if runtime is... time for n + 1 time for 2 n time for 4 n then runtime increases by 1 step c lg n c lg (n + 1) c(lg n + 2) c (lg n + 1) consider O(lg n) c n c (n + 1) 2c n 4c n ~ c n lg n + c n 2c n lg n + 2cn 4c n lg n + 4cn c n lg n c n2 ~ c n2 + 2c n 4 cn2 16c n2 c n3 ~ c n3 + 3c n2 8c n3 64c n3 c 2n c 2 n+1 c 2 2n c 2 4n

Examples: Running Time Comparisons i.e. 8n versus n 8 times LONGER 8 times longer = 3*8 = 24 minutes Say it takes 3 minutes for an input size of 10 (i.e. n = 10) Now if we had an input size of 80 (i.e. 8n) How long would it take? 10 8 10= 3 ? X = 3 * 8

Examples: Running Time Comparisons i.e. 8n versus n 8 times LONGER 64 times longer = 3*64 = 192 minutes 64 times LONGER Say it takes 3 minutes for an input size of 10 (i.e. n = 10) Now if we had an input size of 80 (i.e. 8n) How long would it take? (10)2 (8 10)2= 3 ? X = 3 * 82 = 3 * 64

Examples: Running Time Comparisons i.e. 8n versus n 8 times LONGER 64 times LONGER same time 3 * 1 = 3 minutes Say it takes 3 minutes for an input size of 10 (i.e. n = 10) Now if we had an input size of 80 (i.e. 8n) How long would it take? 1 1= 3 ? X = 3 * 1 = 3

Examples: Running Time Comparisons i.e. 8n versus n 8 times LONGER 64 times LONGER Be careful with this one it is not a times longer same time And these follow in similar fashion but rather a plus steps

Marker Slide Any Questions On: Searching Arrays Big-Oh The Math side of things Asymptotic Analysis Growth Rate Comparisons Next up Big-Oh The Code side of things Nested For-Loops, Sigma Notation Practice Exercises Book Example

Sigma Summation To more formally analyze algorithms we typically need to perform mathematical summations. The summation sign is used to denote the summation of a large series of numbers. The summation sign is the Greek letter sigma. Example: n = i + + + + = 1 2 3 ... n i 1

Sigma Summation Rules The sum of numbers from 1 to n has a simple formula: ) 1 + * ( n n n This would be a good formula to have memorized = i = i 2 1 Multiplied constants can be moved outside the summation: = i ) 1 + * ( n n n n = = 3 * 3 * 3 * i i 2 = 1 1 i Added constants can be summed separately: + * ( ) 1 n n n n n n n 2 = i = i = i = i = i + = + = + = + ( ) 3 3 3 * 1 3 * i i i n 1 1 1 1 1

Example: Using the Sum Using summations determine the Big-Oh of the following code: for (int i =1; i <= n; i++) for (int j=1; j <= n; j++) n cout << j << endl; Executes n times (worst case), Uses n units of time ? math function: ?=1 ???

Example: Using the Sum Using summations determine the Big-Oh of the following code: for (int i =1; i <= n; i++) for (int j=1; j <= n; j++) n n cout << j << endl; Executes n times (worst case), Uses n units of time ? ? math function: ?=1 ?=1 ???

Example: Using the Sum Using summations determine the Big-Oh of the following code: for (int i =1; i <= n; i++) for (int j=1; j <= n; j++) n n cout << j << endl; 1 Constant time: Mark it as using ONE unit of time ? ? math function: ?=1 ?=1 1

Example: Using the Sum Using summations determine the Big-Oh of the following code: for (int i =1; i <= n; i++) for (int j=1; j <= n; j++) cout << j << endl; ? ? ?=1 ?=1 1 Simplifies to n

Example: Using the Sum Using summations determine the Big-Oh of the following code: for (int i =1; i <= n; i++) for (int j=1; j <= n; j++) cout << j << endl; ? ? ? ?=1 ?=1 1 = ?=1 ? = Simplifies to n

Example: Using the Sum Using summations determine the Big-Oh of the following code: for (int i =1; i <= n; i++) for (int j=1; j <= n; j++) cout << j << endl; ? ? ? ?=1 ?=1 1 = ?=1 ? = nis a constant take it out of the summation

Example: Using the Sum Using summations determine the Big-Oh of the following code: for (int i =1; i <= n; i++) for (int j=1; j <= n; j++) cout << j << endl; ? ? ? ? ?=1 ?=1 1 = ?=1 ? = ? ?=1 1 = nis a constant take it out of the summation

Example: Using the Sum Using summations determine the Big-Oh of the following code: for (int i =1; i <= n; i++) for (int j=1; j <= n; j++) cout << j << endl; ? ? ? ? ?=1 ?=1 1 = ?=1 ? = ? ?=1 1 = Simplifies to n

Example: Using the Sum Using summations determine the Big-Oh of the following code: for (int i =1; i <= n; i++) for (int j=1; j <= n; j++) cout << j << endl; ? ? ? ? ?=1 ?=1 1 = ?=1 ? = ? ?=1 1 = ? ? Simplifies to n

Example: Using the Sum Using summations determine the Big-Oh of the following code: for (int i =1; i <= n; i++) for (int j=1; j <= n; j++) cout << j << endl; ? ? ? ? ?=1 ?=1 1 = ?=1 ? = ? ?=1 1 = ? ? = Multiply out

Example: Using the Sum Using summations determine the Big-Oh of the following code: for (int i =1; i <= n; i++) for (int j=1; j <= n; j++) cout << j << endl; ? ? ? ? 1 = ? ? = ?2 ?=1 ?=1 1 = ?=1 ? = ? ?=1 Multiply out

Example: Using the Sum Using summations determine the Big-Oh of the following code: for (int i =1; i <= n; i++) for (int j=1; j <= n; j++) cout << j << endl; ? ? ? ? 1 = ? ? = ?2 ?=1 ?=1 1 = ?=1 ? = ? ?=1 Math function for this is: n2 Algorithm is O(n2)

Marker Slide Any Questions On: Searching Arrays Big-Oh The Math side of things Asymptotic Analysis Growth Rate Comparisons The Code side of things Nested For-Loops, Sigma Notation Next up Big-Oh The Code side of things Practice Exercises Book Example