Can near-data processing accelerate dense MPI collectives? An MVAPICH Approach

Memory growth trends like DRAM, the MVAPICH2 Project for high-performance MPI library support, and the importance of MPI collectives in data-intensive workloads are discussed in this presentation by Mustafa Abduljabbar from The Ohio State University.

Download Presentation

Please find below an Image/Link to download the presentation.

The content on the website is provided AS IS for your information and personal use only. It may not be sold, licensed, or shared on other websites without obtaining consent from the author.If you encounter any issues during the download, it is possible that the publisher has removed the file from their server.

You are allowed to download the files provided on this website for personal or commercial use, subject to the condition that they are used lawfully. All files are the property of their respective owners.

The content on the website is provided AS IS for your information and personal use only. It may not be sold, licensed, or shared on other websites without obtaining consent from the author.

E N D

Presentation Transcript

Can near-data processing accelerate dense MPI collectives? An MVAPICH Approach Talk by: Mustafa Abduljabbar Network-based Computing Laboratory Department of Computer Science and Engineering The Ohio State University

Memory growth trends (e.g. DRAM) The end of the Dennard scaling made it more complex to sustain the DRAM growth The gap between applications and growth is increasing 2 Network Based Computing Laboratory

Overviewof the MVAPICH2 Project High Performance open-source MPI Library Support for multiple interconnects InfiniBand, Omni-Path, Ethernet/iWARP, RDMA over Converged Ethernet (RoCE), AWS EFA, OPX, Broadcom RoCE, Intel Ethernet, Rockport Networks, Slingshot 10/11 Support for multiple platforms x86, OpenPOWER, ARM, Xeon-Phi, GPGPUs (NVIDIA and AMD) Started in 2001, first open-source version demonstrated at SC 02 Used by more than 3,290 organizations in 90 countries Supports the latest MPI-3.1 standard More than 1.63 Million downloads from the OSU site directly Empowering many TOP500 clusters (Nov 22 ranking) http://mvapich.cse.ohio-state.edu 7th , 10,649,600-core (Sunway TaihuLight) at NSC, Wuxi, China Additional optimized versions for different systems/environments: MVAPICH2-X (Advanced MPI + PGAS), since 2011 19th, 448, 448 cores (Frontera) at TACC MVAPICH2-GDR with support for NVIDIA (since 2014) and AMD (since 2020) GPUs MVAPICH2-MIC with support for Intel Xeon-Phi, since 2014 34th, 288,288 cores (Lassen) at LLNL MVAPICH2-Virt with virtualization support, since 2015 46th, 570,020 cores (Nurion) in South Korea and many others MVAPICH2-EA with support for Energy-Awareness, since 2015 MVAPICH2-Azure for Azure HPC IB instances, since 2019 MVAPICH2-X-AWS for AWS HPC+EFA instances, since 2019 Available with software stacks of many vendors and Linux Distros (RedHat, SuSE, OpenHPC, and Spack) Tools: OSU MPI Micro-Benchmarks (OMB), since 2003 Partner in the 19th ranked TACC Frontera system OSU InfiniBand Network Analysis and Monitoring (INAM), since 2015 Empowering Top500 systems for more than 16 years 3 Network Based Computing Laboratory

Why collectives? MPI collectives are used by many data intensive (map-reduce) workloads In MPI libraries, they are the heaviest in terms of computation and communication (interconnect/mem buses) Collective performance (such as alltoall and allreduce) is based on many factors, including but not limited to: The algorithmic choice The underlying pt-to-pt performance (inter-node vs intra-node) The platform characteristics (e.g. CPU/Memory model) 4 Network Based Computing Laboratory

Architecture of our experimental platform Model Intel Xeon Platinum 8280M ("Cascade Lake") Total cores per CLX node: 112 cores on four sockets (28 cores/socket) Hardware threads per core: 1 Hyperthreading is not currently enabled on Frontera Clock rate: 2.7GHz nominal Memory: 2.1 TB NVDIMM Cache: 32KB L1 data cache per core; 1MB L2 per core; 38.5 MB L3 per socket. 384 GB DDR4 RAM configured as an L4 cache Each socket can cache up to 66.5 MB (sum of L2 and L3 capacity). Local storage: 144GB /tmp partition on a 240GB SSD 4x 833 GB /mnt/fsdax[0,1,2,3] partitions on NVDIMM 3.2 TB usable local storage 5 Network Based Computing Laboratory

The bigmem (Optane) vs smallmem node Bigmem Node Smallmem Node Model Intel Xeon Platinum 8280M ("Cascade Lake") Model Intel Xeon Platinum 8280 ("Cascade Lake") Total cores per CLX node: 112 cores on four sockets (28 cores/socket) Total cores per CLX node: 56 cores on two sockets (28 cores/socket) Hardware threads per core: 1, Hyperthreading is not currently enabled on Frontera Hardware threads per core: 1, Hyperthreading is not currently enabled on Frontera Clock rate: 2.7GHz nominal Clock rate: 2.7GHz nominal Memory: 2.1 TB NVDIMM Memory: 192GB (2933 MT/s) DDR4 Cache: 32KB L1 data cache per core; 1MB L2 per core; 38.5 MB L3 per socket. 384 GB DDR4 RAM configured as an L4 cache Each socket can cache up to 66.5 MB (sum of L2 and L3 capacity). Cache: 32KB L1 data cache per core; 1MB L2 per core; 38.5 MB L3 per socket. Each socket can cache up to 66.5 MB (sum of L2 and L3 capacity). 144GB /tmp partition on a 240GB SSD 4x 833 GB /mnt/fsdax[0,1,2,3] partitions on NVDIMM 3.2 TB usable local storage Local storage: Local storage: 144GB /tmp partition on a 240GB SSD 6 Network Based Computing Laboratory

STREAM bandwidth with PMEM memory modes BW (MB/s) Memory mode Memory mode App direct App direct Total memory required (GB) NOWLAB system: MRI 4.6 4.6 457.8 457.8 Core(s) per socket: 28 Socket(s): 2 L2 cache: 1280K (70MB in total) L3 cache: 43008K (84MB in total) DRAM: 256GB Optane: 991GB Copy 156246.3 2311.2 20249.0 2453.7 Scale 174390.6 2411.8 16097.6 2202.2 Add 194115.5 3831.0 17414.0 2910.5 Triad 197335.6 3854.9 22558.8 2776.7 7 Network Based Computing Laboratory

Quick I/O sanity check shows 3 different BW/latency on the Intel-Optane node Single core, hence, not maximizing streaming BW usage SSD -> PM -> DRAM 2x BW increase on each step 10x latency decrease on each step SSD PM DRAM SSD PM DRAM 8 Network Based Computing Laboratory

MV2 Pt-to-Pt based with PMEM memory modes P2P performance with different buffer allocations P2P performance with different buffer allocations Memory mode PMEM-PMEM PMEM-DRAM DRAM-DRAM Memory mode PMEM-PMEM PMEM-DRAM DRAM-DRAM 15 1250000 6.3x 1000000 10 Latency (us) 750000 Latency (us) 2.2x 500000 5 250000 0 0 524288 0 1 2 4 8 16 32 64 128 256 512 1024 2048 4096 8192 16384 32768 65536 131072 262144 1048576 2097152 4194304 8388608 16777216 33554432 67108864 134217728 268435456 536870912 1073741824 #Msg Size #Msg Size 9 Network Based Computing Laboratory

MV2 Pt-to-Pt based with PMEM memory modes P2P performance with different buffer allocations P2P performance with different buffer allocations Memory mode PMEM-PMEM PMEM-DRAM DRAM-DRAM Memory mode PMEM-PMEM PMEM-DRAM DRAM-DRAM 25000 25000 20000 20000 15000 Bandwidth (MB/s) Bandwidth (MB/s) 15000 10000 10000 L2 L3 cache cache 5000 5000 0 524288 1048576 2097152 4194304 8388608 16777216 33554432 67108864 134217728 268435456 536870912 1073741824 0 1 2 4 8 16 32 64 128 256 512 1024 2048 4096 8192 16384 32768 65536 131072 262144 #Msg Size #Msg Size 10 Network Based Computing Laboratory

Alltoall collective behavior Average Latency of Single Node AlltoAll on Frontera "Small Mem" vs "Big Mem" 134217728 67108864 Around 1 TB of alltoall exchange without drop in scaling 33554432 16777216 8388608 4194304 2097152 1048576 Proper collective tuning choice and techniques to push the boundaries of effective resource usage 524288 262144 131072 65536 Latency (us) 32768 16384 8192 Observed increase in latency due to higher latency 4096 2048 1024 512 256 128 small_mem_28ppn 64 big_mem_28ppn 32 16 small_mem_56ppn 8 big_mem_56ppn 4 2 1 64 KB 128 KB 256 KB 512 KB 1 MB 2 MB 4 MB 8 MB 16 MB 32 MB 64 MB 128 256 MB 512 MB 1 GB MB Message Size (bytes) 11 Network Based Computing Laboratory

Allreduce collective behavior Average Latency of Single Node Allreduce on Frontera "Small Mem" vs "Big Mem" 4194304 2097152 1048576 Similar performance up to L2 (1MB), and smallmem outperforms bigmem beyond L3 size (32M) 524288 262144 131072 65536 32768 16384 8192 However, lower degradations because allreduce is more computationally heavy Latency (us) 4096 2048 1024 512 256 128 64 small_mem_28ppn On par with DRAM with the small range ( < 1MB message size) 32 big_mem_28ppn 16 8 small_mem_56ppn 4 big_mem_56ppn 2 1 64 KB 128 KB 256 KB 512 KB 1 MB 2 MB 4 MB 8 MB 16 MB 32 MB 64 MB 128 MB 256 MB 512 MB 1 GB Message Size (bytes) 12 Network Based Computing Laboratory

Using PMEMs for out-of-RAM sorting We use usort GitHub - hsundar/usort: Fast distributed sorting routines using MPI and OpenMP Compare and evaluate the performance of one PMEM node with multiple DRAM ones when data cannot fit into a single DRAM at application level Configuration Core(s) per socket Socket(s) L2 cache L3 cache DRAM Optane DRAM node 28 2 1024K 39424K 186G N/A PMEM node 28 4 1024K 39424K 186G 1.9T 13 Network Based Computing Laboratory

Behavior of distributed sorting (i.e. complexity) We used the usort (disk-to-disk sorting) as a use-case for large collectives (alltoall and allreduce) The scale-up mode is scalable according to our tests sorting 1.1 TB of keys on a single PMEM node Generally speaking, this approach is cost effective (i.e. in terms of performance, operation and energy) 14 Network Based Computing Laboratory

Scale-out vs Scale-up (usort) Scale-up Scale-out 15 Network Based Computing Laboratory

Observations on the Multi-tiered Approach Intel PMEMs do not function as HBMs, however, they provide for a cost- effective approach for scale-up and scale-out performance compared to multiple expensive DRAM, HBM nodes For volatile usage, use in Memory mode (DRAM as an L4 cache) Intel is discontinuing this series to up their game in advanced interconnects (CXL, CCIX) Using more DRAMs has only a small benefit of 10 18% speedup in the 4-node case More cache capacity More memory channels Higher cost 16 Network Based Computing Laboratory

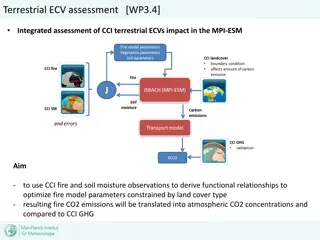

Can we close the performance gap? To recap, performance is impacted by Lower aggregate memory bandwidth In the scale-up approach, lower number of core resource (i.e. caches) We are collaborating with ETRI to close this gap Scale-out and process near- memory Figure: showing the interaction between MVAPICH and the MEX hardware (in development) - courtesy to ETRI 17 Network Based Computing Laboratory

CXL lends hand to advanced near-memory schemes CXL Builds on PCIe Interfaces: Utilizes physical and electrical interfaces of PCIe. Introduces protocols for coherency and software stack simplification. Maintains compatibility with existing PCIe standards. CXL 3.0 supports up to 64 GB/s on top of PCIe 5, and 128 GB/s bi- directional over x16 PCIe 6 Memory pooling from CXL 2.0: CXL 2.0 enables memory pooling through switching technology. A host with CXL 2.0 can access multiple devices from the memory pool. Hosts need to be CXL 2.0-enabled to use this feature. Memory devices in the pool can be a mix of CXL 1.0, 1.1, and 2.0 hardware. CXL 1.0/1.1 devices behave as a single logical device, accessible by only one host at a time. CXL 2.0 devices can be partitioned into multiple logical devices. Allows up to 16 hosts to simultaneously access different parts of the memory on a CXL 2.0 device. Enhanced Connectivity and Memory Coherency: Significantly improves computing performance and efficiency at a low cost. Memory Expansion Capabilities: Enables additional memory capacity and bandwidth beyond direct- attach DIMM slots. Facilitates adding more memory to a CPU host processor via CXL- attached devices. Adaptable to Persistent Memory: Low-latency CXL link pairs effectively with persistent memory. Allows CPU host to use additional memory alongside DRAM 18 Network Based Computing Laboratory

Context How can MPI be geared toward interconnect advancements Start a systematic approach to understand the existing behaviors and propose performant designs Step 1 (status: almost done): Optimizing the performance of device-accelerated MPI using different devices (e.g. GPUs and FPGAs) Step 2 (status: started): Thorough performance analysis schemes to breakdown the timings Step 3 (status: started): Understand the architectural knobs to build a representative model Better co-design of collective acceleration hardware A model that can generalize to the devices, interconnects and memory subsystems at hand 19 Network Based Computing Laboratory

Offloading Benchmark (Motivation) MPI Collectives Disaggregation Evaluate the performance benefit of offloading communication to an accelerator like FPGA, GPU or MEX. Host-based vs Device-based performance Device-accelerated Node Help understand system characteristics CPU What parameters contribute to the offloading performance? What are the bottlenecks? Processes-to-device mapping Disaggregation Interconnect A device does communication on behalf of processes Avoid wasting CPU cycles for data transfer Processes are available to do meaningful computation Device Communication operations: Allreduce and Alltoall 20 Network Based Computing Laboratory

Offloading Algorithm (Root-based) MPI Collectives Disaggregation (baseline) Device-accelerated Node 1. Root gathers data from peers CPU 2. Root copies data to its device buffer R 3. Root invokes reduction kernel 4. Root copies data back to its buffer PCIe 5. Peer retrieves results from root R Root 6. Note: Processes have different device contexts, so a process cannot access device data stored in another context Device 21 Network Based Computing Laboratory

Offloading Algorithm (Root-based) MPI Collectives Disaggregation (baseline) CPU CPU CPU R Root Process R R R PCIe PCIe PCIe Device Device Device Root transfer all data to the device invokes reduction kernel and take back the data Root scatters the results to peers Root gathers peers buffers 22 Network Based Computing Laboratory

Emulation Environment Clusters used in experiments: Lassen MRI Cluster Host Arch Device Arch Interconnect Interconnect BW/lane 2 GB/s MRI AMD Milan NV A100 (Newer gen) NV V100 (Older gen) PCIe 4.0 Lassen IBM Power 9 25 GB/s (Faster link) NVLink 4.0 23 Network Based Computing Laboratory

Performance results (1) 0.20x 2.75x On MRI, device-based performs worse than host-based due to PCIe bottleneck Conversely, on Lassen with NVlink, the trend is reversed. 24 Network Based Computing Laboratory

Performance results (2) 0.44x 6.25x With more processes, the trend on MRI remains the same. On Lassen, device-based is not able to beat host-based. 25 Network Based Computing Laboratory

THANK YOU! Network Based Computing Laboratory Network-Based Computing Laboratory http://nowlab.cse.ohio-state.edu/ The High-Performance Big Data Project http://hibd.cse.ohio-state.edu/ The High-Performance MPI/PGAS Project http://mvapich.cse.ohio-state.edu/ The High-Performance Deep Learning Project http://hidl.cse.ohio-state.edu/ 32 Network Based Computing Laboratory

Experimental results - Alltoall Average Latency of Single Node AlltoAll on Frontera "Small Mem" vs "Big Mem" Run Alltoall up to 1GB on both nodes to form a baseline Bigmem runs for larger messages due to higher capacity Total memory consumed exceeds DRAM capacity Smallmem_28ppn Per process memory req. = 128 * 28 * 2 = 7168MB Total memory req. = 7168 * 28 ~ 200GB 200GB exceeds DRAM size 134217728 67108864 33554432 16777216 8388608 4194304 2097152 1048576 524288 262144 131072 65536 32768 Latency (us) 16384 small_mem_28ppn 8192 big_mem_28ppn 4096 small_mem_56ppn 2048 1024 big_mem_56ppn 512 256 128 64 32 16 8 4 2 1 64 KB 128 KB 256 KB 512 KB 1 MB2 MB4 MB8 MB 16 32 MB 64 MB 128 MB 256 MB 512 MB 1 GB MB Message Size (bytes) 33 Network Based Computing Laboratory

Experimental results - Allgather Average Latency of Allgather on Frontera Small Mem Vs. Big Mem Run Allgather up to 1GB on both nodes to form a baseline Bigmem runs for larger messages due to higher capacity Total memory consumed exceeds DRAM capacity Smallmem_28ppn Per process memory req. = 256 * 28 ~ 7168MB Total memory req. = 7168 * 28 ~ 200GB 200GB exceeds DRAM size 134217728 67108864 33554432 16777216 8388608 4194304 2097152 1048576 524288 262144 131072 65536 Latency (us) 32768 16384 small_mem_28ppn 8192 big_mem_28ppn 4096 2048 small_mem_56ppn 1024 big_mem_56ppn 512 256 128 64 32 16 8 4 2 1 64 KB 128 KB 256 KB 512 KB 1 2 4 8 16 MB 32 MB 64 MB 128 MB 256 MB 512 MB 1 MB MB MB MB GB Message Size (bytes) 34 Network Based Computing Laboratory