Capsule Network for Multi-Label Image Classification Study

This presentation describes a capsule network for hierarchical multi-label image classification (ML-CapsNet). It covers class taxonomies, hierarchical classification approaches, CNN-based hierarchical classifiers, and capsule networks with dynamic routing between capsules, and it reports results on MNIST, Fashion-MNIST, CIFAR-10 and CIFAR-100.

Presentation Transcript

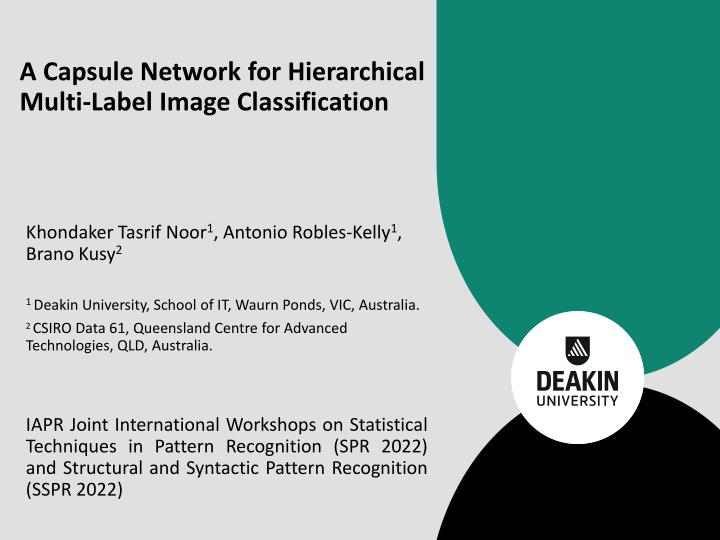

A Capsule Network for Hierarchical Multi-Label Image Classification

Khondaker Tasrif Noor (1), Antonio Robles-Kelly (1), Brano Kusy (2)

(1) Deakin University, School of IT, Waurn Ponds, VIC, Australia.

(2) CSIRO Data 61, Queensland Centre for Advanced Technologies, QLD, Australia.

IAPR Joint International Workshops on Statistical Techniques in Pattern Recognition (SPR 2022) and Structural and Syntactic Pattern Recognition (SSPR 2022)

Contents: Introduction, Motivation, ML-CapsNet Description, Results and Discussion, Conclusion

Introduction

What is hierarchical classification? Class taxonomy; top-to-bottom / coarse-to-fine paradigm; multi-label classification; level consistency.

Hierarchical label tree: tree structure; taxonomy / hierarchy; built manually or by unsupervised learning.

Tree-based Hierarchical Structure

Figure: Sample hierarchical label tree for the CIFAR-10 dataset.

Classes in the hierarchical label tree, where K is the number of classes at a level:

Coarse level (K = 2): Animal, Transport.

Medium level (K = 7): Sky, Water, Road, Bird, Reptile, Pet, Medium.

Fine level (K = 10): Airplane, Ship, Automobile, Truck, Bird, Frog, Cat, Dog, Deer, Horse.
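A label tree like this one can be held as two parent-to-children maps. The sketch below is only illustrative: the slide lists the labels per level but not the edges, so the parent-child pairing here is an assumption inferred from the order of the labels, and the names `COARSE_TO_MEDIUM`, `MEDIUM_TO_FINE`, `invert` and `label_path` are hypothetical.

```python
# Parent -> children maps for the CIFAR-10 label tree.
# ASSUMPTION: the edges are inferred from label order on the slide,
# which only lists the labels of each level.
COARSE_TO_MEDIUM = {
    "Transport": ["Sky", "Water", "Road"],
    "Animal": ["Bird", "Reptile", "Pet", "Medium"],
}
MEDIUM_TO_FINE = {
    "Sky": ["Airplane"],
    "Water": ["Ship"],
    "Road": ["Automobile", "Truck"],
    "Bird": ["Bird"],
    "Reptile": ["Frog"],
    "Pet": ["Cat", "Dog"],
    "Medium": ["Deer", "Horse"],
}


def invert(parent_to_children):
    """Turn a parent -> children map into a child -> parent map."""
    return {c: p for p, cs in parent_to_children.items() for c in cs}


FINE_TO_MEDIUM = invert(MEDIUM_TO_FINE)
MEDIUM_TO_COARSE = invert(COARSE_TO_MEDIUM)


def label_path(fine_label):
    """Return the (coarse, medium, fine) labels for one fine class."""
    medium = FINE_TO_MEDIUM[fine_label]
    return (MEDIUM_TO_COARSE[medium], medium, fine_label)


# Number of classes K at the coarse, medium and fine levels.
level_sizes = (len(COARSE_TO_MEDIUM), len(MEDIUM_TO_COARSE), len(FINE_TO_MEDIUM))
```

With this layout, every fine label expands to one multi-label target per level, which is the form a hierarchical multi-label classifier is trained on.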

Hierarchical Classifiers

Hierarchical classification approaches:

Flat classification: predicts only the fine classes (leaf nodes) and overlooks parent-child relationships.

Local classifier / top-down: local classifier per node (LCN), local classifiers per parent node (LCPN), or local classifiers per level (LCL).

Global classifier / big-bang: a single model covers the entire class hierarchy.

CNN Based Classifiers

Global hierarchical classifier: Branch CNN (B-CNN [1]). It relates hierarchical levels to convolutional layers, uses a separate prediction layer, and follows the global classification approach.

Figure: B-CNN [1] architecture. The input instance passes through Conv-1, Conv-2, ..., Conv-N; each Conv-n feeds a Flatten-n branch followed by Prediction-n.

[1] X. Zhu and M. Bain. B-CNN: branch convolutional neural network for hierarchical classification. arXiv preprint arXiv:1709.09890, 2017.

Capsule Networks

Capsule Network (CapsNet [2]) compared with a CNN: features and their transformations; part-whole relations; recognition by parts; object-feature hierarchy relationships; equivariant nature; translation invariant and equivariant; less training data.

[2] S. Sabour, N. Frosst, and G. E. Hinton. Dynamic routing between capsules. Advances in Neural Information Processing Systems, 30, 2017.

Capsule Networks

Capsule Network (CapsNet [2]), face example: a face capsule with properties (L, O, S) is built from eye, mouth and nose capsules, each with its own (L, O, S).

Likelihood and feature properties: a capsule outputs a vector, can detect inconsistency between parts, and is equivariant in nature.

Routing algorithm: routing by agreement between capsule layers. Iterative routing updates the coupling coefficients $c_{ij}$, which sum to 1.

Capsule layers: primary capsules (index $i$) and secondary capsules (index $j$). $c_{ij}$: coupling coefficients (likeliness and feature properties).

$$s_j = \sum_i c_{ij} \hat{u}_{j|i}$$
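The routing step can be sketched in plain Python. This is a minimal illustration of routing by agreement as described by Sabour et al., not code from the presentation: the prediction vectors `u_hat`, the `squash` non-linearity, the logits `b` and the default of three iterations are assumptions taken from that paper, and all function names are hypothetical.

```python
import math


def squash(s):
    """Scale vector s to a length in [0, 1) while keeping its direction."""
    sq = sum(x * x for x in s)
    if sq == 0.0:
        return [0.0 for _ in s]
    scale = sq / (1.0 + sq) / math.sqrt(sq)
    return [scale * x for x in s]


def softmax(xs):
    """Numerically stable softmax over a list of floats."""
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def dynamic_routing(u_hat, num_iters=3):
    """Route primary capsules to secondary capsules by agreement.

    u_hat[i][j] is the vector that primary capsule i predicts for
    secondary capsule j. Returns the secondary capsule outputs v and
    the coupling coefficients c used to compute them.
    """
    n_in, n_out, dim = len(u_hat), len(u_hat[0]), len(u_hat[0][0])
    b = [[0.0] * n_out for _ in range(n_in)]  # routing logits, start at zero
    for _ in range(num_iters):
        # Coupling coefficients of each primary capsule sum to 1.
        c = [softmax(row) for row in b]
        v = []
        for j in range(n_out):
            # Weighted sum of the predictions for secondary capsule j.
            s_j = [sum(c[i][j] * u_hat[i][j][d] for i in range(n_in))
                   for d in range(dim)]
            v.append(squash(s_j))
        # Raise the logit where a prediction agrees with the output.
        for i in range(n_in):
            for j in range(n_out):
                b[i][j] += sum(u_hat[i][j][d] * v[j][d] for d in range(dim))
    return v, c


# Toy input: both primary capsules agree on secondary capsule 0
# and disagree on secondary capsule 1 (values are made up).
toy_u_hat = [
    [[1.0, 0.0], [1.0, 0.0]],
    [[1.0, 0.0], [-1.0, 0.0]],
]
toy_v, toy_c = dynamic_routing(toy_u_hat)
```

In the toy input the two primary capsules agree on the first secondary capsule, so routing shifts their coupling coefficients toward it.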

Multi-label Capsule Network (ML-CapsNet)

Figure: Architecture of the multi-label capsule network (ML-CapsNet).

Input: takes the raw input instance.

Feature Extraction: extracts local features from the input instance; shared among all the levels.

Primary Capsule (P): stores the features in capsules; outputs to all the capsules in the next layer.

Secondary Capsules (S1, S2, ..., SN): each contains K capsules (K = number of classes); uses the dynamic routing algorithm.

Prediction Layers (Y1, Y2, ..., YN) with losses (L1, L2, ..., LN): predict the class label for each level.

Concatenate and Decoder, with loss (LR): takes the prediction output and combines the decoders from all the levels into a final one.
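A small sketch of the last two stages, under stated assumptions: reading the longest capsule of a level as its predicted class follows Sabour et al., and feeding the decoder with the flattened concatenation of all levels is one plausible reading of the "Concatenate" box, not a confirmed detail. The function names and the toy values are hypothetical.

```python
import math


def capsule_length(v):
    """Euclidean length of one capsule output vector."""
    return math.sqrt(sum(x * x for x in v))


def predict_level(capsules):
    """Return the index of the longest capsule, read as the predicted class."""
    lengths = [capsule_length(v) for v in capsules]
    return max(range(len(lengths)), key=lengths.__getitem__)


def decoder_input(levels):
    """Flatten and concatenate the secondary capsule outputs of all levels."""
    return [x for capsules in levels for v in capsules for x in v]


# Toy outputs for two levels: S1 with K = 2 capsules and S2 with K = 3
# capsules, each capsule a 2-dimensional vector (all values are made up).
s1 = [[0.1, 0.0], [0.6, 0.3]]
s2 = [[0.0, 0.1], [0.2, 0.1], [0.7, 0.5]]
predictions = [predict_level(s) for s in (s1, s2)]
flat = decoder_input([s1, s2])
```

Here `predictions` holds one class index per hierarchical level, and `flat` is the single vector a shared decoder would receive.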

Loss Function

Overall loss function:

$$L_T = \tau L_R + (1 - \tau) \sum_{h=1}^{H} \lambda_h L_h$$

where $\tau$ is the reconstruction loss weight, $L_R$ the reconstruction loss, $\lambda_h$ the hierarchical level loss weight, $L_h$ the class prediction loss, $h$ the hierarchical level, and $H$ the total number of hierarchical levels.

Reconstruction loss:

$$L_R = \| x - \hat{x} \|_2^2$$

where $x$ is the input instance and $\hat{x}$ the reconstructed instance.

Class prediction loss:

$$L_h = T_k \max(0, m^+ - \|v_k\|)^2 + \lambda (1 - T_k) \max(0, \|v_k\| - m^-)^2$$

where $k$ is the class label, $T_k$ is 1 if class $k$ is present and 0 otherwise, $v_k$ is the output vector from the secondary capsules, and $\lambda$, $m^+$, $m^-$ are hyper-parameters.
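The three losses can be written out in a few lines. This is a sketch under assumptions, not the authors' implementation: the margin loss is summed over the classes of a level and uses the defaults m+ = 0.9, m- = 0.1 and lambda = 0.5 from Sabour et al., while the slide gives no values; `tau` stands for the reconstruction loss weight and `level_weights` for the hierarchical level loss weights, and all function names are hypothetical.

```python
def margin_loss(v_norms, targets, m_plus=0.9, m_minus=0.1, lam=0.5):
    """Class prediction loss for one level, summed over its classes.

    v_norms[k] is the length of the secondary capsule for class k and
    targets[k] is 1 if class k is present, else 0.
    ASSUMPTION: default hyper-parameters follow Sabour et al.
    """
    total = 0.0
    for norm, t in zip(v_norms, targets):
        total += t * max(0.0, m_plus - norm) ** 2
        total += lam * (1 - t) * max(0.0, norm - m_minus) ** 2
    return total


def reconstruction_loss(x, x_hat):
    """Squared Euclidean distance between input and reconstruction."""
    return sum((a - b) ** 2 for a, b in zip(x, x_hat))


def total_loss(level_losses, level_weights, recon_loss, tau):
    """Weighted sum of the reconstruction loss and the per-level losses."""
    weighted = sum(w * l for w, l in zip(level_weights, level_losses))
    return tau * recon_loss + (1.0 - tau) * weighted
```

A capsule of length 0.9 or more for the present class, and 0.1 or less for every absent class, contributes zero to the margin loss, so the loss only penalises capsules on the wrong side of the margins.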

Results and Discussion

Datasets:

MNIST [1, 3]: Coarse Level (K = 5), Fine Level (K = 10).

Fashion-MNIST [4, 5]: Coarse Level (K = 2), Medium Level (K = 6), Fine Level (K = 10).

CIFAR-10 [1, 6]: Coarse Level (K = 2), Medium Level (K = 7), Fine Level (K = 10).

CIFAR-100 [1, 6]: Coarse Level (K = 8), Medium Level (K = 20), Fine Level (K = 100).

Traditional models:

B-CNN [1]: CNN-based tree architecture; global hierarchical classifier.

CapsNet [2]: not a hierarchical model; flat classifier.

References:

[1] X. Zhu and M. Bain. B-CNN: branch convolutional neural network for hierarchical classification. arXiv preprint arXiv:1709.09890, 2017.

[2] S. Sabour, N. Frosst, and G. E. Hinton. Dynamic routing between capsules. Advances in Neural Information Processing Systems, 30, 2017.

[3] L. Deng. The MNIST database of handwritten digit images for machine learning research [best of the web]. IEEE Signal Processing Magazine, 29(6):141-142, 2012.

[4] H. Xiao, K. Rasul, and R. Vollgraf. Fashion-MNIST: a novel image dataset for benchmarking machine learning algorithms. arXiv preprint arXiv:1708.07747, 2017.

[5] Y. Seo and K.-s. Shin. Hierarchical convolutional neural networks for fashion image classification. Expert Systems with Applications, 116:328-339, 2019.

[6] L. Deng. The MNIST database of handwritten digit images for machine learning research [best of the web]. IEEE Signal Processing Magazine, 29(6):141-142, 2012.

Accuracy on training epoch

Figure: Accuracy as a function of training epoch for all the models under consideration on the MNIST and Fashion-MNIST datasets (panels: Fashion-MNIST dataset, MNIST dataset). ML-CapsNet achieved the highest accuracy.

Accuracy on training epoch

Figure: Accuracy as a function of training epoch for all the models under consideration on the CIFAR-10 and CIFAR-100 datasets (panels: CIFAR-10 dataset, CIFAR-100 dataset). ML-CapsNet achieved the highest accuracy.

Predictive Analytics

Level-wise metrics: confusion matrix per hierarchy; accuracy, precision, recall and F1-score per level.

Hierarchical metrics: Hierarchical Precision (HP), Hierarchical Recall (HR), Hierarchical F1-Score (HF1), Exact Match and Consistency.

Figure: Confusion matrix of ML-CapsNet on the Fashion-MNIST dataset (panels: Coarse Level, Medium Level, Fine Level).

Measurements Per Level

Figure: Model accuracy per hierarchical level on the test dataset for CapsNet, B-CNN and ML-CapsNet, at the coarse and fine levels of MNIST and the coarse, medium and fine levels of Fashion-MNIST, CIFAR-10 and CIFAR-100.

Figure: Precision, recall and F1-score per hierarchical level for CapsNet, B-CNN and ML-CapsNet on the same datasets and levels.

Hierarchical Measurement

Precision, recall and F1-score considering the hierarchy: Hierarchical Precision (HP), Hierarchical Recall (HR) and Hierarchical F1-Score (HF1).

Figure: HP, HR and HF1 for CapsNet, B-CNN and ML-CapsNet on MNIST, F-MNIST, CIFAR-10 and CIFAR-100.

Exact Match: measures the percentage of predictions that exactly match the ground truth at all levels.

Consistency: estimates the proportion of test examples whose predictions are consistent with the hierarchy structure, regardless of the ground truth.

Figure: Exact Match and Consistency for CapsNet, B-CNN and ML-CapsNet on MNIST, F-MNIST, CIFAR-10 and CIFAR-100.
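These metrics can be illustrated on a toy hierarchy. Exact Match and Consistency below follow the definitions given on the slide. The slide only names HP, HR and HF1, so the set-overlap form used here (shared labels across levels divided by predicted or true labels) is an assumption based on the common definition, and the labels, the `child_to_parent` layout and the function names are hypothetical.

```python
def exact_match(preds, truths):
    """Fraction of examples whose labels are correct at every level."""
    hits = sum(1 for p, t in zip(preds, truths) if tuple(p) == tuple(t))
    return hits / len(truths)


def is_consistent(path, child_to_parent):
    """True if each predicted label is a child of the label one level up.

    child_to_parent[h] maps a label at level h + 1 to its parent at level h.
    """
    return all(child_to_parent[h].get(path[h + 1]) == path[h]
               for h in range(len(path) - 1))


def consistency(preds, child_to_parent):
    """Fraction of predictions that respect the tree, ignoring ground truth."""
    return sum(is_consistent(p, child_to_parent) for p in preds) / len(preds)


def hierarchical_prf(preds, truths):
    """HP, HR and HF1 from the overlap of (level, label) pairs.

    ASSUMPTION: set-overlap definition; the slide does not give formulas.
    """
    overlap = pred_total = true_total = 0
    for p, t in zip(preds, truths):
        p_set, t_set = set(enumerate(p)), set(enumerate(t))
        overlap += len(p_set & t_set)
        pred_total += len(p_set)
        true_total += len(t_set)
    hp = overlap / pred_total
    hr = overlap / true_total
    hf1 = 2 * hp * hr / (hp + hr) if hp + hr else 0.0
    return hp, hr, hf1


# Toy three-level hierarchy and predictions (all values are made up).
child_to_parent = [
    {"Pet": "Animal", "Road": "Transport"},
    {"Cat": "Pet", "Dog": "Pet", "Truck": "Road"},
]
truths = [("Animal", "Pet", "Cat"), ("Transport", "Road", "Truck")]
preds = [("Animal", "Pet", "Dog"), ("Animal", "Road", "Truck")]
```

The toy predictions show why the two slide metrics differ: the first prediction is wrong at the fine level but still follows the tree, while the second has the right medium and fine labels but an inconsistent coarse label.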

Reconstructed Images

ML-CapsNet reconstruction: sharper and less blurred; better reconstruction loss.

Figure: Sample reconstructed images for the Fashion-MNIST dataset. Rows: Input Image, ML-CapsNet, CapsNet. Fine classes: T-shirt/top, Ankle boot, Trouser, Pullover, Dress, Coat, Sandal, Shirt, Sneaker, Bag.

Conclusion

Capsule network for hierarchical classification. Dedicated capsule layer per hierarchy. Loss function for balancing hierarchical level contribution. Consistency among the label structure. Improvement for hierarchical classification.

Thank You

More information: Khondaker Tasrif Noor, HDR Student, School of Information and Technology, Deakin University, Australia. Email: knoor@deakin.edu.au