Coefficient of Variation in Probability Distributions

Explore the dynamics of random variables through concepts like squared coefficient of variation, Erlang distribution analysis, and generalized Erlang models. Learn how different distributions impact traffic patterns and service mechanisms.

Download Presentation

Please find below an Image/Link to download the presentation.

The content on the website is provided AS IS for your information and personal use only. It may not be sold, licensed, or shared on other websites without obtaining consent from the author. If you encounter any issues during the download, it is possible that the publisher has removed the file from their server.

You are allowed to download the files provided on this website for personal or commercial use, subject to the condition that they are used lawfully. All files are the property of their respective owners.

The content on the website is provided AS IS for your information and personal use only. It may not be sold, licensed, or shared on other websites without obtaining consent from the author.

E N D

Presentation Transcript

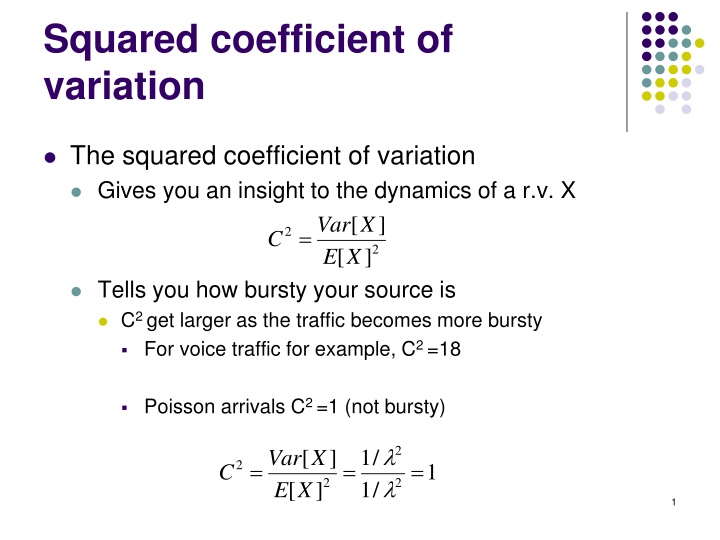

Squared coefficient of variation The squared coefficient of variation Gives you an insight to the dynamics of a r.v. X [ X ] Var X C = 2 2 [ ] E Tells you how bursty your source is C2 get larger as the traffic becomes more bursty For voice traffic for example, C2 =18 Poisson arrivals C2 =1 (not bursty) 2 [ X ] / 1 Var X = = = 2 1 C 2 2 [ ] / 1 E 1

Erlang, Hyper-exponential, and Coxian distributions Mixture of exponentials Combines a different # of exponential distributions Erlang E4 Service mechanism Hyper-exponential 1 P1 H3 2 P2 P3 3 Coxian C4 2

Erlang distribution: analysis = = 2 2 x x ( ) . 2 . ; ( ) . 2 . f x e f x e 1 2 1/2 1/2 1 2 X X 1 2 E2 . 2 = = * X * X ( ) ( ) f s f s + . 2 s 1 2 2 2 + = * ( ) f s Y 2 s Mean service time E[Y] = E[X1] + E[X2] =1/2 + 1/2 = 1/ Variance Var[Y] = Var[X1] + Var[X2] = 1/4 2 v +1/4 2 = 1/2 2 3

Squared coefficient of variation: analysis constant exponential C2 Erlang 0 1 Hypo-exponential X is a constant X = d => E[X] = d, Var[X] = 0 => C2 =0 X is an exponential r.v. E[X]=1/ ; Var[X] = 1/ 2 => C2 = 1 X has an Erlang r distribution E[X] = 1/ , Var[X] = 1/r 2 => C2 = 1/r fX *(s) = [r /(s+r )]r 4

Probability density function of Erlang r Let Y have an Erlang r distribution 1 . . r r y .( . . r ) . r r y e = ( ) f y Y ( 1 )! r = 1 Y is an exponential random variable r is very large The larger the r => the closer the C2 to 0 Er tends to infintiy => Y behaves like a constant E5 is a good enough approximation 5

Generalized Erlang Er Classical Erlang r E[Y] = r/ r r r Y Var[Y] = r/ 2 Generalized Erlang r Phases don t have same + r 1 2 Y + + = * ( ) . .... 1 2 r f s Y s s s 1 2 r + ... = 1 2 r + + ( )( )...( ) s s s 1 2 r 6

Generalized Erlang Er: analysis + ... = * 1 2 ( ) r f s Y + + ( )( )...( ) s s s 1 2 r If the Laplace transform of a r.v. Y Has this particular structure Y can be exactly represented by An Erlang Er Where the service rates of the r phase Are minus the root of the polynomials 7

Hyper-exponential distribution 1 P1 2 . . X P2 Pk k P1 + P2 + P3 + + Pk =1 Pdf of X? = + + ( ) . ( ) ... . ( ) f x P f x P f x 1 X X k X 1 k k = i = . x . e . P i i i 1 8

Hyper-exponential distribution:1st and 2nd moments * . ) ( s i i + = k = = i f s P X i 1 k P = [ ] i E X 1 i i 2 k = = 2 [ ] . E X P i 2 1 i i = 2 2 [ ] [ ] [ ] Var X E X E X P P = + [ ] 1 2 E X Example: H2 1 2 2 2 = + 2 [ ] . . E X P P 1 2 2 2 1 2 2 2 2 P P = + + [ ] . . 1 2 Var X P P 9 1 2 2 2 1 2 1 2

Hyper-exponential: squared coefficient of variation C2 = Var[X]/E[X]2 C2 is greater than 1 Example: H2 , C2 > 1 ? 2 2 + 2 / 2 P / P P = 2 1 1 1 1 2 2 C + 2 ( / / ) P 1 1 2 2 2 2 . 2 . P P P P P P + 0 1 2 1 2 1 P P 2 2 2 2 2 P P . 1 2 1 2 1 1 1 ( 1 P ) 1 ( 2 P ) . 2 . + 0 1 2 1 2 2 2 . 1 2 1 2 1 1 2 1 1 + = 2 ( ) 0 10 2 2 1 2 1 2 1 2

Coxian model: main idea Idea Instead of forcing the customer to get r exponential distributions in an Er model The customer will have the choice to get 1, 2, , r services Example C2 : when customer completes the first phase He will move on to 2nd phase with probability a Or he will depart with probability b (where a+b=1) a 11 b

Coxian model 2 3 4 1 a2 a3 a1 b1 b2 b3 1 b1 a1 b2 1 2 a1 a2 b3 1 2 3 a1 a2 a3 1 2 3 4 12

Coxian distribution: Laplace transform Laplace transform of Ck Is a fraction of 2 polynomials The denominator of order k and the other of order < k Polynomial _ order k = _ Polynomial order k Implication A Laplace transform that has this structure Can be represented by a Coxian distribution Where the order k = # phases, Roots of denominator = service rate at each phase = i 1 i k = l = + * ( ) 1 ( ) .... l f s a a a a a b 0 0 1 2 1 i i + s 13 1 l

Coxian model: conclusion Most Laplace transforms Are rational functions => Any distribution can be represented Exactly or approximately By a Coxian distribution 14

Coxian model: dimensionality problem A Coxian model can grow too big And may have as such a large # of phases To cope with such a limitation Any Laplace transform can be approximated by a Coxian 2 a 1 2 b=1-a The unknowns (a, 1, 2) can be obtained by Calculating the first 3 moments based on Laplace transform And then matching these against those of the C2 15

Some Properties of Coxian distribution Let X be a random variable Following the Coxian distribution With parameters 1, 2, and a PDF of this distribution ?1?2 ?2 ?1? ?1?+ ?1?2 ?1 ?2? ?2? ??? = 1 ? ?1? ?1?+ ? Laplace transform ?1 ?1 ?2 ?1? 1 ? +?1?2 ?2+ ?1+?2?+?1?2 ? = 1 ? ?? ?+?1+ ? ?+?2= ?+?1 16

First three moments of Coxian distribution By using ? ???? ?=0= 1??[??] ?? We have 1 ? ?2 ? ? = ?1+ 2??1?2 2? ?1+?22 ?1 12??1?2?1+?2 6? ?1+?23 ?1 ? ?2=2(1 ?) 2 2?2 2 ?1 ? ?3=6(1 ?) 3 3?2 3 ?1 Squared Coefficient of variation ?2= 1 2??1?2 ?1(1 ?) => ?2 [0.5, ) ?2+??12 For a Coxian k distribution => ?2 [1 17 ?, )

Approximating a general distribution Case I: c2 > 1 Approximation by a Coxian 2 distribution Method of moments Maximum likelihood estimation Minimum distance estimation Case II: c2 < 1 Approximation by a generalized Erlang distribution 18

Method of moments The first way of obtaining the 3 unknowns ( 1 2 a) 3 moments method Let m1, m2, m3 be the first three moments of the distribution which we want to approximate by a C2 The first 3 moments of a C2 are given by 1 ] [ a = + E X 1 2 2 a + 2 . 2 2 ( ) b a = 2 [ ] 1 2 1 2 E X 2 2 2 + 1 b 1 2 a + 3 6 12 ( ) 6 ( ) a = 3 1 2 1 2 1 2 [ ] E X 3 3 3 1 1 2 by equating m1=E(X), m2=E(X2), and m3 = E(X3), you get 19

3 moments method The following expressions will be obtained ? =?2 ?1?1?1 1 Let X= 1+ 2 and Y= 1 2, solving for X and Y ?2?1 6?2 4?1 ?3 1 2 and ?2= ? ?1 6?1 3 2 ?1+?2? 1 and ? = ? = 2?1 ?+ ?2 4? => ?1= 2 However, The following condition has to hold: X2 4Y >= 0 => 3?2 2< 2?1?3 20 Otherwise, resort to a two moment approximation

Two-moment approximation If the previous condition does not hold You can use the following two-moment fit 1 c = a 2 . 2 2 1 = = , 1 2 2 . m m c 1 1 General rule Use the 3 moments method If it doesn t do => use the 2 moment fit approximation 21

Maximum likelihood estimation A sample of observations from arbitrary distribution is needed Let sample of size N be x1, x2, , xN Likelihood of sample is: ? ??(??) Where ??? is the pdf of fitted Coxian distribution Product to summation transformation = ?=1 log(????) ? ? = ?=1 ? ??? ? ? Maximum likelihood estimate: ? = Maximize L subject to ?? 0,? = 1,2 and 0 ? 1 ?1, ?2, ? 22

Minimum distance estimation Objective Minimize distance Between fitted distribution and observed one => a sample observation of size N x1, x2, , xN is needed Computing formula for the distance 1 ? 0.5 ? , where ?2= 12?+ ?=1 ???? ? X(i) is the ith order statistic and F (x) is the fitted C2 A solution is obtained by minimizing the distance No closed form solution exists => a non-linear optimization algorithm can be used 23

Concluding remarks on case I If exact moments of arbitrary distribution are known Then the method of moments should be used Otherwise, Maximum likelihood or minimum distance estimations Give better results 24

Case II: c2 < 1 Generalized Erlang k can be used To approximate the arbitrary distribution In this case Service ends with probability 1-a or Continues through remaining k-1 phases with probability a The number of stages k should be such that 1 ? ?2 1 ? 1 Once k is fixed, parameters can be obtained as: ? = 1 2??2+? 2 ?2+4 4??21/2 2(?2+1)(? 1) ? =1+ ? 1 ? 25 ?1