Connectivity in Log N-Space Random Walks

Explore various algorithms such as random walks and non-uniform methods to determine connectivity between vertices in graphs when limited to O(log n) space. Delve into concepts like one-dimensional random walks and 2-SAT problem solving approaches.

Download Presentation

Please find below an Image/Link to download the presentation.

The content on the website is provided AS IS for your information and personal use only. It may not be sold, licensed, or shared on other websites without obtaining consent from the author. If you encounter any issues during the download, it is possible that the publisher has removed the file from their server.

You are allowed to download the files provided on this website for personal or commercial use, subject to the condition that they are used lawfully. All files are the property of their respective owners.

The content on the website is provided AS IS for your information and personal use only. It may not be sold, licensed, or shared on other websites without obtaining consent from the author.

E N D

Presentation Transcript

Connectivity froms to t in log n space random walks and non uniform algorithms

Is there is a path from s to t in an undirected graph If you have (n) space you can do BFS. But the question is what if there is an O(log n) space. No BSF. You can only hold a constant number of vertices at the same time. We are going to see how to do it with high probability via random walks. And how to give a non uniform algorithm.

One dimensional Random walk A random walk on a line. A line is n 0 1 2 3 4 5 If the walk is on 0 it goes into 1. Else it goes to i+1 or to i-1 with probability1/2 What is the expected number of steps to go to n?

The expected time function T(n)=0 T(i)=1+(T(i+1)+T(i-1))/2, i 0 T(0)=1+T(1) Add all equation gives T(n-1)=2n-1. From that we get: T(n-2)=4n-4 T(i)=2(n-i)n-(n-i)2 T(0)=n2

2-SAT: definition A 2-CNF formula: (x+ y)& ( x+ z) &(x+y) &(z+ x) (x+y) and the others are called CLAUSES CNF means that we want to satisfy all of them (x+ y) is satisfied if X=T or y=F. The question: is there a satisfying assignment? 2n is not a number the computer can run even for n=70

A random algorithm for 2-SAT Take an unsatisfied clause with variables x and y. Pick at random one of x and of y and change its value. Consider the distance to a satisfying assignment. You know that it can not be that the value of both x and y are the same as in a satisfying assignment. Because you took an unsatisfied clause and the satisfying assignment, satisfied all clauses.

Continued At most one of the x,y can have the same value as the satisfying assignment. In the worst case, say that x has the same value as the satisfying assignment and y has the opposite value to the satisfying assignment. Hence if we choose y the distance to the satisfying assignment drops by 1. If we choose x the distance goes up by 1. This is exactly like a random walk on a line and the expected number of steps until the satisfying assignment is at most n2.

Use the Markov inequality The expected time to reach a satisfying assignment is n2 So by the Markov inequality after 2n2 steps with probability at least we would find the assignment. This is the definition of an RP algorithm. This algorithm is due to Papadimitriou

Remark: the case of two literals very special If three literals per clause: NPC even if you can satisfy all clauses. Not only that, without any guarantee you can not be approximated better than 7/8 Given (x+ y+ z) if we draw a random value with probability 2/3 we increase the gap from a satisfying assignment and with probability 1/3 we decrease the distance.

Random Walks on undirected graphs Given a graph choose a neighbor at random with probability 1/d(v)

Random Walks Given a graph choose a vertex at random.

Random Walks Given a graph choose a vertex at random.

Random Walks Given a graph choose a vertex at random.

Random Walks Given a graph choose a vertex at random.

Markov chains Generalization of random walks on undirected graph. The graph is directed The sum over the values of outgoing edges is 1 but it does not need to be uniform. Column i: the probabilities pji Say we start with probability xito be in vertex i. 0 =(x1,x2, .,xn)

Changing from state to state Probability(state=i)= j xj * pji This is the inner product of the current state and column i of the matrix. Therefore if we are in distribution 0 after one step the distribution is at state: 1= 0 *P And after i stages its in i= 0 *(P)i What is a steady state?

Steady state Steady state is so that: *P= . For any i and any round the probability to be at i is the same. Conditions for convergence: Let hiithe time to get back to i from i. It must be finite. The graph needs to be aperiodic and irreducible.

Being irreducible and aporiodic Irreducible: If the graph is not strongly connected, you can get into a subgraph with no out edges. Depending on where you start you may get into different such sets. There is no way to get to a steady state. Being Aperiodic. This means that you can not have zero probability to be at some state i in even times if you start at some vertex u and you can not be in i in odd times if you start at a vertex v. This does not allow a steady state.

Undirected case: aperiodic means not bipartite graph If the graph is bipartite and V1and V2are its sets then if we start with V1we can not be on V1vertex in after odd number of transitions. Therefore a steady state is not possible. One way to avoid the graph being bipartite: make the probability to go to a neighbor 1/(2d) and add a self loop whose probability is . Namely, you stay at the same vertex with probability .

Fundamental theorem of Markov chains Theorem: Given an aperiodic irreducible MC so that hii is not infinite for any i, then: 1) There is a unique steady state so that *P= 2) hii=1/ i . Geometric distribution argument.

Because its unique you just have to find the correct For random walks in an undirected graph we claim that the steady state is: (2d1/m,2d2/m, ,2dm/m) It is trivial to show that this is the steady state. Multiply this vector and the i column. The only important ones are neighbors of i. So sum(j,i) E 1/dj*(2dj/m)=2di/m

The expected time to visit all the vertices of a graph A matrix is doubly stochastic if and only if all columns and all rows sum to 1. Exercise: show that doubly stochastic matrices imply uniform, namely, {1/n} steady state. Define a new Markov chain of edges with directions which means 2m states. It is very simple to compute the probability of going from an edge e (with direction) to an edge e (with direction). Exercise: show that the Matrix is doubly stochastic.

The time we spend on every edge By the above, we spend the same time on every edge in the both directions over all edges (of course this happens in the infinity: you have to curve the noise of the probabilities we started with). Now, say that I want to bound hijthe expected time we get from i to j.

Showing hij+hji 2m Assume that we start at i--->j By the Markov chain of the edges it will take 2m steps until we do this move again Forget about how you got to j (no memory). Since we are doing now i---->j again we know that: a) As it was in j, it returned to i. This is half the inequality hji b) Now it goes i----> j. This takes at most hij c) Since this takes at most 2m the claim follows.

Consider a spanning tree and any walk on the spanning tree Choose any paths that traverses the tree so that the walk goes from a parent to a child once and back once. Let i be the parent of j. Per parent child we have hij+hji 2m Thus over the n-1 edges of the graph the cover time is at most 2m(n-1)<n3

Tight example clique n/2 vertices u1 un/2 u2

Bridges Remark: for bridge edges hij+hji=2m (follows from proof). Say you are the intersection vertex u1of the clique and of the path. Its harder to go right than left. Thus it takes about n2time to go from u1to u2and the same time to go from u2to u3 etc. This gives (n3) Funny name: Lollipop graph.

Universal sequences Say that the graph is d-regular. And the edges of every vertex are denoted by 1,2, .,d. A walks is a series of integers in {1, .,d}. If the series is say 3,4,5,2 and start at u, then you go on the edge marked 3 of u, say over the edge uv. Then you go over the edge marked 4 at v and so on.

The length of the sequence Let L=4dn2(dn+2)(log2n+1) 2 m (n-1)<2n2 d Let N=4dn2, and think of the above as (dn+2)(log2n+1) different parts of sequences of length N. By previous proof with probability at most a fixed graph is not covered after N steps.

The probability that a graph is not covered. Since in N steps the probability that a graph is not covered is at most , doing it (dn+2)(log2n+1) times gives Probability n-2 log n n*d of a fixed graph not to be covered. We now use the union bound.

The union bound Thus we have to choose n*d edges out of less than n2to get a graph which is d regular. This is bounded by n2n*d graphs. Recall that the probability that a graph is not covered is exp(-nd*lg n) The union bound implies that with high probability all graphs are covered. Hence there is a sequence for which all graphs are covered with probability 1.

Non uniform algorithms These are algorithms that for any n, gets a string of size poly(n) (one string regardless of the different inputs) and then solves the problem. This class of problems that can be solved in polynomial time, in this way, is called P/poly It is known that BPP P/poly. We saw that the problem of checking if s and t are in the same connected component with O(log n) space belongs to P/poly. It also means that there is a polynomial circuit solving the problem. Still we do not know how to find the advise or the circuit and remove the randomization.

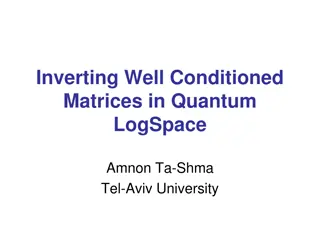

Omer Reingold: s,t connectivity with log(n) space. Omer Reingold used ideas very similar to Dinur to solve this problem that was open for ages. The idea here is to make the graph into an constant degree expander keeping in the new graph s and t in the same connected component. How much is a graph an expander depends on the second largest eigenvalue. An undirected graph, has a symmetric matrix and so the eigenvalues are real. If the graph d-regular, d is an eigenvalue.

Normalization Divide every value of the matrix by d. This makes the biggest eigenvalue 1. The expansion properties depend on how far Is the second eigenvalue from 1. If there is a constant distance, for example the second largest eigenvalue is at most 0.99, the graph is an expander

How to make the second eigenvalue smaller There is a lift stage using Rotation Maps, Zig Zag graph products that makes the second eigenvalue smaller. But the degree goes up by a lot. We have to return to bounded degree graphs (which means constant degree) which is done by replacing large degree vertices with constant degree expanders.

How the eigenvalues change. The distance of the second eigenvalue from 1 doubles at each stage. At start it is 1-1/n. Then 1-2/n, 1-4/n and so on. This means that after O(log n) iterations the second eigenvalue becomes bellow 0.99. The degree remain some constant.

After it is an expander The degree is constant say d. Put labels 1 to d on the edges of every vertex. Since the distance from s to t is O(log n) a single path is O(log n) numbers between 1 and d. Because d is constant it is possible to enumerate all paths between s and t in O(d log n)=O(log n) space. Without doubt: brilliant paper.