Convergence of Big Data and HPC: Applications, Benchmarks, and Libraries

This presentation discusses the convergence of big data and high-performance computing (HPC), focusing on applications, benchmarks, and libraries. It covers software architecture and a unified classification of big data and simulation applications by their features. Topics include geospatial information systems, HPC simulations, linear algebra kernels, the Internet of Things, optimization methodologies, and data classification, along with performance metrics, data volume, velocity, variety, veracity, and communication structures.


Presentation Transcript

Big Data, Simulations and HPC Convergence
BDEC: Big Data and Extreme-scale Computing, June 15-17 2016, Frankfurt
http://www.exascale.org/bdec/meeting/frankfurt
Geoffrey Fox, Judy Qiu, Shantenu Jha, Saliya Ekanayake, Supun Kamburugamuve
June 16, 2016
gcf@indiana.edu
http://www.dsc.soic.indiana.edu/, http://spidal.org/
Department of Intelligent Systems Engineering, School of Informatics and Computing, Digital Science Center, Indiana University Bloomington
http://hpc-abds.org/kaleidoscope/
5/17/2016 1

Components in Big Data HPC Convergence
- Applications, Benchmarks and Libraries
  - 51 NIST Big Data Use Cases, 7 Computational Giants of the NRC Massive Data Analysis, 13 Berkeley dwarfs, 7 NAS parallel benchmarks
  - Unified discussion by separately discussing data & model for each application; 64 facets (Convergence Diamonds) characterize applications
  - Pleasingly parallel or Streaming used for data & model; O(N^2) Algorithm relevant to model for big data or big simulation
  - Lustre v. HDFS just describes data
  - Volume large or small separately for data and model
  - Characterization identifies hardware and software features for each application across big data, simulation; complete set of benchmarks (NIST)
- Software Architecture and its implementation
  - HPC-ABDS: Cloud-HPC interoperable software: performance of HPC (High Performance Computing) and the rich functionality of the Apache Big Data Stack
  - Added HPC to Hadoop, Storm, Heron, Spark; will add to Beam and Flink
  - Work in Apache model contributing code
- Run same HPC-ABDS across all platforms but data management nodes have different balance in I/O, Network and Compute from model nodes
  - Optimize to data and model functions as specified by convergence diamonds
  - Do not optimize for simulation and big data

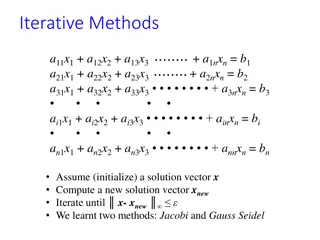

64 Features in 4 views for Unified Classification of Big Data and Simulation Applications
[Figure: Convergence Diamonds, Views and Facets. Facet labels recovered from the diagram, grouped by view:]
- Problem Architecture View (nearly all combination of Data+Model): 1 Pleasingly Parallel, 2 Classic MapReduce, 3 Map-Collective, 4 Map Point-to-Point, 5 Map Streaming, 6 Shared Memory, 7 Single Program Multiple Data, 8 Bulk Synchronous Parallel, 9 Fusion, 10 Dataflow, 11M Agents, 12 Workflow
- Execution View (the details: mix of Data and Model): Performance Metrics; Flops per Byte/Memory IO/Flops per watt; Execution Environment, Core libraries; Data Volume; Model Size; Data Velocity; Data Variety; Model Variety; Veracity; Communication Structure; Dynamic = D / Static = S (Data and Model); Regular = R / Irregular = I (Data and Model); Iterative / Simple; Data Abstraction; Model Abstraction; Data Metric = M / Non-Metric = N
- Data Source and Style View (nearly all Data; facets 1D to 10D): Geospatial Information System; HPC Simulations; Internet of Things; Metadata/Provenance; Shared / Dedicated / Transient / Permanent; Archived/Batched/Streaming (S1, S2, S3, S4, S5); HDFS/Lustre/GPFS; Files/Objects; Enterprise Data Model; SQL/NoSQL/NewSQL
- Processing View (all Model for simulations & data analytics; facets 1M to 22M)
  - Big Data Processing Diamonds, Analytics (Model for Data): Base Data Statistics, Recommender Engine, Data Search/Query/Index, Data Classification, Learning, Optimization Methodology, Streaming Data Algorithms, Data Alignment, Graph Algorithms, Visualization, Core Libraries
  - Both: Micro-benchmarks, Local (Analytics/Informatics/Simulations), Global (Analytics/Informatics/Simulations), Linear Algebra Kernels/Many subclasses
  - Simulation (Exascale) Processing Diamonds: Iterative PDE Solvers, Multiscale Method, Spectral Methods, N-body Methods, Particles and Fields, Evolution of Discrete Systems, Nature of mesh if used
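The Execution View lists "Flops per Byte" among its performance metrics. As a rough illustration of how that ratio separates kernels, here is a minimal sketch. It assumes 8-byte double-precision values and idealized memory traffic (each operand moved exactly once); the function names are hypothetical and not taken from the slides.

```python
BYTES_PER_DOUBLE = 8  # assumption: double-precision data


def flops_per_byte(flops, bytes_moved):
    """Arithmetic intensity: floating-point operations per byte of memory traffic."""
    return flops / bytes_moved


def daxpy_intensity(n):
    """y = a*x + y on vectors of length n: 2 flops per element,
    two loads (x, y) and one store (y) per element."""
    return flops_per_byte(2 * n, 3 * n * BYTES_PER_DOUBLE)


def matmul_intensity(n):
    """Dense n x n matrix multiply: 2*n^3 flops; idealized traffic
    reads A and B once and writes C once (3*n^2 values)."""
    return flops_per_byte(2 * n**3, 3 * n**2 * BYTES_PER_DOUBLE)


if __name__ == "__main__":
    print(daxpy_intensity(1_000_000))  # low and independent of n: memory bound
    print(matmul_intensity(1200))      # grows linearly with n: compute bound
```

Under these assumptions the vector update stays at a fixed low ratio regardless of size, while dense matrix multiply improves as the matrices grow, which is one reason the two classes of kernel stress hardware so differently.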

HPC-ABDS Kaleidoscope of (Apache) Big Data Stack (ABDS) and HPC Technologies
21 layers, over 350 Software Packages (January 29 2016)
17) Workflow-Orchestration: ODE, ActiveBPEL, Airavata, Pegasus, Kepler, Swift, Taverna, Triana, Trident, BioKepler, Galaxy, IPython, Dryad, Naiad, Oozie, Tez, Google FlumeJava, Crunch, Cascading, Scalding, e-Science Central, Azure Data Factory, Google Cloud Dataflow, NiFi (NSA), Jitterbit, Talend, Pentaho, Apatar, Docker Compose, KeystoneML
16) Application and Analytics: Mahout, MLlib, MLbase, DataFu, R, pbdR, Bioconductor, ImageJ, OpenCV, Scalapack, PetSc, PLASMA, MAGMA, Azure Machine Learning, Google Prediction API & Translation API, mlpy, scikit-learn, PyBrain, CompLearn, DAAL (Intel), Caffe, Torch, Theano, DL4j, H2O, IBM Watson, Oracle PGX, GraphLab, GraphX, IBM System G, GraphBuilder (Intel), TinkerPop, Parasol, Dream:Lab, Google Fusion Tables, CINET, NWB, Elasticsearch, Kibana, Logstash, Graylog, Splunk, Tableau, D3.js, three.js, Potree, DC.js, TensorFlow, CNTK
15B) Application Hosting Frameworks: Google App Engine, AppScale, Red Hat OpenShift, Heroku, Aerobatic, AWS Elastic Beanstalk, Azure, Cloud Foundry, Pivotal, IBM BlueMix, Ninefold, Jelastic, Stackato, appfog, CloudBees, Engine Yard, CloudControl, dotCloud, Dokku, OSGi, HUBzero, OODT, Agave, Atmosphere
15A) High level Programming: Kite, Hive, HCatalog, Tajo, Shark, Phoenix, Impala, MRQL, SAP HANA, HadoopDB, PolyBase, Pivotal HD/Hawq, Presto, Google Dremel, Google BigQuery, Amazon Redshift, Drill, Kyoto Cabinet, Pig, Sawzall, Google Cloud DataFlow, Summingbird
14B) Streams: Storm, S4, Samza, Granules, Neptune, Google MillWheel, Amazon Kinesis, LinkedIn, Twitter Heron, Databus, Facebook Puma/Ptail/Scribe/ODS, Azure Stream Analytics, Floe, Spark Streaming, Flink Streaming, DataTurbine
14A) Basic Programming model and runtime, SPMD, MapReduce: Hadoop, Spark, Twister, MR-MPI, Stratosphere (Apache Flink), Reef, Disco, Hama, Giraph, Pregel, Pegasus, Ligra, GraphChi, Galois, Medusa-GPU, MapGraph, Totem
13) Inter process communication (Collectives, point-to-point, publish-subscribe): MPI, HPX-5, Argo BEAST HPX-5 BEAST PULSAR, Harp, Netty, ZeroMQ, ActiveMQ, RabbitMQ, NaradaBrokering, QPid, Kafka, Kestrel, JMS, AMQP, Stomp, MQTT, Marionette Collective. Public Cloud: Amazon SNS, Lambda, Google Pub Sub, Azure Queues, Event Hubs
12) In-memory databases/caches: Gora (general object from NoSQL), Memcached, Redis, LMDB (key value), Hazelcast, Ehcache, Infinispan, VoltDB, H-Store
12) Object-relational mapping: Hibernate, OpenJPA, EclipseLink, DataNucleus, ODBC/JDBC
12) Extraction Tools: UIMA, Tika
11C) SQL (NewSQL): Oracle, DB2, SQL Server, SQLite, MySQL, PostgreSQL, CUBRID, Galera Cluster, SciDB, Rasdaman, Apache Derby, Pivotal Greenplum, Google Cloud SQL, Azure SQL, Amazon RDS, Google F1, IBM dashDB, N1QL, BlinkDB, Spark SQL
11B) NoSQL: Lucene, Solr, Solandra, Voldemort, Riak, ZHT, Berkeley DB, Kyoto/Tokyo Cabinet, Tycoon, Tyrant, MongoDB, Espresso, CouchDB, Couchbase, IBM Cloudant, Pivotal Gemfire, HBase, Google Bigtable, LevelDB, Megastore and Spanner, Accumulo, Cassandra, RYA, Sqrrl, Neo4J, graphdb, Yarcdata, AllegroGraph, Blazegraph, Facebook Tao, Titan:db, Jena, Sesame. Public Cloud: Azure Table, Amazon Dynamo, Google DataStore
11A) File management: iRODS, NetCDF, CDF, HDF, OPeNDAP, FITS, RCFile, ORC, Parquet
10) Data Transport: BitTorrent, HTTP, FTP, SSH, Globus Online (GridFTP), Flume, Sqoop, Pivotal GPLOAD/GPFDIST
9) Cluster Resource Management: Mesos, Yarn, Helix, Llama, Google Omega, Facebook Corona, Celery, HTCondor, SGE, OpenPBS, Moab, Slurm, Torque, Globus Tools, Pilot Jobs
8) File systems: HDFS, Swift, Haystack, f4, Cinder, Ceph, FUSE, Gluster, Lustre, GPFS, GFFS. Public Cloud: Amazon S3, Azure Blob, Google Cloud Storage
7) Interoperability: Libvirt, Libcloud, JClouds, TOSCA, OCCI, CDMI, Whirr, Saga, Genesis
6) DevOps: Docker (Machine, Swarm), Puppet, Chef, Ansible, SaltStack, Boto, Cobbler, Xcat, Razor, CloudMesh, Juju, Foreman, OpenStack Heat, Sahara, Rocks, Cisco Intelligent Automation for Cloud, Ubuntu MaaS, Facebook Tupperware, AWS OpsWorks, OpenStack Ironic, Google Kubernetes, Buildstep, Gitreceive, OpenTOSCA, Winery, CloudML, Blueprints, Terraform, DevOpSlang, Any2Api
5) IaaS Management from HPC to hypervisors: Xen, KVM, QEMU, Hyper-V, VirtualBox, OpenVZ, LXC, Linux-Vserver, OpenStack, OpenNebula, Eucalyptus, Nimbus, CloudStack, CoreOS, rkt, VMware ESXi, vSphere and vCloud, Amazon, Azure, Google and other public Clouds. Networking: Google Cloud DNS, Amazon Route 53
Cross-Cutting Functions
1) Message and Data Protocols: Avro, Thrift, Protobuf
2) Distributed Coordination: Google Chubby, Zookeeper, Giraffe, JGroups
3) Security & Privacy: InCommon, Eduroam, OpenStack Keystone, LDAP, Sentry, Sqrrl, OpenID, SAML, OAuth
4) Monitoring: Ambari, Ganglia, Nagios, Inca

HPC-ABDS Activities of NSF14-43054
- Level 17: Orchestration: Apache Beam (Google Cloud Dataflow)
- Level 16: Applications: Datamining for molecular dynamics, Image processing for remote sensing and pathology, graphs, streaming, bioinformatics, social media, financial informatics, text mining
- Level 16: Algorithms: Generic and application specific; SPIDAL Library
- Level 14: Programming: Storm, Heron (Twitter replaces Storm), Hadoop, Spark, Flink. Improve Inter- and Intra-node performance; science data structures
- Level 13: Runtime Communication: Enhanced Storm and Hadoop (Spark, Flink, Giraph) using HPC runtime technologies, Harp
- Level 11: Data management: Hbase and MongoDB integrated via use of Beam and other Apache tools; enhance Hbase
- Level 9: Cluster Management: Integrate Pilot Jobs with Yarn, Mesos, Spark, Hadoop; integrate Storm and Heron with Slurm
- Level 6: DevOps: Python Cloudmesh virtual Cluster Interoperability

Convergence Language: Recreating Java Grande
- 128 24-core Haswell nodes on SPIDAL Data Analytics
- Best Java factor of 10 faster than "out of the box"; comparable to C++
- Speedup compared to 1 process per node on 48 nodes
[Figure: speedup plot. Legend: Best MPI, inter and intra node; MPI inter/intra node, Java not optimized; Best Threads intra node, MPI inter node]

Some Confusing Issues; Missing Requirements; Missing Consensus I
- Different Problem Types
  - Data Management v. Data Analytics
  - Every problem has Data & Model; which is Big/Important?
  - Streaming v Batch; Interactive v Batch
  - Science Requirements v. Commercial Requirements; are they similar? What are important problems; how big are they and are they global or locally parallel?
- Broad Execution Issues
  - Pleasingly Parallel (Local Machine Learning) v. Global Machine Learning
  - Fine grain v. Coarse Grain parallelism; workflow (dataflow with directed graph) v. parallel computing (tight synchronization and ~BSP)
  - Threads v Processes
  - Objects v files; HDFS v Lustre

Local and Global Machine Learning
- Many applications use LML, or Local Machine Learning, where machine learning (often from R, Python or Matlab) is run separately on every data item, such as on every image
- But others are GML, Global Machine Learning, where machine learning is a basic algorithm run over all data items (over all nodes in the computer)
  - maximum likelihood or χ² with a sum over the N data items (documents, sequences, items to be sold, images etc.) and often links (point-pairs)
  - GML includes Graph analytics, clustering/community detection, mixture models, topic determination, Multidimensional scaling, (Deep) Learning Networks
- Note Facebook may need lots of small graphs (one per person and ~LML) rather than one giant graph of connected people (GML)
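The LML/GML distinction can be sketched in a few lines. This is an illustrative toy under stated assumptions, not code from the talk: the "local model" is just a per-item mean, the "global model" is a single scalar scored by a chi-squared sum over all items, and the lists standing in for nodes are hypothetical.

```python
from statistics import mean


def local_ml(items):
    """LML: fit an independent model (here, a mean) to each data item.
    Items never interact, so this is pleasingly parallel."""
    return [mean(item) for item in items]


def global_chi2(partitions, model_value, sigma=1.0):
    """GML: one chi-squared objective summed over all N data items.
    Each partition (standing in for a node) computes a partial sum,
    and the partial sums are then reduced into a single value."""
    partial_sums = [
        sum(((x - model_value) / sigma) ** 2 for x in part)
        for part in partitions
    ]
    return sum(partial_sums)  # the reduction step (a collective on a real cluster)


if __name__ == "__main__":
    images = [[1.0, 3.0], [2.0, 4.0, 6.0]]   # two independent data items
    print(local_ml(images))                  # one result per item, no communication

    nodes = [[1.0, 2.0], [3.0]]              # the same dataset split across two "nodes"
    print(global_chi2(nodes, model_value=2.0))
```

The local case needs no communication between items, while the global case ends every evaluation with a reduction across partitions; on a real cluster that reduction is where collective communication enters.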

Some confusing issues; Missing Requirements; Missing Consensus II
- Qualitative Aspects of Approach
  - Need for Interdisciplinary Collaboration
  - Trade-off between Performance and Productivity
  - What about software sustainability? Should we do all with Apache?
  - Academic v. Industry; who is leading?
- Many choices in all parts of System
  - Virtualization: HPC v Docker v OpenStack (OpenNebula)
  - Apache Beam v. Kepler for orchestration and lots of other HPC v Apache or Apache v Apache choices e.g. Beam v. Crunch v. NiFi
  - What Language should be used: Python/R/Matlab, C++, Java
  - 350 Software systems in HPC-ABDS collection with lots of choice
  - HPC simulation stack well defined and highly optimized; user makes few choices

Some confusing issues; Missing Requirements; Missing Consensus III
- What is the appropriate hardware?
  - Depends on answers to what are requirements and software choices
  - What is flexible cost effective hardware; at universities? In public clouds?
  - HPC v. HTC (high throughput) v. Cloud
  - Value of GPUs and other innovative node hardware
- Miscellaneous Issues
  - Big Data Performance analysis often rudimentary (compared to HPC)
  - What is the Big Data Stack?
  - Trade-off between integrated systems versus using a collection of independent components
  - What are parallelization challenges?
  - Library of hand optimized code versus automatic parallelization and domain specific libraries
  - Can DevOps be used more systematically to promote interoperability?
  - Orchestration v. Management; TOSCA v. BPEL (Heat v. Beam)

Some confusing issues; Missing Requirements; Missing Consensus IV
- Status of field
  - What problems need to be solved?
  - What is pretty universally agreed?
  - What is understood (by some) but not broadly agreed?
  - What is not understood and needs substantially more work?
- Is there an interesting Big Data Exascale Convergence?
- Role of Data Science? Curriculum of Data Science?
- Role of Benchmarks

51 Detailed Use Cases: Contributed July-September 2013
Covers goals, data features such as 3 V's, software, hardware
26 Features for each use case; biased to science
http://bigdatawg.nist.gov/usecases.php
https://bigdatacoursespring2014.appspot.com/course (Section 5)
- Government Operation (4): National Archives and Records Administration, Census Bureau
- Commercial (8): Finance in Cloud, Cloud Backup, Mendeley (Citations), Netflix, Web Search, Digital Materials, Cargo shipping (as in UPS)
- Defense (3): Sensors, Image surveillance, Situation Assessment
- Healthcare and Life Sciences (10): Medical records, Graph and Probabilistic analysis, Pathology, Bioimaging, Genomics, Epidemiology, People Activity models, Biodiversity
- Deep Learning and Social Media (6): Driving Car, Geolocate images/cameras, Twitter, Crowd Sourcing, Network Science, NIST benchmark datasets
- The Ecosystem for Research (4): Metadata, Collaboration, Language Translation, Light source experiments
- Astronomy and Physics (5): Sky Surveys including comparison to simulation, Large Hadron Collider at CERN, Belle Accelerator II in Japan
- Earth, Environmental and Polar Science (10): Radar Scattering in Atmosphere, Earthquake, Ocean, Earth Observation, Ice sheet Radar scattering, Earth radar mapping, Climate simulation datasets, Atmospheric turbulence identification, Subsurface Biogeochemistry (microbes to watersheds), AmeriFlux and FLUXNET gas sensors
- Energy (1): Smart grid

7 Computational Giants of NRC Massive Data Analysis Report
http://www.nap.edu/catalog.php?record_id=18374
Big Data Models?
1) G1: Basic Statistics e.g. MRStat
2) G2: Generalized N-Body Problems
3) G3: Graph-Theoretic Computations
4) G4: Linear Algebraic Computations
5) G5: Optimizations e.g. Linear Programming
6) G6: Integration e.g. LDA and other GML
7) G7: Alignment Problems e.g. BLAST

HPC (Simulation) Benchmark Classics
Simulation Models
- Linpack or HPL: Parallel LU factorization for solution of linear equations
- NPB version 1: Mainly classic HPC solver kernels
  - MG: Multigrid
  - CG: Conjugate Gradient
  - FT: Fast Fourier Transform
  - IS: Integer sort
  - EP: Embarrassingly Parallel
  - BT: Block Tridiagonal
  - SP: Scalar Pentadiagonal
  - LU: Lower-Upper symmetric Gauss Seidel
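To give a concrete feel for the kind of solver kernel these benchmarks exercise, below is a minimal sketch of the textbook conjugate gradient iteration for a small dense symmetric positive-definite system. It is not the NPB CG benchmark code, which works on a large sparse matrix; the function name and the 2x2 test system are illustrative assumptions.

```python
def conjugate_gradient(A, b, tol=1e-10, max_iter=100):
    """Solve A x = b for a symmetric positive-definite matrix A
    (given as a list of rows) with the conjugate gradient method."""
    n = len(b)
    x = [0.0] * n
    r = list(b)               # residual b - A x, with x = 0 initially
    p = list(r)               # first search direction
    rs_old = sum(ri * ri for ri in r)
    for _ in range(max_iter):
        if rs_old ** 0.5 < tol:
            break             # residual norm small enough
        # Matrix-vector product: the dominant cost of each iteration.
        Ap = [sum(A[i][j] * p[j] for j in range(n)) for i in range(n)]
        alpha = rs_old / sum(p[i] * Ap[i] for i in range(n))
        x = [x[i] + alpha * p[i] for i in range(n)]
        r = [r[i] - alpha * Ap[i] for i in range(n)]
        rs_new = sum(ri * ri for ri in r)
        # New direction is conjugate to the previous ones.
        p = [r[i] + (rs_new / rs_old) * p[i] for i in range(n)]
        rs_old = rs_new
    return x


if __name__ == "__main__":
    A = [[4.0, 1.0], [1.0, 3.0]]
    b = [1.0, 2.0]
    print(conjugate_gradient(A, b))  # close to [1/11, 7/11]
```

Each iteration is a matrix-vector product plus a few dot products; in a distributed setting the dot products become global reductions, which is why this kernel stresses both memory bandwidth and collective communication.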

13 Berkeley Dwarfs
Largely Models for Data or Simulation
1) Dense Linear Algebra
2) Sparse Linear Algebra
3) Spectral Methods
4) N-Body Methods
5) Structured Grids
6) Unstructured Grids
7) MapReduce
8) Combinational Logic
9) Graph Traversal
10) Dynamic Programming
11) Backtrack and Branch-and-Bound
12) Graphical Models
13) Finite State Machines
First 6 of these correspond to Colella's original (classic simulations). Monte Carlo dropped. N-body methods are a subset of Particle in Colella.
Note a little inconsistent in that MapReduce is a programming model and spectral method is a numerical method.
Need multiple facets to classify use cases!

Data and Model in Big Data and Simulations
- Need to discuss Data and Model as problems combine them, but we can get insight by separating them, which allows better understanding of Big Data - Big Simulation convergence (or differences!)
- Big Data implies Data is large but Model varies
  - e.g. LDA with many topics or deep learning has large model
  - Clustering or Dimension reduction can be quite small for model
- Simulations can also be considered as Data and Model
  - Model is solving particle dynamics or partial differential equations
  - Data could be small when just boundary conditions
  - Data large with data assimilation (weather forecasting) or when data visualizations are produced by simulation
- Data often static between iterations (unless streaming); Model varies between iterations
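The remark that data is often static between iterations while the model varies can be seen in a small clustering example. The sketch below is a toy one-dimensional k-means, chosen here only for illustration (the slides name clustering as a small-model case but do not give code); the data points and starting centers are assumptions.

```python
def kmeans_1d(data, centers, iterations=10):
    """Toy 1D k-means. `data` is read-only across iterations (the Data);
    `centers` is rewritten every iteration (the Model)."""
    centers = list(centers)
    for _ in range(iterations):
        sums = [0.0] * len(centers)
        counts = [0] * len(centers)
        for x in data:
            # Assign each point to its nearest center.
            k = min(range(len(centers)), key=lambda j: abs(x - centers[j]))
            sums[k] += x
            counts[k] += 1
        # Update the model: each center moves to the mean of its points.
        centers = [
            sums[k] / counts[k] if counts[k] else centers[k]
            for k in range(len(centers))
        ]
    return centers


if __name__ == "__main__":
    data = [1.0, 2.0, 9.0, 10.0]          # static data: never modified
    print(kmeans_1d(data, [0.0, 5.0]))    # model: two centers, far smaller than the data
```

Here the model is just two numbers however many points are supplied, which matches the slide's observation that clustering can have a small model even when the data is large.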

Functionality of 21 HPC-ABDS Layers
Here are 21 functionalities (including 11, 14, 15 subparts): 4 cross-cutting at top, then 17 in order of layered diagram starting at bottom
1) Message Protocols
2) Distributed Coordination
3) Security & Privacy
4) Monitoring
5) IaaS Management from HPC to hypervisors
6) DevOps
7) Interoperability
8) File systems
9) Cluster Resource Management
10) Data Transport
11) A) File management B) NoSQL C) SQL
12) In-memory databases & caches / Object-relational mapping / Extraction Tools
13) Inter process communication: Collectives, point-to-point, publish-subscribe, MPI
14) A) Basic Programming model and runtime, SPMD, MapReduce B) Streaming
15) A) High level Programming B) Frameworks
16) Application and Analytics
17) Workflow-Orchestration


Improvement of Storm (Heron) using HPC communication algorithms

Dual Convergence Architecture
Running same HPC-ABDS across all platforms but data management machine has different balance in I/O, Network and Compute from model machine
[Figure: grid of nodes labeled C and D. Legend: Data Management; Model for Big Data and Big Simulation]