Cross-Modal Generative Error Correction Framework

This presentation covers Whispering LLaMA, a cross-modal generative error correction framework for speech recognition. It builds on the two-pass rescoring paradigm: Whisper, a multi-task encoder-decoder transformer speech model trained on 680,000 hours of multilingual data, produces n-best hypotheses and audio representations, and LLaMA generates the error-corrected transcript. The method fuses linguistic and acoustic features at the token level to refine transcriptions.

Presentation Transcript

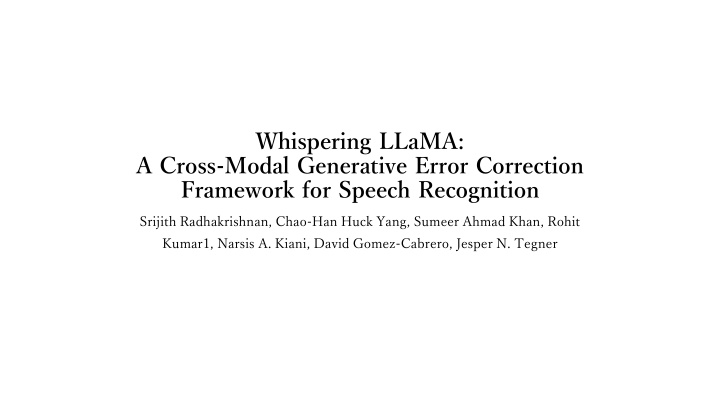

Whispering LLaMA: A Cross-Modal Generative Error Correction Framework for Speech Recognition

Srijith Radhakrishnan, Chao-Han Huck Yang, Sumeer Ahmad Khan, Rohit Kumar, Narsis A. Kiani, David Gomez-Cabrero, Jesper N. Tegner

Introduction

Two-pass rescoring paradigm: the first-pass ASR system generates n-best hypotheses using an end-to-end (E2E) acoustic model, and the second pass re-ranks these hypotheses by incorporating a language model (LM).

Advantages: an LLM often captures a more comprehensive understanding of language structures. Adapting the two-pass paradigm to domain shifts is also much easier, because only the language model needs to be fine-tuned on the new dataset.

In this paper, we present a token-level fusion framework that merges two pre-trained foundation models (Whisper and LLaMA) into a recognition error correction paradigm.

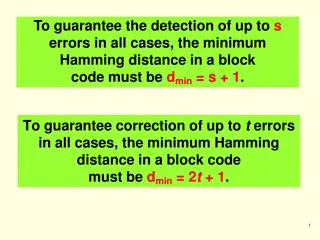

Related Work

Transformer-based language models approach the two-pass paradigm by using the sum of the negative log-likelihoods of individual tokens from the language model to re-score the n-best output.

Deliberation methods and audio-attention-based rescoring improve ASR-LM rescoring by incorporating acoustic features.

Decoder prompting and encoder-decoder-based error correction have demonstrated the benefits of using an external language model for reducing the transcription error rate.
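The summed negative log-likelihood rescoring described above can be sketched in a few lines. This is a minimal illustration, not the method of any cited work: the unigram table `UNIGRAM`, its probabilities, and the helper names are hypothetical, and a real language model would condition each token on its left context.

```python
import math

# Hypothetical unigram probabilities standing in for a real language model.
UNIGRAM = {"show": 0.2, "me": 0.2, "flights": 0.2, "to": 0.2,
           "boston": 0.1, "bostin": 0.001}


def toy_logprob(token):
    """Log-probability of a token; unseen tokens get a tiny floor probability."""
    return math.log(UNIGRAM.get(token, 1e-6))


def sentence_nll(tokens, token_logprob):
    """Sum of the negative log-likelihoods of the individual tokens."""
    return -sum(token_logprob(tok) for tok in tokens)


def rescore(nbest, token_logprob):
    """Sort hypotheses so the lowest total NLL (most likely) comes first."""
    return sorted(nbest, key=lambda hyp: sentence_nll(hyp.split(), token_logprob))


nbest = ["show me flights to bostin", "show me flights to boston"]
best = rescore(nbest, toy_logprob)[0]
print(best)
```

The second pass never sees the audio in this sketch, which is the gap that acoustic-feature rescoring methods try to close.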

Method

Whisper: a multi-task encoder-decoder transformer speech model trained on 680,000 hours of multilingual data. It encodes audio representations and generates transcripts of n-best hypotheses.

LLaMA: a decoder-based large language transformer model. It generates error-corrected transcripts, taking as input the n-best hypotheses via a prompt and the audio representations via our proposed framework.

Method

Incorporate two residual adapter modules ($A_L^i$, $A_W^i$) after the self-attention modules ($SA_L^i$) of the frozen LLaMA in each layer.

$A_L^i$: the adapter at layer $i$ used to fine-tune the LLaMA model using a scaled dot-product attention mechanism.

$A_W^i$: the adapter at layer $i$ used to fuse Whisper features with the LLaMA model by following an autoencoder mechanism.

Method

In each LLaMA adapter $A_L^i$, incorporate a learnable matrix $M_\theta^i \in \mathbb{R}^{N_\theta \times N_L}$, where $N_\theta$ is the dimension of the adapter embeddings and $N_L$ is the dimension of the LLaMA embeddings.

We repurpose the frozen LLaMA linear layers $K_{llama}^i$, $V_{llama}^i$ from the LLaMA self-attention modules ($SA_L^i$) to transform $M_\theta^i$ into key and value pairs.

The language embedding feature extracted from the pretrained LLM is represented by $H_L^i$ for each layer.
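To make the adapter idea concrete, here is a minimal single-head sketch in plain Python: a learnable matrix is passed through frozen key and value projections, and the language features attend over the result with scaled dot-product attention before a residual add. Several things are assumptions for illustration only: the queries are taken from the hidden states through a query projection `w_q`, there is no gating or multi-head split, and all names and toy sizes are hypothetical.

```python
import math


def matmul(a, b):
    """Plain nested-list matrix product: (n x k) times (k x m)."""
    return [[sum(x * y for x, y in zip(row, col)) for col in zip(*b)] for row in a]


def softmax(row):
    """Numerically stable softmax over one row of scores."""
    peak = max(row)
    exps = [math.exp(v - peak) for v in row]
    total = sum(exps)
    return [e / total for e in exps]


def adapter_attention(hidden, m_theta, w_q, w_k, w_v):
    """hidden: (T x N_L) language features; m_theta: (N_theta x N_L) learnable matrix."""
    n_l = len(hidden[0])
    q = matmul(hidden, w_q)        # queries from the language features (assumption)
    k = matmul(m_theta, w_k)       # frozen key layer applied to the adapter matrix
    v = matmul(m_theta, w_v)       # frozen value layer applied to the adapter matrix
    k_t = [list(col) for col in zip(*k)]
    scores = matmul(q, k_t)        # (T x N_theta)
    weights = [softmax([s / math.sqrt(n_l) for s in row]) for row in scores]
    update = matmul(weights, v)    # (T x N_L)
    # Residual connection: add the adapter output to the original features.
    return [[h + u for h, u in zip(h_row, u_row)] for h_row, u_row in zip(hidden, update)]


identity = [[1.0, 0.0], [0.0, 1.0]]
hidden = [[1.0, 0.0]]
m_theta = [[1.0, 0.0], [0.0, 1.0]]
out = adapter_attention(hidden, m_theta, identity, identity, identity)
print(out)
```

Only `m_theta` would be trained in such a setup; the projection weights stay frozen, which is what keeps the approach parameter-efficient.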

Method

To integrate the audio representations and key-value tensors from the Whisper decoder cross-attention module, we introduce two additional frozen linear transformations, $K_{whisper}^i$ and $V_{whisper}^i$. By applying the audio representations to these additional linear transformations, we generate key-value pairs that mirror the ones produced by Whisper.

Apply two learnable projection matrices, $M_{down}^i \in \mathbb{R}^{N_W \times N_W/r}$ and $M_{up}^i \in \mathbb{R}^{N_W/r \times N_W}$, where $N_W$ is the size of the Whisper encoded audio representations. The audio representations are the hidden representations from the Whisper encoder.
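The pair of learnable projection matrices can be read as a bottleneck (autoencoder-style) adapter: project down to a smaller width, then back up. The sketch below is an illustration under stated assumptions, not the authors' implementation: the reduction factor `r`, the toy width `N_W = 8`, the identity-style initialization, and the absence of any nonlinearity between the two projections are all choices made here for clarity.

```python
def rect_identity(rows, cols):
    """Rectangular 'identity': ones on the main diagonal, zeros elsewhere."""
    return [[1.0 if i == j else 0.0 for j in range(cols)] for i in range(rows)]


def project(vec, matrix):
    """Row vector (length = rows) times a (rows x cols) matrix."""
    cols = len(matrix[0])
    return [sum(v * matrix[i][j] for i, v in enumerate(vec)) for j in range(cols)]


def bottleneck(x, m_down, m_up):
    """Down-project to the narrow width, then up-project back to the input width."""
    return project(project(x, m_down), m_up)


N_W, r = 8, 4                                  # toy sizes (hypothetical)
m_down = rect_identity(N_W, N_W // r)          # (N_W x N_W/r)
m_up = rect_identity(N_W // r, N_W)            # (N_W/r x N_W)

x = [float(i + 1) for i in range(N_W)]         # stand-in audio feature vector
y = bottleneck(x, m_down, m_up)
print(y)

# Trainable parameters: two thin matrices instead of one full N_W x N_W matrix.
adapter_params = 2 * N_W * (N_W // r)
full_params = N_W * N_W
print(adapter_params, full_params)
```

With this initialization the first `N_W / r` components pass through unchanged, so training starts from a simple, structure-preserving map. A larger `r` means a narrower bottleneck and fewer trainable parameters.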

Method

Once we obtain the corresponding Whisper key and value pairs, we apply a padding mechanism to adjust the shape of $K_{whisper}^i$ and $V_{whisper}^i$ so that they match their LLaMA counterparts. The padding enables the computation of multi-head attention and preserves the latent structure of the Whisper key and value embeddings.

Then, we utilize a gated fusion mechanism, Whispering-LLaMA (WL), to fuse all the modules together.

Method

Weight initialization

Padding: we initialize a matrix of zeros whose shape is defined by the number of heads (NH), the context length (T), and the head size (HS), and fill the principal diagonal of the last two dimensions with ones. We then place $K_{whisper}^i$ and $V_{whisper}^i$ in the top-left corner of the padding template.

Projection matrices: $M_{down}^i$ and $M_{up}^i$ are initialized as identity matrices.
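The padding template can be illustrated in two dimensions: start from zeros, put ones on the principal diagonal, then copy the smaller matrix into the top-left corner. This is a simplified sketch; the function names and toy sizes are hypothetical, and the actual tensors would carry an extra leading dimension for the attention heads.

```python
def padding_template(size):
    """Zeros with ones on the principal diagonal (a square identity template)."""
    return [[1.0 if i == j else 0.0 for j in range(size)] for i in range(size)]


def pad_top_left(small, size):
    """Place `small` in the top-left corner of the padding template."""
    padded = padding_template(size)
    for i, row in enumerate(small):
        for j, value in enumerate(row):
            padded[i][j] = value
    return padded


small = [[2.0, 3.0],
         [4.0, 5.0]]          # stand-in for a smaller Whisper weight block
padded = pad_top_left(small, 4)
for row in padded:
    print(row)
```

The remaining diagonal ones make the padded region behave like an identity map, so the extra dimensions pass through untouched instead of being zeroed out.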

Experimental Setup

Models: LLaMA-7B (Alpaca) and Whisper-Large V2, with Whisper-Tiny used for generating transcripts. Our proposed framework uses $N_\theta = 10$ and $r = 8, 16, 32$, named $WL_L$, $WL_M$, and $WL_S$ respectively; an additional variant uses two separate $M_\theta$ for key and value.

Datasets: the Airline Travel Information System (ATIS; semantically correct, domain-specific) contains audio recordings of individuals querying flight information. GigaSpeech (noisy, real-world setting) contains audio from audiobooks, podcasts, and YouTube videos on diverse topics.

Experimental Setup

Training: the input consists of encoded audio representations (Whisper-Large) and 15-best transcripts (Whisper-Tiny), formatted with the prompt template of the Alpaca model.

Optimizer: Adam, with learning rates of 0.01, 0.001, and 0.0005. Epochs: 25; batch size: 32; weight decay: 0.01.

Hardware: 2 x A100 GPUs.
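As a rough illustration of how n-best transcripts might be packed into an Alpaca-style prompt, the sketch below uses the standard Alpaca template with an input field. The instruction wording and the numbered-list layout of the hypotheses are assumptions made for this example; the exact text used in the experiments is not given here.

```python
# Standard Alpaca prompt template (variant with an input field).
ALPACA_TEMPLATE = (
    "Below is an instruction that describes a task, paired with an input that "
    "provides further context. Write a response that appropriately completes "
    "the request.\n\n"
    "### Instruction:\n{instruction}\n\n"
    "### Input:\n{input}\n\n"
    "### Response:\n"
)

# Hypothetical instruction text, chosen only for illustration.
INSTRUCTION = "Correct the speech recognition errors using the candidate transcripts."


def build_prompt(nbest, instruction=INSTRUCTION):
    """Format the n-best hypotheses as a numbered list inside the prompt."""
    numbered = "\n".join(f"{i}. {hyp}" for i, hyp in enumerate(nbest, 1))
    return ALPACA_TEMPLATE.format(instruction=instruction, input=numbered)


prompt = build_prompt(["show me flights to bostin", "show me flight to boston"])
print(prompt)
```

The model's target during fine-tuning would be the reference transcript that follows the response marker.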

Performance

$WL_M$ achieves the best performance, with a relative word error rate reduction (WERR) of 37.66%. Using separate adapters for key and value does not result in performance improvements.
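For readers unfamiliar with the metric, the sketch below computes word error rate (WER) with a word-level edit distance and then the relative reduction between a baseline and a corrected system. It assumes the common definition WERR = (WER_baseline - WER_system) / WER_baseline, expressed as a percentage; the example sentences are made up and do not come from the reported experiments.

```python
def word_errors(reference, hypothesis):
    """Word-level Levenshtein distance (substitutions, insertions, deletions)."""
    ref, hyp = reference.split(), hypothesis.split()
    prev = list(range(len(hyp) + 1))
    for i, ref_word in enumerate(ref, 1):
        cur = [i]
        for j, hyp_word in enumerate(hyp, 1):
            cur.append(min(prev[j] + 1,                       # deletion
                           cur[j - 1] + 1,                    # insertion
                           prev[j - 1] + (ref_word != hyp_word)))  # substitution
        prev = cur
    return prev[-1]


def wer(references, hypotheses):
    """Total word errors divided by total reference words."""
    errors = sum(word_errors(r, h) for r, h in zip(references, hypotheses))
    words = sum(len(r.split()) for r in references)
    return errors / words


def werr(wer_baseline, wer_system):
    """Relative WER reduction in percent (assumed definition)."""
    return 100.0 * (wer_baseline - wer_system) / wer_baseline


refs = ["show me flights to boston"]
baseline = ["show me flight to bostin"]     # two word errors
corrected = ["show me flights to bostin"]   # one word error

wer_base = wer(refs, baseline)
wer_corr = wer(refs, corrected)
print(wer_base, wer_corr, werr(wer_base, wer_corr))
```

Because WERR is relative, the same absolute WER drop counts for more when the baseline WER is already low.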

Performance

Text normalization can improve performance on the GigaSpeech dataset.

The ground truth is removed if it is present among the Whisper-generated n-best hypotheses.

Ground Truth Match Rate: the percentage of predictions generated by the model that exactly match the ground truth.
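Both steps on this slide are simple to express in code. The sketch below filters the ground truth out of an n-best list and computes an exact-match percentage; the function names and example strings are hypothetical, and no text normalization is applied before comparison.

```python
def drop_ground_truth(nbest, truth):
    """Remove the ground-truth transcript from the n-best list if present."""
    return [hyp for hyp in nbest if hyp != truth]


def match_rate(predictions, truths):
    """Percentage of predictions that exactly match the ground truth."""
    matches = sum(pred == truth for pred, truth in zip(predictions, truths))
    return 100.0 * matches / len(truths)


truth = "show me flights to boston"
nbest = ["show me flights to bostin", truth, "show me flight to boston"]
filtered = drop_ground_truth(nbest, truth)
print(filtered)

predictions = ["a b c", "a b d", "x y", "x y z"]
truths = ["a b c", "a b c", "x y", "x y"]
print(match_rate(predictions, truths))
```

Removing the ground truth from the input means a model cannot score well by simply copying one of the hypotheses, so an exact match reflects genuine correction.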

Conclusion

We propose a novel framework that leverages external knowledge from an LLM to improve the transcription accuracy of ASR systems. It presents a parameter-efficient way to integrate large foundation speech and language models and achieves competitive WERR improvements.

Limitations: incorporating audio representations into the training pipeline extends the training duration by 394.76%. A larger volume of data is needed to achieve optimal performance.

Future work: integrating this baseline into both ESPnet and HyPoradise.