Developing ACO Measure Set Maine | Buying Value Analysis

Explore the development of an ACO measure set for Maine through the Buying Value Analysis, addressing the importance of measure alignment and its impact on providers and healthcare systems. Discover insights on national measures landscape, measure selection processes in various states, key policy decisions, and potential next steps for Maine's measure selection process.

Presentation Transcript

Developing an ACO Measure Set for Maine
Michael Bailit, December 10, 2013

Agenda:
1. National Measures Landscape: Buying Value Analysis
2. Measure Selection Processes in Other States
3. Key Policy Decisions in Measure Selection
4. Potential Next Steps for Maine's Measure Selection Process

The Buying Value study
Buying Value is a private purchaser-led project to accelerate adoption of value purchasing in the private sector. Its participants believe that selecting appropriate performance measures is fundamental to the success of a value-based payment system, and the project convened a payer and purchaser group to promote measure alignment among private and public purchasers, health plans, providers, and state and federal officials.

Why is lack of alignment problematic?
Measure misalignment creates what providers experience as "measure chaos" when they are subject to multiple measure sets, each with its own accountability expectations and sometimes financial implications. Mixed signals from the market make it difficult for providers to focus their quality improvement efforts and lead them to tune out some payers and some measures.

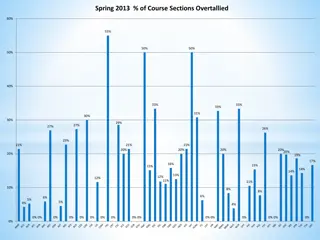

Background on the Buying Value analysis
As part of its effort to promote measure alignment, Buying Value commissioned a recent report from Bailit studying the alignment of state-level measure sets. For this analysis, Bailit gathered 48 measure sets used for different program types, and designed for different purposes, across 25 states and three regional collaboratives.
Program type examples: ACO, PCMH, Medicaid managed care plan, exchange.
Program purpose examples: reporting, payment, reporting and payment, multi-payer alignment.

Measure sets by state
AR, CA (7), CO, FL, IA (2), ID, IL, LA, MA (8), MD, ME (2), MI, MN (2), MO (3), MT, NY, OH, OK, OR, PA (4), RI, TX, UT (2), WA, WI
Reviewed 48 measure sets used by 25 states. Intentionally gave a closer look at two states: CA and MA.
Note: Where more than one measure set from a state was reviewed, the number of sets included in the analysis is shown in parentheses.

Analysis included two measure sets from Maine:
1. The Systems Core Measure Set, obtained from the Maine Health Management Coalition
2. The measure set used in Maine's multi-payer PCMH pilot

Measure sets ranged significantly in size
Largest: 108 measures. Average: 29 measures. Smallest: 3 measures.
Note: Measures are counted as NQF counts them (or, if the measure was not NQF-endorsed, as the program counted them).

Buying Value study findings
There are many measures in use today. In total, we identified 1367 measures across the 48 measure sets, of which 509 were distinct measures.
Current state and regional measure sets are not aligned. Only 20% of the 509 distinct measures were used by more than one program.
Non-alignment persists despite a preference for standard measures. Most measures come from a known source (e.g., NCQA, AHRQ) and are NQF-endorsed.
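These findings rest on three counts:
- every appearance of a measure in a set (1367);
- the distinct measures among those appearances (509);
- the share of distinct measures that appear in more than one set (20%).

The sketch below shows one way to compute those counts. The function name `alignment_summary` and the toy measure sets are hypothetical. They are not the study's data or tooling. The toy also treats each measure name as one measure, whereas the study counted measures as NQF counts them.

```python
from collections import Counter


def alignment_summary(measure_sets):
    """Return (total appearances, distinct measures, share of distinct
    measures used by more than one set)."""
    # Count how many sets use each measure; set() guards against a set
    # listing the same measure twice.
    usage = Counter(m for measures in measure_sets.values() for m in set(measures))
    total = sum(usage.values())   # every appearance across all sets
    distinct = len(usage)         # unique measures
    shared = sum(1 for n in usage.values() if n > 1)
    return total, distinct, shared / distinct


# Hypothetical toy data, not the measure sets reviewed in the study.
toy_sets = {
    "Program A": ["Breast Cancer Screening", "CDC: HbA1c Testing", "Homegrown measure 1"],
    "Program B": ["Breast Cancer Screening", "Controlling High Blood Pressure"],
    "Program C": ["Breast Cancer Screening", "CDC: HbA1c Testing", "Homegrown measure 2"],
}

print(alignment_summary(toy_sets))  # (8, 5, 0.4)
```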

The distinct measures were spread across all eight measure domains
Distinct measures by domain, n = 509:
Access, affordability and inappropriate care: 11%
Communication and care coordination: 5%
Utilization: 8%
Secondary prevention and treatment: 28%
Health and well-being: 14%
Person-centered: 11%
Safety: 19%
Infrastructure: 4%

States prefer to use standard measures
Measures by measure type, n = 1367: standard 59%; modified 17%; homegrown 15%; undetermined 6%; other 3%.
Defining terms:
Standard: measures from a known source (e.g., NCQA, AHRQ)
Modified: standard measures with a change to the standard specifications
Homegrown: measures that were indicated on the source document as having been created by the developer of the measure set
Undetermined: measures that were not indicated as homegrown, but for which the source could not be identified
Other: a measure bundle or composite

Finding: programs show a strong preference for NCQA's HEDIS measures
NCQA (HEDIS) measures and the types of the non-HEDIS measures, n = 1367: HEDIS 66%; homegrown 14%; standard and modified non-HEDIS 11%; undetermined 6%; other 3%.

But only 16% of the distinct measures come from HEDIS
HEDIS measures and the sources of the non-HEDIS measures, n = 509: homegrown 39%; HEDIS 16%; undetermined 15%; standard sources with fewer than 10 measures 13%; Resolution Health 5%; AHRQ 4%; CMS 4%; AMA-PCPI 4%.
In other words, the 81 HEDIS measures are used over and over again.
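The two HEDIS slides fit together once you do some arithmetic. HEDIS measures account for about 66% of the 1367 appearances but only 81 of the 509 distinct measures.

The sketch below estimates how many sets use an average HEDIS measure versus an average non-HEDIS measure. It assumes each appearance of a measure is in a different measure set. Because the slide rounds the 66% figure, the results are approximate. `reuse_rates` is a hypothetical helper.

```python
def reuse_rates(total_uses, distinct, hedis_distinct, hedis_use_share):
    """Estimate (HEDIS share of distinct measures, average sets per HEDIS
    measure, average sets per non-HEDIS measure)."""
    hedis_uses = hedis_use_share * total_uses   # approximate HEDIS appearances
    other_uses = total_uses - hedis_uses        # all remaining appearances
    return (
        hedis_distinct / distinct,
        hedis_uses / hedis_distinct,
        other_uses / (distinct - hedis_distinct),
    )


# Figures from the two slides; 0.66 is rounded on the slide.
share, per_hedis, per_other = reuse_rates(1367, 509, 81, 0.66)
print(f"{share:.1%} {per_hedis:.1f} {per_other:.1f}")  # 15.9% 11.1 1.1
```

On these rough numbers, an average HEDIS measure appears in about 11 sets. An average non-HEDIS measure appears in about one.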

Finding: little alignment across the measure sets
There is very little coordination or sharing across the measure sets. Of the 1367 measures, 509 were distinct measures, and only 20% of these distinct measures were used by more than one program (shared 20%, not shared 80%).
Number of distinct measures shared by multiple measure sets, n = 509

How often are the shared measures shared? Not that often
Measures not shared: 80%. Shared measures: 20%, broken down by the number of sets using them (distinct measures, share of 509): 2 sets, 28 (5%); 3-5 sets, 20 (4%); 6-10 sets, 21 (4%); 11-15 sets, 14 (3%); 16-30 sets, 19 (4%).
Only 19 measures were shared by at least one-third (16 or more) of the measure sets. Most measures are not shared.
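The bucket counts on this slide are consistent with the 20% headline figure. The quick check below uses only the numbers shown on the slide.

```python
DISTINCT = 509  # distinct measures in the study
N_SETS = 48     # measure sets reviewed

# Distinct measures by the number of sets that use them (from the slide).
shared_buckets = {
    "2 sets": 28,
    "3-5 sets": 20,
    "6-10 sets": 21,
    "11-15 sets": 14,
    "16-30 sets": 19,
}

shared_total = sum(shared_buckets.values())
not_shared = DISTINCT - shared_total
print(shared_total, not_shared, f"{shared_total / DISTINCT:.0%}")  # 102 407 20%
for bucket, count in shared_buckets.items():
    print(f"{bucket:>10}: {count:3d} ({count / DISTINCT:.1%})")

# "At least one-third of the measure sets" means 16 or more of 48.
print(N_SETS // 3)  # 16
```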

Categories of the 19 most frequently used measures
Diabetes care (7): Comprehensive Diabetes Care (CDC): LDL-C Control <100 mg/dL; CDC: Hemoglobin A1c (HbA1c) Control (<8.0%); CDC: Medical Attention for Nephropathy; CDC: HbA1c Testing; CDC: HbA1c Poor Control (>9.0%); CDC: LDL-C Screening; CDC: Eye Exam
Preventive care (6): Breast Cancer Screening; Cervical Cancer Screening; Childhood Immunization Status; Colorectal Cancer Screening; Weight Assessment and Counseling for Children and Adolescents; Tobacco Use: Screening & Cessation Intervention
Other chronic conditions (4): Controlling High Blood Pressure; Use of Appropriate Medications for People with Asthma; Cardiovascular Disease: Blood Pressure Management <140/90 mmHg; Cholesterol Management for Patients with Cardiovascular Conditions
Mental health/substance abuse (1): Follow-up after Hospitalization for Mental Illness
Patient experience (1): CAHPS surveys (various versions)

Programs are selecting different subsets of standard measures
While the programs may be primarily using standard, NQF-endorsed measures, they are not selecting the same standard measures. Not one measure was used by every program. Breast Cancer Screening was the most frequently used measure, and it was used by only 30 of the programs (63%).

State measure set variation
Regardless of how we cut the data (by program type, program purpose, or domain, and within CA and MA), the programs were not aligned.

Conclusions from the Buying Value study
1. Measure sets appear to be developed independently, without an eye toward alignment with other sets.
2. The diversity in available measures allows states and regions interested in creating measure sets to select measures that they believe best meet their local needs.
3. Even the few who seek to create alignment struggle due to a paucity of tools to facilitate such alignment.
4. Alignment with other programs won't happen by chance; it must be a goal that is considered throughout the measure set development process.

Measure selection in other states
Bailit has supported multiple states and regions through a measure selection process. The next slides draw from four of those experiences: Colorado; Oregon; Pennsylvania (AF4Q South Central Pennsylvania); and Vermont.
The slides that follow provide a brief overview of the different approaches to measure selection and discuss how these factors influenced each unique outcome.

Case Example #1: Colorado Medicaid
Status: Measure set in development
Purpose: Key performance indicator measure set for Regional Care Collaborative Organizations (RCCOs) within the Medicaid program
Type of process: Informal internal deliberation, to be followed by external stakeholder vetting
Stakeholders involved: State Medicaid agency staff, thus far

Case Example #1: Colorado Medicaid
Key criteria for measure selection: distribution across target populations and within domains of interest; alignment with other state programs; feasibility
Unique interests: coordination of care measures; social determinants of health measures; inclusion of creative non-standardized measures

Case Example #2: Oregon Medicaid
Status: Two largely overlapping measure sets implemented 1-1-13: CMS waiver accountability and CCO incentive pool
Purpose: Incentive measure set for Coordinated Care Organizations (CCOs) required by CMS as part of the state's Medicaid section 1115 waiver
Type of process: Formal, state-staffed committee process
Stakeholders involved: Legislatively mandated physician advisory committee, CCO representatives and health services researchers

Case Example #2: Oregon Medicaid
Key criteria for measure selection: representative of the array of services provided and beneficiaries served by the CCOs; measures must be valid and reliable; reliance on national measure sets whenever possible
Unique interests: transformative potential; state-specific opportunities for improvement relative to national benchmarks

Case Example #3: AF4Q South Central Pennsylvania
Status: Completed but not implemented
Purpose: Multi-payer aligned commercial measure set for PCMHs in the region
Type of process: Formal committee process managed by AF4Q staff and key stakeholders
Stakeholders involved: One leading payer and one leading hospital system, with intermittent involvement of other local health plans, hospital systems and employers

Case Example #3: AF4Q South Central Pennsylvania
Key criteria for measure selection: distribution across domains of interest; alignment with federal measure sets; feasibility
Unique interests: alignment with pre-existing stakeholder-developed measure sets; relevance for patient-centered medical homes

Case Example #4: Vermont multi-payer ACO
Status of project: Completed and slated to be implemented 1-1-14
Purpose: Measure set for coordinated Medicaid and commercial insurer ACO pilot program
Type of process: Formal, state-staffed committee process
Stakeholders involved: Potential ACOs, payers (commercial and Medicaid), consumer advocates, provider associations, quality improvement organization

Case Example #4: Vermont multi-payer ACO
Key criteria for measure selection: representative of the array of services provided and beneficiaries served; NQF-endorsed measures that have relevant benchmarks whenever possible
Unique interests: alignment with the Medicare Shared Savings Program; alignment with HEDIS; state-specific opportunities for improvement relative to national benchmarks; use of systems measures, utilization measures and pending measures

Six Key Policy Decisions in Measure Selection
1. Intended use
2. Standardized vs. transformative/creative measures
3. Data source: clinical data vs. claims vs. survey
4. Operationalizing clinical data measures: electronic capture vs. sampling methodology
5. Areas of importance vs. opportunities for improvement
6. Alignment with other programs vs. program-specific measures

1. Intended use
Measures can be used for multiple purposes. The first question to ask when forming a measure set is "measurement to what end?" Some measures may be more appropriate for some uses than for others at a particular point in time.
Some potential measure uses: assessing performance relative to goals and expectations; qualifying and/or modifying payment; observing states and trends.

2. Standardized vs. innovative measures
Standard measures
Pros: measure already vetted for validity and reliability; enables comparisons with other programs; potentially offers national and/or regional benchmark information; often facilitates implementation due to clearly defined specifications; facilitates alignment with other measure programs
Cons: may not offer an assessment of a specific performance attribute of interest
Innovative measures
Pros: enables programs to customize measures to suit their specific needs; offers a measurement solution for areas in which there is a dearth of standard measures; can be used to develop future standardized measures
Cons: measures may not be valid or reliable; do not support comparisons with other programs, benchmarking or alignment across programs; may be challenging to implement

3. Data source: clinical data vs. claims vs. patient survey
The source of measures often depends on program capacity, electronic infrastructure and resources.
Clinical data-based measures
Pros: provides opportunity to assess clinical processes and outcomes not found in claims
Cons: requires either a sophisticated electronic reporting system or resource-intensive chart review
Claims-based measures
Pros: relatively easy to implement; baseline data easier to generate than for clinical data
Cons: generally limited to process measures and some outcome measures
Survey measures
Pros: only mechanism for assessing patient experience
Cons: resource-intensive; can be burdensome to patients if other surveys are commonly administered

4. Operationalizing clinical data measures: electronic capture vs. sampling methodology
Electronic capture
Pros: once infrastructure is in place, can potentially generate a low administrative burden
Cons: requires implementation of a sophisticated electronic reporting system, especially for ACOs with many independent providers using different EHRs; requires substantial upfront investment
Sampling methodology
Pros: relatively straightforward to implement; most HEDIS measures have established protocols for chart review
Cons: resource-intensive; administratively burdensome; cost is recurring and does not diminish over time
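This table sets a large upfront investment for electronic capture against a chart-review cost that recurs and does not diminish. A cumulative-cost comparison makes that trade-off concrete.

The sketch below is hypothetical. The function name, the flat annual costs, and the cost figures are illustrative assumptions, not estimates from the presentation.

```python
def breakeven_year(upfront, electronic_annual, chart_review_annual, horizon=10):
    """Return the first year in which cumulative electronic-capture cost is at
    or below cumulative chart-review (sampling) cost, or None if that does not
    happen within the horizon. Assumes flat annual costs (hypothetical)."""
    for year in range(1, horizon + 1):
        electronic = upfront + electronic_annual * year  # one-time build + upkeep
        sampling = chart_review_annual * year            # recurring every year
        if electronic <= sampling:
            return year
    return None


# Hypothetical costs in arbitrary units, not from the presentation.
print(breakeven_year(upfront=500, electronic_annual=50, chart_review_annual=200))  # 4
```

With these made-up figures, electronic capture catches up in year 4. Suppose instead the annual chart-review cost is no higher than the ongoing electronic cost. Then electronic capture never catches up, and the function returns None.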

5. Areas of importance vs. opportunities for improvement
Should the program focus on measures for areas of importance regardless of baseline performance, or focus on known opportunities for improvement?
Areas of importance (conditions, procedures, etc.)
Pros: assesses what matters most to patient health; can facilitate alignment with other measure programs
Cons: if performance is strong and there aren't opportunities for improvement, measurement results won't have operational application
Opportunities for improvement
Pros: focuses important incentive dollars where they can have an impact on quality
Cons: may lead the program to focus on niche populations; may create misalignment with other programs; requires baseline and benchmark information
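One way to put the "opportunities for improvement" approach into practice is to compare baseline performance with a benchmark. Measures are then ranked by the size of the gap. This requires the baseline and benchmark information noted in the table.

The sketch below assumes higher rates are better for every measure. Inverse measures such as HbA1c Poor Control would need their gap reversed. The function name and the rates are invented for illustration and are not Maine or national data.

```python
def rank_opportunities(baseline, benchmark, min_gap=0.0):
    """Rank measures by how far baseline performance falls below benchmark.

    Assumes higher rates are better for every measure passed in.
    """
    gaps = {m: benchmark[m] - baseline[m] for m in baseline if m in benchmark}
    below = [(m, gap) for m, gap in gaps.items() if gap > min_gap]
    return sorted(below, key=lambda item: item[1], reverse=True)


# Hypothetical rates for illustration only.
baseline = {
    "Breast Cancer Screening": 0.78,
    "Childhood Immunization Status": 0.62,
    "Colorectal Cancer Screening": 0.55,
}
benchmark = {
    "Breast Cancer Screening": 0.74,
    "Childhood Immunization Status": 0.75,
    "Colorectal Cancer Screening": 0.65,
}

ranked = rank_opportunities(baseline, benchmark)
print([name for name, _ in ranked])
# ['Childhood Immunization Status', 'Colorectal Cancer Screening']
```

In this example, Breast Cancer Screening drops out because its hypothetical baseline already exceeds the benchmark. This is the case where, per the table, measuring an important area may have little operational application.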

6. Alignment with other programs vs. program-specific measures
Aligned measures
Pros: facilitates system reform by focusing providers on specific quality goals through consistent market signals; enables comparisons with other programs; potentially offers national and/or regional benchmark information
Cons: may not address the priority goals of the program; may not offer the precise measurement tool desired
Program-focused measures
Pros: enables programs to encourage providers to focus on areas that would otherwise be forgotten; enables programs to select measures to address their specific priorities
Cons: may foster measure chaos by sending mixed signals to providers about where they should put their focus; may be deprioritized or ignored if the populations or related measure incentives are too small
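One way to gauge how aligned a candidate set is: count how many of its measures already appear in other programs' sets in the same market.

The sketch below is hypothetical. `alignment_share`, the `min_programs` threshold, and the example sets are illustrative assumptions, not a method from the presentation.

```python
def alignment_share(candidate, other_sets, min_programs=1):
    """Return the fraction of candidate measures used by at least
    `min_programs` of the other measure sets, plus the aligned measures.
    Assumes `candidate` is non-empty."""
    aligned = [
        m for m in candidate
        if sum(m in other for other in other_sets) >= min_programs
    ]
    return len(aligned) / len(candidate), aligned


# Hypothetical measure sets for illustration only.
candidate = [
    "Breast Cancer Screening",
    "Controlling High Blood Pressure",
    "Program-specific care coordination measure",
]
other_sets = [
    {"Breast Cancer Screening", "Controlling High Blood Pressure"},
    {"Breast Cancer Screening", "Colorectal Cancer Screening"},
]

print(alignment_share(candidate, other_sets))
print(alignment_share(candidate, other_sets, min_programs=2))
```

A low share flags program-specific measures that may add to measure chaos. Whether that is acceptable remains the policy judgment weighed in the table.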

Potential next steps for creating an ACO measure set for Maine
1. Determine the type of process and the stakeholders to be involved.
2. Establish a timeline for completion and create a work plan to meet appropriate deadlines.
3. Agree on the goals/objectives for the measure set.
4. Establish measure selection criteria.
5. Use your measures library, which includes all measure sets currently in use in Maine or recommended for consideration.

Potential next steps for creating an ACO measure set for Maine
6. Use the selection criteria to remove measures from the library.
7. Agree upon a draft measure set.
8. Review the draft set to determine whether it achieves the program's goals, making adjustments as necessary.
9. Finalize the measure set.
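Step 6 amounts to filtering the measures library against the agreed criteria. The sketch below uses criteria that echo the case examples: NQF endorsement, available benchmarks, and feasibility. The `Measure` fields, the example measures, and their attributes are hypothetical. They would be replaced by whatever criteria the Maine process adopts.

```python
from dataclasses import dataclass


@dataclass
class Measure:
    name: str
    nqf_endorsed: bool
    has_benchmark: bool
    feasible: bool


def apply_criteria(library, criteria):
    """Keep only the measures that pass every selection criterion."""
    return [m for m in library if all(check(m) for check in criteria)]


# Hypothetical criteria; each is a predicate on a Measure.
criteria = [
    lambda m: m.nqf_endorsed,
    lambda m: m.has_benchmark,
    lambda m: m.feasible,
]

# Hypothetical library entries, not real measure attributes.
library = [
    Measure("Measure A", nqf_endorsed=True, has_benchmark=True, feasible=True),
    Measure("Measure B", nqf_endorsed=False, has_benchmark=False, feasible=True),
    Measure("Measure C", nqf_endorsed=True, has_benchmark=False, feasible=True),
]

shortlist = apply_criteria(library, criteria)
print([m.name for m in shortlist])  # ['Measure A']
```

Several case examples applied criteria "whenever possible." In practice, then, some criteria may work better as preferences than as hard filters.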

Final considerations
Reaching agreement on a core measure set takes considerable time and effort: many facilitated meetings, staff work to prepare for each meeting, and a willingness to compromise.
Measure sets are not static. They need to be reviewed each year and modified based on implementation experience, changing clinical standards, changing priorities, and (hopefully) improved performance.

Contact information
Michael Bailit, MBA
mbailit@bailit-health.com
781-453-1166