Efficient Conformers via Sharing Experts for Speech Recognition

"Explore parameter-efficient Conformers that share sparsely-gated experts for end-to-end speech recognition. Leveraging knowledge distillation and cross-layer weight sharing, this approach achieves competitive performance with significantly fewer encoder parameters. Discover attention-based encoder-decoder models over acoustic feature sequences and Conformer-based Seq2Seq models that predict ASR text. Delve into Conformer encoders, MoE modules, and parameter-sharing techniques for effective model optimization."


Presentation Transcript

Parameter-Efficient Conformers via Sharing Sparsely-Gated Experts for End-to-End Speech Recognition
Ye Bai, Jie Li, Wenjing Han, Hao Ni, Kaituo Xu, Zhuo Zhang, Cheng Yi, Xiaorui Wang
Kuaishou Technology Co., Ltd, Beijing, China

Index Terms: parameter-efficient, sparsely-gated mixture-of-experts, Conformer (Transformer + CNN), cross-layer weight sharing, knowledge distillation

Introduction
Parameter-efficient Conformers + sharing sparsely-gated experts + knowledge distillation = competitive performance with only 1/3 of the encoder parameters of the full-parameter model.

Background: Conformer-based Seq2Seq Models for ASR
Attention-based encoder-decoder (AED) models consist of an encoder, which captures high-level representations from the acoustic features, and a decoder, which predicts the text sequence token by token with the attention mechanism. The acoustic feature sequence is $X = [x_0, x_1, \ldots, x_{T-1}]$ of length $T$, and the text token sequence is $Y = [y_0, y_1, \ldots, y_S]$ of length $S+1$, where $y_0$ is the start-of-sentence symbol <sos> and $y_S$ is the end-of-sentence symbol <eos>.

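The model $\mathrm{Trfm}$ predicts the probability of each text token conditioned on the previous tokens and the acoustic features, $P(y_s \mid y_{<s}, X)$. It is trained with the maximum likelihood criterion, i.e., by minimizing the negative log-likelihood of the reference tokens:
$$\mathcal{L}_{\mathrm{MLE}} = -\sum_{s=1}^{S} \log P(y_s \mid y_{<s}, X)$$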
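A minimal numpy sketch of the maximum-likelihood criterion under teacher forcing, assuming the decoder has already produced one row of scores per target position $s = 1, \ldots, S$. The function names `log_softmax` and `mle_loss` are hypothetical, not taken from the authors' code:

```python
import numpy as np

def log_softmax(logits):
    # Numerically stable log-softmax over the vocabulary axis.
    shifted = logits - logits.max(axis=-1, keepdims=True)
    return shifted - np.log(np.exp(shifted).sum(axis=-1, keepdims=True))

def mle_loss(logits, targets):
    # logits:  (S, V) decoder scores; row s is assumed to be computed from the
    #          prefix y_<s and the acoustic features X (teacher forcing).
    # targets: (S,) reference token ids y_1..y_S, ending with <eos>.
    # Returns the negative log-likelihood -sum_s log P(y_s | y_<s, X).
    log_probs = log_softmax(logits)
    return -log_probs[np.arange(len(targets)), targets].sum()

# Toy check: uniform scores over a 4-token vocabulary for 3 target tokens.
uniform = np.zeros((3, 4))
print(mle_loss(uniform, np.array([1, 2, 3])))  # ≈ 4.1589 (= 3 * log 4)
```

Each target token contributes $-\log P(y_s \mid y_{<s}, X)$, so a model that spreads probability uniformly over $V$ tokens pays $\log V$ per token.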

Sharing Sparsely-Gated Experts
The parameter-efficient model is built from sparsely-gated mixture-of-experts (MoE) modules whose experts are shared across layers.
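To see why sharing the experts saves parameters, the sketch below counts MoE parameters with and without a shared expert pool. It assumes (hypothetically) that each expert is a two-layer FFN with biases, that every layer keeps its own bias-free linear router, and that the sizes are illustrative only, not the paper's configuration:

```python
def ffn_params(d_model, d_ff):
    # Two linear layers with biases: d_model -> d_ff -> d_model.
    return (d_model * d_ff + d_ff) + (d_ff * d_model + d_model)

def moe_params(num_layers, num_experts, d_model, d_ff, share_experts):
    # Hypothetical per-layer router: one bias-free linear gate d_model -> num_experts.
    router = d_model * num_experts
    experts = num_experts * ffn_params(d_model, d_ff)
    if share_experts:
        # One expert pool reused by every layer; only the routers are per-layer.
        return experts + num_layers * router
    # Baseline: every layer owns its own experts and router.
    return num_layers * (experts + router)

# Hypothetical sizes, not the configuration used in the paper.
cfg = dict(num_layers=12, num_experts=4, d_model=256, d_ff=1024)
print(moe_params(**cfg, share_experts=False))  # 25239552
print(moe_params(**cfg, share_experts=True))   # 2114560
```

Under these assumptions, sharing the pool divides the expert parameters by the number of layers, while each per-layer router adds only `d_model * num_experts` parameters.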

Parameter-Sharing for Conformers
The FFN modules use Swish activation functions. The multi-head self-attention module uses relative positional encodings. The convolution module is a time-depth separable style convolutional block with GLU and Swish activation functions.

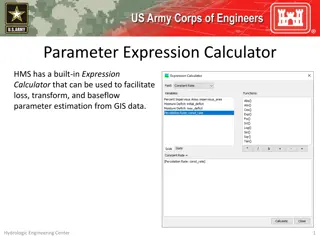
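The FFN module and cross-layer weight sharing can be sketched in numpy as follows. The LayerNorm -> Linear -> Swish -> Linear layout and the half-step residual follow the original Conformer design and are assumptions here, since the slide only states that the FFN uses Swish. Dropout and learned LayerNorm parameters are omitted, and `shared_ffn_stack` is a hypothetical helper that reuses one parameter set in every layer:

```python
import numpy as np

def swish(x):
    # Swish (also called SiLU): x * sigmoid(x).
    return x / (1.0 + np.exp(-x))

def layer_norm(x, eps=1e-5):
    # LayerNorm over the feature axis, without learned scale/shift for brevity.
    mean = x.mean(axis=-1, keepdims=True)
    var = x.var(axis=-1, keepdims=True)
    return (x - mean) / np.sqrt(var + eps)

def ffn_module(x, w1, b1, w2, b2):
    # Conformer-style FFN: LayerNorm -> Linear -> Swish -> Linear,
    # added back through a half-step residual connection.
    h = swish(layer_norm(x) @ w1 + b1)
    return x + 0.5 * (h @ w2 + b2)

def shared_ffn_stack(x, params, num_layers):
    # Cross-layer weight sharing: every layer reuses the same params tuple,
    # so depth grows while the parameter count stays that of one module.
    for _ in range(num_layers):
        x = ffn_module(x, *params)
    return x

rng = np.random.default_rng(0)
d_model, d_ff, num_frames = 8, 32, 5
params = (rng.standard_normal((d_model, d_ff)) * 0.1, np.zeros(d_ff),
          rng.standard_normal((d_ff, d_model)) * 0.1, np.zeros(d_model))
x = rng.standard_normal((num_frames, d_model))
y = shared_ffn_stack(x, params, num_layers=6)
print(y.shape)  # (5, 8)
```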

Dynamic Routing for Mixture of Experts
To encourage all the experts to be used in a balanced way, a load-balancing loss is added to the training objective.
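The slide's balancing-loss equation is not preserved in this transcript. As a stand-in, the sketch below uses top-1 routing with the auxiliary load-balancing loss of the Switch Transformer, $E \sum_i f_i P_i$. Both the top-1 choice and this exact loss form are assumptions, not necessarily the authors' formulation, and all function names are hypothetical:

```python
import numpy as np

def softmax(z):
    # Numerically stable softmax over the last axis.
    z = z - z.max(axis=-1, keepdims=True)
    e = np.exp(z)
    return e / e.sum(axis=-1, keepdims=True)

def route_top1(x, w_router):
    # Router (gating network): per-frame probabilities over E experts,
    # plus the index of the single expert each frame is sent to.
    probs = softmax(x @ w_router)          # (T, E)
    return probs, probs.argmax(axis=-1)    # (T, E), (T,)

def load_balancing_loss(probs, expert_idx):
    # Switch-Transformer-style auxiliary loss E * sum_i f_i * P_i:
    #   f_i = fraction of frames routed to expert i (hard counts),
    #   P_i = mean router probability for expert i.
    # It is 1.0 for perfectly uniform routing and reaches its maximum E
    # when every frame goes to one expert with probability 1.
    num_experts = probs.shape[-1]
    f = np.bincount(expert_idx, minlength=num_experts) / len(expert_idx)
    p = probs.mean(axis=0)
    return num_experts * np.sum(f * p)

def moe_forward(x, w_router, experts):
    # Sparse computation: each frame is processed only by its top-1 expert,
    # and that expert's output is scaled by the frame's gate probability.
    probs, idx = route_top1(x, w_router)
    out = np.zeros_like(x)
    for i, expert in enumerate(experts):
        mask = idx == i
        if mask.any():
            out[mask] = probs[mask, i:i + 1] * expert(x[mask])
    return out, load_balancing_loss(probs, idx)

# Toy usage: 6 frames, 4-dim features, 3 hypothetical "experts" that just scale inputs.
rng = np.random.default_rng(0)
x = rng.standard_normal((6, 4))
w_router = rng.standard_normal((4, 3))
experts = [lambda h, s=s: s * h for s in (1.0, 2.0, 3.0)]
out, aux_loss = moe_forward(x, w_router, experts)
```

Because $f_i$ comes from a hard argmax and is not differentiable, the gradient of this auxiliary loss reaches the router only through $P_i$.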

Distilling Knowledge from Hidden Embedding
Knowledge distillation is used to transfer knowledge from a full-parameter model: training minimizes the L2 distance between the outputs of the shared-parameter encoder (student) and the full-parameter encoder (teacher).
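A sketch of the hidden-embedding distillation term, assuming a squared L2 distance averaged over frames and student/teacher encoders with the same frame rate and hidden size (the slide does not specify the reduction). The teacher is assumed to be frozen, so its outputs act as fixed targets and only the student receives a gradient. Function names are hypothetical:

```python
import numpy as np

def hidden_kd_loss(student_hidden, teacher_hidden):
    # Squared L2 distance per frame, averaged over the T frames.
    # Shapes: (T, d) for both; the teacher output is a fixed target.
    diff = student_hidden - teacher_hidden
    return np.mean(np.sum(diff ** 2, axis=-1))

def hidden_kd_grad(student_hidden, teacher_hidden):
    # Gradient w.r.t. the student output only: 2 * (s - t) / T.
    # No gradient is propagated into the (assumed frozen) teacher.
    num_frames = student_hidden.shape[0]
    return 2.0 * (student_hidden - teacher_hidden) / num_frames

print(hidden_kd_loss(np.zeros((3, 2)), np.ones((3, 2))))  # 2.0
```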

Learning
The model is trained by minimizing the overall loss, which combines the maximum-likelihood, load-balancing, and distillation objectives.
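Assuming the overall loss is a weighted sum of the three objectives (the maximum-likelihood loss $\mathcal{L}_{\mathrm{MLE}}$, the load-balancing loss $\mathcal{L}_{\mathrm{balance}}$, and the hidden-embedding distillation loss $\mathcal{L}_{\mathrm{KD}}$), it would take the following form, where $\alpha$ and $\beta$ are hypothetical weighting hyperparameters whose values are not given in this transcript:

```latex
\mathcal{L} = \mathcal{L}_{\mathrm{MLE}} + \alpha \, \mathcal{L}_{\mathrm{balance}} + \beta \, \mathcal{L}_{\mathrm{KD}}
```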

Relation to Prior Work
Conditional computation with mixture-of-experts (MoE) has been shown to be an effective way to scale the capacity of neural networks without a proportional increase in computation. Cross-layer weight sharing was first used in the Transformer with adaptive computation time (the Universal Transformer), and ALBERT uses this technique to reduce the parameters of BERT.

Experiments
The data consist of 150 hours of speech for training, about 18 hours for development, and about 10 hours for test. 80-dimensional Mel filter-bank (FBANK) features are used as the input. The model uses a 2-layer CNN and 4 experts for the second FFN module.
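The effect of the 2-layer CNN on sequence length can be worked out with a little arithmetic. The sketch assumes, hypothetically, that each CNN layer is an unpadded convolution with kernel size 3 and stride 2 over both time and frequency, a common choice in Conformer front ends that the slide does not confirm:

```python
def conv_out_len(length, kernel=3, stride=2):
    # Output length of an unpadded convolution along one axis.
    return (length - kernel) // stride + 1

def subsampled_shape(num_frames, num_mel_bins=80, num_layers=2):
    # Apply the same (hypothetical) kernel/stride to time and frequency per layer.
    t, f = num_frames, num_mel_bins
    for _ in range(num_layers):
        t, f = conv_out_len(t), conv_out_len(f)
    return t, f

# 1000 input frames (about 10 s of audio at an assumed 10 ms frame shift).
print(subsampled_shape(1000))  # (249, 19)
```

Under these assumptions the encoder sees roughly 4x fewer frames than the input, i.e. about one frame every 40 ms with a 10 ms frame shift.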