Efficient Inference with Adaptive Neural Networks

Explore the concept of adaptive neural networks for efficient inference, focusing on reducing test-time costs and optimizing decision-making processes. Learn about proposed approaches and related work in this area, including techniques like early exit strategies and network sparsification. Dive into the application of spatially adaptive networks and the optimization of exit points for enhanced computational efficiency.

Presentation Transcript

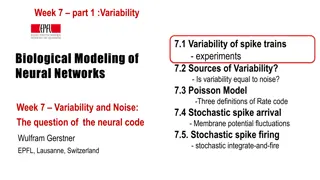

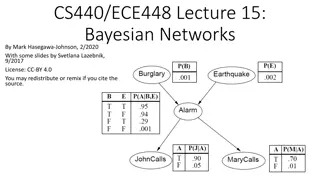

Adaptive Neural Networks for Efficient Inference

Bolukbasi, T., Wang, J., Dekel, O., & Saligrama, V. (2017, July). Adaptive neural networks for efficient inference. In International Conference on Machine Learning (pp. 527-536). PMLR.

Introduction

Deep neural networks (DNNs) are a powerful machine learning technique. The computational cost of applying a DNN to a new example, called the test-time cost, is high. A high test-time cost makes DNNs impractical on resource-constrained platforms. Most existing work tries to design more efficient network structures.

Proposed Approaches

The original DNN structure is kept. Not every input requires the full power and complexity of a massive DNN.

1. Early exit: before entering certain layers, decide whether to return a prediction or proceed.
2. Network selection: take a set of pre-trained DNNs, arrange them into a DAG, and decide whether to use the current node's prediction or proceed to the next network.
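The early-exit idea can be sketched in a few lines of Python. This is a minimal illustration, not the paper's implementation: `adaptive_forward`, `confident`, and the toy layers are hypothetical names, and the fixed confidence margin stands in for the learned exit decision described in the slides.

```python
from typing import Callable, Dict, List, Tuple

Vector = List[float]


def argmax(h: Vector) -> int:
    """Index of the largest entry, used here as a toy classifier."""
    return max(range(len(h)), key=lambda i: h[i])


def confident(h: Vector, margin: float = 1.0) -> bool:
    """Placeholder exit rule (assumption): exit when the top two scores differ by `margin`."""
    top = sorted(h, reverse=True)
    return top[0] - top[1] >= margin


def adaptive_forward(
    x: Vector,
    layers: List[Callable[[Vector], Vector]],
    exit_points: Dict[int, Tuple[Callable[[Vector], bool], Callable[[Vector], int]]],
    final_classifier: Callable[[Vector], int],
) -> Tuple[int, int]:
    """Return (prediction, number of layers evaluated).

    Before entering layer k, the exit rule registered for k (if any) decides
    whether to return an early prediction or to proceed.
    """
    h = list(x)
    for k, layer in enumerate(layers):
        if k in exit_points:
            should_exit, early_classifier = exit_points[k]
            if should_exit(h):
                return early_classifier(h), k
        h = layer(h)
    return final_classifier(h), len(layers)


# Toy network: three layers that each double the activations.
layers = [lambda h: [2 * v for v in h]] * 3
exit_points = {1: (confident, argmax)}  # one exit point before layer 1

easy = adaptive_forward([0.1, 0.9], layers, exit_points, argmax)
hard = adaptive_forward([0.5, 0.6], layers, exit_points, argmax)
```

In this toy run the well-separated input leaves after one layer, while the ambiguous input pays for all three.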

Related Work

Teacher-student models: a small student network mimics the teacher's accuracy. Reduced-precision networks: reduce the memory footprint and exploit efficient operations to approximate complex functions. Network sparsification: prune redundant parts (remove unnecessary nodes or edges). Spatially adaptive networks: selectively activate nodes in a layer.

Adaptive Early Exit Networks

Computing each convolutional layer takes more than 3 times longer than computing a fully connected layer. Goal: minimize the evaluation time of the network, subject to the error rate of the adaptive system being no more than a user-chosen value $B$. Notation: $T$ is the prediction time; $\sigma_k(x)$ is the output of layer $k$; $\gamma_k(\sigma_k(x)) \in \{-1, 1\}$ indicates whether to exit early at exit point $k$.
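The stated goal can be written as a constrained optimization problem. The notation below is an assumption made for illustration: $\gamma_1, \dots, \gamma_K$ are the exit decision functions at the $K$ exit points, $T_{\gamma_1,\dots,\gamma_K}(x)$ is the time taken to predict on input $x$ under those decisions, and $\mathrm{err}(\cdot)$ is the error rate of the resulting adaptive system.

```latex
\min_{\gamma_1,\dots,\gamma_K} \;
  \mathbb{E}_{x}\!\left[ T_{\gamma_1,\dots,\gamma_K}(x) \right]
\quad \text{subject to} \quad
  \mathrm{err}(\gamma_1,\dots,\gamma_K) \le B
```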

Focus on the Decision at the Last Exit Point

The problem is solved recursively, starting from the last exit point.

Optimal Decision and Cost

Recursively Solve the Problem

$K$ is the number of exit points. The $k$-th early exit function can be trained by solving a supervised learning problem.
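One common way to turn an exit decision into a supervised learning problem is to label each training sample with the cheaper of its two options and weight it by how much the choice matters. The sketch below assumes that setup; `exit_training_targets`, the `trade_off` parameter, and the exact cost definitions are hypothetical and are not taken from the slides.

```python
from typing import List, Tuple


def exit_training_targets(
    loss_if_exit: List[float],
    loss_if_continue: List[float],
    extra_time: float,
    trade_off: float,
) -> Tuple[List[bool], List[float]]:
    """Build (labels, weights) for training one exit function.

    Assumed cost model: exiting costs `trade_off * loss_if_exit`, while
    continuing costs `extra_time + trade_off * loss_if_continue`, with all
    later exit decisions held fixed (hence the recursion from the last exit).
    The label is True when exiting is at least as cheap; the weight is the
    cost gap, so samples where the decision barely matters count less.
    """
    labels: List[bool] = []
    weights: List[float] = []
    for l_exit, l_cont in zip(loss_if_exit, loss_if_continue):
        cost_exit = trade_off * l_exit
        cost_continue = extra_time + trade_off * l_cont
        labels.append(cost_exit <= cost_continue)
        weights.append(abs(cost_exit - cost_continue))
    return labels, weights


# Three samples: already correct at the exit, fixed only by continuing, wrong either way.
labels, weights = exit_training_targets(
    loss_if_exit=[0.0, 1.0, 1.0],
    loss_if_continue=[0.0, 0.0, 1.0],
    extra_time=0.5,
    trade_off=2.0,
)
```

Only the second sample benefits from continuing, and it also carries the largest weight because the wrong decision there is the most costly.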

Network Selection

$\kappa_1 : |X| \to \{N_1, N_2, N_3\}$ and $\kappa_2 : |X| \to \{N_2, N_3\}$, where $\kappa_2$ determines whether the classification decision from $N_2$ should be returned or network $N_3$ should be evaluated. Goal: learn the functions $\kappa_1$ and $\kappa_2$ that minimize the average evaluation time subject to a constraint on the average loss induced by adaptive network selection.
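A possible reading of this two-stage selection, sketched in Python. Everything here is illustrative: `select_and_predict`, `kappa1`, `kappa2`, the toy networks, and their costs are hypothetical, and hand-written rules stand in for the learned selection functions.

```python
from typing import Callable, Dict, Tuple

Network = Tuple[Callable[[float], str], float]  # (predict function, evaluation cost)


def select_and_predict(
    x: float,
    networks: Dict[str, Network],
    kappa1: Callable[[float], str],
    kappa2: Callable[[float], str],
) -> Tuple[str, float]:
    """Return (prediction, total evaluation cost) for input x.

    The first policy picks which network to evaluate. If it picks N2, the
    second policy decides whether N2's prediction is returned or N3 is
    evaluated as well (paying for both).
    """
    choice = kappa1(x)
    predict, cost = networks[choice]
    prediction, total = predict(x), cost
    if choice == "N2" and kappa2(x) == "N3":
        predict3, cost3 = networks["N3"]
        prediction, total = predict3(x), total + cost3
    return prediction, total


# Toy networks with increasing cost; the predictions are constants for clarity.
networks: Dict[str, Network] = {
    "N1": (lambda x: "cat", 1.0),
    "N2": (lambda x: "dog", 3.0),
    "N3": (lambda x: "fox", 10.0),
}
kappa1 = lambda x: "N1" if x < 1 else ("N2" if x < 5 else "N3")
kappa2 = lambda x: "N2" if x < 3 else "N3"

results = [select_and_predict(x, networks, kappa1, kappa2) for x in (0.5, 2.0, 4.0, 7.0)]
```

The third input shows the price of a late escalation: it pays for both N2 and N3, which is why the selection functions have to weigh time against the induced error.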

Formulation

First learn $\kappa_2$ to trade off evaluation time against induced error. This can be posed as an importance-weighted supervised learning problem. $\theta$ and $W$: optimal decision and cost.
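In an importance-weighted supervised learning problem, misclassifying a sample costs its weight rather than a flat 1. The sketch below illustrates that with a one-dimensional decision stump, chosen only for brevity; the slides do not say which classifier is used, and `fit_weighted_stump` and the toy data are hypothetical.

```python
from typing import List, Tuple


def fit_weighted_stump(
    features: List[float],
    labels: List[bool],
    weights: List[float],
) -> Tuple[float, float]:
    """Find the threshold t whose rule `feature >= t` has the lowest weighted error.

    Returns (best threshold, weighted error at that threshold).
    """
    # Every observed feature value is a candidate; +inf means "always predict False".
    candidates = sorted(set(features)) + [float("inf")]
    best_t, best_err = candidates[0], float("inf")
    for t in candidates:
        err = sum(
            w for f, y, w in zip(features, labels, weights) if (f >= t) != y
        )
        if err < best_err:
            best_t, best_err = t, err
    return best_t, best_err


# The third sample has a large weight, so the stump avoids misclassifying it
# even though that means getting the lightly weighted second sample wrong.
features = [0.2, 0.4, 0.6, 0.9]
labels = [False, True, False, True]
weights = [1.0, 0.1, 2.0, 1.0]
threshold, weighted_error = fit_weighted_stump(features, labels, weights)
```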

Experimental Setup

Dataset: ImageNet 2012 classification. Models: pre-trained AlexNet, GoogLeNet, and ResNet50 from the Caffe Model Zoo. Network times are measured with the built-in tool in the Caffe library on a server with an NVIDIA Titan X Pascal and cuDNN 5.

Experimental Results

Oracle: chooses the model that gives the right answer (with some constraints).

Proportion of samples that choose to exit at each exit point. Arrows: samples allowed to proceed forward. Myopic, uniform: single threshold.

Accuracy gains at different layers for early exits (GoogLeNet / ResNet50). In the labels, @x denotes the loss and % denotes the speedup.

Conclusion

Two schemes are proposed: exit point selection and network selection. With these schemes, computational cost can be reduced with negligible loss in accuracy.