Efficient Leave-One-Out Evaluation of Kernelized Implicit Mappings

Leave-one-out (LOO) evaluation is a powerful tool for assessing implicit mappings such as kernelized techniques. It is crucial for assessing generalization, that is, how accurately novel samples are mapped compared with training samples. The process simulates the mapping with Kernelized Implicit Mapping (KIM) and then excludes one sample at a time for evaluation. This evaluation is essential for understanding the effectiveness of the embeddings produced. The presentation covers the process, benefits, and challenges of LOO evaluation for kernelized implicit mappings.

Presentation Transcript

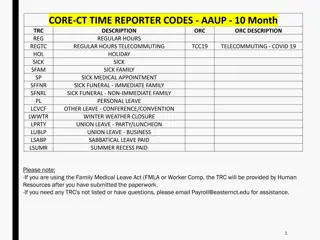

#1382 Efficient Leave-One-Out Evaluation of Kernelized Implicit Mappings
Mineichi Kudo, Keigo Kimura, Shumpei Morishita, Lu Sun
Hokkaido University, Japan
mine@ist.hokudai.ac.jp

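01 Background
Out-of-sample problem: many embedding algorithms cannot be applied to novel samples. For example, the Laplacian eigenmap solves
$\min_{\{\mathbf{z}_i\}} \sum_{i,j} w_{ij}\,\|\mathbf{z}_i - \mathbf{z}_j\|^2$
with respect to the embedded samples $\mathbf{z}_i$ (subject to a normalization constraint), so it yields coordinates only for the training samples and no mapping for a new $\mathbf{x}$. Explicit transforms approximate it, e.g.
$\min_{T} \sum_{i,j} w_{ij}\,\|T\mathbf{x}_i - T\mathbf{x}_j\|^2$.
2025/4/13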
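To make the out-of-sample problem concrete, here is a minimal Laplacian eigenmap sketch in NumPy/SciPy. It assumes a fully connected graph with heat-kernel weights $w_{ij} = \exp(-\|\mathbf{x}_i-\mathbf{x}_j\|^2/2\sigma^2)$; the weighting, the width `sigma` and the name `laplacian_eigenmap` are illustrative choices, not taken from the slides. The output is only a matrix of coordinates for the training rows of `X`; nothing in it can be evaluated at a new $\mathbf{x}$.

```python
import numpy as np
from scipy.linalg import eigh

def laplacian_eigenmap(X, d=2, sigma=1.0):
    """Embed the rows of X into d dimensions (training samples only).

    Assumption: fully connected graph, heat-kernel weights with width sigma.
    """
    sq = np.sum((X[:, None, :] - X[None, :, :]) ** 2, axis=-1)
    W = np.exp(-sq / (2.0 * sigma**2))
    np.fill_diagonal(W, 0.0)
    D = np.diag(W.sum(axis=1))           # degree matrix
    lap = D - W                          # graph Laplacian
    # sum_ij w_ij ||z_i - z_j||^2 = 2 tr(Z^T lap Z); with Z^T D Z = I this becomes
    # the generalized eigenproblem lap v = lambda D v (eigenvalues ascending).
    _, vecs = eigh(lap, D)
    # Skip the trivial constant eigenvector (eigenvalue 0) and keep the next d.
    return vecs[:, 1:d + 1]
```

`scipy.linalg.eigh` normalizes generalized eigenvectors so that $V^{\top} D V = I$, which is exactly the normalization constraint above.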

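02 Kernelized Implicit Mapping
Given input-output pairs $\{(\mathbf{x}_i, \mathbf{z}_i)\}_{i=1}^{n}$ with $\mathbf{x}_i \in \mathbb{R}^{D}$ and $\mathbf{z}_i \in \mathbb{R}^{d}$, simulate the implicit (hidden) functional relationship by a set of linear sums of kernels associated with the samples:
$z_k(\mathbf{x}) = \sum_{i=1}^{n} \alpha_{ik}\, K(\mathbf{x}_i, \mathbf{x}), \quad k = 1, \dots, d,$
following the Representer Theorem.
[Figure: implicit mappings (embedding, classification) simulated by the kernel functions $K(\mathbf{x}_1,\cdot)$, $K(\mathbf{x}_2,\cdot)$, ...]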

02 Kernelized Implicit Mapping
Applying the KIM simulation to all training samples, we have $Z = KA$, where $Z \in \mathbb{R}^{n\times d}$ stacks the outputs $\mathbf{z}_i^{\top}$ as rows, $K = [K(\mathbf{x}_i, \mathbf{x}_j)]$ is the $n\times n$ Gram matrix and $A = [\alpha_{ik}]$ is the $n\times d$ coefficient matrix. Since $K$ is invertible for an RBF kernel (with distinct samples), we have $A = K^{-1}Z$ for an embedding $Z$, and hence $KA = Z$: KIM simulates the mapping perfectly for training samples.
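A minimal NumPy sketch of KIM as described above, assuming a Gaussian (RBF) kernel with width `sigma`; the helper names `rbf_kernel`, `kim_fit` and `kim_predict` are hypothetical. Fitting solves $KA = Z$ rather than forming $K^{-1}$ explicitly, and mapping a novel sample evaluates the kernel sum from the Representer Theorem.

```python
import numpy as np

def rbf_kernel(X1, X2, sigma=1.0):
    """Gaussian kernel matrix with entries exp(-||X1[i] - X2[j]||^2 / (2 sigma^2))."""
    sq = np.sum((X1[:, None, :] - X2[None, :, :]) ** 2, axis=-1)
    return np.exp(-sq / (2.0 * sigma**2))

def kim_fit(X, Z, sigma=1.0):
    """Coefficient matrix A (n x d) such that K A = Z on the training samples."""
    K = rbf_kernel(X, X, sigma)
    return np.linalg.solve(K, Z)

def kim_predict(X_train, A, X_new, sigma=1.0):
    """Map each row x of X_new to z(x) = sum_i A[i] K(x_i, x)."""
    return rbf_kernel(X_new, X_train, sigma) @ A

# Usage: an arbitrary target Z stands in for an embedding of X.
rng = np.random.default_rng(0)
X = rng.normal(size=(20, 3))
Z = rng.normal(size=(20, 2))
A = kim_fit(X, Z, sigma=0.5)
Z_train = kim_predict(X, A, X, sigma=0.5)                      # reproduces Z up to rounding
Z_new = kim_predict(X, A, rng.normal(size=(5, 3)), sigma=0.5)  # novel samples
```

Reproducing `Z` on the training set is the "perfect simulation" property; it says nothing about how well `Z_new` is mapped.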

03 Leave-One-Out Evaluation
Generalization, that is, how the mapping of novel samples differs from that of training samples, is questionable, since the training samples themselves are simulated perfectly. Leave-one-out (LOO) evaluation is a strong tool to assess it: each sample is left out in turn, KIM is fitted to the remaining $n-1$ samples, and the left-out sample is mapped.
Drawback: it requires $n$ repetitions of the mapping, each involving an $(n-1)\times(n-1)$ Gram matrix inverse, which is very time-consuming. We need fast LOO evaluation.
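For reference, the straightforward LOO procedure can be sketched as below (NumPy, Gaussian kernel assumed; `naive_loo` is a hypothetical name). Each of the $n$ iterations solves an $(n-1)\times(n-1)$ linear system at $O(n^3)$ cost, so the loop costs $O(n^4)$ overall.

```python
import numpy as np

def rbf_kernel(X1, X2, sigma=1.0):
    """Gaussian kernel matrix with entries exp(-||X1[i] - X2[j]||^2 / (2 sigma^2))."""
    sq = np.sum((X1[:, None, :] - X2[None, :, :]) ** 2, axis=-1)
    return np.exp(-sq / (2.0 * sigma**2))

def naive_loo(X, Z, sigma=1.0):
    """LOO estimates obtained by refitting KIM n times, leaving out one sample each time."""
    n = X.shape[0]
    K = rbf_kernel(X, X, sigma)
    Z_loo = np.empty(Z.shape, dtype=float)
    for i in range(n):
        keep = np.arange(n) != i                              # all samples except i
        A = np.linalg.solve(K[np.ix_(keep, keep)], Z[keep])  # fit on n-1 samples
        Z_loo[i] = K[i, keep] @ A                             # map the left-out sample
    return Z_loo
```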

03 Objective
When some implicit map (e.g., an embedding) is simulated by KIM, show how LOO evaluation of the KIM can be carried out quickly by means of a LOO matrix.

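04 Basic Formula
The LOO estimate of the $n$-th sample is given by
$\hat{\mathbf{z}}_n^{\top} = \mathbf{k}_{-n}^{\top} K_{-n}^{-1} Z_{-n}$, with $\mathbf{k}_{-n} = [K(\mathbf{x}_i, \mathbf{x}_n)]_{i \neq n}$,
where $-n$ denotes that the $n$-th sample is excluded.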
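The LOO estimates of all $n$ samples can be obtained from a single inverse $C = K^{-1}$. The following sketches the standard block-inverse (Schur complement) argument as a supporting derivation, not as the slides' own. Write the LOO estimate as $\hat{\mathbf{z}}_n^{\top} = \mathbf{k}_{-n}^{\top} K_{-n}^{-1} Z_{-n}$, place the $n$-th sample last, and let $\kappa_n = K(\mathbf{x}_n, \mathbf{x}_n)$ and $(CZ)_n$ denote the $n$-th row of $CZ$:

$$
\begin{aligned}
K &= \begin{pmatrix} K_{-n} & \mathbf{k}_{-n} \\ \mathbf{k}_{-n}^{\top} & \kappa_n \end{pmatrix}, \qquad
s_n = \kappa_n - \mathbf{k}_{-n}^{\top} K_{-n}^{-1} \mathbf{k}_{-n}, \\
C_{nn} &= \frac{1}{s_n}, \qquad
C_{n,-n} = -\frac{1}{s_n}\, \mathbf{k}_{-n}^{\top} K_{-n}^{-1}, \\
(CZ)_n &= C_{n,-n} Z_{-n} + C_{nn}\, \mathbf{z}_n^{\top}
= \frac{1}{s_n}\bigl(\mathbf{z}_n^{\top} - \mathbf{k}_{-n}^{\top} K_{-n}^{-1} Z_{-n}\bigr)
= \frac{1}{s_n}\bigl(\mathbf{z}_n^{\top} - \hat{\mathbf{z}}_n^{\top}\bigr), \\
\hat{\mathbf{z}}_n^{\top} &= \mathbf{z}_n^{\top} - \frac{(CZ)_n}{C_{nn}}
\quad\Longrightarrow\quad
\hat{Z} = \bigl(I - D^{-1} K^{-1}\bigr) Z, \qquad D = \operatorname{diag}(K^{-1}).
\end{aligned}
$$

The matrix $I - D^{-1}K^{-1}$ has zeros on its diagonal and does not involve $Z$, which matches the LOO matrix used in the example and application slides.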

An Example
[Worked example with three samples, shown as matrices over two slides: the inverse Gram matrix $K^{-1}$ has entries that are multiples of 1/51; each row is divided by its diagonal entry, and the resulting LOO matrix has zeros on its diagonal.]
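The steps of the worked example (invert $K$, divide each row of $K^{-1}$ by its diagonal entry, subtract the result from the identity) can be sketched in NumPy as follows. The closed form $L = I - D^{-1}K^{-1}$ with $D = \operatorname{diag}(K^{-1})$ is the standard LOO identity for exact kernel interpolation; the names `loo_matrix` and `rbf_kernel` and the Gaussian kernel are assumptions for illustration.

```python
import numpy as np

def rbf_kernel(X1, X2, sigma=1.0):
    """Gaussian kernel matrix with entries exp(-||X1[i] - X2[j]||^2 / (2 sigma^2))."""
    sq = np.sum((X1[:, None, :] - X2[None, :, :]) ** 2, axis=-1)
    return np.exp(-sq / (2.0 * sigma**2))

def loo_matrix(K):
    """LOO matrix L = I - D^{-1} K^{-1}, where D = diag(K^{-1})."""
    C = np.linalg.inv(K)                 # a single O(n^3) inversion
    R = C / np.diag(C)[:, None]          # divide row i by C[i, i]
    return np.eye(K.shape[0]) - R        # the diagonal becomes exactly zero

# Usage: LOO estimates for all samples at once
rng = np.random.default_rng(1)
X = rng.normal(size=(15, 3))
Z = rng.normal(size=(15, 2))
L = loo_matrix(rbf_kernel(X, X, sigma=0.5))
Z_loo = L @ Z    # row i: estimate of z_i with sample i left out
```

Compared with refitting $n$ times, the whole LOO evaluation costs one inversion plus a matrix product.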

04 Applications of LOO matrix
The LOO matrix is independent of $Z$, so the same matrix serves visualization ($Z \in \mathbb{R}^{n\times 2}$), classification ($Z \in \{0,1\}^{n\times c}$, one-hot class labels) and image reconstruction ($Z$ holds one image per row).
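Because the LOO matrix does not depend on $Z$, it can be computed once and applied to every output. A hedged NumPy sketch with made-up data; the Gaussian kernel, the stand-in embedding, the random labels and images, and the error measures (mean squared error, argmax accuracy) are assumptions, not necessarily those used on the slides.

```python
import numpy as np

def rbf_kernel(X1, X2, sigma=1.0):
    """Gaussian kernel matrix with entries exp(-||X1[i] - X2[j]||^2 / (2 sigma^2))."""
    sq = np.sum((X1[:, None, :] - X2[None, :, :]) ** 2, axis=-1)
    return np.exp(-sq / (2.0 * sigma**2))

def loo_matrix(K):
    """LOO matrix L = I - D^{-1} K^{-1}, where D = diag(K^{-1})."""
    C = np.linalg.inv(K)
    return np.eye(K.shape[0]) - C / np.diag(C)[:, None]

rng = np.random.default_rng(2)
n, c = 40, 3
X = rng.normal(size=(n, 4))
labels = rng.integers(0, c, size=n)           # random labels: a smoke test only

L = loo_matrix(rbf_kernel(X, X, sigma=0.7))   # computed once, shared below

# Visualization: a random linear projection stands in for a 2-D embedding
Z_vis = X @ rng.normal(size=(4, 2))
vis_mse = np.mean(np.sum((L @ Z_vis - Z_vis) ** 2, axis=1))

# Classification: one-hot targets; the largest LOO output picks the class
Z_cls = np.eye(c)[labels]
loo_acc = np.mean(np.argmax(L @ Z_cls, axis=1) == labels)

# Reconstruction: the outputs are (here random) 16-pixel image vectors
Z_img = rng.random(size=(n, 16))
img_mse = np.mean((L @ Z_img - Z_img) ** 2)
```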

04-1 Visualization for parameter selection
$Z \in \mathbb{R}^{n\times 2}$
[Figure: 2-D embedding, panel "All samples"]
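One way to turn the LOO matrix into a numerical parameter-selection criterion is to compute the mean LOO error of a fixed 2-D embedding for several kernel widths and keep the width with the smallest error. This is a hypothetical stand-in for the visual comparison on the slide; `select_sigma`, the candidate widths and the stand-in target are illustrative assumptions.

```python
import numpy as np

def rbf_kernel(X1, X2, sigma=1.0):
    """Gaussian kernel matrix with entries exp(-||X1[i] - X2[j]||^2 / (2 sigma^2))."""
    sq = np.sum((X1[:, None, :] - X2[None, :, :]) ** 2, axis=-1)
    return np.exp(-sq / (2.0 * sigma**2))

def loo_matrix(K):
    """LOO matrix L = I - D^{-1} K^{-1}, where D = diag(K^{-1})."""
    C = np.linalg.inv(K)
    return np.eye(K.shape[0]) - C / np.diag(C)[:, None]

def select_sigma(X, Z, sigmas):
    """Return the width with the smallest mean squared LOO error, and all errors."""
    errors = []
    for s in sigmas:
        L = loo_matrix(rbf_kernel(X, X, s))
        errors.append(np.mean(np.sum((L @ Z - Z) ** 2, axis=1)))
    best = sigmas[int(np.argmin(errors))]
    return best, errors

rng = np.random.default_rng(3)
X = rng.normal(size=(30, 3))
Z = np.column_stack([X[:, 0], X[:, 1] ** 2])   # smooth 2-D target as a stand-in
best_sigma, errs = select_sigma(X, Z, [0.3, 0.5, 1.0])
```

Each candidate width costs only one inversion, so scanning several widths stays cheap compared with naive LOO.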

04-2 Classification
$Z \in \{0,1\}^{n\times c}$ (one-hot labels for $c$ classes). For class assignment, the class with the largest value in each sample's LOO estimate is used.

04-3 Image reconstruction
[Figure: image reconstruction results]

05 Analysis of LOO matrix
Since $Z$ can be replaced depending on the application, the LOO matrix should be analyzed in relative terms within each row.

05 Positioning of this LOO evaluation
The proposed technique (fast LOO calculation) is not new, although we found it independently. For one-dimensional outputs, it has already been proposed for kernel ridge regression (Cawley and Talbot, 2004, 2008; Tanaka and Imai, 2019) and for least-squares kernel machines (Cawley, 2006; An, Liu and Venkatesh, 2006). Ours is LOO evaluation for multi-dimensional outputs. It can be extended to affine transformations (as adopted in the above two) and to K-fold cross-validation.
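The K-fold cross-validation extension follows from the same block-inverse argument applied to a held-out index set $B$: $Z_B - \hat{Z}_B = (C_{BB})^{-1} (CZ)_B$ with $C = K^{-1}$, so every fold reuses one inverse. A sketch under the same Gaussian-kernel assumption; `kfold_estimates` is a hypothetical name, and with folds of size one it reduces to the LOO matrix.

```python
import numpy as np

def rbf_kernel(X1, X2, sigma=1.0):
    """Gaussian kernel matrix with entries exp(-||X1[i] - X2[j]||^2 / (2 sigma^2))."""
    sq = np.sum((X1[:, None, :] - X2[None, :, :]) ** 2, axis=-1)
    return np.exp(-sq / (2.0 * sigma**2))

def kfold_estimates(K, Z, folds):
    """Held-out KIM estimates for every fold, using a single inverse C = K^{-1}."""
    C = np.linalg.inv(K)
    CZ = C @ Z
    Z_hat = np.empty(Z.shape, dtype=float)
    for B in folds:
        B = np.asarray(B)
        # Z_B - Zhat_B = (C_BB)^{-1} (C Z)_B : only a |B| x |B| solve per fold
        Z_hat[B] = Z[B] - np.linalg.solve(C[np.ix_(B, B)], CZ[B])
    return Z_hat

rng = np.random.default_rng(4)
X = rng.normal(size=(20, 3))
Z = rng.normal(size=(20, 2))
K = rbf_kernel(X, X, sigma=0.5)
folds = np.array_split(rng.permutation(20), 5)   # 5 folds of 4 samples
Z_cv = kfold_estimates(K, Z, folds)
```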

06 Conclusion
When some implicit map (e.g., an embedding) is simulated by KIM (Kernelized Implicit Mapping), LOO evaluation of the KIM can be made at almost no additional cost. The same LOO matrix is available for different goals/outputs such as visualization, classification and reconstruction. The high complexity of KIM, cubic in the number of samples, should be reduced.