Efficient Parallelization Techniques for K-Nearest Neighbors Algorithm on GPU

"Explore the implementation and benefits of parallelizing the K-Nearest Neighbors (KNN) algorithm on GPU for faster computation. Learn how to classify objects based on closest training examples and optimize performance using CUDA. Discover the simplicity of the KNN algorithm and its significance in supervised learning."

Download Presentation

Please find below an Image/Link to download the presentation.

The content on the website is provided AS IS for your information and personal use only. It may not be sold, licensed, or shared on other websites without obtaining consent from the author. If you encounter any issues during the download, it is possible that the publisher has removed the file from their server.

You are allowed to download the files provided on this website for personal or commercial use, subject to the condition that they are used lawfully. All files are the property of their respective owners.

The content on the website is provided AS IS for your information and personal use only. It may not be sold, licensed, or shared on other websites without obtaining consent from the author.

E N D

Presentation Transcript

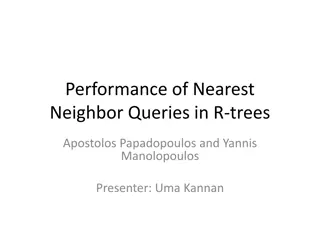

K nearest neighbors algorithm Parallelization on Cuda PROF. VELJKO MILUTINOVI MA A KNE EVI 3037/2015

K nearest neighbors algorithm Classification algorithm. Supervised learning. Instance-based learning method for classifying objects based on closest training examples in the feature space. 2/12

Simple KNN Algorithm Whenever we have a new point to classify, we find its K nearest neighbors from the training data. The distance is calculated using the Euclidean Distance. ? (?? ??)2 dxy= ?=1 3/12

Simple KNN Algorithm Add each training example <x, f(x)> to the list of training_examples. Given a query instance xqto be classified, Let x1, x2, , xkdenote the k instances from training_examples that are nearest to xq. Return the class that represents the maximum of the k instances. 4/12

KNN Example If K = 5, the query instance xq will be classified as negative since the majority of its neighbors are classified as negative. 5/12

KNN implementation The user sets a value for K which is supposed to be odd. Two thirds of the data set is used for training, one third for testing. The training examples are put into a list. 6/12

KNN implementation Testing the algorithm consists of comparing one test sample to all of the training examples. The Euclidean distance is found for all the training examples. The list is then sorted, the first K are taken into consideration when searching for the class of the test example. 7/12

KNN on GPU Calculation of the Euclidean distance between the query instance xqthat needs to be classified and a training example. Sorting algorithm to sort the training set in order to easily find the first K nearest neighbors. 9/12

Example Task Determine if a patient has diabetes using the attributes given in the data set, such as: triceps skin fold thickness, body mass index, diabetes pedigree function, age Public data set Pima Indian Diabetes Data Set (https://archive.ics.uci.edu/ml/machine-learning-databases/pima- indians-diabetes/) scaled appropriately for the testing purposes. 10/12

Results CPU GPU 300 250 200 Seconds 150 100 50 0 768 5000 10000 Data Set Elements 11/12

Example Task Original data set: Bigger data set: 12/12