Elastic IP and FPGA Productivity Optimization Strategies

Learn about Elastic IP for automatic discovery of use-case-specialized IP cores, overcoming FPGA productivity bottlenecks, and enhancing the design process with elastic IP implementations. Explore the challenges faced in RTL design and discover how to optimize performance efficiently.

Presentation Transcript

Elastic IP: Automatic Discovery of Use-Case-Specialized IP
Greg Stitt, Associate Professor, Department of Electrical and Computer Engineering, University of Florida

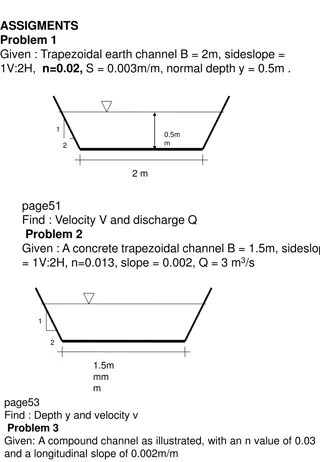

[Figure: Typical flow vs. elastic IP flow.
Typical flow: select IP cores from an IP library (limited specialization options, select a tradeoff from the IP core). Then implement and optimize custom RTL through numerous slow compile iterations, which ends in sub-optimal performance.
Elastic IP flow: request a specialized IP implementation or write custom elastic-compliant RTL. Automatic exploration replaces numerous manual iterations, which yields improved performance.]

FPGA Productivity Bottlenecks
- Problem: optimizing RTL code for emerging FPGAs is increasingly difficult, so the resulting performance is often far below the potential peak performance.
- The difficulty is caused by an enormous design space (i.e., millions of options):
  - How should module instances be optimized based on placement or floor-planning? Different instances of the same module may require different resources because of their different placements.
  - What mix of resources should each module use?
  - How much pipelining should be applied?
  - How can routing congestion be avoided?
  - How can fan-out problems be minimized?
- Compile times can take hours to days, so it is only feasible to explore a tiny subset of the design space.

Typical RTL-Design Process
How do designers handle these challenges?
- Use IP libraries as much as possible.
- Implement and optimize the remaining functionality, initially guessing at the best design options.
Problems:
- RTL optimizations require numerous design iterations. Lengthy compile times make this a huge bottleneck.
- Use cases have different goals and constraints. IP libraries often provide few specialization options, or so many options that they add even more to the exploration.
Solution: discover use-case-specific optimizations.
- Each elastic IP core has a knowledge base of different implementations.
- A design-space explorer uses the knowledge base to find new implementations with the requested tradeoffs.
[Figure: typical RTL flow: select IP cores from an IP library, implement and optimize custom RTL, compile, and keep exploring through numerous slow iterations, ending in sub-optimal performance]

Design Process with Elastic IP
1. Request IP with specific constraints and goals, e.g.:
   - "Give me a 2D convolution core that supports kernels up to 7x7, has a clock > 300 MHz, and generates a pixel every other cycle while using <= 5% of embedded memory."
   - "I need a 128-point FFT core with latency < 512 cycles and clock >= 400 MHz that generates an output every cycle while minimizing LUTs."
   - "Delay this 512-bit signal by 59 cycles to maximize clock frequency without using any M20K blocks."
   Alternatively, develop custom, elastic-compliant RTL code, which replaces design iterations with automatic exploration.
2. Automatic exploration replaces numerous manual iterations.
Advantages:
- Significantly reduces design iterations.
- Optimizes based on exact goals and constraints. Even when an existing IP core meets the constraints, it can likely be further optimized for the use case.
- Discovers counter-intuitive, low-level optimizations.
- Provides better solutions in less time.
[Figure: elastic IP flow: select IP cores from the IP library, request a specialized IP implementation, or write custom elastic-compliant RTL; automatic exploration; compile; improved performance]
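A request pairs hard constraints with an optimization goal. The minimal Python sketch below shows that idea with a resource-only model. Constraints are predicates over a candidate, and the goal is a cost to minimize.

The `Impl` class, the `select` function, and the resource-only model are hypothetical and are not the elastic IP interface. Real requests also involve clock frequency and latency, which would need timing estimates. The candidate resource counts are the width=40, cycles=513 delay options from the exploration example in this talk.

```python
from dataclasses import dataclass
from typing import Callable, Optional, Sequence

@dataclass(frozen=True)
class Impl:
    """One candidate implementation and the resources it uses (hypothetical model)."""
    name: str
    ffs: int
    m20ks: int

# Delay candidates for width=40, cycles=513 on Arria 10 (resource counts from the slides).
CANDIDATES = [
    Impl("20,520 FFs", ffs=20_520, m20ks=0),
    Impl("2 M20Ks", ffs=0, m20ks=2),
    Impl("40 FFs + 1 M20K", ffs=40, m20ks=1),
]

def select(candidates: Sequence[Impl],
           constraints: Sequence[Callable[[Impl], bool]],
           goal: Callable[[Impl], float]) -> Optional[Impl]:
    """Keep the candidates that meet every constraint, then minimize the goal."""
    feasible = [c for c in candidates if all(ok(c) for ok in constraints)]
    return min(feasible, key=goal) if feasible else None

# Request: "minimize FFs while using at most one M20K"
print(select(CANDIDATES, [lambda c: c.m20ks <= 1], goal=lambda c: c.ffs).name)  # 40 FFs + 1 M20K
```

Changing only the constraint changes the answer. With no M20Ks allowed, the same goal picks the flip-flop-only version. With no constraint at all, it picks the 2-M20K version.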

How do Elastic IP Cores Work?
Elastic IP consists of bases and transformations.
Simple, yet useful example: delay a signal of width bits by cycles cycles.
Bases: flip-flops and embedded memory (BRAM, MLAB, SRL, M20K, etc.)
[Figure: the flip-flop base is a chain of cycles FFs for each of input(0) through input(width-1). The memory bases (MLAB, M20K) use wr_addr and rd_addr address registers that are incremented by 1 each cycle, writing wr_data and reading rd_data.]
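The two kinds of base can be checked against each other with a cycle-level behavioral model. The Python sketch below assumes one `width`-bit word per clock cycle, modeled as an integer, and registers that reset to 0. It also assumes a RAM with zero read latency, which is a simplification; real M20K/MLAB outputs are usually registered. The function names are hypothetical.

```python
from collections import deque

def ff_delay(samples, cycles):
    """Flip-flop base: a chain of `cycles` registers, modeled as a FIFO of fixed length."""
    assert cycles >= 1
    regs = deque([0] * cycles)          # all registers reset to 0
    out = []
    for word in samples:                # one width-bit word per clock cycle
        out.append(regs.popleft())      # value leaving the last register
        regs.append(word)               # value entering the first register
    return out

def ram_delay(samples, cycles, depth):
    """Memory base: a RAM with `depth` words and an address counter that adds 1 each cycle."""
    assert 1 <= cycles <= depth
    mem = [0] * depth
    wr_addr = 0
    out = []
    for word in samples:
        rd_addr = (wr_addr - cycles) % depth   # address written `cycles` cycles ago
        out.append(mem[rd_addr])               # read-before-write
        mem[wr_addr] = word
        wr_addr = (wr_addr + 1) % depth
    return out

samples = list(range(1, 21))
print(ff_delay(samples, 5) == ram_delay(samples, 5, depth=8))  # True
```

A RAM deeper than the delay, such as depth 8 for 5 cycles, still works because the read address trails the write address by exactly `cycles`. The unused words are what show up as wasted RAM bits in the exploration examples.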

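How do Elastic IP Cores Work?
Transformations (rules that enable massive exploration) leverage divide-and-conquer recursion to create new possibilities:
- Cycle split: a delay of cycles cycles becomes a delay of x cycles followed by a delay of y cycles, for $x, y$ s.t. $x + y = \text{cycles}$.
- Width split: a width-bit delay becomes two parallel delays, one for the upper input bits and one for the lower input bits, with widths $n, m$ s.t. $n + m = \text{width}$.
Each resulting delay can itself be implemented with a base or split again.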
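Why are the two transformations safe to apply recursively? Both preserve the delay's behavior. The Python sketch below checks this by modeling a delay as a function over a list of `width`-bit words. `delay`, `split_width`, and `split_cycles` are hypothetical names for illustration, and any sub-delay can be swapped for another split or for a base.

```python
def delay(samples, cycles):
    """Reference behavior: output at cycle t is input at cycle t - cycles (0 before that)."""
    return ([0] * cycles + list(samples))[:len(samples)]

def split_width(samples, cycles, m, low_impl, high_impl):
    """Width split: delay the lower m bits and the remaining upper bits in parallel."""
    mask = (1 << m) - 1
    low = low_impl([s & mask for s in samples], cycles)
    high = high_impl([s >> m for s in samples], cycles)
    return [(h << m) | l for h, l in zip(high, low)]

def split_cycles(samples, x, y, first_impl, second_impl):
    """Cycle split: a delay of x cycles followed by a delay of y cycles (x + y = cycles)."""
    return second_impl(first_impl(samples, x), y)

# 21-bit words delayed by 64 cycles: split cycles 32 + 32, then split the second half 20 + 1 bits.
samples = [(i * 2654435761) % (1 << 21) for i in range(200)]
nested = split_cycles(samples, 32, 32, delay,
                      lambda s, c: split_width(s, c, 20, delay, delay))
print(nested == delay(samples, 64))  # True
```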

Exploration: Delay Example
Exploration combines transformations and bases to create new implementations. Example: width=40, cycles=513, Arria 10 FPGA:
- 20,520 FFs: a huge amount of registers for most use cases.
- 2 M20Ks (512x40 bits each, 50.1% utilization): 511 words are wasted, which wastes many RAM bits.
- 40 FFs + 1 M20K (100% utilization): one row of 40 FFs plus a 512-cycle M20K, an attractive balance of resources.
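The three resource counts follow from simple arithmetic. This Python sketch assumes, as the slide does, that each M20K is used in its 512-word x 40-bit configuration. The function names are hypothetical.

```python
import math

# Assumption taken from the slide: each M20K is configured as 512 words x 40 bits.
M20K_DEPTH, M20K_WIDTH = 512, 40

def ff_only(width, cycles):
    """Flip-flop base: one FF per bit per cycle."""
    return {"FF": width * cycles, "M20K": 0}

def m20k_only(width, cycles):
    """Memory base: tile M20Ks across width and depth; report bit utilization."""
    blocks = math.ceil(width / M20K_WIDTH) * math.ceil(cycles / M20K_DEPTH)
    util = (width * cycles) / (blocks * M20K_DEPTH * M20K_WIDTH)
    return {"FF": 0, "M20K": blocks, "util": util}

def ffs_then_m20k(width, cycles, ff_cycles):
    """Cycle split: ff_cycles rows of FFs followed by an M20K delay for the rest."""
    ram = m20k_only(width, cycles - ff_cycles)
    return {"FF": width * ff_cycles, "M20K": ram["M20K"], "util": ram["util"]}

print(ff_only(40, 513))             # {'FF': 20520, 'M20K': 0}
print(m20k_only(40, 513))           # 2 M20Ks at about 50.1% utilization
print(ffs_then_m20k(40, 513, 1))    # {'FF': 40, 'M20K': 1, 'util': 1.0}
```

The single extra cycle beyond 512 is what forces a second M20K. Peeling that one cycle off into 40 FFs lets the remaining 512 cycles fill one M20K exactly.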

Exploration: Delay Example
width=20, cycles=64, Arria 10 FPGA:
- 1 M20K (1024x20 bits, 6.25% utilization): 960 words are wasted.
- 2 MLABs (32x20 bits each, 100% utilization): full utilization of the smaller MLAB resources.
- 1,280 FFs.
- 640 FFs + 1 MLAB (100% utilization): trades off FFs to save MLABs, with a mix of FFs and MLABs at 100% utilization.

Exploration: Delay Example
width=21, cycles=64, Arria 10 FPGA:
- 1 M20K (6.6% utilization).
- 1,344 FFs.
- 64 FFs + 2 MLABs (100% utilization): 20 bits fill 2 MLABs, and the remaining bit uses 64 FFs.
- 704 FFs + 1 MLAB (100% utilization).
- 4 MLABs (52.5% utilization): the MLABs that hold the 21st bit leave 19 columns wasted.
Summary:
- There is rarely an optimal implementation across all use cases. Elastic IP finds the best implementation based on the requested goals and constraints.
- Synthesis tools tend to use either solely FFs or solely RAM, which usually causes poor RAM utilization.
- Manual optimization of each delay instance isn't practical.
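The Python sketch below is a small design-space explorer. It combines the FF, MLAB, and M20K bases with the width and cycle splits, and it reproduces the width=20 and width=21 options above.

It makes several assumptions that are not the elastic IP explorer:
- MLABs are 32x20 bits.
- M20Ks can be used as 512x40, 1024x20, or 2048x10.
- Splits are tried only at multiples of the MLAB width and depth, to keep the search tiny.
- The `best` request (minimize FFs under RAM budgets) is a hypothetical stand-in for the real goals.

```python
import math
from functools import lru_cache

MLAB_DEPTH, MLAB_WIDTH = 32, 20                     # MLAB as 32 x 20 bits (from the slides)
M20K_SHAPES = [(512, 40), (1024, 20), (2048, 10)]   # assumed M20K depth x width modes

def add(a, b):
    """Sum two (FFs, MLABs, M20Ks) resource tuples."""
    return tuple(x + y for x, y in zip(a, b))

@lru_cache(maxsize=None)
def explore(width, cycles):
    """All (FFs, MLABs, M20Ks) costs reachable from the bases plus both split transformations."""
    options = {
        (width * cycles, 0, 0),                                                  # FF base
        (0, math.ceil(width / MLAB_WIDTH) * math.ceil(cycles / MLAB_DEPTH), 0),  # MLAB base
        (0, 0, min(math.ceil(width / w) * math.ceil(cycles / d) for d, w in M20K_SHAPES)),
    }
    # Width splits, restricted (simplifying assumption) to multiples of the MLAB width.
    for m in range(MLAB_WIDTH, width, MLAB_WIDTH):
        options |= {add(a, b) for a in explore(m, cycles) for b in explore(width - m, cycles)}
    # Cycle splits, restricted to multiples of the MLAB depth.
    for x in range(MLAB_DEPTH, cycles, MLAB_DEPTH):
        options |= {add(a, b) for a in explore(width, x) for b in explore(width, cycles - x)}
    return frozenset(options)

def best(options, max_mlabs, max_m20ks):
    """Example request: minimize FFs subject to RAM budgets (ties broken by fewer MLABs)."""
    feasible = [o for o in options if o[1] <= max_mlabs and o[2] <= max_m20ks]
    return min(feasible, key=lambda o: (o[0], o[1]))

print(best(explore(21, 64), max_mlabs=2, max_m20ks=0))  # (64, 2, 0)
print(best(explore(21, 64), max_mlabs=1, max_m20ks=0))  # (704, 1, 0)
```

Tightening the MLAB budget from 2 to 1 moves the answer from 64 FFs + 2 MLABs to 704 FFs + 1 MLAB. This illustrates the point that no single implementation is best for every use case.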

Design Process w/ Elastic IP
Request to the elastic design-space explorer: "Delay this 21-bit signal by 64 cycles to maximize clock frequency without using more than 2 block RAMs."
[Figure: the explorer's chosen implementation as a tree of connector and delay nodes, encoded as a configuration string]
The configuration string is passed to the delay entity as a generic:

```vhdl
ELASTIC_DELAY : entity work.delay
  generic map (
    width          => 21,
    cycles         => 64,
    elastic_config => "02150AA...."
  )
  port map (
    clk => clk,
    ...
  );
```

Configuration string: 02150AA03141A6040F07605061720 000222070C152080B022090A12100 00411000021100002210D0E0320000 431000033110111740000334121304 400002420000342000043600002A4
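The slide does not document how `elastic_config` encodes the implementation tree. The Python sketch below is therefore purely hypothetical and does not reproduce the real string format. It shows one way a tree of connector (split) and delay nodes could be flattened into a string by pre-order traversal and rebuilt afterwards. `Node`, `encode`, `decode`, and the token syntax are all invented for illustration.

```python
import re
from dataclasses import dataclass, field

@dataclass
class Node:
    """Hypothetical implementation-tree node: a connector (split) or a delay leaf."""
    kind: str                 # "C" = connector with two children, "D" = delay leaf
    width: int
    cycles: int
    base: str = ""            # delay leaves only, e.g. "FF" or "MLAB"
    children: list = field(default_factory=list)

def encode(node):
    """Pre-order traversal: each node becomes one ';'-terminated token."""
    token = f"{node.kind}{node.width}x{node.cycles}{node.base};"
    return token + "".join(encode(child) for child in node.children)

TOKEN = re.compile(r"([CD])(\d+)x(\d+)([A-Z0-9]*);")

def decode(text):
    """Rebuild the tree; connectors always consume exactly two subtrees."""
    tokens = iter(TOKEN.findall(text))
    def parse():
        kind, width, cycles, base = next(tokens)
        node = Node(kind, int(width), int(cycles), base)
        if kind == "C":
            node.children = [parse(), parse()]
        return node
    return parse()

# A 704 FFs + 1 MLAB option: 32 cycles of FFs, then a 20-bit MLAB beside a 1-bit FF delay.
tree = Node("C", 21, 64, children=[
    Node("D", 21, 32, "FF"),
    Node("C", 21, 32, children=[Node("D", 20, 32, "MLAB"), Node("D", 1, 32, "FF")]),
])
print(encode(tree))   # C21x64;D21x32FF;C21x32;D20x32MLAB;D1x32FF;
```

Because the string is an ordinary VHDL generic, the same delay entity can be instantiated with a different configuration per instance. No changes to its port interface are needed.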

Multiply-Add Example
Multiply n pairs of inputs (x and y) and add the results to produce a single output.
- Bases: a multiplier, a multiply-add, and multipliers feeding an adder tree (with an optional output register).
- Transformation: multiply-add x[0:n-2] and y[0:n-2] with another elastic multiply-add, multiply x[n-1] by y[n-1], and add the two results, using delays to align the operands.
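Both the recursive transformation and the adder-tree base compute the same sum of products; they differ only in structure. The Python sketch below is a functional model of the two. The function names are hypothetical, and the tree also reports how many adder levels it needs.

```python
def multiply_add_recursive(x, y):
    """Transformation: multiply-add the first n-1 pairs recursively, then add x[n-1]*y[n-1]."""
    assert len(x) == len(y) >= 1
    if len(x) == 1:
        return x[0] * y[0]                      # base case: a single multiplier
    return multiply_add_recursive(x[:-1], y[:-1]) + x[-1] * y[-1]

def multiply_add_tree(x, y):
    """Base: one multiplier per pair feeding a balanced adder tree; returns (sum, tree levels)."""
    values = [a * b for a, b in zip(x, y)]
    levels = 0
    while len(values) > 1:
        summed = [values[i] + values[i + 1] for i in range(0, len(values) - 1, 2)]
        if len(values) % 2:
            summed.append(values[-1])           # odd value passes through to the next level
        values = summed
        levels += 1
    return values[0], levels

print(multiply_add_recursive([1, 2, 3, 4], [5, 6, 7, 8]))  # 70
print(multiply_add_tree([1, 2, 3, 4], [5, 6, 7, 8]))       # (70, 2)
```

The sketch uses integers so both structures give identical results. With 32-bit floats, the two structures add in different orders, so their results can differ in the last bits.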

Multiply-Add Exploration
Example specialized implementations for 32-bit floats on Arria 10 (n = 4):
- Standard implementation (multipliers feeding an adder tree)
  - Advantages: each node maps to an Arria 10 DSP; requires only DSPs.
  - Disadvantages: requires 2n-1 = 7 DSPs; uses reconfigurable interconnect (slower clocks).
- Specialized implementation 1 (chained DSPs with delays)
  - Advantages: enables a multiply-add in a single DSP (a low-level Arria/Stratix 10 optimization); DSPs are chained directly together without reconfigurable interconnect (faster clocks); requires n = 4 DSPs (~2x improvement).
  - Disadvantages: 2n-4 delay instances and increased latency; large values of n will require many RAM resources for long delays.
- Specialized implementation 2 (DSP chains combined by an adder tree)
  - Advantages: saves RAM resources by keeping delays short.
  - Disadvantages: the adder tree requires 1 DSP per add, but elastic IP can automatically use LUTs too.
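The "~2x improvement" follows from the DSP counts. The standard version needs 2n - 1 DSPs versus n for the chained version, so the ratio is 2 - 1/n: 1.75x at n = 4, approaching 2x as n grows. The Python sketch below encodes the slide's counts. The function names are hypothetical, and the 2n - 4 delay count is taken from the slide as stated.

```python
def standard_dsps(n):
    """Standard implementation: n multipliers plus an (n-1)-adder tree, one DSP per node."""
    return n + (n - 1)

def chained_dsps(n):
    """Specialized implementation 1: one fused multiply-add DSP per input pair."""
    return n

def chained_delay_instances(n):
    """Delay instances for the chained version (count as stated on the slide)."""
    assert n >= 2
    return 2 * n - 4

# The ratio (2n - 1) / n = 2 - 1/n approaches 2 as n grows.
for n in (4, 16, 64):
    print(n, standard_dsps(n), chained_dsps(n), standard_dsps(n) / chained_dsps(n))
```

The delay count grows linearly with n, which is why specialized implementation 1 eventually costs RAM for long delays, and why implementation 2 keeps the chains short.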

Multiply-Add Exploration
Numerous advantages:
1. Automatic optimization for different input sizes.
2. Automatic optimization for integer, fixed-point, and floating-point types. Each requires different optimizations that depend on the targeted FPGA.
3. Automatic adaptation to the DSP resources on different FPGAs: Xilinx DSP48E1 vs. Xilinx DSP48E2 vs. Arria/Stratix 10 DSP vs. MAX10 DSP.
   - Each provides different resource combinations with different latencies, so each requires different optimizations.
   - Manual optimization requires low-level knowledge of each FPGA and reduces the portability of code.
4. Automatic use of multiple FPGA resource types, e.g., use DSPs initially and then switch to LUTs when DSPs are exhausted.
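Point 4 can be sketched as a greedy allocation. Each operation gets a DSP while any remain and falls back to LUT logic afterwards.

The Python sketch below is a hypothetical illustration, not the elastic IP allocator. It assumes operations arrive as `(name, kind)` pairs. It also assumes multipliers should receive DSPs before adders, because a multiplier built from LUTs usually costs far more than an adder.

```python
def allocate(ops, dsp_budget):
    """Map operations to DSPs until the budget runs out, then fall back to LUT logic.

    ops is a list of (name, kind) pairs with kind in {"mul", "add"}. Multipliers are
    given DSPs first (assumption: a multiplier costs far more LUTs than an adder).
    """
    ordered = sorted(ops, key=lambda op: op[1] != "mul")   # stable sort: multipliers first
    mapping = {}
    for name, _kind in ordered:
        if dsp_budget > 0:
            mapping[name] = "DSP"
            dsp_budget -= 1
        else:
            mapping[name] = "LUT"
    return mapping

ops = [("add0", "add"), ("mul0", "mul"), ("mul1", "mul"), ("add1", "add")]
print(allocate(ops, dsp_budget=3))  # mul0, mul1, add0 -> DSP; add1 -> LUT
```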

Logical-And Example
Create a 1-bit output by logically ANDing n single-bit inputs together.
- Wide ANDs can create multiple-LUT paths between registers, which is a potential timing-closure bottleneck.
- Parameters: n is the total number of inputs in the AND operation; b is the max number of inputs for each AND instance.
- Base ($n \le b$): a single AND gate over inputs[n-1:0].
- Transformation ($n > b$): divide the n-bit AND into $\lceil n/b \rceil$ b-bit AND gates (inputs[b-1:0] through inputs[n-1:n-b]). Register their outputs with flip-flops, then recursively AND the resulting $\lceil n/b \rceil$ bits with another elastic AND.
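Does the recursive split compute the correct AND, and what does it cost? The Python sketch below models the base and the transformation functionally and counts register stages (latency) and AND gates. It assumes the base gate's output is also registered, matching the slides' goal of at most one LUT between registers. `elastic_and` is a hypothetical name.

```python
def elastic_and(bits, b):
    """Recursive wide AND; returns (result, latency in cycles, number of gates).

    Base (n <= b): one AND gate with a flip-flop on its output.
    Transformation (n > b): ceil(n/b) registered b-input ANDs, then AND their
    outputs with another elastic AND.
    """
    assert b >= 2 and len(bits) >= 1
    if len(bits) <= b:
        return all(bits), 1, 1
    partial = [all(bits[i:i + b]) for i in range(0, len(bits), b)]
    result, latency, gates = elastic_and(partial, b)
    return result, latency + 1, gates + len(partial)

print(elastic_and([1] * 24, 4))  # (True, 3, 9)
print(elastic_and([1] * 24, 6))  # (True, 2, 5)
```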

Logical-And Exploration
Example goal: maximize clock frequency without a latency constraint, shown for n=24 with b=4 and with b=6.
- Exploration adapts b to the number of LUT inputs, so it automatically adapts to different FPGAs. Doing this by hand is tedious.
- The max delay between registers is always 1 LUT, so the logic delay is constant for any n.
- Elastic IP can also optimize for other goals:
  - Maximize clock frequency for a latency constraint.
  - Minimize latency with max delay <= 2 LUTs.
[Figure: AND trees for n=24 with b=4 and b=6, each gate followed by an output flip-flop]
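The goals above trade latency against LUT levels per register stage. The Python sketch below works through that tradeoff for a hypothetical 6-input LUT, which is an assumption here.

It relies on one fact: k levels of L-input LUTs can AND L**k signals between two registers. Maximizing the clock under a latency limit then means finding the smallest k that still meets the limit. The function names are hypothetical.

```python
import math

def and_latency(n, fan_in):
    """Register stages needed to AND n inputs when each stage ANDs up to fan_in signals."""
    latency = 0
    while n > 1:
        n = math.ceil(n / fan_in)
        latency += 1
    return latency

def min_lut_levels(n, lut_inputs, max_latency):
    """Fewest LUT levels per stage (fastest clock) that still meets a latency limit.

    k levels of lut_inputs-input LUTs can AND lut_inputs**k signals in one stage.
    """
    assert max_latency >= 1 or n <= 1
    k = 1
    while and_latency(n, lut_inputs ** k) > max_latency:
        k += 1
    return k

print(and_latency(24, 4), and_latency(24, 6))    # 3 2
print(min_lut_levels(1000, 6, max_latency=2))    # 2
```

With one LUT per stage, latency grows only logarithmically in n while the logic delay per stage stays fixed. Allowing 2 LUT levels (36 inputs per stage with 6-input LUTs) cuts the latency for n=24 to a single cycle.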

Conclusion
- Thorough optimization of applications for emerging FPGAs is becoming infeasible:
  - Huge design space
  - Lengthy compile times
  - Requires low-level knowledge of each FPGA
  - Reduces portability of code
- Elastic IP allows a designer to request an IP core optimized for a specific use case.
- Enables automatic optimization for:
  - Different input sizes
  - Data types: integer, fixed-point, and floating point
  - Different constraints and optimization goals
  - Different resource mixes
- A future presentation will cover how elastic IP exploration works.
- If interested in collaborating, please send email to gstitt@ufl.edu