End-to-End Speech Translation using XSTNet

An overview of end-to-end (E2E) speech translation (ST) with the XSTNet model. It discusses the challenges of training E2E ST models and introduces XSTNet's main features: support for audio or text input, a shared Transformer module, self-supervised audio representation learning, and more. It also covers the problem formulation, the speech encoder configuration, and the encoder-decoder with modality and language indicators.

Presentation Transcript

End-to-end Speech Translation via Cross-modal Progressive Training

Rong Ye, Mingxuan Wang, Lei Li

ByteDance AI Lab, Shanghai, China

Speaker: Yu-Chen Kuan

Outline

- Introduction
- XSTNet
- Experiments
- Conclusion

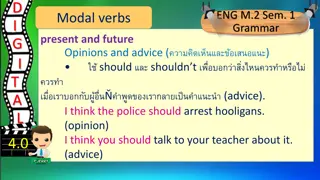

Introduction

- Training E2E ST models is challenging because parallel speech-text data is limited.
- Multi-task supervision with speech-transcript-translation triple data.
- Pre-training with external large-scale MT parallel text data.
- The proposed Cross Speech-Text Network (XSTNet) is an end-to-end ST model that jointly trains the ST, ASR and MT tasks.

XSTNet

- Supports either audio or text input.
- Shares a single Transformer module.
- Uses a self-supervised Wav2vec2.0 representation of the audio.
- Incorporates external large-scale MT data.
- Uses a progressive multi-task learning strategy.
- Follows the pre-training and fine-tuning paradigm.

Problem Formulation

- Speech-transcript-translation triples: $D = \{(s, x, y)\}$
- $D_{ASR} = \{(s, x)\}$, $D_{ST} = \{(s, y)\}$ and $D_{MT} = \{(x, y)\}$
- External MT dataset: $D_{MT\text{-}ext} = \{(x', y')\}$, with $|D_{MT\text{-}ext}| \gg |D|$
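The three task-specific datasets are projections of the same triples. A minimal sketch of that relationship, assuming each triple is stored as a Python tuple of (audio path, transcript, translation); the function name `split_triples` and the sample data are hypothetical and not from the slides:

```python
from typing import List, Tuple

# Assumed layout of one triple: (s, x, y) = (audio path, transcript, translation).
Triple = Tuple[str, str, str]


def split_triples(triples: List[Triple]):
    """Derive D_ASR, D_ST and D_MT from the triple set D."""
    d_asr = [(s, x) for s, x, _ in triples]  # speech -> transcript
    d_st = [(s, y) for s, _, y in triples]   # speech -> translation
    d_mt = [(x, y) for _, x, y in triples]   # transcript -> translation
    return d_asr, d_st, d_mt


# Toy example with a single hypothetical triple.
D = [("utt1.wav", "This is a book.", "C'est un livre.")]
d_asr, d_st, d_mt = split_triples(D)
```

The external set $D_{MT\text{-}ext}$ has no audio side, so it can only feed the MT task.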

Speech Encoder

- Wav2vec2.0, without fine-tuning: self-supervised pre-trained contextual audio representation $c = [c_1, \dots, c_T]$.
- Convolution layers: match the lengths of the audio representation and the text sequences.
  - Two 1-dimensional convolutional layers with stride 2 and GELU activation, reducing the time dimension by a factor of 4.
  - $e_s = \mathrm{CNN}(c)$, $e_s \in \mathbb{R}^{d \times T/4}$, with CNN kernel size 5 and hidden size $d = 512$.
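The factor-of-4 reduction follows from the standard 1-D convolution output-length formula applied twice. A small sketch of that arithmetic, assuming a padding of 2 on each side (the slides give only the kernel size and stride, so the padding value is an assumption):

```python
def conv1d_out_len(length: int, kernel: int = 5, stride: int = 2, padding: int = 2) -> int:
    """Output length of a 1-D convolution (dilation 1). padding=2 is an assumption."""
    return (length + 2 * padding - kernel) // stride + 1


def subsampled_len(num_frames: int, num_layers: int = 2) -> int:
    """Length of e_s after stacking the stride-2 convolution layers."""
    n = num_frames
    for _ in range(num_layers):
        n = conv1d_out_len(n)
    return n


# With T = 100 Wav2vec2.0 frames: 100 -> 50 -> 25, i.e. T / 4.
reduced = subsampled_len(100)
```

With a different padding the result can differ from exactly T/4 by a frame or two, which is why the factor is best read as approximate at the sequence boundaries.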

Encoder-Decoder with Modality and Language Indicators

- Different indicators [src_tag] distinguish the three tasks and the audio/text inputs.
- For audio input:
  - An extra [audio] token with embedding $e_{[audio]} \in \mathbb{R}^{d}$.
  - The embedding of the audio, $e \in \mathbb{R}^{d \times (T/4+1)}$, is the concatenation of $e_{[audio]}$ and $e_s$.
- For text input:
  - The language id symbol is put before the sentence: [en] This is a book.
- When decoding, the language id symbol serves as the initial token to predict the output text.
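The indicator scheme can be sketched with plain lists. This is an illustration only: the helper names, the toy dimension, and the En-Fr tag table are assumptions, and real embeddings would be learned tensors rather than constant lists.

```python
from typing import Dict, List, Tuple

D_MODEL = 4  # toy size for illustration; the slides use d = 512


def add_audio_tag(tag_emb: List[float], e_s: List[List[float]]) -> List[List[float]]:
    """Prepend e_[audio] to the T/4 vectors of e_s, giving T/4 + 1 vectors."""
    return [tag_emb] + e_s


def add_lang_tag(lang: str, tokens: List[str]) -> List[str]:
    """Put the language id symbol before the sentence."""
    return [f"[{lang}]"] + tokens


# Assumed tags for an En->Fr setup: (source indicator, first decoder token).
TASK_TAGS: Dict[str, Tuple[str, str]] = {
    "ST": ("[audio]", "[fr]"),   # English speech -> French text
    "ASR": ("[audio]", "[en]"),  # English speech -> English text
    "MT": ("[en]", "[fr]"),      # English text -> French text
}

e_s = [[0.0] * D_MODEL for _ in range(25)]      # stand-in for CNN(c), T/4 = 25
e = add_audio_tag([1.0] * D_MODEL, e_s)         # 26 vectors
text_in = add_lang_tag("en", ["This", "is", "a", "book", "."])
```

Because the task is identified only by the source indicator and the first decoder token, the same encoder-decoder parameters can serve all three tasks.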

Progressive Multi-task Training

- Large-scale MT pre-training: first pre-train the Transformer encoder-decoder module using external MT data.

Progressive Multi-task Training

- Multi-task fine-tuning: combine the external MT data with the ST, ASR, and MT parallel data from the in-domain speech translation dataset, and jointly optimize the negative log-likelihood loss.
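The two stages differ only in which datasets feed the shared model. A rough sketch of the schedule and of a joint negative log-likelihood, assuming the loss is a plain unweighted sum over tasks (the slides do not state any task weighting) and using hypothetical function names:

```python
import math
from typing import Dict, List


def stage_tasks(stage: str) -> List[str]:
    """Datasets used in each stage of progressive multi-task training."""
    if stage == "pretrain":
        return ["MT-ext"]                      # external MT data only
    if stage == "finetune":
        return ["MT-ext", "ST", "ASR", "MT"]   # keep external MT, add in-domain tasks
    raise ValueError(f"unknown stage: {stage}")


def joint_nll(token_probs_by_task: Dict[str, List[float]]) -> float:
    """Sum of -log p over the target tokens of every task (unweighted, an assumption)."""
    return -sum(
        math.log(p)
        for probs in token_probs_by_task.values()
        for p in probs
    )


# Toy probabilities the model assigns to the reference tokens of each task.
loss = joint_nll({"ST": [0.5], "ASR": [0.5], "MT": [0.5], "MT-ext": [0.5]})
```

Note that the fine-tuning stage still includes the external MT data rather than replacing it.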

Dataset

- ST datasets:
  - MuST-C
  - Augmented LibriSpeech En-Fr
- MT datasets (external):
  - WMT
  - OPUS100
  - OpenSubtitles

Experimental Setups

- SentencePiece subword units with a vocabulary size of 10k.
- Save the checkpoint with the best BLEU on the dev set and average the last 10 checkpoints.
- Beam size of 10 for decoding.
- BLEU is reported with sacreBLEU.
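Checkpoint averaging takes the element-wise mean of each parameter over the most recent checkpoints. A minimal sketch, assuming each checkpoint is a dict from parameter name to a flat list of floats; real checkpoints hold tensors, and the function name is hypothetical:

```python
from typing import Dict, List

Checkpoint = Dict[str, List[float]]


def average_checkpoints(checkpoints: List[Checkpoint], last_n: int = 10) -> Checkpoint:
    """Element-wise mean of every parameter over the last `last_n` checkpoints."""
    if not checkpoints:
        raise ValueError("no checkpoints to average")
    recent = checkpoints[-last_n:]
    averaged: Checkpoint = {}
    for name in recent[0]:
        # zip(*) groups the i-th value of this parameter across all checkpoints.
        columns = zip(*(ckpt[name] for ckpt in recent))
        averaged[name] = [sum(col) / len(recent) for col in columns]
    return averaged


# Twelve toy checkpoints; only the last ten (i = 3..12) contribute.
ckpts = [{"w": [float(i), 2.0 * i]} for i in range(1, 13)]
avg = average_checkpoints(ckpts)
```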

MuST-C

- Wav2vec2+Transformer model (abbreviated W-Transf.) with the same configuration as XSTNet.
- Wav2vec2.0 vs. Fbank (W-Transf. & Transformer ST baseline)
- Multi-task vs. ST-only (XSTNet (Base) & W-Transf.)
- Additional MT data

The Influence of Training Procedure

- MT pre-training is effective.
- Don't stop training on the data from the previous stage.
- Multi-task fine-tuning is preferred.

Convergence Analysis

- Progressive multi-task training converges faster.
- Multi-task training generalizes better.

Conclusion

- Cross Speech-Text Network (XSTNet): an extremely concise model that accepts bi-modal inputs and jointly trains the ST, ASR and MT tasks.
- Progressive multi-task training algorithm.
- Significant improvement on the speech-to-text translation task compared with SOTA models.