Enhancing Data Mining Techniques for Sequential Pattern Analysis

Explore the benefits of feature creation in problem-solving scenarios, calculate the Silhouette coefficient for a clustering example, delve into the APRIORI property in sequence mining, and analyze an Apriori-style algorithm for sequence mining with generated 3-sequences.

Download Presentation

Please find below an Image/Link to download the presentation.

The content on the website is provided AS IS for your information and personal use only. It may not be sold, licensed, or shared on other websites without obtaining consent from the author. If you encounter any issues during the download, it is possible that the publisher has removed the file from their server.

You are allowed to download the files provided on this website for personal or commercial use, subject to the condition that they are used lawfully. All files are the property of their respective owners.

The content on the website is provided AS IS for your information and personal use only. It may not be sold, licensed, or shared on other websites without obtaining consent from the author.

E N D

Presentation Transcript

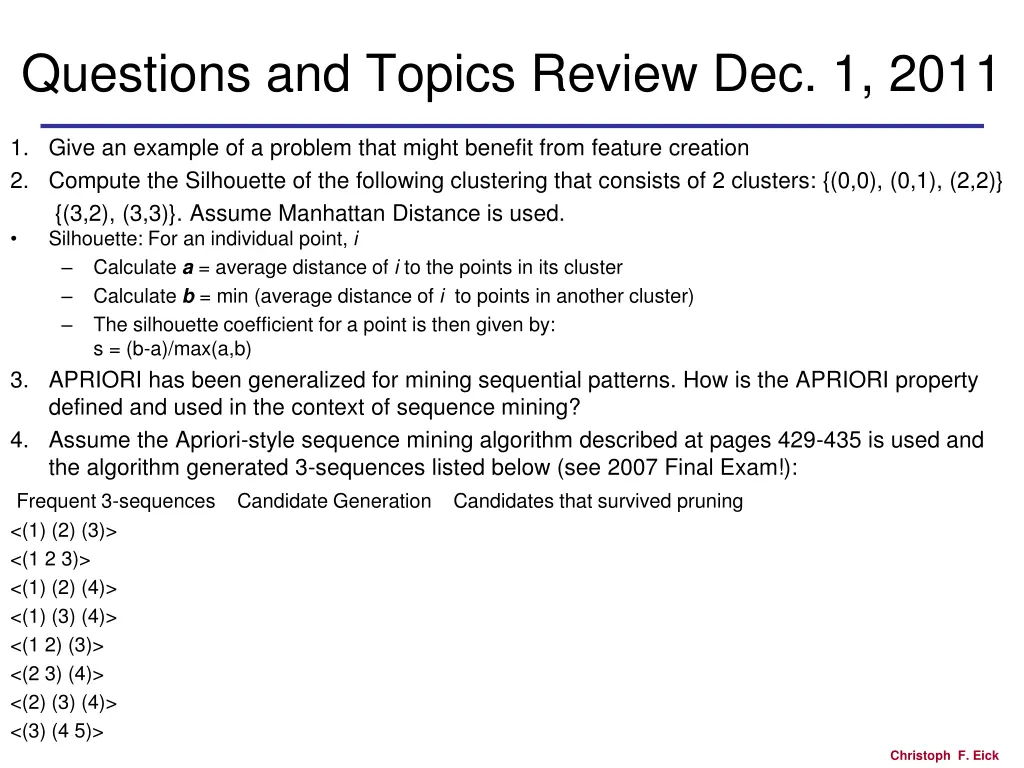

Questions and Topics Review Dec. 1, 2011 1. Give an example of a problem that might benefit from feature creation 2. Compute the Silhouette of the following clustering that consists of 2 clusters: {(0,0), (0,1), (2,2)} {(3,2), (3,3)}. Assume Manhattan Distance is used. Silhouette: For an individual point, i Calculate a = average distance of i to the points in its cluster Calculate b = min (average distance of i to points in another cluster) The silhouette coefficient for a point is then given by: s = (b-a)/max(a,b) 3. APRIORI has been generalized for mining sequential patterns. How is the APRIORI property defined and used in the context of sequence mining? 4. Assume the Apriori-style sequence mining algorithm described at pages 429-435 is used and the algorithm generated 3-sequences listed below (see 2007 Final Exam!): Frequent 3-sequences Candidate Generation Candidates that survived pruning <(1) (2) (3)> <(1 2 3)> <(1) (2) (4)> <(1) (3) (4)> <(1 2) (3)> <(2 3) (4)> <(2) (3) (4)> <(3) (4 5)> Christoph F. Eick

Questions and Topics Review Dec. 1, 2011 1. Give an example of a problem that might benefit from feature creation 2. Compute the Silhouette of the following clustering that consists of 2 clusters: {(0,0), (0,1), (2,2)} {(3,2), (3,3)}. Silhouette: For an individual point, i Calculate a = average distance of i to the points in its cluster Calculate b = min (average distance of i to points in another cluster) The silhouette coefficient for a point is then given by: s = (b-a)/max(a,b) 3. APRIORI has been generalized for mining sequential patterns. How is the APRIORI property defined and used in the context of sequence mining? Property: see text book [2] Use: Combine sequences that a frequent and which agree in all elements except the first element of the first sequence, and the last element of the second sequence. Prune sequences if not all subsequences that can be obtained by removing a single element are frequent. [3] 3. Assume the Apriori-style sequence mining algorithm described at pages 429-435 is used and the algorithm generated 3-sequences listed below: Frequent 3-sequences Candidate Generation Candidates that survived pruning <(1) (2) (3)> <(1 2 3)> <(1) (2) (4)> Christoph F. Eick

Questions and Topics Review Dec. 1, 2011 3. Assume the Apriori-style sequence mining algorithm described at pages 429-435 is used and the algorithm generated 3-sequences listed below: Frequent 3-sequences Candidate Generation Candidates that survived pruning 3) Association Rule and Sequence Mining [15] a) Assume the Apriori-style sequence mining algorithm described at pages 429-435 is used and the algorithm generated 3-sequences listed below: Candidates that survived pruning: <(1) (2) (3) (4)> Candidate Generation: <(1) (2) (3) (4)> survived <(1 2 3) (4)> pruned, (1 3) (4) is infrequent <(1) (3) (4 5)> pruned (1) (4 5) is infrequent <(1 2) (3) (4)> pruned, (1 2) (4) is infrequent <(2 3) (4 5)> pruned, (2) (4 5) is infrequent <(2) (3) (4 5)> pruned, (2) (4 5) is infrequent Christoph F. Eick

Questions and Topics Review Dec. 1, 2011 5. The Top 10 Data Mining Algorithms article says about k-means The greedy-descent nature of k- means on a non-convex cost also implies that the convergence is only to a local optimum, and indeed the algorithm is typically quite sensitive to the initial centroid locations The local minima problem can be countered to some extent by running the algorithm multiple times with different initial centroids. Explain why the suggestion in boldface is a potential solution to the local maximum problem. Propose a modification of the k-means algorithm that uses the suggestion! Christoph F. Eick

5. The Top 10 Data Mining Algorithms article says about k-means The greedy-descent nature of k- means on a non-convex cost also implies that the convergence is only to a local optimum, and indeed the algorithm is typically quite sensitive to the initial centroid locations The local minima problem can be countered to some extent by running the algorithm multiple times with different initial centroids. Explain why the suggestion in boldface is a potential solution to the local maximum problem. Propose a modification of the k-means algorithm that uses the suggestion! Using k-means with different seeds will find different local maxima of K-mean s objective function; therefore, running k-means with different initial seeds that are in proximity of different local maxima will produce alternative results.[2] Run k-means with different seeds multiple times (e.g. 20 times), then compute the SSE of each clustering, return the clustering with the lowest SSE value as the result. [3] Christoph F. Eick