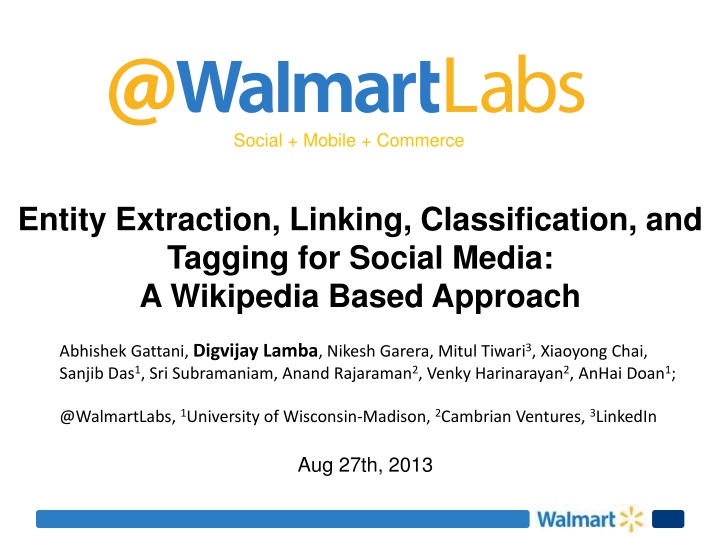

Entity Extraction and Contextual Annotation in Social Media Analysis

This presentation describes an approach to entity extraction, linking, classification, and tagging for social media that uses Wikipedia as a key resource. It covers the challenges of processing social media data, such as ungrammatical content and a high volume of updates, and explains why contextual annotation matters for understanding social conversations. The use cases, built on a knowledge base that spans a wide range of topics, include social mining and event monitoring on platforms like Twitter. The examples show how real-time user and social contexts improve the analysis of topics and conversations.

Presentation Transcript

Social + Mobile + Commerce

Entity Extraction, Linking, Classification, and Tagging for Social Media: A Wikipedia Based Approach

Abhishek Gattani, Digvijay Lamba, Nikesh Garera, Mitul Tiwari (3), Xiaoyong Chai, Sanjib Das (1), Sri Subramaniam, Anand Rajaraman (2), Venky Harinarayan (2), AnHai Doan (1)

@WalmartLabs; (1) University of Wisconsin-Madison; (2) Cambrian Ventures; (3) LinkedIn

Aug 27th, 2013

The Problem

Example: "Obama gave an immigration speech while on vacation in Hawaii"

- Entity Extraction: "Obama" is a person, "Hawaii" is a location
- Entity Linking: Obama -> en.wikipedia.org/wiki/Barack_Obama; Hawaii -> en.wikipedia.org/wiki/Hawaii
- Classification: Politics, Travel
- Tagging: Politics, Travel, Immigration, President Obama, Hawaii

On Social Media Data

- Short sentences: ungrammatical, misspelled, lots of acronyms
- Social context: meaning depends on previous conversation/interests, e.g. "Go Giants!!"
- Large scale: tens of thousands of updates a second
- Lots of topics: new topics and themes every day, and a large scale of topics

Why? Use Cases

Used extensively at Kosmix and later at @WalmartLabs:

- Twitter event monitoring
- In-context ads
- User query parsing
- Product search and recommendations
- Social mining

Use cases:

- Central topic detection for a web page or tweet
- Getting a stream of tweets/messages about a topic

Small team at scale:

- About 3 engineers at a time
- Processing the entire Twitter firehose

Based on a Knowledge Base

- Global: covers a wide range of topics. Includes WordNet, Wikipedia, Chrome, Adam, MusicBrainz, Yahoo Stocks, etc.
- Taxonomy: the Wikipedia graph converted to a hierarchical taxonomy with IsA edges, which are transitive
- Large: 6.5 million hierarchical concepts with 165 million relationships
- Real time: constantly updated from sources, analyst curation, and event detection
- Rich: synonyms, homonyms, relationships, etc.
- Published: "Building, maintaining, and using knowledge bases: A report from the trenches." In SIGMOD, 2013.
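Because the IsA edges are transitive, every concept implicitly belongs to all of its ancestors in the taxonomy. The following is a minimal sketch of that lookup, assuming a toy child-to-parents mapping; the `IS_A` data and the `ancestors` helper are hypothetical illustrations, not part of the actual knowledge base.

```python
from collections import deque

# Toy IsA edges (child -> parents). Hypothetical data for illustration only.
IS_A = {
    "Barack Obama": ["Presidents", "Lawyers"],
    "Presidents": ["Politicians"],
    "Politicians": ["People"],
    "Lawyers": ["People"],
}

def ancestors(node, is_a=IS_A):
    """Return every concept reachable from `node` by following IsA edges."""
    seen = set()
    queue = deque(is_a.get(node, []))
    while queue:
        parent = queue.popleft()
        if parent in seen:
            continue  # a concept can be reached through several lineages
        seen.add(parent)
        queue.extend(is_a.get(parent, []))
    return seen

print(sorted(ancestors("Barack Obama")))
```

A breadth-first walk with a `seen` set is used here so that concepts reachable through more than one lineage are visited only once.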

Annotate with Contexts

Every social conversation takes place in a context that changes what it means.

- A real-time user context: what topics does this user talk about?
- A real-time social context: what topics usually appear in the context of a hashtag, domain, or KB node?
- A web context: what are the topics in a link in a tweet? What are the topics in a KB node's Wiki page?
- Compute the context at scale
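One simple way to picture a user or social context is as a running count of the topics seen for a key (a user handle, hashtag, or domain). The sketch below assumes exactly that; the `ContextStore` class is a hypothetical illustration and says nothing about how the production system stores or decays contexts.

```python
from collections import Counter, defaultdict

class ContextStore:
    """Hypothetical in-memory store: key (user, hashtag, domain) -> topic counts."""

    def __init__(self):
        self._counts = defaultdict(Counter)

    def update(self, key, topics):
        """Record the topics tagged on one more message for this key."""
        self._counts[key].update(topics)

    def top(self, key, k=3):
        """Return the k most frequent topics seen so far for this key."""
        return [topic for topic, _ in self._counts[key].most_common(k)]

store = ContextStore()
store.update("@Whitehouse", ["Barack Obama", "Housing Market"])
store.update("@Whitehouse", ["Barack Obama", "Homeownership", "Housing Market"])
store.update("@Whitehouse", ["Barack Obama"])
print(store.top("@Whitehouse", k=2))
```

A real-time version would also need to expire old topics, which this sketch leaves out.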

Example Contexts

- Barack Obama
  - Social: Putin, Russia, White House, SOPA, Syria, Homeownership, Immigration, Edward Snowden, Al Qaeda
  - Web: President, White House, Senate, Illinois, Democratic, United States, US Military, War, Michelle Obama, Lawyer, African American
- www.whitehouse.gov
  - Social: Petition, Barack Obama, Change, Healthcare, SOPA
- #Politics
  - Social: Barack Obama, Russia, Rick Scott, State Dept, Egypt, Snowden, War, Washington, House of Representatives
- @Whitehouse
  - User: Barack Obama, Housing Market, Homeownership, Mortgage Rates, Phoenix, Americans, Middle Class Families

Key Differentiators: Why Does It Work?

- The knowledge base
- Interleaving several problems
- Use of context
- Scale
- Rule based

How: First Find Candidate Mentions

Input tweet: "RT Stephen lets watch. Politics of Love is about Obama's election @EricSu"

- Step 1: Pre-process (clean up the tweet)
  "Stephen lets watch. Politics of Love is about Obama's election"
- Step 2: Find mentions (everything in the KB, plus detectors)
  ["Stephen", "lets", "watch", "Politics", "Politics of Love", "is", "about", "Obama", "Election"]
- Step 3: Initial rules (remove obvious bad cases)
  ["Stephen", "watch", "Politics", "Politics of Love", "Obama", "Election"]
- Step 4: Initial scoring (quick and dirty)
  ["Obama": 10, "Politics of Love": 9, "Stephen": 7, "watch": 7, "Politics": 6, "Election": 6]
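Steps 1 to 3 can be sketched as a dictionary lookup over word n-grams. This is a minimal illustration under stated assumptions: the `KB_NAMES` set, the `STOP_WORDS` rule, and the three-word n-gram limit are hypothetical stand-ins for the real knowledge base, detectors, and rules, and the quick scoring of Step 4 is left out because the slides do not describe its heuristics.

```python
import re

# Hypothetical toy KB of known surface forms (lowercased).
KB_NAMES = {"stephen", "lets", "watch", "politics", "politics of love",
            "is", "about", "obama", "election"}
# Hypothetical stand-in for the "remove obvious bad cases" rules.
STOP_WORDS = {"lets", "is", "about"}
MAX_NGRAM = 3  # assumed limit on mention length, in words

def preprocess(tweet):
    """Step 1: drop a leading retweet marker and any @handles."""
    tweet = re.sub(r"^RT\s+", "", tweet)
    tweet = re.sub(r"@\w+", "", tweet)
    return re.sub(r"\s+", " ", tweet).strip()

def find_mentions(text, kb=KB_NAMES):
    """Step 2: collect every n-gram of up to MAX_NGRAM words found in the KB."""
    words = re.findall(r"[A-Za-z]+", text)
    found = []
    for n in range(1, MAX_NGRAM + 1):
        for i in range(len(words) - n + 1):
            gram = " ".join(words[i:i + n])
            if gram.lower() in kb and gram not in found:
                found.append(gram)
    return found

def apply_initial_rules(mentions):
    """Step 3: remove obvious bad cases (here, only stop words)."""
    return [m for m in mentions if m.lower() not in STOP_WORDS]

tweet = "RT Stephen lets watch. Politics of Love is about Obama's election @EricSu"
cleaned = preprocess(tweet)
print(cleaned)
print(apply_initial_rules(find_mentions(cleaned)))
```

Overlapping candidates such as "Politics" and "Politics of Love" are both kept at this stage; choosing between them is deferred to the later scoring and rule steps.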

How: Add Mention Features

- Step 5: Tag and classify (quick and dirty)
  - "Obama": Presidents, Politicians, People; Politics, Places, Geography
  - "Politics of Love": Movies, Political Movies, Entertainment, Politics
  - "Stephen": Names, People
  - "watch": Verb, English Words, Language, Fashion Accessories, Clothing
  - "Politics": Politics
  - "Election": Political Events, Politics, Government
  - Tweet: Politics, People, Movies, Entertainment, etc.
- Step 6: Add features: contexts, similarity to the tweet, similarity to the user or website, popularity measures, is it interesting?, social signals

How: Finalize Mentions

- Step 7: Apply rules
  - "Obama": boost popular stuff and proper nouns
  - "Politics of Love": boost proper nouns; boost due to "watch"
  - "Stephen": delete out-of-context names
  - "watch": remove verbs
  - "Politics": boost tags which are also mentions
  - "Election": boost mentions in the central topic
- Step 8: Disambiguate (the KB has many meanings; pick one)
  - "Obama": Barack Obama. Based on popularity, context, and social popularity.
  - "watch": verb. Clothing is not in context.

Context is most important! We use many contexts for most success.
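The disambiguation in Step 8 can be pictured as choosing the meaning whose tags overlap most with the topics already in context. The sketch below assumes that simplification; the candidate meanings, their tag sets, and the `disambiguate` helper are hypothetical (the second meaning, a city in Japan, is added only to make the example ambiguous), and the real system combines several contexts with popularity signals.

```python
# Hypothetical candidate meanings for the surface form "Obama" with toy tags.
CANDIDATES = {
    "Barack Obama": {"Presidents", "Politicians", "People", "Politics"},
    "Obama, Fukui": {"Places", "Geography"},
}

def disambiguate(candidates, context_topics, popularity=None):
    """Pick the meaning whose tags overlap most with the context topics.

    Ties are broken by an optional popularity score (default 0.0).
    """
    popularity = popularity or {}

    def key(name):
        overlap = len(candidates[name] & context_topics)
        return (overlap, popularity.get(name, 0.0))

    return max(candidates, key=key)

tweet_topics = {"Politics", "People", "Movies", "Entertainment"}
print(disambiguate(CANDIDATES, tweet_topics))
```

When no meaning overlaps with the context, this sketch falls back to popularity alone, which loosely mirrors the ordering of signals listed on the slide.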

How: Finalize

- Step 9: Rescore, using a logistic regression model on all the features
- Step 10: Re-tag, using the latest scores and only the picked meanings
- Step 11: Editorial rules, written in a regular-expression-like language that lets analysts pick/block mentions
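Rescoring with logistic regression means combining the mention's features into a weighted sum and squashing it to a score between 0 and 1. This is a minimal sketch, assuming three made-up features; the feature names, `WEIGHTS`, and `BIAS` are hypothetical, since a real model would learn its weights from labeled data.

```python
import math

# Hypothetical feature weights and bias; real values would be learned.
WEIGHTS = {"context_similarity": 2.0, "popularity": 1.0, "is_proper_noun": 0.5}
BIAS = -1.5

def rescore(features, weights=WEIGHTS, bias=BIAS):
    """Return the logistic-regression score (0..1) for one mention."""
    z = bias + sum(weights[name] * value for name, value in features.items())
    return 1.0 / (1.0 + math.exp(-z))

strong = rescore({"context_similarity": 0.9, "popularity": 0.8, "is_proper_noun": 1.0})
weak = rescore({"context_similarity": 0.1, "popularity": 0.2, "is_proper_noun": 0.0})
print(round(strong, 3), round(weak, 3))
```

With these assumed weights, a mention that is in context, popular, and a proper noun scores above 0.5, while an out-of-context common word scores below it.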

Does It Work? Evaluation of Entity Extraction

For 500 English tweets, we hand-curated a list of mentions. For 99 of those, we built a comprehensive list of tags.

Entity extraction:

- Works well for people, organizations, and locations
- Works great for unique names
- Works badly for media: albums, songs

Generic problem:

- Too many movies, books, albums, and songs have generic names ("Inception", "It's Friday", etc.)
- Even when popular, they are often used in conversation
- Very hard to disambiguate
- Very hard to find which ones are generic

Does It Work? Evaluation of Tagging

Tagging/classification:

- Works well for Travel and Sports
- Bad for Products and Social Sciences

N lineages problem:

- All mentions have multiple lineages in the KB
- Usually, one IsA lineage goes to People or Product
- A ContainedIn lineage goes to the topic, like Social Science
- Detecting which is primary is a hard problem
- Is Camera in Photography or Electronics? Is War in History or Politics? How far do we go?

Comparison with Existing Systems

The first such comparison effort that we know of.

- OpenCalais: industrial entity extraction system.
- StanNER-3 (from Stanford): a 3-class (Person, Organization, Location) named entity recognizer. The system uses a CRF-based model trained on a mixture of CoNLL, MUC, and ACE named entity corpora.
- StanNER-3-cl (from Stanford): the caseless version of StanNER-3, which means it ignores capitalization in text.
- StanNER-4 (from Stanford): a 4-class (Person, Organization, Location, Misc) named entity recognizer for English text. The system uses a CRF-based model trained on the CoNLL corpora.

For People, Organization, Location

Details are in the paper. We are far better in almost all respects:

- Overall: 85% precision vs. 78% for the best of the other systems
- Overall: 68% recall vs. 40% for StanNER-3 and 28% for OpenCalais
- Significantly better on organizations

Why? Bigger knowledge base

- The larger knowledge base allows more comprehensive disambiguation. Is "Emilie Sloan" referring to a person or an organization?

Why? Common interjections

- "LOL", "ROFL", and "Haha" are interpreted as organizations by other systems
- Acronyms are misinterpreted

Vs. OpenCalais

- Recall is the major difference: OpenCalais recognizes a significantly smaller set of entities
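For reference, precision and recall over a set of extracted mentions can be computed as below. This is a generic sketch of the standard definitions, not the evaluation code used for the reported numbers; the example mentions are made up.

```python
def precision_recall(predicted, gold):
    """Return (precision, recall) of predicted mentions against a gold list."""
    predicted, gold = set(predicted), set(gold)
    true_positives = len(predicted & gold)
    precision = true_positives / len(predicted) if predicted else 0.0
    recall = true_positives / len(gold) if gold else 0.0
    return precision, recall

# Made-up example: "LOL" is a false positive, two gold mentions are missed.
predicted = ["Obama", "Hawaii", "LOL"]
gold = ["Obama", "Hawaii", "Immigration", "Giants"]
print(precision_recall(predicted, gold))
```

In this example, an interjection wrongly extracted as an entity lowers precision, while mentions missing from the output lower recall.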