Geometric Discrepancy Theory: Online Vector Balancing & Interval Balancing Study

Study covers online geometric discrepancy theory with applications in fair division, focusing on vector balancing, interval balancing, and Tusnady's problem. Concepts explored include offline vs online approaches, interval balancing techniques, and linear algebraic views.

Download Presentation

Please find below an Image/Link to download the presentation.

The content on the website is provided AS IS for your information and personal use only. It may not be sold, licensed, or shared on other websites without obtaining consent from the author.If you encounter any issues during the download, it is possible that the publisher has removed the file from their server.

You are allowed to download the files provided on this website for personal or commercial use, subject to the condition that they are used lawfully. All files are the property of their respective owners.

The content on the website is provided AS IS for your information and personal use only. It may not be sold, licensed, or shared on other websites without obtaining consent from the author.

E N D

Presentation Transcript

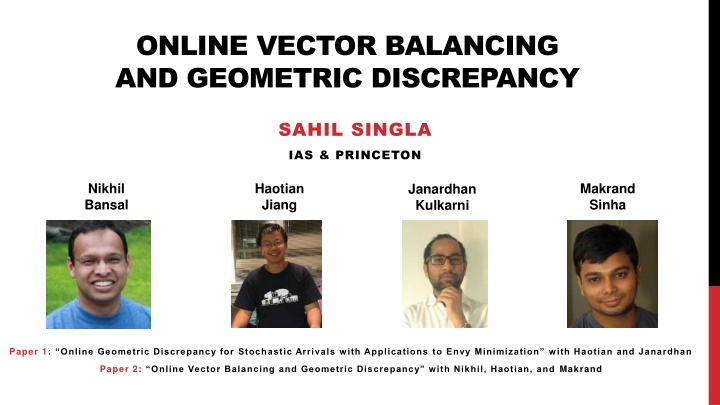

ONLINE VECTOR BALANCING AND GEOMETRIC DISCREPANCY SAHIL SINGLA IAS & PRINCETON Nikhil Bansal Haotian Jiang Makrand Sinha Janardhan Kulkarni Paper 1: Online Geometric Discrepancy for Stochastic Arrivals with Applications to Envy Minimization with Haotian and Janardhan Paper 2: Online Vector Balancing and Geometric Discrepancy with Nikhil, Haotian, and Makrand

INTERVAL BALANCING Offline Interval Balancing: x1 x3 x2 + Given ? points on a line, color t {1, 1} Every sub-interval nearly balanced, i.e., minimize + ? ? ? ? max I=[a,b] t Interval ? Just alternate xt I Online Interval Balancing: T points arrive uniformly in [0,1] Immediately color t {1, 1} Every sub-interval nearly balanced Hopeless for adversarial arrival: ( T) lower bounds Cannot change Random coloring gives ( T) Can we get O(1) or polylog(T)? Applications in fair division OTHER GEOMETRIC PROBLEMS? 2

TUSNADYS PROBLEM ? x1 Offline Tusnady s Problem: x2 Given T points in 0,1 [0,1], color t {1, 1} Every axis-parallel rectangle balanced, i.e., minimize x4 x3 max I1 I2 t ? xt I1 I2 ? Online Tusnady s Problem: Offline is already tricky: (logT)Beck 81 O(log1.5T)Nikolov 17 T points arrive uniformly in 0,1 [0,1] Immediately color t {1, 1} Random coloring gives ( T) Can we get O(1) or polylog(T)? LINEAR ALGEBRAIC VIEW: VECTOR BALANCING 3

OFFLINE VECTOR BALANCING Offline Vector Balancing: Given ? dimens vectors v1,v2, ,vT with vt 1 Color them t {1, 1} Every prefix-sum nearly balanced 0 max t v = max dt t t Observations: dt Easy for 1-dim: 0.7, 0.9, -0.8, 0.7, can ensure t v 1 Random coloring: ??/? log1/2n Std. deviation per coordinate Chernoff + union bd over n coordinates 4

MOTIVATION AND BACKGROUND Offline Vector Balancing: Variants: Some norm-1 to norm-2 Multiple colors Find an order Given v1, ,vT with vt 1, color to minimize max t Applications: dt t v = max t Fair-division / Sparsification / Rounding / RCTs Basic problem in discrepancy theory Vectors = items Coordinates = values Captures: Spencer for {0,1} vectors Komlos for 2 unit vectors Steinitz Chobanyan 94 What s known: Spencer 77 showed exp n Barany-Grinberg 81 showed 2n 1 Banaszczyk 12, Bansal-Garg 17 showed O( n log T) Big Conjecture: O( n) WHAT CAN WE DO ONLINE? Items/subjects come one-by-one 0 5

ONLINE VECTOR BALANCING Ideally want poly n log T vt What about Adversarial Arrivals? dt 1 vt has unit 2-norm & is orthogonal to discrep vector dt 1 2 2-norm becomes ( T) 2-norm increases by 1 each time step <t v Hope: Not always orthogonal Stochastic Arrivals: T vectors drawn i.i.d. from distribution ?over 1,1n Immediately color t {1, 1} Every prefix-sum nearly balanced max t CAN WE GET ???? ? ??? ? ? t v = max dt t Why does it capture Interval Balancing? 6

WHY A GENERALIZATION? Discretize using Dyadic Intervals Embed on a complete binary tree of height logT All sub-intervals corresponding to nodes Suffices to bound dyadic intervals ? ? Union of O(logT) dyadic intervals + Two leaf intervals Solve Vector Balancing #dim n = (T): Coordinate for each dyadic interval Sparsity ? = ???? Note: Cannot lose poly n . Can we get poly(slogT)? 7

OUR RESULTS THEOREM 1: Online Vector Balancing for i.i.d. arrivals: ATMOST?(????? ?) ATLEAST?( ? + ????/? ? ?) Remark: For independent +1, 1 coordinates, already by Bansal-Spencer 19 THEOREM 2: Online d-dim Tusnady s Problem for uniform arrivals: ATMOST?(?????+? ?) ATLEAST?(????/? ?) Corollary:O(log3T ) for interval discrepancy 8

OUTLINE INTRODUCTION: VECTOR BALANCINGAND GEOMETRIC DISCREPANCY BANSAL-SPENCER: VECTOR BALANCINGFOR INDEPENDENT +1, 1COORDINATES VECTOR BALANCINGWITH DEPENDENCIES: CHANGETHE BASIS INTERVAL DISCREPANCY: ATTEMPT 1 AND ATTEMPT 2 TUSNADY S PROBLEMVIA HAAR BASIS 9

ONLINE VECTOR BALANCING VIA A POTENTIAL Problem with Uniform Coordinates Hope: Not always orthogonal Vector vt drawn uniformly in { 1,1}n Immediately color t {1, 1} Every prefix-sum: max dt is discrepancy vector t v = max dt t t Greedy Algorithm on a Potential (t) How bad is ??? t icosh( dt(i)) cosh x := (e?+e ?)/2 = n 1/2 Proof Idea: Note 0 = n. Suffices: t,E t Show t that w.h.p. t poly(nT) cosh dt t poly(nT), so dt =1 poly(n) O(lognT) t - t 1 10

BANSAL-SPENCER FOR INDEP COORDINATES t = icosh dt 1i + tvti Want to show E t poly(n): 2 t t isinh dt 1i vti + ( t)2 icosh dt 1i vti 2+ poly(n) t iaiXi+ 2 iaiXi iaiXi+ 2 iaiXi (helping) Linear and Quadratic Let ai= sinh dt 1i Xi= vti 2+poly(n) cosh(x) = (e?+e ?)/2 sinh x = (e? e ?)/2 |cosh x sinh x| 1 (hurting) Note: If E Xi = 0, then E iaiXi = 0. 2? ANTI-CONCENTRATION: For any (a1 ,an), do we have E iaiXi E i|ai|Xi Uniform 1 Coordinates: So dt =1 O(lognT) = O( n log nT) 21/2 iai/ n Cauchy-Schwarz E iaiXi Khintchine iai 11

TAKEAWAYS t icosh( dt(i)) Bounding potential implies dt =1 O(lognT). This reduces to: 2? ANTI-CONCENTRATION: For any (a1 ,an), do we have E iaiXi E i|ai|Xi ai= sinh dt 1i Khintchine crucially uses independence of Xi= vti How to handle dependencies, e.g., interval balancing? ? Why anti-concent fails with dependencies? Suppose ? = (1,1,1, ) Random subset of size n/2 is +1 and others 1 All Xi mean zero, but Linear= 0 n = Quadratic v New way to measure badness of ??? 12

OUTLINE INTRODUCTION: VECTOR BALANCINGAND GEOMETRIC DISCREPANCY BANSAL-SPENCER: VECTOR BALANCINGFOR INDEPENDENT +1, 1 COORDINATES VECTOR BALANCINGWITH DEPENDENCIES: CHANGETHE BASIS INTERVAL DISCREPANCY: ATTEMPT 1 AND ATTEMPT 2 TUSNADY S PROBLEMVIA HAAR BASIS 13

VECTOR BALANCING WITH DEPENDENCIES Recall setting T vectors drawn i.i.d. from distribution ?over 1,1n Immediately color t {1, 1} Every prefix-sum nearly balanced max WLOG assume symmetric: v & v equally likely t v t Change the Basis to get Uncorrelation Xi uncorr if i j, E XiXj = 0 T Work in eigen basis of Covariance Matrix Ev~?vvT= k kukuk New i-th coordinate of v is Xi= v ui Now E XiXj = E ui T)uj= 0 TvvTuj = ui T( k kukuk Recall, Bansal-Spencer 19 t icosh( dt(i)) Greedy using Potential t icosh ???? ANTI-CONCENTRATION? 14

ANTI-CONCENTRATION FOR UNCORRELATED R.V.S LEMMA : For any a1, ,an and random variables X1, ,Xn that satisfy (1) Uncorr i j, E XiXj = 0 (2) Magnitude at most 1 (3) Sparsity ?, we have aiXi ? 2 E ? E |ai|Xi i i Note: Khintchine + Cauchy-Schwarz gives 1/ s for indep {1, 1} Tightness (for ? = ?): Let a = (1,1, ) A random column of Hadamard with Xi = ith coordinate Since rows orthogonal, i j, E XiXj = 0 E iaiXi =1 2= n n n = 1 and E iaiXi 15

WRAPPING UP: VECTOR BALANCING THEOREM : ONLINE Vector Balancing has discrepancy ?(?? ??? ??) Work in eigen basis Uncorrelation implies anti-concent with 1= So discrep in new basis 1 n s O lognT = O( n n log nT) Going back to the original basis Discrepancy-vector dt has 2 norm O(n2 log nT) Since 2 norm preserved, and upper bounds norm WHY GEOMETRIC DISCREPANCYIS TRICKY? 1. Sparsity might be lost in eigen basis 2. Can t lose ? going back as n = (T) 16

OUTLINE INTRODUCTION: VECTOR BALANCINGAND GEOMETRIC DISCREPANCY BANSAL-SPENCER: VECTOR BALANCINGFOR INDEPENDENT +1, 1 COORDINATES VECTOR BALANCINGWITH DEPENDENCIES: CHANGETHE BASIS INTERVAL DISCREPANCY: ATTEMPT 1 AND ATTEMPT 2 TUSNADY S PROBLEMVIA HAAR BASIS 17

ONLINE INTERVAL DISCREPANCY x1 x3 Recall setting x2 T points arrive uniformly in unit interval [0,1] Immediately color t {1, 1} ? ? Interval I + Hopeless for adversarial arrival + + (T) discrepancy for adaptive adversary ( T) discrepancy for oblivious adversary ? ? Next arrival Why na ve Greedy Algorithms don t seem to work: Tension between local and global intervals 18

ATTEMPT 1 ? Greedy with a natural potential t I is dyadiccosh dtI Ideal Lemma: For a random root-leaf path ? : ? ? Linear vs Quadratic E i P sinh d i E i P sinh d i Xi= ?[xt in interval i] Why it Fails and Works? Linear is exponentially smaller (in height) than Quadratic Cannot make height logT Can still make height O(loglog T) Fractal Structure HOW CAN WE DO BETTER? THM [Jiang-Kulkarni-S]: Gives sub-poly discrepancy: ??/?????? ? 19

ATTEMPT 2 Compare to cosh dtI ? A Different Potential ?????? ????? root+ I is dyadiccosh dtIleft dtIright t cosh dt ? ? Why Bounding this Potential Suffices? ? dleft dright & dleft+ dright implies both ?????? ????? Why does it help? Get a Martingale: Equally likely to be in left/right subtree Burkholder-Davis-Gundy instead of Khintchine Now Cauchy-Schwarz, dt 1 TUSNADY S PROBLEM? WHAT S REALLY HAPPENING? O logT = polylog(T) 20

OUTLINE INTRODUCTION: VECTOR BALANCINGAND GEOMETRIC DISCREPANCY BANSAL-SPENCER: VECTOR BALANCINGFOR INDEPENDENT +1, 1 COORDINATES VECTOR BALANCINGWITH DEPENDENCIES: CHANGETHE BASIS INTERVAL DISCREPANCY: ATTEMPT 1 AND ATTEMPT 2 TUSNADY S PROBLEMVIA HAAR BASIS 21

HAAR BASIS VIEW ? root+ I is dyadiccosh dtIleft dtIright t cosh dt ?????? ????? Forms n dimensional orthogonal Haar Basis ? ? Uncorrelation i j, E XiXj = 0 ? Retainssparsity ? = ??? ? ?????? ????? Don t lose while going back to original basis dleft dright & dleft+ dright implies both Use ???? potential in Haar basis & anti-concentration of uncorrelated r.v.s Don t need Burkholder-Davis-Gundy 22

TUSNADYS PROBLEM THEOREM : ONLINE d-DIM TUSNADY SDISCREPIS?(?????+? ?) Tensor product of 1-d Haar-basis Still gives uncorrelation Preserves sparsity Don t lose while going back to the original basis 23

CONCLUSION Online Stochastic Arrivals: poly(nlogT) for vector balancing poly(logdT) for d-dim Tusnady s problem Techniques: Use cosh( ) potential. Show Linear Quadratic Change basis to get Uncorrelation, which implies Anti-Concentration Eigen basis and Haar basis Open Problems: Extend vector balancing to oblivious adversary? (Easier Prophet model) Independent but non-identical distributions? QUESTIONS? 24

ANTI-CONCENTRATION FOR UNCORRELATED R.V.S LEMMA : For any a1, ,an and random variables X1, ,Xn that satisfy (1) Uncorr i j, E XiXj = 0 (2) Magnitude at most 1 (3) Sparsity ?, we have aiXi ? 2 E ? E |ai|Xi i i Proof (for ? = ?): Khintchine + Cauchy-Schwarz gives 1/ s for indep {1, 1} E iaiXi E iaiXi |Xk| = E |akXk E sign ak = E[ akXk TIGHTNESS: A random row of Hadamard matrix Xi is the ith coordinate of this row 2+ i kaiXiXk| akXk 2] 2+ i kaiXiXk Now sum over all k. 26

FRACTAL STRUCTURE TREE FRACTAL 27

ATTEMPT 3: HAAR BASIS root+ t cosh dt I is dyadiccosh dtIleft dtIright Orthogonal Haar Basis: Uncorrelation x +1 if 0 x < 0.5 1 if 0.5 x 1 0,0x = 1 j,kx (2j 1x k) for j 1 & 0 k 2j 1 Use ???? potential in Haar Basis: Retains logTsparsity Don t lose while going back to original basis 28

EXTENSIONS AND LOWER BOUNDS d-Dimensional Interval Discrepancy: ? x1 T points uniformly in 0,1 [0,1] Interval Discrepancy after projection on each axis Offline problem same as ?-permutations problem x2 x4 x3 Use Haar Basis for each Axis: At most O(d log3 T) At least ( d log (T/d)) ? ? Main Idea for Lower Bounds: Use uncorrelation to imply potential somewhat rises Thus, discrepancy is at least something 29