GPU Architecture Research Beyond Assigned Readings

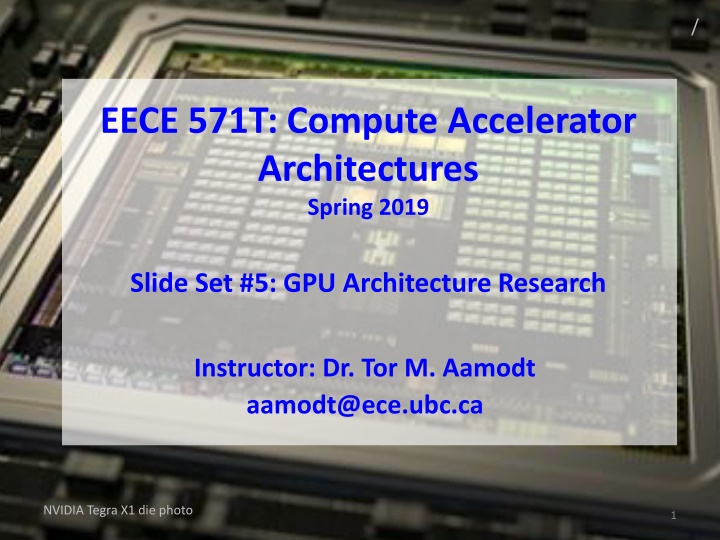

Dive into advanced GPU architecture research topics including mitigating SIMT control divergence, performance vs. warp size in applications, dynamic warp formation, and hardware implementations. Explore how innovations such as virtualized deep neural networks and multi-chip module GPUs are transforming the landscape of scalable and memory-efficient neural network design. Gain insights from notable studies such as vDNN (MICRO 2016) and MCM-GPU (ISCA 2017) while exploring the intricacies of NVIDIA Tegra X1 die photos and the potential for significant throughput loss in control flow scenarios. Join the discussion on improving SIMD efficiency, warp formation strategies, and dynamic thread packing approaches to enhance GPU performance in modern computing workflows.

Download Presentation

Please find below an Image/Link to download the presentation.

The content on the website is provided AS IS for your information and personal use only. It may not be sold, licensed, or shared on other websites without obtaining consent from the author. If you encounter any issues during the download, it is possible that the publisher has removed the file from their server.

You are allowed to download the files provided on this website for personal or commercial use, subject to the condition that they are used lawfully. All files are the property of their respective owners.

The content on the website is provided AS IS for your information and personal use only. It may not be sold, licensed, or shared on other websites without obtaining consent from the author.

E N D

Presentation Transcript

/ EECE 571T: Compute Accelerator Architectures Spring 2019 Slide Set #5: GPU Architecture Research Instructor: Dr. Tor M. Aamodt aamodt@ece.ubc.ca NVIDIA Tegra X1 die photo 1

Learning Objectives After this lecture you should be familiar with some research on GPU architecture beyond that covered in the assigned readings. 2

Reading (for quiz on Feb 28) Rhu et al., vDNN: Virtualized Deep Neural Networks for Scalable, Memory-Efficient Neural Network Design , MICRO 2016. Arunkumar et al., MCM-GPU: Multi-chip module GPUs for continued performance scalability, ISCA 2017. 3

Research Direction 1: Mitigating SIMT Control Divergence 4

Recall: SIMT Hardware Stack Stack A A/1111 Reconv. PC Next PC Active Mask TOS TOS TOS TOS TOS TOS - E E - - E E E - - - - G A D C E E D D E B E E 1111 1111 0110 1001 1001 1111 0110 0110 1111 1111 1111 1111 B B/1111 TOS C C/1001 D D/0110 F Common PC Thread Warp E E/1111 Thread 1 Thread 2 Thread 3 Thread 4 G G/1111 A B C D E G A Time Potential for significant loss of throughput when control flow diverged! 5

Performance vs. Warp Size 165 Applications 1.8 1.6 Warp Size 4 IPC normalized to warp size 32 1.4 1.2 1 0.8 0.6 0.4 0.2 0 Application Divergent Applications Convergent Applications Warp-Size Insensitive Applications Rogers et al., A Variable Warp-Size Architecture, ISCA 2015 6

Dynamic Warp Formation (Fung MICRO 07) Warp 0 Warp 1 Warp 2 A 1 2 3 4 Reissue/Memory Latency A 5 6 7 8 A 9 10 11 12 B 1 2 3 4 B 5 6 7 8 SIMD Efficiency C 5 -- 11 12 C 88% B 9 10 11 12 C 1 2 -- -- 1 2 7 8 Pack C 5 -- 7 8 Time C -- -- 11 12 D -- -- 3 4 How to pick threads to pack into warps? D -- 6 -- -- D 9 10 -- -- E 1 2 3 4 E 5 6 7 8 E 9 10 11 12 7

Dynamic Warp Formation: Hardware Implementation A: BEQ R2, B C: Thread Scheduler PC-Warp LUT Warp Pool PC PC A B Issue Logic Warp Update Register T 5 7 8 3 2 0 2 B B 0110 0010 A B B C C 1 2 3 4 2 3 5 2 3 8 PC PC C C OCC OCC 1001 1101 IDX IDX 1 1 TID x N TID x N 5 6 7 8 1 6 Prio Prio REQ 1011 0110 B B TID x N Warp Update Register NT 6 1 4 PC A H 4 PC TID x N Prio 7 REQ 0100 1001 C C TID x N PC B H Warp Allocator X Y Z X Y X Y X Y Z X Y Z (TID, Reg#) ALU 1 RF 1 5 6 7 1 2 3 4 8 1 2 3 4 8 5 6 7 1 2 3 4 8 8 5 6 7 3 5 2 5 5 2 1 2 6 7 3 5 5 2 1 2 6 7 3 Writeback (TID, Reg#) Commit/ ALU 2 RF 2 I-Cache 3 4 8 8 Decode 3 4 8 8 (TID, Reg#) ALU 3 RF 3 (TID, Reg#) ALU 4 RF 4 No Lane Conflict Wilson Fung, Ivan Sham, George Yuan, Tor Aamodt Dynamic Warp Formation and Scheduling for Efficient GPU Control Flow 8

DWF Pathologies: Starvation B: if (K > 10) C: K = 10; else D: K = 0; E: B = C[tid.x] + K; Majority Scheduling Best Performing Prioritize largest group of threads with same PC Starvation LOWER SIMD Efficiency! C C E E 1 2 7 8 5 -- 11 12 1 2 7 8 5 -- 11 12 D D E E E D E E 9 6 3 4 -- 10 -- -- 1 2 3 4 5 6 7 8 9 10 11 12 -- 10 -- -- 9 6 3 4 -- 10 -- -- 1000s cycles D 9 6 3 4 Other Warp Scheduler? Tricky: Variable Memory Latency Time Wilson Fung, Tor Aamodt Thread Block Compaction 9

DWF Pathologies: Extra Uncoalesced Accesses Coalesced Memory Access = Memory SIMD 1st Order CUDA Programmer Optimization Not preserved by DWF E: B = C[tid.x] + K; Memory #Acc = 3 No DWF 0x100 0x140 0x180 E E E 1 2 3 4 5 6 7 8 9 10 11 12 L1 Cache Absorbs Redundant Memory Traffic Memory #Acc =9 With DWF 0x100 0x140 0x180 E E E 1 2 7 12 9 6 3 8 5 10 11 4 L1$ Port Conflict Wilson Fung, Tor Aamodt Thread Block Compaction 10

DWF Pathologies: Implicit Warp Sync. Some CUDA applications depend on the lockstep execution of static warps Warp 0 Warp 1 Warp 2 Thread 0 ... 31 Thread 32 ... 63 Thread 64 ... 95 E.g. Task Queue in Ray Tracing int wid = tid.x / 32; if (tid.x % 32 == 0) { sharedTaskID[wid] = atomicAdd(g_TaskID, 32); } my_TaskID = sharedTaskID[wid] + tid.x % 32; ProcessTask(my_TaskID); Sync. Implicit Warp Wilson Fung, Tor Aamodt Thread Block Compaction 11

Observation Compute kernels usually contain divergent and non-divergent (coherent) code segments Coalesced memory access usually in coherent code segments DWF no benefit there Static Warp Coherent Divergence Dynamic Warp Divergent Recvg Pt. Reset Warps Coales. LD/ST Static Warp Coherent Wilson Fung, Tor Aamodt Thread Block Compaction 12

Thread Block Compaction Run a thread block like a warp Whole block move between coherent/divergent code Block-wide stack to track exec. paths reconvg. Barrier @ Branch/reconverge pt. All avail. threads arrive at branch Insensitive to warp scheduling Warp compaction Regrouping with all avail. threads If no divergence, gives static warp arrangement Implicit Warp Sync. Starvation Extra Uncoalesced Memory Access Wilson Fung, Tor Aamodt Thread Block Compaction 13

Thread Block Compaction PC RPC A D C Active Threads A A 1 2 3 4 1 2 3 4 E - E E - 1 2 3 4 5 6 7 8 9 10 11 12 -- -- -- -- -- -- -- -- 1 2 3 4 5 6 7 8 9 10 11 12 -- -- 3 4 -- 6 -- -- 9 10 -- -- 1 2 -- -- 5 -- 7 8 -- -- 11 12 -- -- -- -- -- -- -- -- A A A A 5 6 7 8 9 10 11 12 5 6 7 8 9 10 11 12 -- -- -- -- -- -- -- -- C C C 1 2 7 8 5 -- 11 12 1 2 -- -- A: K = A[tid.x]; C C 5 -- 7 8 -- -- 11 12 B: if (K > 10) D D 9 6 3 4 -- 10 -- -- C: K = 10; D -- -- 3 4 D D -- 6 -- -- 9 10 -- -- else E 1 2 3 4 E E 5 6 7 8 9 10 11 12 D: K = 0; E 1 2 7 8 E: B = C[tid.x] + K; E E 5 6 7 8 9 10 11 12 Time Wilson Fung, Tor Aamodt Thread Block Compaction 14

Thread Compactor Convert activemask from block-wide stack to thread IDs in warp buffer Array of Priority-Encoder C E 1 2 -- -- 5 -- 7 8 -- -- 11 12 1 5 -- 2 -- -- -- 7 11 -- 8 12 P-Enc P-Enc P-Enc P-Enc 1 5 2 -- 7 11 8 12 Warp Buffer C C 1 2 7 8 5 -- 11 12 Wilson Fung, Tor Aamodt Thread Block Compaction 15

Experimental Results 2 Benchmark Groups: COHE = Non-Divergent CUDA applications DIVG = Divergent CUDA applications Serious Slowdown from pathologies No Penalty for COHE 22% Speedup on DIVG COHE DIVG DWF TBC 0.6 0.7 0.8 0.9 1 1.1 1.2 1.3 IPC Relative to Baseline Per-Warp Stack Wilson Fung, Tor Aamodt Thread Block Compaction 16

Recent work on warp divergence Intel [MICRO 2011]: Thread Frontiers early reconvergence for unstructured control flow. UT-Austin/NVIDIA [MICRO 2011]: Large Warps similar to TBC except decouple size of thread stack from thread block size. NVIDIA [ISCA 2012]: Simultaneous branch and warp interweaving. Enable SIMD to execute two paths at once. Intel [ISCA 2013]: Intra-warp compaction extends Xeon Phi uarch to enable compaction. NVIDIA: Temporal SIMT [described briefly in IEEE Micro article and in more detail in CGO 2013 paper] NVIDIA [ISCA 2015]: Variable Warp-Size Architecture merge small warps (4 threads) into gangs . 17

+ Thread Frontiers [Diamos et al., MICRO 2011] 18

Temporal SIMT Spatial SIMT (current GPUs) Pure Temporal SIMT 32-wide datapath 1-wide thread 0 ld ld ld ld ld ld ld ld ld ld ld ld ld ld ld ld ld ld ld ld ld ld ld ld ld ld ld ld ld ld ld ld thread (threads) 31 1 cyc 1 cyc time time 0 1 2 3 4 5 6 7 8 9 ld ld ld ld ld ld ld ld ld ld ld 10 ld ld ld ld ld ld ld ld ld ld ld ld ld ld ld ld ld ld ld ld ld ld ld ld ld ld ld ld ld ld ld ld mlmlmlml mlmlmlml mlmlmlml mlmlmlml mlmlmlml mlmlmlml mlmlmlml mlmlmlml adadadad adadadad adadadad adadadad adadadad adadadad adadadad adadadad st st st st st st st st st st st st st st st st st st st st st st st st st st st st st st st st 1 warp instruction = 32 threads [slide courtesy of Bill Dally]

Temporal SIMT Optimizations Control divergence hybrid MIMD/SIMT 32-wide (41%) 4-wide (65%) 1-wide (100%) Scalarization Factor common instructions from multiple threads Execute once place results in common registers [See: SIMT Affine Value Structure (ISCA 2013)] [slide courtesy of Bill Dally]

Scalar Instructions in SIMT Lanes Scalar instruction spanning warp Scalar register visible to all threads Temporal execution of Warp [slide courtesy of Bill Dally] Multiple lanes/warps Y. Lee, CGO 2013

Variable Warp-Size Architecture Most recent work by NVIDIA [ISCA 2015] Split the SM datapath into narrow slices. Extensively studied 4-thread slices Gang slice execution to gain efficiencies of wider warp. Slices share an L1 I-Cache and Memory Unit Slices can execute independently Frontend Frontend L1 I-Cache L1 I-Cache Slice Slice Datapath Slice Slice Datapath Warp Datapath ... Ganging Unit Warp Control Logic Slice Front End Slice Front End 32-wide 4-wide 4-wide Memory Unit Memory Unit Tim Rogers A Variable Warp-Size Architecture 22

Divergent Application Performance I-VWS: Break on E-VWS: Break + Warp Size 4 CF Only Reform WS 32 WS 4 I-VWS E-VWS 1.8 IPC normalized to warp size 32 1.6 1.4 1.2 1 0.8 0.6 0.4 0.2 0 CoMD GamePhysics ObjClassifier Raytracing HMEAN-DIV Lighting Divergent Applications Tim Rogers A Variable Warp-Size Architecture 23

Convergent Application Performance I-VWS: Break on E-VWS: Break + Warp Size 4 CF Only Reform 1.2 WS 32 WS 4 I-VWS E-VWS IPC normalized to warp size 32 1 0.8 0.6 0.4 0.2 0 Game 1 Game 2 FeatureDetect MatrixMultiply HMEAN-CON Radix Sort Warp-Size Insensitive Applications Unaffected Convergent Applications Tim Rogers A Variable Warp-Size Architecture 24

Research Direction 2: Mitigating High GPGPU Memory Bandwidth Demands 25

Improve upon CCWS? CCWS detects bad scheduling decisions and avoids them in future. Would be better if we could think ahead / be proactive instead of being reactive 26

Observations [Rogers et al., MICRO 2013] Memory divergence in static instructions is predictable Main Memory Divergence load Warp 0 Warp 1 Both Used To Create Cache Footprint Prediction Data touched by divergent loads dependent on active mask Main Memory Main Memory 4 accesses 2 accesses Divergence Divergence Warp 1 0 Warp 1 1 1 0 1 1 27

Footprint Prediction 1. Detect loops with locality Some loops have locality Some don t Limit multithreading here 2. Classify loads in the loop Loop with locality while( ) { } Diverged load 1 load 2 Not Diverged 3. Compute footprint from active mask Loop with locality while( ) { } Warp 0 1111 11 4 accesses Diverged load 1 load 2 Warp 0 s Footprint = 5 cache lines + Not Diverged 1 access 28

DAWS Operation Example Example Compressed Sparse Row Kernel Cache A[32] Cache A[0] Cache A[0] Hit Hit x30 int C[]={0,64,96,128,160,160,192,224,256}; void sum_row_csr(float* A, ) { float sum = 0; int i =C[tid]; Hit A[160] A[64] A[64] Hit x30 Hit A[192] A[96] A[96] Hit x30 A[224] Hit Hit x30 A[128] A[128] Loop Stop Go 1st Iter. Divergent Branch while(i < C[tid+1]) { Warp1 0 1 1 1 sum += A[ i ]; Memory Divergence Warp0 Warp0 1 1 1 1 1 0 0 0 ++i; 2nd Iter. Go Go 33rd Iter. Go Time0 Time1 Time2 } Cache Footprint Stop Early warps profile Warp1 1 Diverged Load Detected loop for later warps Warp 0 has branch divergence Locality Detected Footprint = 3X1 4 4 Want to capture spatial locality Both warps capture spatial locality together 4 Active threads Footprint decreased 4 No locality detected = no footprint Warp1 No Warp0 Footprint = 4X1 Footprint 29 Warp0

Sparse MM Case Study Results Performance (normalized to optimized version) Within 4% of optimized with no programmer input 4.9 2 Divergent Code Execution time 1.5 1 0.5 0 CCWS Other Schedulers DAWS 30

Coordinated criticality-Aware Warp Acceleration (CAWA) [Lee et al., ISCA 2015] Some warps execute longer than others due to lack of uniformity in underlying workload. Give these warps more space in cache and more scheduling slots. Estimate critical path by observing amount of branch divergence and memory stalls. Also, predict if line inserted in line will be used by a warp that is critical using modified version of SHiP cache replacement algorithm. 31

Other Memory System Performance Considerations TLB Design for GPUs. Current GPUs have translation look aside buffers (makes managing multiple graphics application surfaces easier; does not support paging) How does large number of threads impact TLB design? E.g., Power et al., Supporting x86-64 Address Translation for 100s of GPU Lanes, HPCA 2014. Importance of multithreaded page table walker + page walk cache. 32

Research Direction 3: Easier Programming with Synchronization 33

Synchronization Locks are not encouraged in current GPGPU programming manuals. Interaction with SIMT stack can easily cause deadlocks: while( atomicCAS(&lock[a[tid]],0,1) != 0 ) ; // deadlock here if a[i] = a[j] for any i,j = tid in warp // critical section goes here atomicExch (&lock[a[tid]], 0) ; 34

Correct way to write critical section for GPGPU: done = false; while( !done ) { if( atomicCAS (&lock[a[tid]], 0 , 1 )==0 ) { // critical section goes here atomicExch(&lock[a[tid]], 0 ) ; } } Most current GPGPU programs use barriers within thread blocks and/or lock-free data structures. This leads to the following picture 35

Lifetime of GPU Application Development Functionality Performance E.g. N-Body with 5M bodies CUDA SDK: O(n2) 1640 s (barrier) Barnes Hut: O(nLogn) 5.2 s (locks) Time Fine-Grained Locking/Lock-Free Transactional Memory ? Time Time Wilson Fung, Inderpeet Singh, Andrew Brownsword, Tor Aamodt 36

Transactional Memory Programmer specifies atomic code blocks called transactions [Herlihy 93] Lock Version: Lock(X[a]); Lock(X[b]); Lock(X[c]); X[c] = X[a]+X[b]; Unlock(X[c]); Unlock(X[b]); Unlock(X[a]); TM Version: atomic { X[c] = X[a]+X[b]; } Potential Deadlock! 37

Transactional Memory Programmers View: TX1 TX2 Time Time OR TX2 TX1 Non-conflicting transactions may run in parallel Memory Conflicting transactions automatically serialized Memory A B C D A B C D TX1 TX2 TX1 TX2 Commit Abort TX2 Commit Commit Commit 38

Are TM and GPUs Incompatible? GPU uarch very different from multicore CPU KILO TM[MICRO 11, IEEE Micro Top Picks] Hardware TM for GPUs Half performance of fine grained locking Chip area overhead of 0.5% 39 39 Hardware TM for GPU Architectures

Hardware TM for GPUs Challenge #1: SIMD Hardware On GPUs, scalar threads in a warp/wavefront execute in lockstep A Warp with 4 Scalar Threads ... TxBegin LD r2,[B] ADD r2,r2,2 ST r2,[A] TxCommit ...Committed T0 T1 T2 T3 T0 T1 T2 T3 Branch Divergence! Aborted T0 T1 T2 T3 40 40 Hardware TM for GPU Architectures

KILO TM Solution to Challenge #1: SIMD Hardware Transaction Abort Like a Loop Extend SIMT Stack ... TxBegin LD r2,[B] ADD r2,r2,2 ST r2,[A] TxCommit ... Abort Wilson Fung, Inderpeet Singh, Andrew Brownsword, Tor Aamodt 41 41 41 Hardware TM for GPU Architectures Hardware TM for GPU Architectures

Hardware TM for GPUs Challenge #2: Transaction Rollback GPU Core (SM) CPU Core Warp Warp Warp Warp Warp Warp Warp Warp 10s of Registers 32k Registers Register File @ TX Abort @ TX Entry Register File Checkpoint Register File 2MB Total On-Chip Storage Checkpoint? 42 42 Hardware TM for GPU Architectures

KILO TM Solution to Challenge #2: Transaction Rollback SW Register Checkpoint Most TX: Reg overwritten first appearance (idempotent) TX in Barnes Hut: Checkpoint 2 registers Overwritten TxBegin LD r2,[B] ADD r2,r2,2 ST r2,[A] TxCommit Abort 43 43 Hardware TM for GPU Architectures

Hardware TM for GPUs Challenge #3: Conflict Detection Existing HTMs use Cache Coherence Protocol Not Available on (current) GPUs No Private Data Cache per Thread Signatures? 1024-bit / Thread 3.8MB / 30k Threads 44 44 Hardware TM for GPU Architectures

Hardware TM for GPUs Challenge #4: Write Buffer GPU Core (SM) Warp Warp Warp Warp Warp Warp Warp L1 Data Cache Problem: 384 lines / 1536 threads < 1 line per thread! Fermi s L1 Data Cache (48kB) = 384 X 128B Lines 1024-1536 Threads 45 45 Hardware TM for GPU Architectures

KILO TM: Value-Based Conflict Detection A=1 A=1 Global Memory TX1 atomic {B=A+1} B=0 B=2 Private Memory Read-Log A=1 TxBegin LD r1,[A] ADD r1,r1,1 ST r1,[B] TxCommit Write-Log B=2 B=2 TX2 atomic {A=B+2} Private Memory Read-Log B=0 TxBegin LD r2,[B] ADD r2,r2,2 ST r2,[A] TxCommit Write-Log A=2 Self-Validation + Abort: Only detects existence of conflict (not identity) 46 46 Hardware TM for GPU Architectures

Parallel Validation? Data Race!?! Tx1 then Tx2: A=4,B=2 A=1 A=1 Global Memory TX1 atomic {B=A+1} B=0 B=0 Private Memory OR Read-Log A=1 Tx2 then Tx1: A=2,B=3 Write-Log B=2 B=2 TX2 atomic {A=B+2} Private Memory Read-Log B=0 Write-Log A=2 A=2 47 47 Hardware TM for GPU Architectures

Serialize Validation? TX1 V + C TX2 Stall V + C Commit Unit Global Memory Time V = Validation C = Commit Benefit #1: No Data Race Benefit #2: No Live Lock Drawback: Serializes Non-Conflicting Transactions ( collateral damage ) 48 48 Hardware TM for GPU Architectures

Solution: Speculative Validation Key Idea: Split Conflict Detection into two parts 1. Recently Committed TX in Parallel 2. Concurrently Committing TX in Commit Order Approximate V = Validation C = Commit TX1 V+C TX2 V+C TX3 Stall V+C RS RS RS Commit Unit Global Memory Time Conflict Rare Good Commit Parallelism 49 49 Hardware TM for GPU Architectures

Efficiency Concerns? 128X Speedup over CG-Locks 40% FG-Locks Performance 2X Energy Usage Scalar Transaction Management Scalar Transaction fits SIMT Model Simple Design Poor Use of SIMD Memory Subsystem Rereading every memory location Memory access takes energy 50