HDFS Overview and Roles

"Explore the Hadoop Distributed File System (HDFS) and its major roles in the cloud computing environment. Learn about its distributed, scalable, and portable nature, along with features like file replication, fault tolerance, and data processing. Dive into the world of HDFS with this informative presentation."

Presentation Transcript

HDFS Hadoop Distributed File System 100062123 100062139 101062401

Outline: Introduction; HDFS; How it works; Pros and Cons; Conclusion

Introduction to HDFS: The Hadoop Distributed File System (HDFS) is the file system for the Hadoop framework. It is written in Java and used in cloud computing to process PB-level data in a distributed computing environment. As a distributed file system it provides replication and fault tolerance, a mapping between logical objects and physical objects, file sharing via the internet, write-once-read-many access, and access restriction. Doug Cutting established the Nutch project. HDFS uses Remote Procedure Calls and a master/slave architecture. Yahoo! accomplished a 10,000-core Hadoop cluster in 2008. Related components: HDFS, Hadoop MapReduce, HBase.

HBase: NoSQL; uses several servers to store PB-level data.

HDFS: distributed, scalable, and portable; file replication (default: 3); reading efficiency.

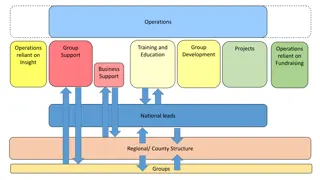

HDFS major roles: Client (user): reads and writes data from and to the file system. Name node (master): oversees and coordinates the data storage function; receives instructions from the client. Data nodes (slaves): store data and run computations; receive instructions from the name node.
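The division of labour between the three roles can be sketched in a few lines of Python. This is a toy in-memory model, not the Hadoop API: the class names, the `allocate` and `locate` methods, and the "least-loaded nodes first" placement rule are all assumptions made for illustration. Real HDFS also streams data through a pipeline of data nodes rather than writing to each replica directly.

```python
from dataclasses import dataclass, field

REPLICATION = 3  # default replication factor mentioned in the slides


@dataclass
class DataNode:
    """Slave: stores block contents, keyed by block id."""
    name: str
    blocks: dict = field(default_factory=dict)


@dataclass
class NameNode:
    """Master: keeps only metadata (which data nodes hold each block)."""
    datanodes: list
    block_map: dict = field(default_factory=dict)

    def allocate(self, block_id):
        # Hypothetical placement policy: pick the least-loaded data nodes.
        targets = sorted(self.datanodes, key=lambda d: len(d.blocks))[:REPLICATION]
        self.block_map[block_id] = [d.name for d in targets]
        return targets

    def locate(self, block_id):
        by_name = {d.name: d for d in self.datanodes}
        return [by_name[n] for n in self.block_map[block_id]]


def client_write(namenode, block_id, data):
    # The client asks the master where to write, then sends data to the slaves.
    for dn in namenode.allocate(block_id):
        dn.blocks[block_id] = data


def client_read(namenode, block_id):
    # The client asks the master for locations, then reads from one replica.
    return namenode.locate(block_id)[0].blocks[block_id]


nodes = [DataNode(f"dn{i}") for i in range(1, 5)]
nn = NameNode(nodes)
client_write(nn, "blockA", b"hello")
print(nn.block_map, client_read(nn, "blockA"))
```

Note that the name node never touches the block contents here; it only records where the replicas live, which mirrors the coordination role described in the slide.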

HDFS fault tolerance: node failure (a data node or the name node is dead); communication failure (data cannot be sent or retrieved); data corruption (data is corrupted while being sent over the network or while stored on the hard disks); write failure (the data node that is about to be written to is dead); read failure (the data node that is about to be read from is dead).

Detecting network failure: Whenever data is sent, the receiver replies with an ACK. If the ACK is not received after several retries, the sender assumes that the host is dead or the network has failed. A checksum is also sent along with the transmitted data so that corruption during transfer can be detected.
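A minimal sketch of the ACK-plus-checksum idea follows. It is not HDFS code: the retry limit of 3, the use of `zlib.crc32` as the checksum, and the "channel" functions that stand in for the network are assumptions chosen to keep the example self-contained.

```python
import zlib

MAX_RETRIES = 3  # assumed limit; the slide only says "several retries"


def make_packet(payload: bytes):
    """Attach a CRC32 checksum to the payload before sending."""
    return payload, zlib.crc32(payload)


def receive(payload: bytes, checksum: int) -> bool:
    """Receiver side: reply with an ACK (True) only if the checksum matches."""
    return zlib.crc32(payload) == checksum


def send_with_retries(payload: bytes, channel) -> bool:
    """Return True on ACK; False means the host or network is assumed dead."""
    data, checksum = make_packet(payload)
    for _ in range(MAX_RETRIES):
        ack = channel(data, checksum)  # None models a lost packet or lost ACK
        if ack:
            return True
    return False


# Three hypothetical channels standing in for the network.
def healthy_channel(data, checksum):
    return receive(data, checksum)


def dead_channel(data, checksum):
    return None  # nothing ever comes back


def corrupting_channel(data, checksum):
    return receive(b"X" + data[1:], checksum)  # first byte damaged in transit


for channel in (healthy_channel, dead_channel, corrupting_channel):
    print(channel.__name__, send_with_retries(b"block data", channel))
```

In this sketch a missing ACK and a checksum mismatch both end in the same outcome for the sender, which is the behaviour the slide describes: after the retries are exhausted, the peer is treated as failed.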

Handling write/read failure: The client writes a block in smaller data units called packets (usually 64 KB). Each data node replies with an ACK for each packet to confirm that it received the packet. If the client does not get ACKs from some nodes, it treats those nodes as dead and adjusts the pipeline to skip them. Handling a read failure is simpler: the client just reads from another node.
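The packet-and-ACK handling described here can be sketched as follows. This is a simplified model under stated assumptions: the `Node` class, `write_block`, and `read_block` are hypothetical names, and the client sends each packet to every node directly, whereas real HDFS forwards packets from one data node to the next.

```python
PACKET_SIZE = 64 * 1024  # 64 KB packets, as in the slide


class Node:
    """Hypothetical data node that ACKs packets only while alive."""

    def __init__(self, name, alive=True):
        self.name = name
        self.alive = alive
        self.packets = []

    def receive(self, packet):
        if not self.alive:
            return False  # a dead node never sends an ACK
        self.packets.append(packet)
        return True


def split_into_packets(block: bytes, size: int = PACKET_SIZE):
    return [block[i:i + size] for i in range(0, len(block), size)]


def write_block(block: bytes, pipeline):
    """Send every packet to each node; drop nodes that fail to ACK."""
    pipeline = list(pipeline)
    dead = []
    for packet in split_into_packets(block):
        for node in list(pipeline):
            if not node.receive(packet):
                pipeline.remove(node)  # adjust the pipeline to skip the node
                dead.append(node.name)
    return pipeline, dead


def read_block(replicas):
    """Read failure handling: fall through to the next live replica."""
    for node in replicas:
        if node.alive:
            return b"".join(node.packets)
    raise IOError("no live replica available")


dn1, dn2, dn3 = Node("dn1"), Node("dn2", alive=False), Node("dn3")
block = b"x" * (2 * PACKET_SIZE + 10)
survivors, dead = write_block(block, [dn1, dn2, dn3])
print([n.name for n in survivors], dead)
```

After the write, the block exists on fewer nodes than intended; bringing it back to the target replica count is a separate step.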

Handling write failure (contd.): The name node keeps two tables. List of blocks: blockA in dn1, dn2, dn8; blockB in dn3, dn7, dn9. List of data nodes: dn1 has blockA, blockD; dn2 has blockE, blockG. The name node checks the list of blocks to see whether any block is not properly replicated. If so, it asks other data nodes to copy the block from data nodes that hold a replica.
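The re-replication check can be sketched with plain dictionaries. The function names, the tuple format of the copy orders, and the rule "copy from the first surviving holder to the alphabetically first free node" are assumptions for illustration only; the block table reuses the blockA/blockB entries from the slide, with dn2 assumed to have died.

```python
REPLICATION = 3  # target number of replicas per block


def build_node_table(block_table):
    """Derive the 'list of data nodes' table from the 'list of blocks' table."""
    node_table = {}
    for block, nodes in block_table.items():
        for node in nodes:
            node_table.setdefault(node, set()).add(block)
    return node_table


def plan_rereplication(block_table, live_nodes, replication=REPLICATION):
    """Return (block, source, target) copy orders for under-replicated blocks."""
    orders = []
    for block, nodes in sorted(block_table.items()):
        holders = [n for n in nodes if n in live_nodes]
        missing = replication - len(holders)
        if missing <= 0 or not holders:
            continue  # fully replicated, or no surviving copy to read from
        candidates = sorted(live_nodes - set(holders))
        for target in candidates[:missing]:
            orders.append((block, holders[0], target))
    return orders


block_table = {
    "blockA": ["dn1", "dn2", "dn8"],
    "blockB": ["dn3", "dn7", "dn9"],
}
live_nodes = {"dn1", "dn3", "dn7", "dn8", "dn9"}  # dn2 is assumed dead

print(build_node_table(block_table))
print(plan_rereplication(block_table, live_nodes))
```

Only blockA produces a copy order here, because blockB still has all three of its replicas on live nodes.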

Pros: Very large files: file sizes over xxx MB, GB, TB, PB, ... Streaming data access: write-once, read-many; efficient at reading a whole dataset. Commodity hardware: high reliability and availability without requiring expensive, highly reliable hardware.

Cons

Conclusion: HDFS is an Apache Hadoop subproject. It is highly fault-tolerant and is designed to be deployed on low-cost hardware. It offers high throughput but not low latency.