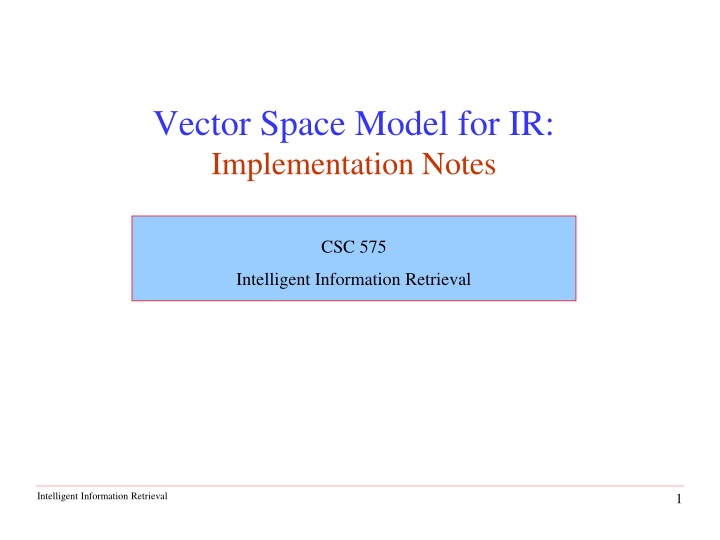

Implementation Notes on Intelligent Information Retrieval with Vector Space Model

Learn about the implementation of the Vector Space Model for Information Retrieval in CSC 575. Explore inverted index design for efficient search, posting classes, token information, and document references. Understand the representation of document vectors and essential structures for intelligent information retrieval.

Uploaded on | 0 Views

Download Presentation

Please find below an Image/Link to download the presentation.

The content on the website is provided AS IS for your information and personal use only. It may not be sold, licensed, or shared on other websites without obtaining consent from the author. If you encounter any issues during the download, it is possible that the publisher has removed the file from their server.

You are allowed to download the files provided on this website for personal or commercial use, subject to the condition that they are used lawfully. All files are the property of their respective owners.

The content on the website is provided AS IS for your information and personal use only. It may not be sold, licensed, or shared on other websites without obtaining consent from the author.

E N D

Presentation Transcript

Vector Space Model for IR: Implementation Notes CSC 575 Intelligent Information Retrieval Intelligent Information Retrieval 1

Inverted Index Designed for efficient search based on tokens in a query dictionary contains terms, but will now also contain statistics about the term such as the DF (or IDF) values the postings will contain the doc ids and the weights (usually counts) for all occurrences of the term Doc Freq Token Occurrence (Posting) Index terms D9, 1 D11, 3 D7, 4 3 computer D3, 1 database 2 D1, 3 D2, 2 D8, 1 D3, 1 D5, 3 4 science system 1 D5, 2 Postings lists Dictionary/Index Intelligent Information Retrieval 2

Posting Class // A lightweight object for storing information about // occurrences of a single token in a Document (i.e., a posting) class Posting { // A reference to the Document where token occurs docRef = null // an object of type DocumentReference count = 0 // The number of times it occurs in the Document // Constructor for a Posting object def Posting(docRef, count) { this.docRef = docRef this.count = count } } Intelligent Information Retrieval 3

TokenInfo Class This is a dictionary entry corresponding to a token. We ll assume that each entry will contain the IDF value for the token as well as the postings list for the token class TokenInfo { idf = 0.0 // IDF value for the token // A list of Postings of documents where this token occurs postingList // May be a list, array, or hashtable structure // Create an initially empty data structure def TokenInfo() { this.postingList = empty list / array / hashtable this.idf = 0.0 } } Intelligent Information Retrieval 4

DocumentReference Class // A simple data structure for storing a reference to a // document file that may include information on the length of // its document vector. This is a lightweight object to store // in inverted index without storing an entire Document object. class DocumentReference { // The file where the referenced document is stored. file = null // The length of the corresponding Document vector. length = 0.0 def DocumentReference(file, length) { this.file = file this.length = length } . . . } Intelligent Information Retrieval 5

Representing a Document Document Vector Typically, a document vector is represented as a hashtable structure (such as Java Hashmap or Python Dict) In the following I will use Java-style Hashmaps to refer to the hashtable structures, but this could be replaced by the appropriate structure in other languages Needed as an efficient, indexed representation of sparse document vectors Some numeric libraries may have specific data structures for efficiently representing vectors Intelligent Information Retrieval 6

Constructing an Inverted Index Create an empty HashMap, H (will hold the dictionary) For each document, D, (i.e. file in an input directory): Create a HashMap Vector, V, for D; For each token, T, in V: TokenInfo for T and insert it into H; Create a Posting for T in D and add it to the postingList in the TokenInfo for T; If T is not already in H, create an empty Compute IDF for all tokens in H; Compute vector lengths for all documents in H; Intelligent Information Retrieval 7

Computing IDF Let N be the total number of Documents; For each token, T, in the dictionary H: Determine the total number of documents, M, in which T occurs (the length of T s postingList); Set the IDF for T to log2(N/M); // alternatively, set DF value for T to M and postpone // full IDF value computation Note that full IDF computation requires a second pass through all the tokens after all documents have been indexed (since we need the value of N). Intelligent Information Retrieval 8

Document Vector Length Remember that the length (norm) of a document vector is the square-root of sum of the squares of the weights of its tokens. Remember the weight of a token is: TF * IDF. Therefore, must wait until IDF s are known (and therefore until all documents are indexed) before document lengths can be determined. Intelligent Information Retrieval 9

Computing Document Lengths Assume the length of all document vectors are initialized to 0.0; For each token T in dictionary H: Let, I be the IDF weight of T; For each Posting of T in document D Let, C, be the count of T in D; Increment the length of D by (I*C)2; For each document D in H: Set the length of D to be the square-root of the current stored length; Intelligent Information Retrieval 10

After Index Construction: Example idf Index terms D4, 2 D1, 4 D2, 1 D3, 1 D3, 2 computer 0.42 D2, 3 D1, 3 D1, 2 database network 1.00 1.00 2.00 log2*(4/3) file D3, 1 D1, 1 D2, 2 0.42 2.00 science system D2, 3 Computing Document Lengths (Example: D1) Vector for D1 computer: 4*0.42, network: 3*1, file: 2*2, science: 1*0.42 computer: 1.68, network: 3, file: 4, science: 0.42 Length of D1 SQRT(1.68*1.68 + 3*3 + 4*4 + 0.42*0.42 = 5.29 Intelligent Information Retrieval 11

Time Complexity of Indexing Complexity of creating vector and indexing a document of n tokens is O(n). So indexing m such documents is O(m n). Computing token IDFs for a vocabulary V is O(|V|). Computing vector lengths is also O(m n). Since |V| m n, complete process is O(m n), which is also the complexity of just reading in the corpus. Intelligent Information Retrieval 12

Retrieval with an Inverted Index Tokens that are not in both the query and the document do not effect the dot products in the computation of Cosine similarities. Usually the query is fairly short, and therefore its vector is extremely sparse. Use inverted index to find the limited set of documents that contain at least one of the query words. Intelligent Information Retrieval 13

Inverted Query Retrieval Efficiency Assume that, on average, a query word appears in B documents: Q = q1 q2 qn D11 D1B D21 D2B Dn1 DnB Then retrieval time is O(|Q| B), which is typically, much better than na ve retrieval that examines all N documents, O(|V| N), because |Q| << |V| and B << N. Intelligent Information Retrieval 14

Processing the Query Incrementally compute dot product of each indexed document as query words are processed one by one. To accumulate a total score for each retrieved document store retrieved documents in a hashtable DocumentReference is the key and the partial accumulated score is the value Once full dot product is computed, divide by the lengths (norms) of the document and query to obtain cosine similarity. Intelligent Information Retrieval 15

Inverted-Index Retrieval Algorithm Create a HashMap Vector, Q, for the query. Create empty HashMap, R, to store retrieved documents with scores. For each token, T, in Q: Let I be the IDF of T, and K be the count (tf) of T in Q; Set the weight of T in Q: W = K * I; Let L be the postingList of T from the dictionary H; For each Posting, P, in L: Let D be the document of P, and C be the count of P (tf of T in D); If D is not already in R (D was not previously retrieved) Then add D to R and initialize score to 0.0; Increment D s score by W * I * C; (product of T s weights in Q and D) Intelligent Information Retrieval 16

Retrieval Algorithm (cont) Compute the length, L, of the vector Q (square-root of the sum of the squares of its weights). For each retrieved document D in R: Let S be the current accumulated score of D; (S is the dot-product of D and Q) Let Y be the length of D as stored in its DocumentReference; Normalize D s final score to S/(L * Y); Sort retrieved documents in R by final score Return results in an array Intelligent Information Retrieval 17

An example of Query Processing from an Inverted Index Based on the Retrieval Algorithm Intelligent Information Retrieval 18

Query: information retrieval system IDF D1, 4 D3, 1 D5, 3 0.74 information D2, 2 1.32 query D1, 3 Computed during index construction 0.74 D1, 3 D4, 1 retrieval D5, 2 0.74 search D2, 2 D3, 1 D4, 2 1.32 system D1, 1 D5, 2 Doc lengths: D1 = SQRT((4*0.74)2 + (3*1.32)2 + (3*0.74)2 + (1*1.32)2 = 5.57 D2 = 3.03 D3 = 1.04 D4 = 1.65 D5 = 3.75 = 1.68 Q length = SQRT(0.74*0.74 + 0.74*0.74 + 1.32*1.32) Intelligent Information Retrieval 19

Query: information retrieval system D1, 4 D3, 1 D5, 3 0.74 information D2, 2 1.32 query D1, 3 0.74 D1, 3 D4, 1 retrieval D5, 2 0.74 search D2, 2 D3, 1 D4, 2 1.32 system D1, 1 D5, 2 Q Term: information (Iteration 1) increment score for D1 0 + (4*0.74) * (1*0.74) = 2.17 increment score for D3 0 + (1*0.74) * (1*0.74) = 0.54 increment score for D5 0 + (3*0.74) * (1*0.74) = 1.63 TFxIDF weight for information in Q TFxIDF weight for information in D1 Intelligent Information Retrieval 20

Query: information retrieval system D1, 4 D3, 1 D5, 3 0.74 information D2, 2 1.32 query D1, 3 0.74 D1, 3 D4, 1 retrieval D5, 2 0.74 search D2, 2 D3, 1 D4, 2 1.32 system D1, 1 D5, 2 Q Term: retrieval (Iteration 2) increment score for D1 2.17 + (3*0.74) * (1*0.74) = 3.80 increment score for D4 0 + (1*0.74) * (1*0.74) = 0.54 increment score for D5 1.63 + (2*0.74) * (1*0.74) = 2.72 D1 Score (partial dot product) from previous iteration Intelligent Information Retrieval 21

Query: information retrieval system D1, 4 D3, 1 D5, 3 0.74 information D2, 2 1.32 query D1, 3 0.74 D1, 3 D4, 1 retrieval D5, 2 0.74 search D2, 2 D3, 1 D4, 2 1.32 system D1, 1 D5, 2 Q Term: system (Iteration 3) increment score for D1 3.80 + (1*1.32) * (1*1.32) = 5.55 increment score for D5 2.72 + (2*1.32) * (1*1.32) = 6.21 Intelligent Information Retrieval 22

Query: information retrieval system D1, 4 D3, 1 D5, 3 0.74 information D2, 2 1.32 query D1, 3 0.74 D1, 3 D4, 1 retrieval D5, 2 0.74 search D2, 2 D3, 1 D4, 2 1.32 system D1, 1 D5, 2 Doc length (norm) for Query Final Scores (Cosine): D5 = 6.21 / (3.75 * 1.68) = 0.98 D1 = 5.55 / (5.57 * 1.68) = 0.59 D3 = 0.54 / (1.04 * 1.68) = 0.31 D4 = 0.54 / (1.64 * 1.68) = 0.20 Final Dot Products: D1 = 5.55 D3 = 0.54 D4 = 0.54 D5 = 6.21 Doc length (norm) for D5 Intelligent Information Retrieval 23

Design Issues for Inverted Index Implementation Four major options for storing weights in the postings Store the raw counts slow, but flexible (term weights can be changed without changing the index) Store normalized frequency the normalization would be done during the creation of the final dictionary and postings the normalized frequency would be inserted into the postings file instead of the raw term frequency Store the completely weighted term allows simple addition of term weights during the search, rather than first multiplying by the IDF of the term very fast, but changes to index requires changing all postings because the IDF changes; also relevance feedback reweighting is difficult No within-record frequency postings records do not store weights not suitable for ranked retrieval, but efficient for Boolean queries Intelligent Information Retrieval 24