Implementing AdaBoost Algorithm Insights

Discover the AdaBoost algorithm through insightful visuals and explanations. Learn about the boosting concept, AdaBoost algorithm overview, SVM classifier, credit card fraud dataset, and methods to address class imbalance. Dive into the world of machine learning with practical examples and explanations.

Download Presentation

Please find below an Image/Link to download the presentation.

The content on the website is provided AS IS for your information and personal use only. It may not be sold, licensed, or shared on other websites without obtaining consent from the author. If you encounter any issues during the download, it is possible that the publisher has removed the file from their server.

You are allowed to download the files provided on this website for personal or commercial use, subject to the condition that they are used lawfully. All files are the property of their respective owners.

The content on the website is provided AS IS for your information and personal use only. It may not be sold, licensed, or shared on other websites without obtaining consent from the author.

E N D

Presentation Transcript

Implementing AdaBoost Jonathan Boardman

Boosting The General Idea Kind of like a game of Guess Who? Each question on it s own only provides limited insight, much like a weak learner Many questions taken together allows for stronger predictions Image credit: https://i5.walmartimages.com/asr/5295fd05-b791-4109-9d82-07e8f41a9634_1.eeddf5db2eeb5a98103151a5df399166.jpeg?odnHeight=450&odnWidth=450&odnBg=FFFFFF

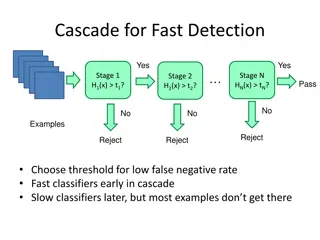

AdaBoost Algorithm Overview Choose how many iterations to run For each iteration: Train a classifier on a weighted sample (weights initially equal) to obtain a weak hypothesis Generate a strength for this hypothesis based on how well the learner did Reweight the sample Incorrectly classified observations get upweighted Correctly classified observations get downweighted Final classifier is a weighted sum of the weak hypotheses

The Classifier Support Vector Machine svm.SVC from sklearn Used default settings RBF kernel Gamma equal to 1 / n_features C set to 1 Gamma is roughly the inverse of the radius of influence of a single training example C acts as a regularization parameter Lower C -> larger margin -> simpler decision function Higher C -> smaller margin -> more complex decision function Image credit: https://scikit-learn.org/stable/auto_examples/svm/plot_rbf_parameters.html#sphx-glr-auto-examples-svm-plot-rbf-parameters-py

The Dataset Credit Card Fraud 284,807 credit card transactions collected over a period of 3 days, but only 492 were fraudulent. 30 Predictor Variables: Time, Amount, and 28 principal components V1 through V28 Target Variable: Class Binary Fraud (1) or Not Fraud (0)

Removing Class Imbalance Subsetting, Undersampleing, Shuffling the Data Undersampled the majority class (non-fraud) Random Sample of 492 without replacement from observations with Class = 0 Concatenated the 492 fraud and the 492 sampled non-fraud observations together to create a balanced dataset. Shuffled the observations

Further Preprocessing Drop all predictor fields except principal components V1 and V2 NOTE: In just these 2 dimensions, the data is not linearly separable Separate label and predictor data Apply z-score normalization to V1 and V2 Split the data into 5 disjoint folds

Results Boosting is Better than Lone SVM Lone SVM 5-Fold CV Accuracy: 0.817 AdaBoost-ed SVM 5-Fold CV Accuracy: 0.840 (18 iterations)