Individualizing Bayesian Knowledge Tracing: Skill vs. Student Parameters

Modeling student learning variability and the impact of skill and student-level factors in predicting performance using Bayesian Knowledge Tracing. Exploring the importance of individualization and understanding the influence of skill parameters versus student parameters.

Download Presentation

Please find below an Image/Link to download the presentation.

The content on the website is provided AS IS for your information and personal use only. It may not be sold, licensed, or shared on other websites without obtaining consent from the author.If you encounter any issues during the download, it is possible that the publisher has removed the file from their server.

You are allowed to download the files provided on this website for personal or commercial use, subject to the condition that they are used lawfully. All files are the property of their respective owners.

The content on the website is provided AS IS for your information and personal use only. It may not be sold, licensed, or shared on other websites without obtaining consent from the author.

E N D

Presentation Transcript

Individualizing BKT. Are Skill Parameters More Important Than Student Parameters? Michael V. Yudelson Carnegie Mellon University

Modeling Student Learning (1) Sources of performance variability Time learning happens with repetition Knowledge quanta learning is better (only) visible when aggregated by skill Students people learn differently Content there are easier/harder chunks of material Michael V. Yudelson (C) 2016 2

Modeling Student Learning (2) Accounting for variability Time implicitly present in time-series data Knowledge quanta skills are frequently used as units of transfer Content many models address modality and/or instances of content Students significant attention is given to accounting for individual differences Michael V. Yudelson (C) 2016 3

Of Students and Skills Without accounting for skills (the what) there is little chance to see learning Component theory of transfer reliably defeats faculty theory and item-based models (Koedinger, et al., 2016) Student (the who) is, arguably, the runner up/contestant for the most potent factor Skill and student-level factors in models of learning Which one is more influential when predicting performance? Michael V. Yudelson (C) 2016 4

Focus if this work Subject: mathematics Model: Bayesian Knowledge Tracing (BKT) Investigation: adding per-student parameters Extension: [globally] weighting skill vs. student parameters Question: which [global] weight is larger per-skills or per-student? Michael V. Yudelson (C) 2016 5

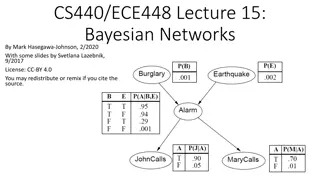

Bayesian Knowledge Tracing Unrolled view of single-skill BKT Parameters Values [0,1] Rows sum to 1 Forgetting (pF) = 0 Why 4 parameters Rows sum to 1, every last value can be omitted Forgetting is 0, no 5th parameter ? Priors? ? ? ? ? Known? Init? Unknown? 1-? Init? ? ? to? Known? to? Unknown? Forget? 1-Learn? Transitions? ? A? ? from? Known?1-Forget? from? Unknown? Learn? ? ? Known? Correct? 1-Slip? Guess? Incorrect? Slip? 1-Guess? Emissions? ? B? ? ? Unknown? Michael V. Yudelson (C) 2016 6

Individualization BKT Individualization student-level parameters 1PL IRT, AFM student ability intercept Split BKT parameters into student/skill components Corbett & Anderson, 1995; Yudelson et al., 2013 Multiplex Init for different student cohorts Pardos & Heffernan, 2010 Parameters are only set within student, not across students Lee & Brunskill, 2012 Michael V. Yudelson (C) 2016 7

Additive/Compensatory BKT Individualization BKT parameter P {Init, Learn, Slip, Guess) iBKT: splitting parameter P (Yudelson et al., 2013) P = f(Puser,Pskill)=Sigmoid( logit(Puser) + logit(Pskill) ) Per-student and per-skill parameters are added on the logit scale and converted back to probability scale Setting all Puser = 0.5 converts iBKT to standard BKT iBKT model fit using block coordinate descent iBKT-W: making parameter split more interesting P = f(Pu,Pk,W0,Wu,Wk,Wuk)= Sigmoid( W0 + Wu? logit(Pu) + Wk? logit(Pk) + Wuk? logit(Pu)? logit(Pk) ) W0 bias, hopefully low Wu vs. Wk student vs. skill weight Wuk interaction of student and skill components Michael V. Yudelson (C) 2016 8

Fitting BKT Models iBKT: HMM-scalable Public version Standard BKT only https://github.com/IEDMS/standard-bkt Fits standard and individualized BKT models using a suite of gradient-based solvers Exact inference of student/skill parameters (via block coordinate descent) iBKT-W: JAGS/WinBUGS via R s rjags package Hierarchical Bayesian Model Flexible hyper-parameterization Skill parameters drawn from uniform distribution Student parameters drawn from Gaussian distribution Only individualize Init and Learn parameters. Michael V. Yudelson (C) 2016 9

Data KDD Cup 2010 Educational Data Mining Challenge. Carnegie Learning s Cognitive Tutor data http://pslcdatashop.web.cmu.edu/KDDCup One curriculum unit Linear Inequalities JAGS/WibBUGS is less computationally efficient 336 students 66,307 transactions 30 skills Michael V. Yudelson (C) 2016 10

BKT Models. Statistical Fit. Model Parameters Hyper Parameters RMSE Accuracy Majority Class (predict correct) 0 0 0.52516 0.7242 Standard BKT hmm- scalable *4N 0 0.40571 0.7561 Standard BKT HBM 4N 0 0.40299 0.7569 iBKT hmm-scalable **4N+2M 0 0.39376 0.7680 iBKT HBM 4N+2M 4 0.39287 0.7692 iBKT-W HBM 4N+2M+4 12 0.39236 0.7687 iBKT-W-2G HBM*** 4N+2M+4 16 0.39252 0.7689 * N number of skills ** M number of students *** Init and Learn fit as a mixture of 2 Gaussians Michael V. Yudelson (C) 2016 11

The Story of Two Gaussians iBKT-W HBM iBKT-W-2G* HBM * Fitting a mixture of 3-Gaussians results in bimodal distribution as well Michael V. Yudelson (C) 2016 12

Student vs. Skill Model W0 0.012 Wskill 0.565 Wstudent 1.420 Wstudent*skill 0.004 iBKT-W HBM iBKT-W-2G HBM 0.019 0.700 1.274 0.007 The [global] bias W0 is low The [global] interaction term Wstudent*skill is even lower Student [global] weight in the additive function is visibly higher Michael V. Yudelson (C) 2016 13

Discussion (1) Student parameters v. skill parameters Bias and interaction terms effectively 0 A little disappointed about the interaction Student parameters weighted higher (2 reported + 7 additional models tried) Only small chance of over-fit despite random- factor treatment 30 skills (uniform distr.) 336 students (Gaussian distr.) Wk and Wu weights could be compensating/shifting the individual student/skill parameters Michael V. Yudelson (C) 2016 14

Discussion (2) iBKT via hmm-scalable Per-student Init(x)~Learn(y) Exact inference (fixed effect) iBKT-W-2G via HBM Per-student Init(x)~Learn(y) Regularization via setting priors Wouldn t it be nice to have students here? W R R R R R, BKT: Init 0, Learn 1, Logistic: Sigmoid(intercept) 0, Sigmoid(slope) 1 Michael V. Yudelson (C) 2016 15

Discussion (3) Small differences in statistical fits Models with similar accuracies could be vastly different Significant differences in the amount of practice they would prescribe Prescribed practice time hh:mm Michael V. Yudelson (C) 2016 16

Discussion (4) iBKT-W-2G: what do 2 Gaussians represent? Problems, time, hints, errors, % correct, {time,errors,hints}/problem none of these explain the membership Lower Init&Learn vs. higher Init&Learn does explain membership latent uni-dimensional student ability Michael V. Yudelson (C) 2016 17

Thank you! Michael V. Yudelson (C) 2016 18