Inference from Non-Probability Samples Using Machine Learning

This presentation covers the use of machine learning techniques for making inferences from non-probability samples. Topics include expanding the toolbox for statistical analysis, disruptive technologies, big data challenges, and methods such as k-nearest neighbors and regression trees.


Presentation Transcript

Expanding the toolbox: Inference from non-probability samples using machine learning. Joep Burger, Bart Buelens, Jan van de Brakel, Statistics Netherlands. INPS, 16-17 March 2017, Paris.

Disruptive technologies: big picture. Transport, light, music.

Disruptive technologies: big picture. Official statistics.

Big data. Human-sourced data: social media, internet search. Process-mediated data: scanners, electronic funds transfers. Machine-generated data: GPS, sensors. (UNECE 2013)

Potential: timelier; higher frequency; more detail; higher precision ( more accurate); lower measurement bias; cheaper; less burden.

Challenges. Representation: big data sample vs. population of interest; non-probability samples. Others: measurement (data information), processing, privacy, continuity.

Street Bump app: automatic pothole mapping. Selection bias: wealthier, younger neighborhoods.

INPS. Inference: generalize from sample to population. Predict missing values: pseudo-design-based, model-based, algorithmic. Auxiliary information: known for all units in the population. [Figure: population split into sample and remainder]

Formal. Population quantity of interest: values known for sample S, unknown for remainder R. Prediction estimator, with variance through bootstrapping.
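The prediction idea on this slide can be sketched in code. This is a minimal sketch, not the authors' implementation: it assumes the quantity of interest is the population mean, uses the sample mean as a stand-in predictor, and resamples the sample units with replacement as one simple bootstrap scheme. All function names and the toy numbers are hypothetical.

```python
import random
import statistics

def prediction_estimator(y_sample, x_remainder, predict):
    """Population mean estimate: observed y in S plus predicted y in R."""
    predicted = [predict(x) for x in x_remainder]
    total = sum(y_sample) + sum(predicted)
    return total / (len(y_sample) + len(x_remainder))

def fit_sample_mean(x_sample, y_sample):
    """Simplest predictor: every unobserved unit gets the sample mean."""
    mean = statistics.fmean(y_sample)
    return lambda x: mean

def bootstrap_variance(x_sample, y_sample, x_remainder, fit, n_boot=200, seed=0):
    """Resample the sample with replacement, refit, and re-estimate."""
    rng = random.Random(seed)
    n = len(y_sample)
    estimates = []
    for _ in range(n_boot):
        idx = [rng.randrange(n) for _ in range(n)]
        xb = [x_sample[i] for i in idx]
        yb = [y_sample[i] for i in idx]
        estimates.append(prediction_estimator(yb, x_remainder, fit(xb, yb)))
    return statistics.variance(estimates)

# Toy data (made up): four observed units, two unobserved units.
x_s = [1, 2, 3, 4]
y_s = [10.0, 12.0, 14.0, 16.0]
x_r = [5, 6]
estimate = prediction_estimator(y_s, x_r, fit_sample_mean(x_s, y_s))
variance = bootstrap_variance(x_s, y_s, x_r, fit_sample_mean)
```

Any of the methods on the following slides could be passed as `fit` instead of `fit_sample_mean`, as long as it returns a function that maps auxiliary values to a predicted value.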

Methods: sample mean (SAM); pseudo-design-based (PDB); generalized linear model (GLM); k-nearest neighbors (KNN); artificial neural network (ANN); regression tree (RTR); support vector machine (SVM).

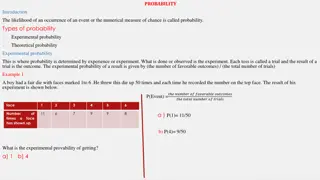

Sample mean (SAM): mean of observed units. $\hat{y}_i = \bar{y} = \frac{1}{n} \sum_{j \in S} y_j$, with $n$ the number of units in sample $S$.

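Pseudo-design-based (PDB): mean of observed units within stratum; use auxiliary variables. For a unit $i$ in stratum $h$: $\hat{y}_i = \bar{y}_h = \frac{1}{n_h} \sum_{j \in S_h} y_j$, with $S_h$ the $n_h$ sample units in stratum $h$.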
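The stratum-mean prediction described on this slide can be sketched as follows. This is a minimal sketch under stated assumptions: strata are given as labels built from auxiliary variables, and the fallback to the overall sample mean for a stratum with no observed units is an assumption added here, not something the slide specifies. The function name and toy values are hypothetical.

```python
from collections import defaultdict

def fit_stratum_means(strata_sample, y_sample):
    """Return a predictor that maps a stratum label to its observed mean."""
    sums = defaultdict(float)
    counts = defaultdict(int)
    for h, y in zip(strata_sample, y_sample):
        sums[h] += y
        counts[h] += 1
    means = {h: sums[h] / counts[h] for h in sums}
    overall = sum(y_sample) / len(y_sample)
    # Assumption: strata without observed units fall back to the overall mean.
    return lambda h: means.get(h, overall)

# Toy data (made up): stratum label and annual mileage per observed unit.
strata = ["petrol", "petrol", "diesel"]
mileage = [10000.0, 12000.0, 20000.0]
predict_pdb = fit_stratum_means(strata, mileage)
```

Calling `predict_pdb("petrol")` returns the mean of the two petrol units, while a label that never occurs in the sample gets the overall mean.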

Generalized linear model (GLM): generalized linear combination of auxiliary variables. $g(E[y_i]) = x_i^\top \beta$, so $\hat{y}_i = g^{-1}(x_i^\top \hat{\beta})$.
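As a minimal sketch of the model-based approach, the special case of an identity link with a single auxiliary variable reduces to simple linear regression, which has a closed-form fit. That special case is an assumption made here for brevity; a non-identity link would need an iterative fitting procedure that is not shown. The function names and toy data are hypothetical.

```python
def fit_linear(x, y):
    """Ordinary least squares for one auxiliary variable (identity link)."""
    n = len(x)
    mean_x = sum(x) / n
    mean_y = sum(y) / n
    sxx = sum((xi - mean_x) ** 2 for xi in x)
    sxy = sum((xi - mean_x) * (yi - mean_y) for xi, yi in zip(x, y))
    slope = sxy / sxx
    intercept = mean_y - slope * mean_x
    return intercept, slope

def predict_linear(beta, x_new):
    """Predicted value for an unobserved unit with auxiliary value x_new."""
    intercept, slope = beta
    return intercept + slope * x_new

# Toy data (made up) lying exactly on the line y = 1 + 2x.
beta_hat = fit_linear([1, 2, 3, 4], [3.0, 5.0, 7.0, 9.0])
y_hat = predict_linear(beta_hat, 5)
```

Unlike the stratum mean, this predictor can extrapolate to auxiliary values outside the observed range, which is both its strength and its risk.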

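k-Nearest neighbors (KNN): mean of the $k$ observed units closest in $x$ space; requires a distance measure. $\hat{y}_i = \frac{1}{k} \sum_{j \in N_k(i)} y_j$, with $N_k(i)$ the $k$ sample units nearest to unit $i$. [Figure: units plotted in the $(x_1, x_2)$ plane]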
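The nearest-neighbor prediction can be sketched in a few lines. This is a minimal sketch assuming Euclidean distance on already comparable (for example, standardized) auxiliary variables; the slide does not say which distance measure was used. The function name and toy data are hypothetical.

```python
import math

def knn_predict(x_new, x_sample, y_sample, k=3):
    """Mean of y over the k sample units closest to x_new."""
    # Pair each sample unit's distance to x_new with its observed y value.
    by_distance = sorted(
        (math.dist(x_new, x), y) for x, y in zip(x_sample, y_sample)
    )
    nearest = by_distance[:k]
    return sum(y for _, y in nearest) / len(nearest)

# Toy data (made up): three units near the origin and one far away.
x_obs = [(0.0, 0.0), (1.0, 0.0), (0.0, 1.0), (5.0, 5.0)]
y_obs = [1.0, 2.0, 3.0, 10.0]
y_knn = knn_predict((0.1, 0.1), x_obs, y_obs, k=3)
```

With `k=3` the distant unit is ignored, so the prediction is the mean of the three nearby values.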

Artificial neural network (ANN): artificial neuron; network of artificial neurons. $\hat{y}_i = \mathrm{ANN}(x_i, \theta)$. (Image: Kon Mamadou Tadiou)
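To make "artificial neuron" and "network of artificial neurons" concrete, here is a minimal sketch of a forward pass only. The tanh activation, the single hidden layer, the linear output neuron, and all weights are assumptions chosen for illustration; training the weights is not shown, and the slide does not specify the architecture the authors used.

```python
import math

def neuron(inputs, weights, bias, activation=math.tanh):
    """One artificial neuron: weighted sum of inputs passed through an activation."""
    return activation(sum(w * x for w, x in zip(weights, inputs)) + bias)

def tiny_network(x, hidden_params, output_params):
    """One hidden layer of neurons feeding a single linear output neuron."""
    hidden = [neuron(x, w, b) for w, b in hidden_params]
    w_out, b_out = output_params
    return neuron(hidden, w_out, b_out, activation=lambda z: z)

# Hypothetical weights: two hidden neurons, one output neuron.
hidden_layer = [([0.5, -0.25], 0.0), ([0.0, 0.0], 0.0)]
output_layer = ([1.0, 1.0], 3.0)
y_ann = tiny_network([1.0, 2.0], hidden_layer, output_layer)
```

In practice the weights would be learned from the sample, so that the network output approximates the observed values as a function of the auxiliary variables.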

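Regression tree (RTR): construct binary tree; maximize between-node variance; stopping criterion. [Figure: binary tree with threshold splits on $x_1$ and $x_2$] Mean of observed units within leaf: $\hat{y}_i = \mathrm{RTR}(x_i, \theta) = \frac{1}{n_l} \sum_{j \in S_l} y_j$, with $S_l$ the $n_l$ sample units in the leaf $l$ that contains unit $i$. Algorithmic version of PDB.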
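A single step of tree construction can be sketched as a search for the threshold that maximizes the between-group sum of squares. This is a minimal sketch for one auxiliary variable and one split; a full tree would apply the same search recursively within each child until a stopping criterion is met. The function names and toy data are hypothetical.

```python
def best_split(x, y):
    """Return (between_ss, threshold, left_mean, right_mean) for the best split."""
    overall = sum(y) / len(y)
    best = None
    # Exclude the largest value so the right-hand group is never empty.
    for threshold in sorted(set(x))[:-1]:
        left = [yi for xi, yi in zip(x, y) if xi <= threshold]
        right = [yi for xi, yi in zip(x, y) if xi > threshold]
        left_mean = sum(left) / len(left)
        right_mean = sum(right) / len(right)
        between = (len(left) * (left_mean - overall) ** 2
                   + len(right) * (right_mean - overall) ** 2)
        if best is None or between > best[0]:
            best = (between, threshold, left_mean, right_mean)
    return best

def predict_stump(split, x_new):
    """Predict with the mean of the leaf that x_new falls into."""
    _, threshold, left_mean, right_mean = split
    return left_mean if x_new <= threshold else right_mean

# Toy data (made up): two clearly separated groups.
x_obs = [1, 2, 3, 10, 11, 12]
y_obs = [5.0, 6.0, 7.0, 20.0, 21.0, 22.0]
split = best_split(x_obs, y_obs)
```

Each leaf plays the role of a stratum, which is why the slide calls the tree an algorithmic version of PDB: the strata are found from the data instead of being fixed in advance.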

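Support vector machine (SVM): linear separation in a higher-dimensional space of dimension $M > N$; learn in the original space of dimension $N$ (kernel trick). Kernel: $K(x_i, x_j) = \langle \phi(x_i), \phi(x_j) \rangle$. $\hat{y}_i = \mathrm{SVM}(x_i, \theta)$. [Figure: original space N and higher-dimensional space M]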
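The kernel trick can be checked numerically on a small case. This sketch uses a degree-2 polynomial kernel on two-dimensional inputs, chosen purely for illustration since the slide does not say which kernel the authors used. It shows that evaluating the kernel in the original space (N = 2) gives the same value as an inner product in the explicit higher-dimensional feature space (M = 3). All names are hypothetical.

```python
import math

def dot(a, b):
    """Inner product of two equal-length vectors."""
    return sum(p * q for p, q in zip(a, b))

def phi(v):
    """Explicit feature map from 2 dimensions to 3 dimensions."""
    x1, x2 = v
    return (x1 * x1, math.sqrt(2) * x1 * x2, x2 * x2)

def poly_kernel(a, b):
    """Degree-2 polynomial kernel, computed in the original space."""
    return dot(a, b) ** 2

a = (1.0, 2.0)
b = (3.0, 4.0)
in_original_space = poly_kernel(a, b)
in_feature_space = dot(phi(a), phi(b))
```

Both quantities equal 121 here, so a method that only needs inner products can work in the higher-dimensional space without ever computing `phi` explicitly.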

Case study: Online Kilometer Registration. 6.7 million privately owned cars. Annual mileage derived from mileage readings. Auxiliary variables: registration year, weight, fuel type, owner's age.

Non-probability sample: only data about young cars. Inference: [formula not recoverable]

Conclusions. Both probability samples (PS) and non-probability samples (NPS) may suffer from selection bias. Beyond pseudo-design-based methods: model-based, algorithmic. (Many, continuous) auxiliary variables are crucial: registers, paradata, profiling.

Working paper: https://www.cbs.nl/nl-nl/achtergrond/2015/44/predictive-inference-for-non-probability-samples Contact: j.burger@cbs.nl