Introduction to SVM and Logistic Regression in Machine Learning

Explore the fundamental concepts of Support Vector Machines (SVM) and Logistic Regression in machine learning through topics such as soft and hard margin comparisons, generative versus discriminative approaches, linear classifiers, margin optimization, and more. Dive into the differences between SVMs and logistic regression for effective classification tasks and understand how to handle non-linearly separable data.

Presentation Transcript

ECE 5984: Introduction to Machine Learning. Topics: SVM (soft & hard margin); comparison to Logistic Regression. Readings: Barber 17.5. Dhruv Batra, Virginia Tech.

New Topic (C) Dhruv Batra

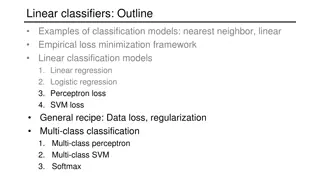

Generative vs. Discriminative. Generative approach (Naïve Bayes): estimate p(x|y) and p(y), then use Bayes' rule to predict y. Discriminative approach: estimate p(y|x) directly (Logistic Regression), or learn a discriminant function f(x) (Support Vector Machine).
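For reference, the Bayes' rule step of the generative approach can be written out as below. This is the standard identity; the summation index y' is notation introduced here and does not appear on the slide.

```latex
p(y \mid x) = \frac{p(x \mid y)\, p(y)}{\sum_{y'} p(x \mid y')\, p(y')},
\qquad
\hat{y} = \arg\max_{y} \; p(x \mid y)\, p(y)
```

The denominator does not depend on y, so the prediction only needs the product p(x|y) p(y).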

Linear classifiers: which line is better? $\mathbf{w} \cdot \mathbf{x} = \sum_j w^{(j)} x^{(j)}$
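A minimal sketch of a linear classifier built on this inner product. The bias term `b`, the function names, and the example line are assumptions added for illustration; the slide itself only shows the inner product.

```python
def dot(w, x):
    """Inner product w.x = sum_j w[j] * x[j]."""
    return sum(wj * xj for wj, xj in zip(w, x))


def predict(w, b, x):
    """Return +1 or -1 depending on the side of the line w.x + b = 0 that x lies on."""
    return 1 if dot(w, x) + b >= 0 else -1


# Hypothetical 2D example: the separating line x1 + x2 - 1 = 0.
w = [1.0, 1.0]
b = -1.0

print(predict(w, b, [2.0, 2.0]))  # a point on the positive side of the line
print(predict(w, b, [0.0, 0.0]))  # a point on the negative side of the line
```

Many different (w, b) pairs can separate the same training set, which is what motivates the "which line is better?" question.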

Margin: x+, x-. Slide Credit: Carlos Guestrin

Support vector machines (SVMs): solve efficiently by quadratic programming (QP), for which solution algorithms are well studied. The hyperplane is defined by the support vectors. Slide Credit: Carlos Guestrin
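The slide's equations did not survive extraction. As a reference point, the usual hard-margin formulation is sketched below; the symbols b (bias), x_j (training points), and y_j in {-1, +1} (labels) are assumed notation, not taken from the transcript.

```latex
\min_{\mathbf{w},\, b} \;\; \mathbf{w} \cdot \mathbf{w}
\qquad \text{subject to} \qquad
y_j \,(\mathbf{w} \cdot \mathbf{x}_j + b) \;\ge\; 1 \quad \text{for all } j
```

The objective is quadratic and the constraints are linear in (w, b), which is why this is a quadratic program. The training points whose constraints hold with equality are the support vectors.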

What if the data is not linearly separable? Slide Credit: Carlos Guestrin

What if the data is not linearly separable? Minimize $\mathbf{w} \cdot \mathbf{w}$ plus the number of training mistakes (0/1 loss), weighted by a slack penalty C. This is not a QP anymore, and it also doesn't distinguish near misses from really bad mistakes. Slide Credit: Carlos Guestrin

Slack variables and hinge loss: if the margin is >= 1, don't care; if the margin is < 1, pay a linear penalty. Slide Credit: Carlos Guestrin
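The slack-variable description above corresponds to the usual soft-margin formulation, sketched here with assumed notation: ξ_j is the slack for training point j, C is the slack penalty, and b, x_j, y_j are the bias, inputs, and labels in {-1, +1}.

```latex
\min_{\mathbf{w},\, b,\, \boldsymbol{\xi}} \;\; \mathbf{w} \cdot \mathbf{w} + C \sum_j \xi_j
\qquad \text{subject to} \qquad
y_j \,(\mathbf{w} \cdot \mathbf{x}_j + b) \;\ge\; 1 - \xi_j, \quad \xi_j \ge 0 \quad \text{for all } j
```

At the optimum each slack equals the hinge loss of its point, $\xi_j = \max\bigl(0,\; 1 - y_j(\mathbf{w} \cdot \mathbf{x}_j + b)\bigr)$: zero when the margin is at least 1, and growing linearly as the margin falls below 1.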

Soft Margin SVM: effect of C. Matlab demo by Andrea Vedaldi.
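The Matlab demo is not part of this transcript. As a rough stand-in, the sketch below compares two hand-picked candidate classifiers on a made-up 1D dataset under the objective w*w + C * (sum of hinge losses). The data, the two candidates, and all function names are hypothetical; nothing here is optimized, it only shows how C changes which candidate scores better.

```python
def hinge(margin):
    """Hinge loss: zero if margin >= 1, linear penalty otherwise."""
    return max(0.0, 1.0 - margin)


def soft_margin_objective(w, b, data, C):
    """Soft-margin objective w*w + C * sum of hinge losses, for 1D inputs."""
    slack = sum(hinge(y * (w * x + b)) for x, y in data)
    return w * w + C * slack


# Made-up 1D data: (x, label). The positive point at x = 0 sits close to the negatives.
data = [(-3.0, -1), (-2.0, -1), (2.0, 1), (3.0, 1), (0.0, 1)]

# Two hypothetical candidates (w, b):
wide = (0.5, 0.0)    # small w (wide margin), but violates the margin at x = 0
narrow = (1.0, 1.0)  # larger w (narrower margin), every point has margin >= 1


def preferred(C):
    """Name of the candidate with the lower objective for this C."""
    obj_wide = soft_margin_objective(wide[0], wide[1], data, C)
    obj_narrow = soft_margin_objective(narrow[0], narrow[1], data, C)
    return "wide" if obj_wide < obj_narrow else "narrow"


for C in (0.1, 10.0):
    print(C, preferred(C))
```

With a small C the margin violation is cheap, so the wide-margin candidate scores better; with a large C the violation dominates and the candidate that satisfies every constraint scores better.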

Side note: What's the difference between SVMs and logistic regression? SVM: hinge loss. Logistic regression (LR): logistic loss (log loss). Slide Credit: Carlos Guestrin
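The formulas on this slide were lost in extraction. A small sketch of the two losses as functions of the margin z = y * f(x) is given below, using the standard definitions; the function names and sample margins are chosen here for illustration.

```python
import math


def hinge_loss(z):
    """SVM hinge loss: max(0, 1 - z), where z = y * f(x) is the margin."""
    return max(0.0, 1.0 - z)


def logistic_loss(z):
    """Logistic regression log loss: log(1 + exp(-z)), where z = y * f(x)."""
    return math.log(1.0 + math.exp(-z))


# Compare the two losses at a few sample margins.
for z in (-2.0, 0.0, 1.0, 3.0):
    print(f"margin={z:+.1f}  hinge={hinge_loss(z):.4f}  logistic={logistic_loss(z):.4f}")
```

The hinge loss is exactly zero once the margin reaches 1, while the logistic loss stays positive for every finite margin and only approaches zero as the margin grows.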

Slide Credit: Andrew Moore

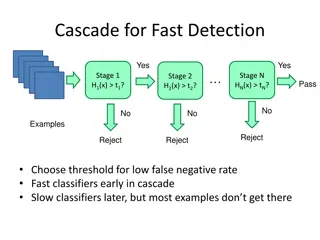

Does this always work? In a way, yes. Slide Credit: Blaschko & Lampert

Caveat. Slide Credit: Blaschko & Lampert

Kernel Trick: one of the most interesting and exciting advancements in the last 2 decades of machine learning is the kernel trick: high-dimensional feature spaces at no extra cost! But first, a detour: constrained optimization! Slide Credit: Carlos Guestrin
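To make the "no extra cost" claim concrete, here is a small sketch using one well-known case: for 2D inputs, the kernel (x.z)^2 equals the inner product of explicit degree-2 features. The choice of kernel, the feature map `phi`, and the sample points are illustrative assumptions and do not come from the slides.

```python
import math


def dot(u, v):
    """Inner product of two equal-length vectors."""
    return sum(ui * vi for ui, vi in zip(u, v))


def phi(x):
    """Explicit degree-2 feature map for a 2D point (x1, x2)."""
    x1, x2 = x
    return [x1 * x1, math.sqrt(2.0) * x1 * x2, x2 * x2]


def poly_kernel(x, z):
    """Degree-2 polynomial kernel, computed entirely in the original 2D space."""
    return dot(x, z) ** 2


x = [1.0, 2.0]
z = [3.0, -1.0]

explicit = dot(phi(x), phi(z))  # map to 3D first, then take the inner product
implicit = poly_kernel(x, z)    # same value without ever building phi

print(explicit, implicit)
```

The kernel gives the feature-space inner product using only a dot product in the original space, and the same idea extends to feature spaces far too large to build explicitly.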