iThreads: A Threading Library for Parallel Incremental Computation

This content explores iThreads, a threading library designed for efficient execution of applications in successive runs with small input changes. They aim for transparency, practicality, and efficiency in parallel incremental computation, targeting unmodified pthreads-based programs while supporting a full range of synchronization primitives. The approach involves automatic incremental computation and parallelism proposals, aiming for up to 8X speedups compared to pthreads. The design goals include practicality and efficiency, addressing the limitations of existing approaches in incremental computation.

Download Presentation

Please find below an Image/Link to download the presentation.

The content on the website is provided AS IS for your information and personal use only. It may not be sold, licensed, or shared on other websites without obtaining consent from the author.If you encounter any issues during the download, it is possible that the publisher has removed the file from their server.

You are allowed to download the files provided on this website for personal or commercial use, subject to the condition that they are used lawfully. All files are the property of their respective owners.

The content on the website is provided AS IS for your information and personal use only. It may not be sold, licensed, or shared on other websites without obtaining consent from the author.

E N D

Presentation Transcript

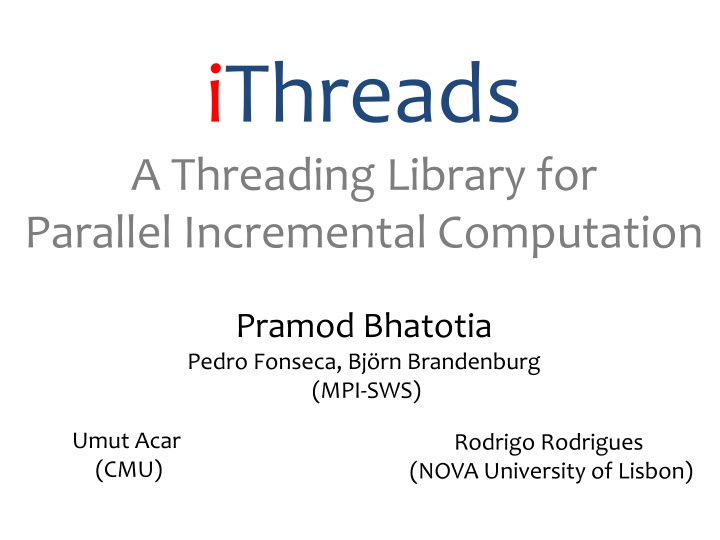

iThreads A Threading Library for Parallel Incremental Computation Pramod Bhatotia Pedro Fonseca, Bj rn Brandenburg (MPI-SWS) Umut Acar (CMU) Rodrigo Rodrigues (NOVA University of Lisbon)

Common workflow: Rerun the same application with evolving input Scientific computing Data analytics Reactive Systems Goal: Efficient execution of apps in successive runs Small input change small work to update the output 2

Existing approaches Design application- specific algorithms Re-compute from scratch (-) Inefficient (+) Easy to design (+) Efficient (-) Hard to design Incremental computation Automatic + Efficient 3

Auto Incrementalization Well-studied topic in the PL community Compiler and language-based approaches Target sequential programs! 4

Parallelism Proposals for parallel incremental computation Hammer et al. [DAMP 07] Burckhardt et al. [OOPSLA 11] Limitations Require a new language with special data-types Restrict programming model to strict fork-join Existing multi-threaded programs are not supported! 5

Design goals 1. Transparency Target unmodified pthreads based programs 2. Practicality Support the full range of synchronization primitives 3. Efficiency Design parallel infrastructure for incremental computation 6

iThreads $ LD_PRELOAD= iThreads.so $ ./myProgram <input-file> (initial run) $ echo <offset> <len> >> changes.txt $ ./myProgram <input-file> (incremental run) Speedups w.r.t. pthreads up to 8X 7

Outline Motivation Design Evaluation 8

Behind the scenes step#1 Divide step#2 Build step#3 Perform Computation Sub-computations Dependence graph Change propagation Initial run Incremental run 9

A simple example shared variables: x, y, and z thread-1 thread-2 lock(); z = ++y; unlock(); lock(); x++; unlock(); local_var++; lock(); y = 2*x + z; unlock(); 10

Step # 1 Build Perform Divide Computation Sub-computations Dependence graph Change propagation How do we divide a computation into sub- computations? 11

Sub-computations thread-1 thread-2 lock(); z = ++y; unlock(); lock(); x++; unlock(); Entire thread? Instruction? Single local_var++; Coarse-grained Fine-grained lock(); y = 2*x + z; unlock(); Small change implies recomputing the entire thread Requires tracking individual load/store instructions 12

Sub-computation granularity Entire thread Single instruction Coarse-grained Expensive Release Consistency (RC) memory model to define the granularity of a sub-computation A sub-computation is a sequence of instructions between pthreads synchronization points 13

Sub-computations thread-1 thread-2 lock(); z = ++y; unlock(); Sub- computations for thread-2 lock(); x++; unlock(); Sub-computation for thread-1 local_var++; lock(); y = 2*x + z; unlock(); 14

Step # 2 Build Perform Divide Computation Sub-computations Dependence graph Change propagation How do we build the dependence graph? 15

Example: Changed schedule shared variables: x, y, and z thread-1 thread-2 lock(); z = ++y; unlock(); Read={x} Write={x} Read={local_var} Write={local_var} lock(); x++; unlock(); different schedule Read={x,z} Write={y} (1): Record happens-before dependencies local_var++; lock(); y = 2*x + z; unlock(); Read={y} Write={y,z} 16

Example: Same schedule shared variables: x, y, and z thread-1 thread-2 lock(); z = ++y; unlock(); Read={y} Write={y,z} Same schedule lock(); x++; unlock(); Read={x} Write={x} Read={local_var} Write={local_var} local_var++; lock(); y = 2*x + z; unlock(); Read={x,z} Write={y} 17

Example: Changed input y shared variables: x, y, and z thread-1 thread-2 lock(); z = ++y; unlock(); Read={y} Write={y,z} Write={y,z} Read={y} different input lock(); x++; unlock(); Read={x} Write={x} Read={local_var} Write={local_var} (2): Record data dependencies local_var++; Read={x,z} lock(); y = 2*x + z; unlock(); Read={x,z} Write={y} Write={y} 18

Dependence graph Vertices: sub-computations Edges: Happens-before order b/w sub-computations Data dependency b/w sub-computations 19

Step # 3 Build Perform Divide Computation Sub-computations Dependence graph Change propagation How do we perform change propagation? 20

Change propagation Dirty set {Changed input} For each sub-computation in a thread Check validity in the recorded happens-before order If (Read set Dirty set) Re-compute the sub-computation Add write set to the dirty set Else Skip execution of the sub-computation Apply the memoized effects of the sub-computation 21

Outline Motivation Design Evaluation 22

Evaluation Evaluating iThreads 1. Speedups for the incremental run 2. Overheads for the initial run Implementation (for Linux) 32-bit dynamically linkable shared library Platform Evaluated on Intel Xeon 12 cores running Linux 23

1. Speedup for incremental run No. of Threads = 12 No. of Threads = 24 No. of Threads = 48 No. of Threads = 64 10 Time speedup Better 1 Worst <0.1 0.1 Histogram Linear_reg Kmeans Matrix_mul Swapations Blackscholes String_match PCA Canneal Word_count RevIndex Speedups vs. pthreads up to 8X 24

Memoization overhead Write a lot of intermediate state 1000 Space Overheads 100 10 1 Histogram Linear_reg Kmeans Matrix_mul Swapations Blackscholes String_match PCA Canneal Word_count RevIndex Space overheads w.r.t. input size 25

2. Overheads for the initial run 1000 No. of Threads = 12 No. of Threads = 24 No. of Threads = 48 No. of Threads = 64 100 Time overhead Worst 10 1 Better 0.1 Histogram Linear_reg Kmeans Matrix_mul Swapations Blackscholes String_match PCA Canneal Word_count RevIndex Runtime overheads vs. pthreads 26

Summary A case for parallel incremental computation iThreads: Incremental multithreading Transparent: Targets unmodified programs Practical: Supports the full range of sync primitives Efficient: Employs parallelism for change propagation Usage: A dynamically linkable shared library 27

iThreads Transparent + Practical + Efficient bhatotia@mpi-sws.org Thank you! 28