Key Concepts Learned in ECE 313: Post-Midterm Review

Explore key concepts covered in ECE 313 after the midterm, including the Bernoulli, Poisson, and exponential distributions, and more. Get prepared for the final project presentation and the exam review.


Presentation Transcript

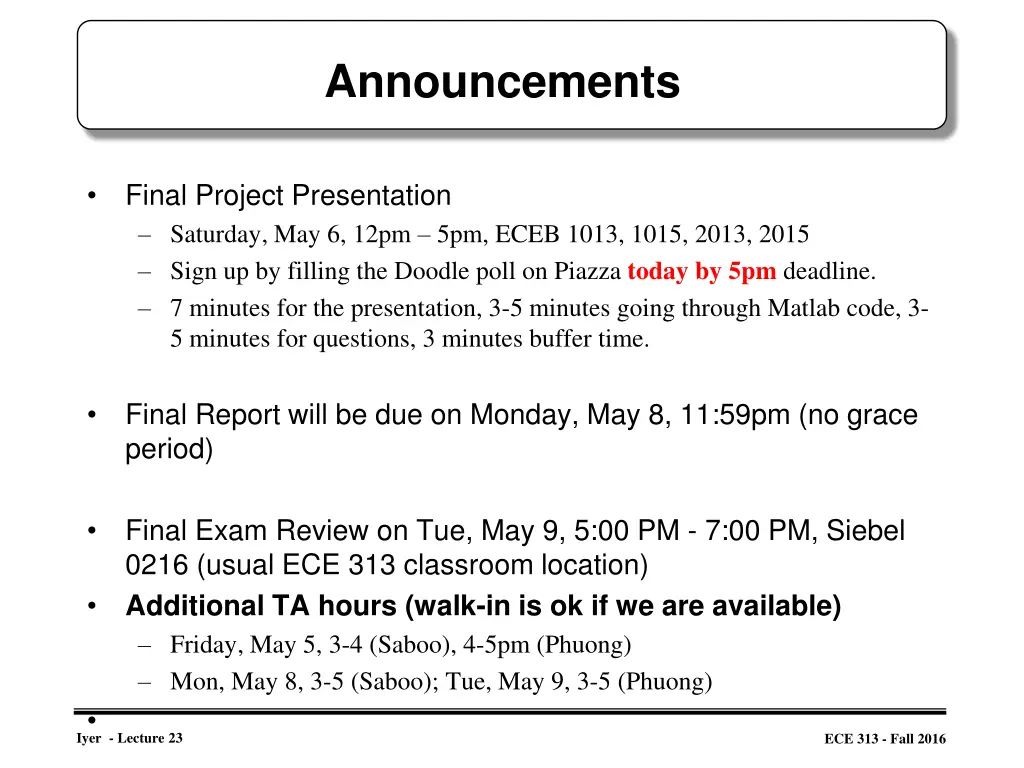

Announcements: The Final Project Presentation is on Saturday, May 6, 12pm-5pm, in ECEB 1013, 1015, 2013, and 2015. Sign up by filling out the Doodle poll on Piazza by today's 5pm deadline. Each slot has 7 minutes for the presentation, 3-5 minutes for going through the Matlab code, 3-5 minutes for questions, and 3 minutes of buffer time. The Final Report is due Monday, May 8, 11:59pm (no grace period). Final Exam Review: Tue, May 9, 5:00 PM - 7:00 PM, Siebel 0216 (the usual ECE 313 classroom). Additional TA hours (walk-ins are fine if we are available): Friday, May 5, 3-4pm (Saboo) and 4-5pm (Phuong); Mon, May 8, 3-5pm (Saboo); Tue, May 9, 3-5pm (Phuong).

Iyer - Lecture 23 ECE 313 - Fall 2016

Key concepts substantially learned post-midterm: Bernoulli; Poisson approximation to the binomial; exponential and related distributions (K-stage Erlang, hypoexponential, hyperexponential); normal; reliability function, instantaneous failure rate function, TMR; conditional probability; expected value, variance, covariance, and correlation; joint and conditional distributions; independent random variables; Central Limit Theorem; Law of large numbers (strong and weak); Markov and Chebyshev inequalities.

Solutions

Problem 1: The jointly continuous random variables X and Y have joint pdf:
[Figure: the line v = u in the (u, v) plane]

Problem 1 (cont.): The jointly continuous random variables X and Y have joint pdf as above. Find P[Y > 3X].
[Figure: the lines v = 3u and v = u in the (u, v) plane]

Problem 2

Problem 2 (cont.)

Problem 3: Suppose $n$ fair dice are independently rolled. Let

$$X_k = \begin{cases} 1, & \text{if an odd number shows on the } k\text{th die} \\ 0, & \text{otherwise} \end{cases} \qquad Y_k = \begin{cases} 1, & \text{if an even number shows on the } k\text{th die} \\ 0, & \text{otherwise} \end{cases}$$

Let $X = \sum_{k=1}^{n} X_k$, which is the number of odds showing, and $Y = \sum_{k=1}^{n} Y_k$, which is the number of evens showing. Find $\mathrm{Cov}(X_i, Y_j)$ if $1 \le i \le n$ and $1 \le j \le n$.

Problem 3 (cont.) Solution: To calculate $\mathrm{Cov}(X_i, Y_j)$, we only need to consider the case $i = j$, a single roll of the die. When $i \ne j$, $X_i$ and $Y_j$ come from two different rolls of the dice, which are independent of each other, and therefore their covariance is zero (see Lecture 26).

Problem 3 (cont.) For $i = j$, the joint pmf of $X_i$ and $Y_i$, $p(x_i, y_i)$, is:
p(0,0) = 0 (the probability that neither odd nor even shows on the roll of a die)
p(1,0) = 1/2 (odd shows up)
p(0,1) = 1/2 (even shows up)
p(1,1) = 0 (the probability that both odd and even show on one roll, which isn't possible)
So $E[X_iY_i] = 0$ because $X_iY_i$ is always zero, and $E[X_i] = E[Y_i] = 1/2$. Therefore

$$\mathrm{Cov}(X_i, Y_i) = E[X_iY_i] - E[X_i]E[Y_i] = 0 - \frac{1}{2}\cdot\frac{1}{2} = -\frac{1}{4}.$$
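The single-roll covariance can be double-checked by enumerating the six equally likely faces with exact fractions. This is a minimal sketch; the names `FACES`, `P_FACE`, and `single_roll_cov` are hypothetical helpers introduced only for illustration.

```python
from fractions import Fraction

FACES = range(1, 7)      # faces of one fair die
P_FACE = Fraction(1, 6)  # each face is equally likely

def single_roll_cov():
    """Cov(X_i, Y_i) for one roll: X_i = 1 on an odd face, Y_i = 1 on an even face."""
    e_x = sum(P_FACE * int(face % 2 == 1) for face in FACES)  # E[X_i]
    e_y = sum(P_FACE * int(face % 2 == 0) for face in FACES)  # E[Y_i]
    # X_i * Y_i is 0 on every face, so E[X_i Y_i] = 0
    e_xy = sum(P_FACE * int(face % 2 == 1) * int(face % 2 == 0) for face in FACES)
    return e_xy - e_x * e_y

print(single_roll_cov())  # -1/4
```

Using `Fraction` keeps the arithmetic exact, so the result can be compared directly with the hand calculation.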

Problem 4: Let P(D) be the probability you have Zika, and let P(T) be the probability of a positive test. We wish to know P(D|T). Bayes' theorem says

$$P(D \mid T) = \frac{P(T \mid D)P(D)}{P(T)} = \frac{P(T \mid D)P(D)}{P(T \mid D)P(D) + P(T \mid ND)P(ND)},$$

where P(ND) is the probability of not having Zika.

Problem 4 (cont.) We have P(D) = 0.0001 (the a priori probability that you have Zika), P(ND) = 0.9999, P(T|D) = 1 (if you have Zika, the test is always positive), and P(T|ND) = 0.01 (a 1% chance of a false positive). Plugging these numbers in, we get

$$P(D \mid T) = \frac{1 \cdot 0.0001}{1 \cdot 0.0001 + 0.01 \cdot 0.9999} \approx 0.01.$$

That is, even though the test was positive, your chance of having Zika is only about 1%, because your prior P(D) is very low. However, if you went to Florida recently, your starting P(D) is 0.005. In this case

$$P(D \mid T) = \frac{1 \cdot 0.005}{1 \cdot 0.005 + 0.01 \cdot 0.995} \approx 0.33,$$

and you should be a lot more worried.
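The two posterior calculations can be reproduced with a short function that applies Bayes' theorem, computing P(T) from the law of total probability. This is a sketch; `posterior` and its parameter names are hypothetical, and the inputs are the values given in the problem.

```python
def posterior(prior, p_pos_given_d, p_pos_given_nd):
    """P(D | T) via Bayes' theorem, with P(T) from the law of total probability."""
    p_t = p_pos_given_d * prior + p_pos_given_nd * (1 - prior)  # P(T)
    return p_pos_given_d * prior / p_t

print(round(posterior(0.0001, 1.0, 0.01), 4))  # 0.0099 (no recent travel)
print(round(posterior(0.005, 1.0, 0.01), 2))   # 0.33 (recent trip to Florida)
```

Only the prior changes between the two calls, which isolates how strongly the base rate drives the posterior.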

Review: Poisson Distribution. Let X be a discrete random variable representing the number of arrivals in an interval (0, t]. Assume λ is the arrival rate of jobs. In a small interval Δt, the probability of a new arrival is λΔt. If Δt is small enough, the probability of two arrivals in Δt may be neglected. Suppose the interval (0, t] is divided into n subintervals of length t/n, and suppose the arrival of a job in any subinterval is independent of the arrival of a job in any other subinterval. For large n, the n subintervals can be thought of as a sequence of Bernoulli trials with probability of success p = λt/n. Therefore, the probability of k arrivals in a total of n intervals is given by the binomial pmf

$$P(X = k) = \binom{n}{k}\left(\frac{\lambda t}{n}\right)^{k}\left(1 - \frac{\lambda t}{n}\right)^{n-k} \to e^{-\lambda t}\frac{(\lambda t)^{k}}{k!} \quad \text{as } n \to \infty.$$
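A quick numeric sketch of the limit: the binomial pmf with p = λt/n approaches the Poisson pmf as the number of subintervals n grows. The values λ = 2, t = 1, k = 3 are illustrative assumptions, not taken from the slides.

```python
from math import comb, exp, factorial

def binomial_pmf(k, n, p):
    """P(k successes in n Bernoulli(p) trials)."""
    return comb(n, k) * p**k * (1 - p) ** (n - k)

def poisson_pmf(k, lam_t):
    """P(k arrivals) for a Poisson random variable with mean lam_t."""
    return exp(-lam_t) * lam_t**k / factorial(k)

lam, t, k = 2.0, 1.0, 3  # illustrative values only
for n in (10, 100, 10_000):
    approx = binomial_pmf(k, n, lam * t / n)
    print(n, approx, poisson_pmf(k, lam * t))
```

The gap between the two columns shrinks as n increases, which is the Bernoulli-trials argument above in numerical form.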

Review: Expectations
Expectation, the discrete case: $E[X] = \sum_{x:\,p(x)>0} x\,p(x)$
The continuous case: $E[X] = \int_{-\infty}^{\infty} x\,f(x)\,dx$
Expectation of a function of a random variable: $E[g(X)] = \sum_{x:\,p(x)>0} g(x)\,p(x)$ (discrete) and $E[g(X)] = \int_{-\infty}^{\infty} g(x)\,f(x)\,dx$ (continuous)
Corollary: $E[aX + b] = aE[X] + b$
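The discrete formula for E[g(X)] translates directly into a sum over the pmf. This sketch uses a fair die as an assumed, illustrative pmf, and `expect` is a hypothetical helper; it also checks the corollary E[aX + b] = aE[X] + b.

```python
from fractions import Fraction

# pmf of a fair die (illustrative example, not from the slides)
pmf = {x: Fraction(1, 6) for x in range(1, 7)}

def expect(g, pmf):
    """E[g(X)] = sum of g(x) p(x) over the support of X."""
    return sum(g(x) * p for x, p in pmf.items() if p > 0)

e_x = expect(lambda x: x, pmf)            # E[X]
e_lin = expect(lambda x: 2 * x + 1, pmf)  # E[2X + 1]
assert e_lin == 2 * e_x + 1               # corollary: E[aX + b] = aE[X] + b
print(e_x, e_lin)  # 7/2 8
```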

Review: Moments
Moments: let $Y = X^n$, $n \ge 1$. Then

$$E[Y] = E[X^n] = \begin{cases} \sum_i x_i^n\,p(x_i), & \text{if } X \text{ is discrete} \\ \int_{-\infty}^{\infty} x^n f(x)\,dx, & \text{if } X \text{ is continuous} \end{cases}$$

Central moments: $m_k = E[(X - E[X])^k]$.
Variance:

$$\mathrm{Var}[X] = \begin{cases} \sum_i (x_i - E[X])^2\,p(x_i), & \text{if } X \text{ is discrete} \\ \int_{-\infty}^{\infty} (x - E[X])^2 f(x)\,dx, & \text{if } X \text{ is continuous} \end{cases}$$

$\mathrm{Var}(X) = E[X^2] - (E[X])^2$
Corollary: $\mathrm{Var}(aX + b) = a^2\,\mathrm{Var}(X)$
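The two variance formulas and the scaling corollary can be checked on a small pmf. The fair-die pmf and the constants a = 3, b = -4 are illustrative assumptions; `moment` and `central_moment` are hypothetical helper names.

```python
from fractions import Fraction

pmf = {x: Fraction(1, 6) for x in range(1, 7)}  # fair die, illustrative

def moment(pmf, k):
    """k-th moment E[X^k]."""
    return sum(x**k * p for x, p in pmf.items())

def central_moment(pmf, k):
    """k-th central moment m_k = E[(X - E[X])^k]."""
    mu = moment(pmf, 1)
    return sum((x - mu) ** k * p for x, p in pmf.items())

var_def = central_moment(pmf, 2)                 # definition: m_2
var_short = moment(pmf, 2) - moment(pmf, 1) ** 2  # shortcut: E[X^2] - (E[X])^2
assert var_def == var_short

# Var(aX + b) = a^2 Var(X): build the pmf of aX + b by mapping the support.
a, b = 3, -4
pmf_lin = {a * x + b: p for x, p in pmf.items()}
assert central_moment(pmf_lin, 2) == a**2 * var_def
print(var_def)  # 35/12
```

The shift b drops out because it moves E[X] by the same amount as every value of X.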

Joint distribution: The pmf p(x, y) of random variables X and Y is given by the following table.

          X = 0   X = 1   X = 2
Y = ?     0.2     0       0.3
Y = ?     0.1     0.2     0.2


Review: Covariance and Variance
The covariance of any two random variables X and Y, denoted by Cov(X, Y), is defined by

$$\begin{aligned} \mathrm{Cov}(X, Y) &= E[(X - E[X])(Y - E[Y])] \\ &= E[XY - YE[X] - XE[Y] + E[X]E[Y]] \\ &= E[XY] - E[X]E[Y] - E[X]E[Y] + E[X]E[Y] \\ &= E[XY] - E[X]E[Y]. \end{aligned}$$

If X and Y are independent, then it follows that Cov(X, Y) = 0.
For any random variables X, Y, Z and constant c, we have:
1. Cov(X, X) = Var(X),
2. Cov(X, Y) = Cov(Y, X),
3. Cov(cX, Y) = c Cov(X, Y),
4. Cov(X, Y + Z) = Cov(X, Y) + Cov(X, Z).
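The derivation says the definition and the shortcut E[XY] - E[X]E[Y] must agree. A minimal sketch checks this on joint pmfs stored as dictionaries {(x, y): p}; the two example pmfs (Y = X and Y = 7 - X for a fair die) are illustrative assumptions, and the function names are hypothetical.

```python
from fractions import Fraction

def cov_definition(joint):
    """Cov(X, Y) = E[(X - E[X])(Y - E[Y])] for a joint pmf {(x, y): p}."""
    ex = sum(x * p for (x, _), p in joint.items())
    ey = sum(y * p for (_, y), p in joint.items())
    return sum((x - ex) * (y - ey) * p for (x, y), p in joint.items())

def cov_shortcut(joint):
    """Cov(X, Y) = E[XY] - E[X]E[Y]."""
    ex = sum(x * p for (x, _), p in joint.items())
    ey = sum(y * p for (_, y), p in joint.items())
    exy = sum(x * y * p for (x, y), p in joint.items())
    return exy - ex * ey

sixth = Fraction(1, 6)
same = {(x, x): sixth for x in range(1, 7)}        # Y = X, so Cov(X, X) = Var(X)
mirror = {(x, 7 - x): sixth for x in range(1, 7)}  # Y = 7 - X
for joint in (same, mirror):
    assert cov_definition(joint) == cov_shortcut(joint)
print(cov_shortcut(same), cov_shortcut(mirror))  # 35/12 -35/12
```

The second case also illustrates property 3 with c = -1 together with the fact that adding a constant does not change covariance.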

Review: Covariance and Variance
Covariance and variance of sums of random variables:

$$\mathrm{Cov}\left(\sum_{i=1}^{n} X_i, \sum_{j=1}^{m} Y_j\right) = \sum_{i=1}^{n}\sum_{j=1}^{m} \mathrm{Cov}(X_i, Y_j)$$

A useful expression for the variance of a sum of random variables can be obtained from the preceding equation:

$$\mathrm{Var}\left(\sum_{i=1}^{n} X_i\right) = \sum_{i=1}^{n} \mathrm{Var}(X_i) + 2\sum_{i=1}^{n}\sum_{j<i} \mathrm{Cov}(X_i, X_j)$$

If $X_i$, $i = 1, \dots, n$, are independent random variables, then the above equation reduces to

$$\mathrm{Var}\left(\sum_{i=1}^{n} X_i\right) = \sum_{i=1}^{n} \mathrm{Var}(X_i).$$
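These sum formulas can be checked against the odd/even dice setting of Problem 3 by exact enumeration. With X the number of odd faces and Y the number of even faces among n dice, bilinearity predicts Cov(X, Y) = n(-1/4), and since X + Y = n is constant, Var(X) + Var(Y) + 2Cov(X, Y) must be 0. The choice n = 3 and the helper name `odd_even_stats` are illustrative assumptions.

```python
from fractions import Fraction
from itertools import product

def odd_even_stats(n):
    """Exact Var(X), Var(Y), Cov(X, Y) for n fair dice; X = #odd faces, Y = #even faces."""
    p = Fraction(1, 6**n)  # every outcome of n dice is equally likely
    ex = ey = exx = eyy = exy = Fraction(0)
    for rolls in product(range(1, 7), repeat=n):
        x = sum(r % 2 for r in rolls)  # number of odd faces
        y = n - x                      # number of even faces
        ex += x * p
        ey += y * p
        exx += x * x * p
        eyy += y * y * p
        exy += x * y * p
    return exx - ex**2, eyy - ey**2, exy - ex * ey

var_x, var_y, cov_xy = odd_even_stats(3)
# Bilinearity: Cov(sum X_i, sum Y_j) = sum_i sum_j Cov(X_i, Y_j) = n * (-1/4)
assert cov_xy == 3 * Fraction(-1, 4)
# X + Y = n is constant, so Var(X) + Var(Y) + 2 Cov(X, Y) = Var(X + Y) = 0
assert var_x + var_y + 2 * cov_xy == 0
```

Only the n diagonal terms Cov(X_i, Y_i) contribute to the double sum; the cross terms vanish because different rolls are independent.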

Covariance Example: Three balls numbered one through three are in a bag. Two balls are drawn at random, without replacement, with all possible outcomes having equal probability. Let X be the number on the first ball drawn and Y be the number on the second ball drawn. (a) Are X and Y independent? (b) Find E[X]. (c) Find Var(X). (d) Find E[XY]. (e) Find the correlation coefficient, $\rho_{X,Y}$.

Covariance Example (Solution)
(a) We find the marginal pmfs of X and Y and their joint pmf. When picking the first ball, we have 3 possibilities {1, 2, 3}, so X is uniformly distributed with P(X = x) = 1/3. For the second ball, the probability of each number depends on which number was on the first ball, so we use the law of total probability:

$$P(Y = k) = P(Y = k \mid X = k)P(X = k) + P(Y = k \mid X \ne k)P(X \ne k).$$

The probability of seeing a number on the second ball that was already seen on the first ball, $P(Y = k \mid X = k)$, is zero. The probability of seeing a number on the second ball that was not seen on the first ball, $P(Y = k \mid X \ne k)$, is 1/2. So for the pmf of Y we have:

$$P(Y = k) = 0 \cdot \frac{1}{3} + \frac{1}{2} \cdot \frac{2}{3} = \frac{1}{3}.$$

Covariance Example (Solution): For the joint pmf of X and Y, we list all possible values the pair (X, Y) can take:

$$\{(x, y) : 1 \le x, y \le 3,\ x \ne y\} = \{(1,2), (1,3), (2,1), (2,3), (3,1), (3,2)\}$$

There are a total of 3 × 2 = 6 possibilities, each equally likely, so the joint pmf is P(X = x, Y = y) = 1/6 for each of these pairs. Since P(X = x, Y = y) = 1/6 ≠ P(X = x)P(Y = y) = 1/9, X and Y are not independent.

Covariance Example (Solution)
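Parts (b) through (e) can be checked by enumerating the six equally likely ordered draws with exact fractions. This is a sketch under the problem's assumptions (draws without replacement, all outcomes equally likely); the variable names are illustrative.

```python
from fractions import Fraction
from itertools import permutations
from math import sqrt

# All ordered draws (first, second) of two of the balls {1, 2, 3} without replacement.
outcomes = list(permutations([1, 2, 3], 2))
p = Fraction(1, len(outcomes))  # each of the 6 outcomes has probability 1/6

e_x = sum(x * p for x, _ in outcomes)                      # (b) E[X]
e_y = sum(y * p for _, y in outcomes)
var_x = sum((x - e_x) ** 2 * p for x, _ in outcomes)       # (c) Var(X)
var_y = sum((y - e_y) ** 2 * p for _, y in outcomes)
e_xy = sum(x * y * p for x, y in outcomes)                 # (d) E[XY]
cov = e_xy - e_x * e_y
rho = cov / sqrt(var_x * var_y)                            # (e) float, since sqrt is involved

print(e_x, var_x, e_xy, cov)  # 2 2/3 11/3 -1/3
print(round(rho, 6))          # -0.5
```

The negative correlation matches the intuition that drawing a large number first leaves smaller numbers for the second draw.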

Covariance Problem 1: Suppose $n$ fair dice are independently rolled. Let

$$X_k = \begin{cases} 1, & \text{if an odd number shows on the } k\text{th die} \\ 0, & \text{otherwise} \end{cases} \qquad Y_k = \begin{cases} 1, & \text{if an even number shows on the } k\text{th die} \\ 0, & \text{otherwise} \end{cases}$$

Let $X = \sum_{k=1}^{n} X_k$, which is the number of odds showing, and $Y = \sum_{k=1}^{n} Y_k$, which is the number of evens showing. Find $\mathrm{Cov}(X_i, Y_j)$ if $1 \le i \le n$ and $1 \le j \le n$.

Covariance Problem 1 (Cont.) Solution: To calculate $\mathrm{Cov}(X_i, Y_j)$, we only need to consider the case $i = j$, a single roll of the die. When $i \ne j$, $X_i$ and $Y_j$ come from two different rolls of the dice, which are independent of each other, and therefore their covariance is zero.

Covariance Problem 1 (Cont.) For $i = j$, the joint pmf of $X_i$ and $Y_i$, $p(x_i, y_i)$, is:
p(0,0) = 0 (the probability that neither odd nor even shows on the roll of a die)
p(1,0) = 1/2 (odd shows up)
p(0,1) = 1/2 (even shows up)
p(1,1) = 0 (the probability that both odd and even show on one roll, which isn't possible)
So $E[X_iY_i] = 0$ because $X_iY_i$ is always zero, and $E[X_i] = E[Y_i] = 1/2$. Therefore

$$\mathrm{Cov}(X_i, Y_i) = E[X_iY_i] - E[X_i]E[Y_i] = 0 - \frac{1}{2}\cdot\frac{1}{2} = -\frac{1}{4}.$$

Review: Limit Theorems
Markov's Inequality: If X is a random variable that takes only nonnegative values, then for any value a > 0,

$$P\{X \ge a\} \le \frac{E[X]}{a}.$$

Chebyshev's Inequality: If X is a random variable with mean μ and variance σ², then for any value k > 0,

$$P\{|X - \mu| \ge k\} \le \frac{\sigma^2}{k^2}.$$

Strong law of large numbers: Let $X_1, X_2, \dots$ be a sequence of independent random variables having a common distribution, and let $E[X_i] = \mu$. Then, with probability 1,

$$\frac{X_1 + X_2 + \cdots + X_n}{n} \to \mu \quad \text{as } n \to \infty.$$
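Both inequalities can be checked exactly on a small nonnegative distribution. This sketch assumes a fair die (mean 7/2, variance 35/12) as the illustrative example and a hypothetical `prob` helper; the thresholds a and k are arbitrary choices.

```python
from fractions import Fraction

pmf = {x: Fraction(1, 6) for x in range(1, 7)}  # fair die: nonnegative values
mu = sum(x * p for x, p in pmf.items())                 # E[X] = 7/2
var = sum((x - mu) ** 2 * p for x, p in pmf.items())    # sigma^2 = 35/12

def prob(event):
    """P(event) for a predicate on the value of X."""
    return sum(p for x, p in pmf.items() if event(x))

for a in (4, 5, 6):
    # Markov: P{X >= a} <= E[X] / a
    assert prob(lambda x: x >= a) <= mu / a

for k in (Fraction(3, 2), Fraction(5, 2)):
    # Chebyshev: P{|X - mu| >= k} <= sigma^2 / k^2
    assert prob(lambda x: abs(x - mu) >= k) <= var / k**2
```

The exact probabilities sit well below the bounds, which is expected: both inequalities use only the mean (and variance), not the full distribution.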

Review: Limit Theorems
Central Limit Theorem: Let $X_1, X_2, \dots$ be a sequence of independent, identically distributed random variables, each with mean μ and variance σ². Then the distribution of

$$\frac{X_1 + X_2 + \cdots + X_n - n\mu}{\sigma\sqrt{n}}$$

tends to the standard normal as $n \to \infty$. That is,

$$P\left\{\frac{X_1 + X_2 + \cdots + X_n - n\mu}{\sigma\sqrt{n}} \le a\right\} \to \frac{1}{\sqrt{2\pi}}\int_{-\infty}^{a} e^{-x^2/2}\,dx \quad \text{as } n \to \infty.$$

Note that, like the other results, this theorem holds for any distribution of the $X_i$'s; herein lies its power.
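A small Monte Carlo sketch illustrates the theorem with a non-normal distribution: standardized sums of Uniform(0, 1) variables (mean 1/2, variance 1/12) are compared with the standard normal CDF. The choices n = 30, 20,000 trials, a = 1, the seed, and the helper names are illustrative assumptions.

```python
import math
import random

def standardized_sum(n, rng):
    """(X_1 + ... + X_n - n*mu) / (sigma*sqrt(n)) for X_i ~ Uniform(0, 1)."""
    mu, sigma = 0.5, math.sqrt(1 / 12)
    s = sum(rng.random() for _ in range(n))
    return (s - n * mu) / (sigma * math.sqrt(n))

def phi(a):
    """Standard normal CDF, written with the error function."""
    return 0.5 * (1 + math.erf(a / math.sqrt(2)))

rng = random.Random(313)  # fixed seed so the run is reproducible
n, trials, a = 30, 20_000, 1.0
hits = sum(standardized_sum(n, rng) <= a for _ in range(trials))
print(hits / trials, phi(a))  # empirical probability vs. limiting value
```

The empirical fraction should land close to Φ(1) ≈ 0.841; the remaining gap reflects both simulation noise and finite n.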

Piazza Question on Markov Inequality: The Markov inequality bounds P(X ≥ a) by E[X]/a. When a ≤ E[X], the bound is at least 1 and says nothing useful; as a increases beyond E[X], the quotient E[X]/a becomes smaller. This means that the probability of X being very, very large is small. Please note that the Markov inequality holds for any non-negative random variable, whatever its distribution, and one of its main uses is to prove Chebyshev's inequality.

Example: Chebyshev Inequality
The mean and standard deviation of the response time in a multi-user computer system are known to be 15 seconds and 3 seconds, respectively. Estimate the probability that the response time is more than 5 seconds from the mean.
Applying the Chebyshev inequality with μ = 15, σ = 3, and a = 5:

$$P\{|X - 15| \ge 5\} \le \frac{\sigma^2}{a^2} = \frac{9}{25} = 0.36.$$
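The bound is a one-line computation. This sketch uses a hypothetical `chebyshev_bound` helper that caps the result at 1, since a probability bound above 1 carries no information.

```python
def chebyshev_bound(sigma, a):
    """Upper bound on P(|X - mu| >= a) from Chebyshev's inequality, capped at 1."""
    return min(1.0, sigma**2 / a**2)

print(chebyshev_bound(3, 5))  # 0.36
```

Because only σ and a enter, the same bound applies whatever the actual response-time distribution is.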

Instantaneous Failure Rate
Let $f(t) = \frac{3}{2}\sqrt{t}$ for $0 \le t \le 1$. Find the instantaneous failure rate.
Solution:

$$F(t) = \int_0^t \frac{3}{2}\sqrt{x}\,dx = t^{3/2}, \qquad R(t) = 1 - F(t) = 1 - t^{3/2},$$

$$h(t) = \lim_{\Delta t \to 0}\frac{F(t + \Delta t) - F(t)}{\Delta t\,R(t)} = \frac{f(t)}{R(t)} = \frac{1.5\sqrt{t}}{1 - t^{3/2}}.$$
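A numerical sketch compares the closed form h(t) = f(t)/R(t) with a finite-difference version of the limit definition. The step size dt = 1e-7 and the sample times are illustrative assumptions; the function names follow the slide's notation.

```python
import math

def f(t):
    return 1.5 * math.sqrt(t)  # pdf on [0, 1]

def F(t):
    return t**1.5              # CDF: integral of f from 0 to t

def R(t):
    return 1 - F(t)            # reliability R(t) = 1 - F(t)

def h(t):
    return f(t) / R(t)         # instantaneous failure rate h(t) = f(t)/R(t)

def h_limit(t, dt=1e-7):
    """Finite-difference approximation of (F(t + dt) - F(t)) / (dt * R(t))."""
    return (F(t + dt) - F(t)) / (dt * R(t))

for t in (0.25, 0.5, 0.9):
    print(t, h(t), h_limit(t))
```

The failure rate grows without bound as t approaches 1, because R(t) goes to 0 while f(t) stays near 1.5.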

Example - Joint Distribution Functions: The jointly continuous random variables X and Y have joint pdf:
[Figure: the line v = u in the (u, v) plane]

Example - Joint Distribution Functions (cont.): The jointly continuous random variables X and Y have joint pdf as above. Find P[Y > 3X].
[Figure: the lines v = 3u and v = u in the (u, v) plane]
