Language Testing History & Development

An overview of historical and recent developments in language testing, from the pre-scientific stage to communicative methods, covering the different types of tests and the stages of language assessment.


Presentation Transcript

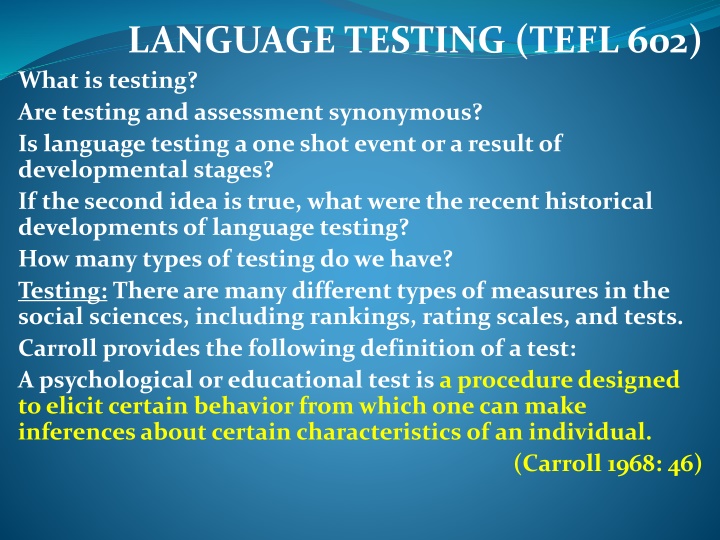

LANGUAGE TESTING (TEFL 602)

What is testing? Are testing and assessment synonymous? Is language testing a one-shot event or the result of developmental stages? If the latter, what have been the recent historical developments in language testing? How many types of testing are there?

Testing: There are many different types of measures in the social sciences, including rankings, rating scales, and tests. Carroll provides the following definition of a test: "A psychological or educational test is a procedure designed to elicit certain behavior from which one can make inferences about certain characteristics of an individual" (Carroll 1968: 46).

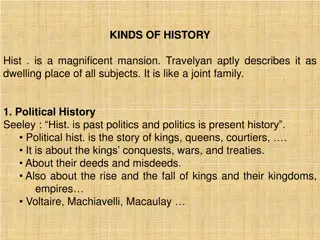

A test is a measurement instrument designed to elicit a specific sample of an individual's behavior. As one type of measurement, a test necessarily quantifies characteristics of individuals according to explicitly designed procedures. What distinguishes a test from other types of measurement is that it is designed to obtain a specific sample of behavior.

Historical stages of language testing
According to Spolsky (1975), there are three stages in the recent history of language testing: the pre-scientific, the psychometric-structuralist and the psycholinguistic-sociolinguistic.

Morrow (1979) characterized these stages as the Garden of Eden, the Vale of Tears and the Promised Land. Heaton (1990), however, divides them into four: the essay-translation, the structuralist, the integrative and the communicative stage. Different tests, or even different parts of a given test, may fall into one or another of these stages. The stages may also be viewed as running parallel to teaching methods: the pre-scientific stage with grammar translation, the psychometric-structuralist stage with the audiolingual method, and the psycholinguistic-sociolinguistic stage with recent communicative language teaching methods.

1. The essay-translation stage
This is the pre-scientific stage of language testing. No special skill or expertise in testing was required, and the subjective judgment of the teacher was important. Tests consisted of essay writing, translation and grammatical analysis (often in the form of comments about the language being learnt).

Tests had a heavy literary and cultural bias. Public examinations resulting from this stage, such as the ESLCE, sometimes had an aural/oral component at the intermediate and advanced levels, but as an addition rather than an integral part of the syllabus or the examination.

2. The structuralist stage
This stage views language learning as the acquisition of a set of habits. Drawing on structural linguistics, it identifies and measures learners' mastery of separate elements of the target language, such as phonology, vocabulary and grammar. Such mastery is tested using words and sentences completely divorced from any context.

The skills of listening, speaking, reading and writing are also separated from one another, since the approach holds that only one thing should be tested at a time. This structuralist approach concentrates on testees' separate abilities, for example testing composition writing independently of reading. Statistical measures of reliability and validity are legacies of this stage, a result of the popularity of multiple-choice items.

3. The integrative stage
This stage primarily involves testing language in context and is concerned with meaning. It does not seek to separate language skills into neat divisions to improve test reliability; instead, integrative tests are designed to assess learners' ability to use two or more skills simultaneously.

Thus, integrative tests are concerned with a global view of proficiency, i.e. an underlying language competence or "grammar of expectancy", which every learner is argued to possess regardless of the functional language or the purpose for which the language is being learnt. Integrative testing involves "functional language" but not the use of functional language. This type of testing is best characterized by the use of cloze tests and dictation. Oral interviews, translation and essay writing are also included in many integrative tests, a point that may be overlooked by those who take a narrow view of integrative testing.

The principle of cloze testing is based on the Gestalt theory of closure, i.e. closing gaps in patterns subconsciously: the ability to decode interrupted or mutilated messages by making the most acceptable substitutions from all the contextual clues available. Every nth word in a text is deleted (usually every 5th, 6th or 7th word). The text of a cloze test should be long enough to allow a reasonable number of deletions (about 40 to 50 blanks); the more blanks, the more reliable the cloze test. Cloze tests may be scored either by awarding marks for any acceptable answer (any reasonable equivalent) or by awarding marks for the exact original word only. Three types of knowledge are needed to perform cloze tests: A) linguistic knowledge, i.e. knowledge about the language; B) textual knowledge, i.e. knowledge about the text; C) knowledge of the world, i.e. global knowledge. Cloze tests correlate highly with listening, writing and speaking abilities.
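The two scoring methods just described can be contrasted in a few lines of Python. This is a minimal sketch rather than a standard marking procedure: the function name `score_cloze` and the optional `acceptable` dictionary (blank index mapped to extra words the markers have agreed to accept) are illustrative assumptions.

```python
def score_cloze(responses, answer_key, acceptable=None):
    """Return (exact_score, acceptable_score) for one student's cloze answers.

    responses  -- the student's words, one per blank, in order
    answer_key -- the originally deleted words, one per blank
    acceptable -- hypothetical dict: blank index -> set of extra words the
                  markers agreed to accept as reasonable equivalents
    """
    acceptable = acceptable or {}
    exact = 0
    accept = 0
    for i, (given, original) in enumerate(zip(responses, answer_key)):
        word = given.strip().lower()
        if word == original.lower():
            exact += 1
            accept += 1  # the original word is always acceptable
        elif word in {w.lower() for w in acceptable.get(i, set())}:
            accept += 1  # credited only under the acceptable-word method
    return exact, accept
```

With the key `["went", "big", "quickly"]` and a student who writes `["went", "large", "fast"]`, where only "large" (and "huge") are listed as acceptable for the second blank and "rapidly" for the third, the exact-word score is 1 and the acceptable-word score is 2, which shows why the acceptable-word method is the more lenient of the two.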

Provide a lead-in for a cloze test, i.e. make no deletions in the first few sentences, so that students can become familiar with the text. Integrative tests use cloze tests, dictation, oral interviews, composition writing and translation. Cloze tests may be used for achievement, proficiency, diagnostic and placement purposes. Dictation is another type of integrative test. It measures students' listening comprehension skills (auditory discrimination, auditory memory, spelling, recognition of sound segments, and grammatical and lexical patterns of the language). N.B. Please take time to review the three other types of integrative tests: oral interviews, composition writing and translation.
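The fixed-ratio deletion procedure, together with an untouched lead-in, can be sketched as follows. Assumptions: the text is split on whitespace, the lead-in is counted in words (`lead_in_words`) rather than in sentences, and the defaults of every 7th word and a 25-word lead-in are illustrative choices; `make_cloze` is a hypothetical helper name.

```python
def make_cloze(text, n=7, lead_in_words=25):
    """Build a fixed-ratio cloze passage by deleting every nth word.

    The first `lead_in_words` words are left intact as a lead-in. Returns the
    gapped text and the list of deleted words (the exact-word answer key).
    """
    words = text.split()
    gapped, answers = [], []
    for i, token in enumerate(words):
        position = i - lead_in_words + 1  # 1-based count after the lead-in
        core = token.rstrip(".,;:!?")     # keep punctuation outside the blank
        if position > 0 and position % n == 0 and core:
            answers.append(core)
            gapped.append(f"({len(answers)}) ______{token[len(core):]}")
        else:
            gapped.append(token)
    return " ".join(gapped), answers
```

Because deletions begin only after the lead-in, a passage aiming at the 40 to 50 blanks recommended above would need roughly 280 to 350 words after the lead-in when n = 7.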

4. The communicative stage
Communicative language testing emphasizes the meaning of utterances rather than their form and structure. Although there is a link between communicative and integrative tests, communicative tests are primarily concerned with how language is used in communication, and they are mostly associated with real-life tasks. Success in such a test is judged by the learner's effectiveness in communication rather than by command of formal language. Language use is emphasized to the exclusion of language usage (knowledge of the formal patterns of language). The attempt to measure students' different performance profiles, i.e. their separate language skills, by means of communicative tests is associated with the divisibility hypothesis, because such tests report students' different proficiencies (listening, reading, speaking and writing) rather than simply one overall measure.

Types of Language Tests
The need to assess the outcomes of learning has led to the development and elaboration of different test formats. Testing language has traditionally taken the form of testing knowledge about language, usually knowledge of vocabulary and grammar. Stern (1983, p. 340) notes that "if the ultimate objective of language teaching is effective language learning, then our main concern must be the learning outcome": learning the language, as opposed to learning about the language.

In the same line of thought, Wigglesworth (2008) adds that in the assessment of languages, tasks are designed to measure learners' productive language skills through performances that allow candidates to demonstrate the kinds of language skills that may be required in a real-world context. This is because a specific purpose language test is one in which test content and methods are derived from an analysis of the specific purposes of the target language use situation, so that test tasks and content are authentically representative of tasks in the target situation (Douglas, 2000, p. 19).

In short, as Douglas Brown put it, specific purpose tests base their content and methods on identifying and analyzing the specific purposes of the situations in which the target language is used, i.e. test tasks and content should be authentic so that they reflect and match tasks in real-life target-language situations. Authenticity here refers to correspondence with the purposes of real-life situations. Thus, the issue of authenticity is central to the assessment of language for specific purposes.

This is another way of saying that testing is a socially situated activity, although its social aspects have been relatively under-explored (Wigglesworth, 2008). Language tests also differ with respect to how they are designed and what they are for; in other words, tests differ in test method and test purpose. In terms of method, we can broadly distinguish traditional paper-and-pencil language tests from performance tests. Paper-and-pencil language tests are typically used to assess either separate components of language knowledge (grammar, vocabulary, etc.) or receptive understanding (listening and reading comprehension).

In performance-based tests, language skills are assessed in an act of communication. Performance tests are most commonly tests of speaking and writing: for instance, asking a language learner to introduce himself or herself formally or informally, or to write a composition, a paragraph or an essay. A performance test is a test in which the ability of candidates to perform particular tasks, usually associated with job or study requirements, is assessed (Davies et al.), i.e. we test students' performance in the target language as required in real-life situations.

In terms of purpose, several types of language tests have been devised to measure learning outcomes, each with its own specific purpose, properties and criterion to be measured. The test types dealt with in this part are not laid out in order of importance, since they are all equally important, but in alphabetical order. Dictation, the traditional testing device which focuses much more on discrete language items, will nevertheless receive its fair share of attention in terms of its pros and cons.

Richards et al. (1985) define a criterion-referenced test (CRT) as "a test which measures a student's performance according to a particular standard or criterion which has been agreed upon. The student must reach this level of performance to pass the test, and a student's score is therefore interpreted with reference to the criterion score, rather than to the scores of the other students". This definition is very different from their definition of a norm-referenced test (NRT), which they describe as "a test which is designed to measure how the performance of a particular student or group of students compares with the performance of another student or group of students whose scores are given as the norm. A student's score is therefore interpreted with reference to the scores of other students or groups of students, rather than to an agreed criterion score".
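The difference between the two interpretations can be made concrete with a small sketch. The cut score of 70 and the two norm groups used below are hypothetical, and the percentile rank is computed in one simple way (the percentage of the norm group scoring strictly below the student); other conventions, such as counting half of the tied scores, also exist.

```python
def criterion_referenced(score, cut_score):
    """CRT interpretation: compare the score with an agreed criterion only."""
    return "pass" if score >= cut_score else "fail"


def norm_referenced(score, norm_group):
    """NRT interpretation: percentile rank of `score` within a norm group,
    taken here as the percentage of norm-group scores strictly below it."""
    if not norm_group:
        raise ValueError("norm group must not be empty")
    below = sum(1 for s in norm_group if s < score)
    return 100.0 * below / len(norm_group)
```

A score of 65 fails against a cut score of 70 however the other candidates perform, yet `norm_referenced(65, [40, 50, 60, 70, 80])` places it at a percentile rank of 60 and `norm_referenced(65, [30, 35, 40, 45])` at 100: the same raw score, two very different norm-referenced readings.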

1. Achievement Test
An achievement test, also referred to as an attainment or summative test, is devised to measure how much of a language someone has learned with reference to a particular course of study or programme of instruction, e.g. end-of-year tests designed to show mastery of a language. An achievement test might be a listening comprehension test based on a particular set of situational dialogues in a textbook. The test has a two-fold objective: 1) to help teachers judge the success of their teaching, and 2) to identify the weaknesses of their learners. In more practical and pedagogical terms, Brown (1994, p. 259) defines achievement tests as tests that are "limited to particular material covered in a curriculum within a particular time frame". In other words, they are designed primarily to measure individual progress rather than as a means of motivating or reinforcing language. Ideally, achievement tests are rarely constructed by classroom teachers for a particular class.

Achievement tests are formal tests given to learners to check how well they have learnt, i.e. whether they have achieved the course objectives. Their content depends on the syllabus or the textbook. Some scholars (e.g. Hughes, 1989) divide achievement tests into progress tests and final achievement tests. Progress tests are given at various stages throughout a language course to see what the students have learnt, whereas final achievement tests are given at the end of the course for the same purpose.

2. Diagnostic Tests
Diagnostic tests are used to identify students' strengths and weaknesses. They are administered during the teaching-learning process.

3. Placement Tests
Placement tests, as their name suggests, are administered to provide information that will help to place students at the stage most appropriate to their abilities. Typically, they are used to assign students to classes at different levels.

A placement test is designed to place learners at an appropriate level in a programme or course. As Richards et al. (1989) note, the term placement test does not refer to what a test contains or how it is constructed, but to the purpose for which it is used. Various types of testing procedures, such as dictation, an interview or a grammar test (discrete or integrative), can be used for placement purposes. The English Placement Test (EPT) is a well-known test in America. The EPT is designed to assess the level of reading and writing skills of entering undergraduate students so that they can be placed in appropriate courses. Undergraduate students who do not demonstrate college- or university-level skills are directed to remedial courses or programmes to help them attain these skills.
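Since a placement test is defined by its purpose rather than its content, the placement step itself is just a mapping from scores to levels. The sketch below assumes hypothetical cut scores (40, 60 and 80 out of 100) and hypothetical level names; a real programme would set its own bands. It uses the standard-library `bisect` module.

```python
from bisect import bisect_right

# Hypothetical cut scores (out of 100) and level names, for illustration only.
CUT_SCORES = [40, 60, 80]
LEVELS = ["Beginner", "Elementary", "Intermediate", "Advanced"]


def place(score, cuts=CUT_SCORES, levels=LEVELS):
    """Return the level whose band contains `score`.

    bisect_right counts how many cut scores are <= score, so a score equal
    to a cut score moves the student up into the higher band.
    """
    if len(levels) != len(cuts) + 1:
        raise ValueError("need exactly one more level than cut scores")
    return levels[bisect_right(cuts, score)]
```

In an EPT-style setting, the lowest band would correspond to the students directed to remedial courses.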

4. Proficiency Test
A proficiency test is devised to measure how much of a language someone has learned. It is not linked to any particular course of instruction, but measures the learner's general level of language mastery. Most English language proficiency tests base their items on high-frequency vocabulary and general basic grammar. Some proficiency tests have been standardized for worldwide use, such as the well-known American tests, the TOEFL and the English Language Proficiency Test (ELPT), which are used to measure the English language proficiency of foreign students intending to pursue further study at English-speaking institutions in the USA.

The English Language Proficiency Test (ELPT) assesses both the understanding of spoken and written standard American English and the ability to function in a classroom where English is spoken. Generally, the aim of a proficiency test is to determine whether the learner's language ability corresponds to specific language requirements (Valette, 1977, p. 6).

TOEFL
The Test of English as a Foreign Language, or TOEFL for short, is a large-scale language assessment. It is arguably the most well-known and widely used large-scale language test of English proficiency in the world (Kunnan, 2008, p. 140). It was first developed in 1963 in the United States to help assess the language competence of non-native speakers. As a test type, it is a standardized test administered by the Educational Testing Service, Princeton. It is widely used to measure the English-language proficiency of foreign students wishing to enter American colleges and universities.

Over the years, the TOEFL has become mandatory for applicants from outside the United States and Canada who are not native speakers of English and who apply to undergraduate and graduate programs at U.S. and Canadian English-medium universities. The International English Language Testing System, IELTS, is designed to assess the language ability of candidates who wish to study or work in countries where English is the language of communication. IELTS is required for admission to British universities and colleges. It is also recognized by universities and employers in Australia, Canada, and the USA.

5. Language Aptitude Test
Before defining a language aptitude test, it is wise to start by defining language aptitude itself. Language aptitude, a hybrid linguistic and psychological concept, refers to the innate ability to learn a language with which one is endowed. It is thought to be a combination of several abilities: phonological ability, i.e. the ability to detect phonetic differences (e.g. of stress, intonation, vowel quality) in a new language; syntactic ability, i.e. the ability to recognize the different grammatical functions of words in sentences; and psychological ability, i.e. rote-learning ability and the ability to make inferences and learn inductively.

Crystal (1989, p. 371) suggests other variables conducive to successful language learning, such as "empathy and adaptability, assertiveness and independence with good drive and powers of application". A person with high language aptitude can learn more quickly and easily than one with low language aptitude; this assumption is axiomatic in language aptitude testing. A language aptitude test measures a learner's aptitude for language learning, be it a second or a foreign language, i.e. the student's likely performance in the language. Thus, it is used to identify the learners who are most likely to succeed. Language aptitude tests usually consist of several different test items which measure such abilities as: sound-coding ability, i.e. the ability to identify and remember new sounds in a new language; grammar-coding ability, i.e. the ability to identify the grammatical functions of different parts of sentences; inductive-learning ability, i.e. the ability to work out meanings without explanation in the new language; and memorization, i.e. the ability to remember and recall words, patterns and rules in the new language.

Some English aptitude tests require students to perform such tasks as learning numbers, listening, detecting spelling clues and grammatical patterns, and memorizing (Brown, 1994).

6. Discrete-Point Test
The discrete-point test, also called the discrete-item test, is a language test which measures knowledge of individual language items, such as a grammar test with different sections on tenses, adverbs and prepositions. Discrete-point tests are based on the theory that language consists of different parts, such as speech sounds, grammar and vocabulary, and different skills, such as listening, speaking, reading and writing, and that these are made up of elements that can be tested separately.

Tests consisting of multiple-choice questions are usually regarded as discrete-point tests. Discrete-point tests are all too often contrasted with what are called integrative tests. An integrative test is one which requires a learner to use several skills at the same time. Essay writing is an integrative test because it leans heavily on knowledge of grammar, vocabulary and rules of discourse; dictation is also an integrative test, as it requires knowledge of grammar and vocabulary as well as listening comprehension skills. In this vein, Harmer notes the following distinction between discrete-point and integrative testing: "Whereas discrete-point testing only tests one thing at a time, such as asking students to choose the correct tense of a verb, integrative test items expect students to use a variety of language at any one given time, as they will have to do when writing a composition or doing a conversational oral test" (Harmer, 2001, p. 323).

In the same line of thought, Broughton et al., more than thirty years ago, noted that "since language is seen as a number of systems, there will be items to test knowledge of both the production and reception of the sound segment system, of the stress system, the intonation system, and morphemic system, the grammatical system, the lexical system and so on" (Broughton et al., 1980, pp. 149-150).

Characteristics of a Good Test
There are some general requirements that should be considered when constructing effective tests: the test constructors' familiarity with the material to be tested; awareness of the examinees' level, range of understanding and ability; skill in expressing information and instructions clearly and concisely; mastery of item-writing techniques; allowing the necessary time for test construction; and avoiding vague (highly abstruse) concepts and highly technical jargon.

Beyond these requirements, validity, reliability, practicality and comprehensiveness are the criteria used to measure the quality of language tests.
1) Validity: the extent to which a test measures what it is supposed to measure and nothing else (Heaton, 1990). Types of validity under this criterion include face validity, content validity, construct validity, empirical validity, and concurrent and predictive validity (which Hughes, 1989, calls criterion-related validity).
2) Reliability: for a test to be valid, it must first be reliable as an instrument, so reliability is a necessary characteristic of any good test. The concept refers to the ability of a test to generate similar results on different occasions and across different scorings.

In other words, reliability refers to the dependability or consistency of a test given to the same students at different times without additional input, or given to different groups at the same time.
3) Practicality refers to whether the test is appropriate and free from time, financial, administrative, scoring and interpretation constraints.
4) Comprehensiveness is the completeness of the content in a given test.

TEST FORMATS
Tests differ in their method of scoring and can be divided into two kinds. 1) Objective tests: tests that require no judgment from the scorer. In these formats, students select the correct response from the options provided; true-false, multiple-choice and matching tests are of this format. These formats have high reliability. 2) Subjective tests: tests in which students are required to supply answers themselves. This format requires judgment from the scorer.
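Because objective formats need no scorer judgment, scoring reduces to comparing each response with a key, which is why any two scorers reach the same total. A minimal sketch, assuming one mark per item and responses recorded as option letters (or T/F); `score_objective` is a hypothetical name.

```python
def score_objective(responses, key):
    """Score selected-response items (true-false, multiple choice, matching).

    A response earns one mark only if it matches the key exactly, so the
    total does not depend on who does the scoring.
    """
    if len(responses) != len(key):
        raise ValueError("one response per item is required")
    return sum(1 for given, correct in zip(responses, key) if given == correct)
```

For example, responses `["B", "T", "C", "F"]` against the key `["B", "T", "A", "F"]` earn 3 marks. A subjective item such as an essay has no single key to compare against, which is where rater judgment (and rater reliability) comes in.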

Individual assignment
1. Language tests and gender/fairness in language testing
2. Item analysis = Endalk Mulatie
3. Washback effect and authenticity
4. Alternative assessment = Yohannes Beyene
5. Continuous assessment
6. Assessment models
Characteristics of good tests
7. Validity = Getinet Girmay
8. Reliability =
9. Practicality and comprehensiveness = Getinet Girmay
10. Approaches to good testing
11. Standardized and teacher-made/non-standardized tests
12. Scoring, grading and student evaluation
13. Test construction and types of tests
14. Assessing listening = Mekonnen Atnafu
15. Assessing speaking =
16. Assessing reading = Adane Kindie
17. Assessing writing =
18. Objective type tests = Addis Ezezew
19. Subjective tests
20. Principles or evaluation criteria of good and bad tests
21. Assessment vs. testing
22. Teaching, learning and assessment
23. Communicative testing
Date of submission: To be informed later

A test is a method of measuring an individual's ability, knowledge or performance in a given domain (Brown, D).

Characteristics of a good test
1. Reliability refers to the consistency, dependability or repeatability of measurement, i.e. a test gives the same result whenever it is used under the same conditions. A measure can be reliable without being valid. Factors affecting the reliability of a test are: a) examinee-specific factors (concentration, fatigue, memory lapses, recklessness); b) test-specific factors (the questions set, ambiguity, lack of clarity in instructions, tricky items); c) scoring-specific factors (lack of uniformity in scoring, computation or counting errors, carelessness in scoring).

Reliability is a precursor of validity. As checking validity is laborious, reliability is the first step toward validity: there is no need to waste time checking validity if a test is unreliable. Types of reliability are: a) Test-retest reliability (stability) checks whether a test score is stable over time, i.e. the test is administered twice to the same group over a period of time and the results are correlated. Each student's pair of scores is recorded in two columns, and finally the Pearson product-moment correlation coefficient between the two sets of scores is calculated. However, the interval between the two administrations should not be long: the shorter the interval, the fewer changes may occur and the higher the reliability of the test score (as no additional input occurs to change the score).

A negative correlation coefficient is treated as zero correlation, i.e. uncorrelated, since a negative value would mean less than no reliability, which is meaningless. b) Equivalent/parallel-forms reliability is similar to test-retest reliability, but instead of giving the same test to the same group after a time interval, we give two different but equivalent versions of the test (for example, with the order of items changed, or altered by other means such as coding) to the same group of students.
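The test-retest and parallel-forms procedures described above both reduce to correlating two columns of paired scores. The sketch below computes the Pearson product-moment coefficient directly from its definition and, following the convention stated in the text, reports a negative coefficient as zero. The function names are illustrative; Python 3.10 and later also provide `statistics.correlation` for the same coefficient.

```python
import math


def pearson_r(x, y):
    """Pearson product-moment correlation between two paired score lists."""
    n = len(x)
    if n != len(y) or n < 2:
        raise ValueError("need paired scores for at least two students")
    mean_x, mean_y = sum(x) / n, sum(y) / n
    cov = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    var_x = sum((a - mean_x) ** 2 for a in x)
    var_y = sum((b - mean_y) ** 2 for b in y)
    if var_x == 0 or var_y == 0:
        raise ValueError("scores with no variation cannot be correlated")
    return cov / math.sqrt(var_x * var_y)


def reliability_coefficient(first, second):
    """Test-retest or parallel-forms reliability for the same students.

    `first` and `second` hold each student's two scores in the same order
    (two administrations, or form A and form B). A negative coefficient is
    reported as 0.0, i.e. no reliability.
    """
    return max(0.0, pearson_r(first, second))
```

Scores of `[10, 20, 30]` on the first administration and `[12, 22, 32]` on the second give a coefficient of 1.0 (everyone gained the same amount, so rank order and spacing are preserved), whereas fully reversed scores give a raw coefficient of -1.0, which is reported as 0.0.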

c) Internal consistency reliability: the test is administered once, and the strength of each item in measuring the content, i.e. how well each item measures the construct or content, is checked. Split-half reliability is a standard means of checking internal consistency: the test is split into odd- and even-numbered items, the two halves are scored separately as if they were two different tests, and the correlation coefficient between the two sets of scores is calculated. d) Reliability of rater judgment: rater judgments are usually made when productive skills are tested. These involve inter-rater and intra-rater reliability.
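The odd/even split can be sketched as follows. One step goes beyond the text and is labelled as such: because each half is only half as long as the full test, the half-test correlation is conventionally stepped up with the Spearman-Brown formula, r_full = 2r / (1 + r), to estimate the reliability of the whole test. Item scores are assumed to be stored one row per student, in item order; as in the test-retest case, a non-positive half-test correlation is reported as zero.

```python
import math


def pearson_r(x, y):
    """Pearson product-moment correlation between two paired score lists."""
    n = len(x)
    mean_x, mean_y = sum(x) / n, sum(y) / n
    cov = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    var_x = sum((a - mean_x) ** 2 for a in x)
    var_y = sum((b - mean_y) ** 2 for b in y)
    if var_x == 0 or var_y == 0:
        raise ValueError("half-test totals show no variation")
    return cov / math.sqrt(var_x * var_y)


def split_half_reliability(item_scores):
    """Estimate full-test reliability from an odd/even split.

    item_scores: one row per student, one mark per item, in item order.
    """
    odd_totals = [sum(row[0::2]) for row in item_scores]   # items 1, 3, 5, ...
    even_totals = [sum(row[1::2]) for row in item_scores]  # items 2, 4, 6, ...
    r_half = pearson_r(odd_totals, even_totals)
    if r_half <= 0:
        return 0.0  # negative correlation treated as no reliability
    return 2 * r_half / (1 + r_half)  # Spearman-Brown correction
```

For four students answering four right/wrong items as `[[1, 1, 1, 1], [1, 1, 1, 0], [1, 0, 0, 0], [0, 0, 0, 0]]`, the half-test correlation is 9/11 (about 0.82) and the corrected full-test estimate is 0.9. The same formula underlies the later point that setting more items tends to raise reliability.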

1) Intra-rater reliability: the degree to which a rater scores the same test equivalently on two separate occasions (perhaps two weeks apart), under various physical and emotional states. 2) Inter-rater reliability: two or more raters score the same test, and the agreement between their judgments is checked.

Factors affecting reliability: a) Student-related factors: physical conditions of students, such as illness, fatigue, or sight or hearing problems, may affect reliability.

Psychological factors, such as motivation, emotional state, concentration, memory/forgetfulness, impulsiveness and carelessness, may also cause students' scores to vary. b) Rater-related factors: the tester's control over a test lies mostly in the scoring procedures, and mistakes made in scoring are common sources of error. Additionally, variance in judgment on subjective tests is another source, owing to the subjective nature of the scoring procedures. Certain rater idiosyncrasies are also reasons for measurement errors, i.e. some raters are simply tougher than others.

Lack of attention to the scoring criteria, inexperience and preconceived biases are other rater-related causes of error. Rater-reliability problems do not arise only between two or more raters; intra-rater unreliability is also a common occurrence for teachers, as a result of unclear scoring criteria, fatigue, bias toward particularly good or bad students, or even carelessness. c) Test administration-related factors: unclear directions, timing, tedious papers, and illegible typing or duplication problems are sources of score variance and measurement error. d) Test-related factors: overly long tests, the type of items chosen, poorly written items (item quality) and breaches of test security (students obtaining copies of the test before it is administered) may also cause measurement errors.

e) Environment-related factors: classroom conditions such as seating comfort, ventilation, class size, lighting and weather. According to Hughes, there are two sources of unreliability: the interaction between the testee and the test itself, and the scoring of the test.

How can test reliability be improved? Re-administering the test after a lapse of time, administering a parallel form of the test to the same group (giving two similar versions of a test to the same group), applying the split-half method of measuring reliability, and maintaining reliable test administration by making sure that all students receive input of the same quality (for example, giving all students cleanly photocopied test sheets, making video input equally visible and sound clearly audible, ensuring suitable light and temperature, minimizing extra noise, and scoring objectively) are some ways to deal with reliability problems. By the same token, intra-rater reliability in scoring open-ended responses may be managed by using a consistent set of criteria, giving uniform attention throughout the scoring, checking consistency by reading each script at least twice, and avoiding fatigue and maintaining stamina. Setting a larger number of items will also increase reliability.

Other measures include maintaining homogeneity of items in quality and discrimination power, writing concise and clear instructions, making the group of students heterogeneous (which raises the measured reliability of a test), and identifying students by number rather than by name (to avoid bias).

2. Validity is another characteristic of a good test. The word comes from the Latin validus, meaning strong, and refers to the extent to which a concept, conclusion or measurement corresponds accurately to the real world. Therefore, the validity of a test refers to the degree to which the test, as a tool, measures what it claims to measure; validity thus equates to accuracy. To some scholars validity means truthfulness (Goodwin & Leech, 2003); to others, like Ebel & Frisbie (1991), it means the appropriateness of an instrument for fulfilling its purpose.

Validity is concerned with the soundness of the results and their interpretation. Validity shows how well a test determines whether students have reached the established goals of a given course, or how well the performance a test calls for matches the unit being tested. Validity refers to the appropriateness of the test results, not to the instrument itself. No result is simply invalid or fully valid; validity is a matter of degree, and no test is valid for every purpose: a test may be valid for vocabulary but not for comprehension. Validity is a unitary concept, though it depends on different types of evidence. In this respect, there are about seven different types of validity.

2.1 Construct validity refers to the ability of a test to measure exactly what it claims to measure. A test designed to measure depression should measure only depression, not its causes such as anxiety or stress. It is the basis of all other types of validity, since it determines the nature and system of the exam or test (i.e. its content and format).

2.2 Content validity is the most important type for certification and licensure programs, such as educational training programs. Connections between test items and job-related tasks should be established through test specifications, and item-writing guidelines should be carefully followed in order to increase the content validity of a test. Content validity can easily be estimated by a group of subject matter experts (SMEs).

2.3 Concurrent validity: concurrent means "at the same time". In psychology, education and the social sciences, concurrent validity refers to the degree of correspondence between a test and a previously validated test of the same construct. It can also refer to the practice of testing two groups at the same time, or of asking two different groups of people to take the same test, and determining by a statistical rather than a logical method whether the test measures what it claims to measure.

For example, if students score well on both a practical test and a paper test, concurrent validity is supported; if there is a mismatch between the two, it is not. Advantages of concurrent validity: it is a fast way to validate data, and it is highly appropriate for validating personal attributes (strengths and weaknesses). Disadvantages: it is less effective than predictive validity, and when different groups are tested, their responses may differ.
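Statistically, concurrent validity is usually reported as a validity coefficient: the correlation between scores on the new test and scores on an established criterion measure taken at about the same time. The sketch below assumes hypothetical paired scores for four students on a paper test and a practical test, listed in the same student order; the same calculation serves for predictive validity if the criterion scores are collected later. No cut-off for an "acceptable" coefficient is assumed, since the text does not give one.

```python
import math


def validity_coefficient(test_scores, criterion_scores):
    """Pearson r between a test and a criterion measure for the same students."""
    if len(test_scores) != len(criterion_scores) or len(test_scores) < 2:
        raise ValueError("need paired scores for at least two students")
    n = len(test_scores)
    mean_t = sum(test_scores) / n
    mean_c = sum(criterion_scores) / n
    cov = sum((t - mean_t) * (c - mean_c)
              for t, c in zip(test_scores, criterion_scores))
    ss_t = sum((t - mean_t) ** 2 for t in test_scores)
    ss_c = sum((c - mean_c) ** 2 for c in criterion_scores)
    if ss_t == 0 or ss_c == 0:
        raise ValueError("scores with no variation cannot be correlated")
    return cov / math.sqrt(ss_t * ss_c)


# Hypothetical paired scores for four students (same order in both lists)
paper = [55, 60, 70, 80]
practical = [50, 62, 68, 85]
r = validity_coefficient(paper, practical)
```

Here the coefficient is about 0.98: students who do well on one test tend to do well on the other. A coefficient near zero, or a negative one, would signal the mismatch described above.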

2.4 Predictive validity is another statistical approach to validity. It measures the relationship between examinees' scores on the test and their future performance, i.e. how well the test predicts examinees' future status. It is especially useful for selection or admission purposes.

2.5 Face validity is the extent to which students see the assessment as fair, relevant and useful for improving their learning. It concerns students' perception of whether the test is valid, and asks: "On the face of it, does the test appear to students to do what it is designed to do?" Students consider a test to have high face validity if it is constructed as expected, with familiar tasks; the directions are crystal clear; the items are not complicated; the time allowed is appropriate for the test; the tasks are related to the coursework (i.e. the test has content validity); and the difficulty level of the items is reasonable (85% and above).

2.6 Criterion validity is a combination of concurrent and predictive validity, i.e. does the test predict students' performance or behavior in the same way in another situation or at another time, whether past, present or future? For instance if a test was given to an applicant for a