Lightweight AI for Next-Generation Optical Wireless Communication

Explore the integration of lightweight AI models for NG-OWC in constrained environments, focusing on deep learning techniques like model pruning. This submission discusses the advantages of efficient AI processing on edge devices without compromising performance, essential for OWC advancements.


Presentation Transcript

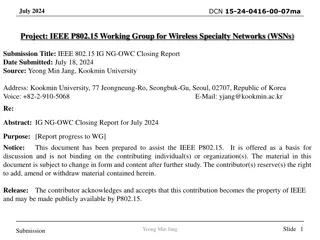

DCN 15-24-0626-00-07ma November 2024

Project: IEEE P802.15 Working Group for Wireless Specialty Networks (WSNs)
Submission Title: Lightweight AI for NG-OWC in Constrained Environment
Date Submitted: Nov 12, 2024
Source: Muhammad Rangga Aziz Nasution, Ida Bagus Krishna Yoga Utama, Nguyen Ngoc Huy, Yeong Min Jang (Kookmin University)
Address: Room #603 Mirae Building, Kookmin University, 77 Jeongneung-Ro, Seongbuk-Gu, Seoul, 136702, Republic of Korea
Voice: +82-2-910-5068
E-Mail: yjang@kookmin.ac.kr
Re:
Abstract: Present the Lightweight AI for NG-OWC in Constrained Environment
Purpose: Presentation for contribution on IG NG-OWC
Notice: This document has been prepared to assist the IG NG-OWC. It is offered as a basis for discussion and is not binding on the contributing individual(s) or organization(s). The material in this document is subject to change in form and content after further study. The contributor(s) reserve(s) the right to add, amend or withdraw material contained herein.
Release: The contributor acknowledges and accepts that this contribution becomes the property of IEEE and may be made publicly available by IG NG-OWC.

Slide 1 Yeong Min Jang Submission

Slide 2: Lightweight AI for NG-OWC in Constrained Environment, Nov. 12, 2024

Slide 3: Contents

Background
Techniques for making deep learning models lightweight
Advantages of Lightweight AI for NG-OWC
Conclusion

Slide 4: Background

Deep learning models have become increasingly mature, demonstrating remarkable capabilities across a wide range of applications. As deep learning grows, its integration into constrained systems is gaining attention. As a result, current research focuses on making these models more lightweight without sacrificing performance, driven by the growing demand for AI on edge devices, where efficient operation in constrained environments is essential.

Similarly, advances in Optical Wireless Communication (OWC) require lightweight models that can process data efficiently and operate in real time, even on devices with limited processing power and memory.

Slide 5: Techniques for making deep learning models lightweight

Pruning

Model pruning entails reducing the parameter count by eliminating less significant weights, neurons, channels, or entire layers. This process can be unstructured (removing individual weights) or structured (removing groups), substantially enhancing model efficiency with minimal impact on performance.
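The two pruning styles can be illustrated with a minimal sketch. It is not taken from the submission: it assumes magnitude is the significance criterion (the slide does not name one), treats a layer as a plain list of rows with one row per output neuron, and uses hypothetical helper names.

```python
def prune_unstructured(weights, sparsity):
    """Zero the smallest-magnitude individual weights (unstructured pruning)."""
    magnitudes = sorted(abs(w) for row in weights for w in row)
    k = int(len(magnitudes) * sparsity)      # number of weights to remove
    if k == 0:
        return [row[:] for row in weights]
    threshold = magnitudes[k - 1]            # largest magnitude that is pruned
    # Ties at the threshold are pruned too, so slightly more than k may go.
    return [[0.0 if abs(w) <= threshold else w for w in row] for row in weights]


def prune_structured(weights, n_remove):
    """Drop whole output neurons (rows) with the smallest L1 norm (structured pruning)."""
    norms = [sum(abs(w) for w in row) for row in weights]
    weakest_first = sorted(range(len(weights)), key=lambda i: norms[i])
    drop = set(weakest_first[:n_remove])
    return [row[:] for i, row in enumerate(weights) if i not in drop]


# Toy 3x3 layer (assumed values, for illustration only).
W = [[0.9, -0.05, 0.4],
     [0.01, 0.02, -0.03],
     [-0.7, 0.6, 0.1]]

sparse_W = prune_unstructured(W, sparsity=0.5)  # same shape, many zeros
small_W = prune_structured(W, n_remove=1)       # one row fewer
```

Unstructured pruning keeps the layer shape and needs sparse kernels to turn zeros into speed, whereas structured pruning yields a smaller dense layer that runs faster on ordinary hardware.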

Slide 6: Techniques for making deep learning models lightweight

Quantization

Quantization involves lowering the numerical precision of model weights and activations, often from 32-bit floating-point to 8-bit integer formats, to reduce memory and computational demands. Post-training quantization is frequently employed, enabling models to retain acceptable accuracy while operating efficiently on low-power devices.
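As a rough illustration of post-training quantization, the sketch below maps floating-point weights to 8-bit integer codes and back. It assumes a symmetric, per-tensor scheme with a single scale factor; the slide does not specify a scheme, and the function names and weight values are hypothetical.

```python
def quantize_int8(values):
    """Symmetric per-tensor quantization: floats -> int8 codes plus one scale."""
    max_abs = max(abs(v) for v in values)
    scale = max_abs / 127 if max_abs > 0 else 1.0
    # Round to the nearest integer code and clamp to the symmetric int8 range.
    codes = [max(-127, min(127, round(v / scale))) for v in values]
    return codes, scale


def dequantize(codes, scale):
    """Recover approximate float values from the integer codes."""
    return [c * scale for c in codes]


# Assumed example weights of an already trained layer.
weights = [0.82, -1.27, 0.003, 0.5, -0.64]

codes, scale = quantize_int8(weights)
restored = dequantize(codes, scale)
max_error = max(abs(w - r) for w, r in zip(weights, restored))
```

Each code fits in one byte instead of four, and the rounding error per weight stays within half a quantization step (`scale / 2`), which is why accuracy often remains acceptable.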

Slide 7: Techniques for making deep learning models lightweight

Knowledge Distillation

Knowledge distillation transfers knowledge from a larger, more complex teacher model to a smaller student model by using the teacher's outputs or intermediate layers to guide the student's training. This technique is effective for model compression, allowing the student model to approach the teacher's performance with significantly fewer parameters.
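One common way to use the teacher's outputs is a combined loss over softened teacher probabilities and the true label. The sketch below assumes that formulation (a temperature-scaled KL term plus cross-entropy); the submission does not state which loss it has in mind, and `temperature`, `alpha`, and the logits are illustrative values.

```python
import math


def softmax(logits, temperature=1.0):
    """Numerically stable softmax with an optional temperature."""
    scaled = [z / temperature for z in logits]
    peak = max(scaled)
    exps = [math.exp(s - peak) for s in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def distillation_loss(student_logits, teacher_logits, label,
                      temperature=4.0, alpha=0.5):
    """Weighted sum of a soft-target KL term and a hard-label cross-entropy term."""
    p_teacher = softmax(teacher_logits, temperature)
    p_student = softmax(student_logits, temperature)
    # KL(teacher || student) on the softened distributions.
    kl = sum(t * math.log(t / s) for t, s in zip(p_teacher, p_student) if t > 0)
    # Ordinary cross-entropy against the ground-truth label (temperature 1).
    ce = -math.log(softmax(student_logits)[label])
    # The T^2 factor keeps the soft term's gradient scale comparable to ce.
    return alpha * temperature ** 2 * kl + (1 - alpha) * ce


teacher = [4.0, 1.0, 0.5]          # assumed teacher logits, true class 0
good_student = [3.5, 1.2, 0.4]     # agrees with the teacher
bad_student = [0.5, 3.0, 1.0]      # disagrees with the teacher

loss_good = distillation_loss(good_student, teacher, label=0)
loss_bad = distillation_loss(bad_student, teacher, label=0)
```

A student whose outputs track the teacher's receives the smaller loss, which is the signal that guides its training.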

Slide 8: Techniques for making deep learning models lightweight

Neural Architecture Search

Neural Architecture Search (NAS) automates the design of model architectures by exploring configurations that balance accuracy and computational cost. Employing methods like reinforcement learning or evolutionary algorithms, NAS discovers efficient architectures suited to specific hardware constraints.
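The evolutionary variant can be sketched on a toy search space. Everything here is an assumption made for illustration: the search space (depth and width of a dense stack), the parameter-count cost model, and especially `proxy_accuracy`, which is a hypothetical stand-in for actually training and validating each candidate.

```python
import random

DEPTHS = [2, 4, 6, 8]
WIDTHS = [16, 32, 64, 128]


def cost(arch):
    """Rough parameter count of a dense stack: depth * width^2."""
    depth, width = arch
    return depth * width * width


def proxy_accuracy(arch):
    """HYPOTHETICAL score; a real NAS would train and validate the model."""
    depth, width = arch
    capacity = depth * width
    return capacity / (capacity + 100.0)


def fitness(arch, budget):
    """Accuracy proxy, with over-budget candidates rejected outright."""
    return proxy_accuracy(arch) if cost(arch) <= budget else 0.0


def mutate(arch, rng):
    """Resample either the depth or the width of a parent architecture."""
    depth, width = arch
    if rng.random() < 0.5:
        depth = rng.choice(DEPTHS)
    else:
        width = rng.choice(WIDTHS)
    return (depth, width)


def evolutionary_search(budget, generations=20, population_size=6, seed=0):
    rng = random.Random(seed)
    # Seed with the smallest architecture so one feasible candidate always exists.
    population = [(DEPTHS[0], WIDTHS[0])]
    while len(population) < population_size:
        population.append((rng.choice(DEPTHS), rng.choice(WIDTHS)))
    for _ in range(generations):
        # Keep the fitter half, then refill with mutated copies of survivors.
        population.sort(key=lambda a: fitness(a, budget), reverse=True)
        survivors = population[: population_size // 2]
        children = [mutate(rng.choice(survivors), rng)
                    for _ in range(population_size - len(survivors))]
        population = survivors + children
    return max(population, key=lambda a: fitness(a, budget))


best = evolutionary_search(budget=20_000)  # budget = assumed hardware limit
```

The hardware constraint enters only through `budget`, so retargeting the search to a different device means changing that one number, or swapping `cost` for a measured latency model.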

Slide 9: Advantages of Lightweight AI for NG-OWC

Faster transmitter detection

Optical wireless communication (OWC) employs line-of-sight (LoS) based communication to transfer data. Lightweight models are designed for minimal computational overhead, enabling faster detection of transmitter signals in real-time LoS systems.

Lower power consumption and resource efficiency

These models consume less power, enabling battery-powered devices to operate longer without recharging. They are optimized for resource-constrained hardware, making them ideal for edge devices in optical wireless communication.

Slide 10: Advantages of Lightweight AI for NG-OWC

Reduced latency in every communication layer

Lightweight AI models process signals faster because they have fewer parameters and lower computational complexity, which is crucial for low-latency applications such as augmented reality and autonomous vehicles. Their ability to adapt quickly to changing conditions enhances communication speed without introducing delays.

Slide 11: Conclusion

Lightweight AI models have recently gained significant attention, driven by the need for efficient, scalable AI solutions across various fields. Applying lightweight AI models to OWC is crucial to enhancing communication quality.

Key techniques for creating these models include pruning, quantization, knowledge distillation, and neural architecture search, each focusing on reducing computational demands while maintaining performance.

Applying lightweight AI models in optical wireless communication (OWC) technology enables faster transmitter detection and reduces the latency of the communication itself. Lightweight models also offer lower power consumption and optimized resource efficiency, making them ideal for battery-powered and edge devices.
