Machine Learning Applications in Various Fields

Explore the realm of machine learning and its diverse applications across different domains such as text classification, speech processing, computer vision, computational biology, and more. Learn about association rules, extended association rules, and their practical implications in real-world scenarios.


Presentation Transcript

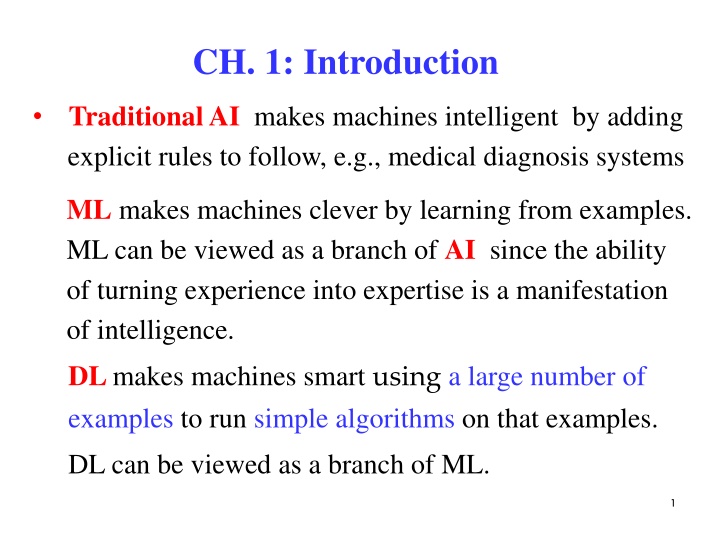

CH. 1: Introduction

Traditional AI makes machines intelligent by adding explicit rules to follow, e.g., medical diagnosis systems.

Machine learning (ML) makes machines clever by learning from examples. ML can be viewed as a branch of AI, since the ability to turn experience into expertise is a manifestation of intelligence.

Deep learning (DL) makes machines smart by running simple algorithms on a large number of examples. DL can be viewed as a branch of ML.

1.1 Why Machine Learning?

The widespread use of computers, networks, and wireless communication leads to big data.

Data preserves useful information (e.g., diverse knowledge, associations, classifications, regularities, and structures).

Information extraction may be performed manually through human analysis or automatically through machine learning.

Example: Association rules

An association rule X → Y is often expressed as a conditional probability P(Y | X), where X is the predecessor (antecedent) and Y is the successor (consequent).

i) Basket analysis: find association rules between products bought by customers, e.g., P(chips | beer) = 0.73 can be read in two ways:
(a) a customer who buys beer has a probability of 0.73 of also buying chips, or
(b) 73% of customers who buy beer also buy chips.
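The basket-analysis reading of P(chips | beer) can be illustrated with a minimal sketch. The `confidence` helper and the five baskets below are hypothetical and made up for illustration; they are not data from the slides. The estimate is simply the fraction of baskets containing the conditioning item that also contain the other item.

```python
def confidence(transactions, given_item, target_item):
    """Estimate P(target_item | given_item) as a fraction of baskets."""
    with_given = [t for t in transactions if given_item in t]
    if not with_given:
        raise ValueError(f"no transaction contains {given_item!r}")
    with_both = sum(1 for t in with_given if target_item in t)
    return with_both / len(with_given)


# Hypothetical shopping baskets (illustrative only).
baskets = [
    {"beer", "chips"},
    {"beer", "chips", "salsa"},
    {"beer", "diapers"},
    {"chips", "soda"},
    {"beer", "chips", "soda"},
]

p_chips_given_beer = confidence(baskets, "beer", "chips")
print(f"P(chips | beer) = {p_chips_given_beer:.2f}")
```

With real data, such an estimate is only meaningful when enough baskets contain the conditioning item, which is why practical systems usually also track how often the item pair occurs at all.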

ii) Web portals: estimate the links a user is likely to click and use this information to download those pages in advance for faster access.

Extended association rules, e.g., P(Y | X, D), P(Y, E | X, D). Graphical models provide ways to compute the probabilities.

Potential Applications

(1) Text or document classification, e.g., (a) assigning a topic to a text or a document, (b) determining if the content of a web page is appropriate
(2) Speech processing, e.g., speech recognition, speech synthesis, speaker identification, acoustic modeling
(3) Natural language processing, e.g., part-of-speech tagging, named-entity recognition, context-free parsing, dependency parsing
(4) Computer vision applications, e.g., OCR, object detection, face recognition, content-based image retrieval, pose estimation
(5) Computational biology applications, e.g., protein function prediction, identification of key sites, analysis of gene and protein networks
(6) Others, e.g., fraud detection, network intrusion, game playing, identification of key, robot control, medical diagnosis, recommendation systems, search engines, information extraction

Potential Tasks

(1) Classification: assigning a category to each item
(2) Regression: predicting a value for each item
(3) Ranking: learning to order items
(4) Clustering: partitioning items into homogeneous subsets
(5) Dimensionality reduction (manifold learning): transforming a representation of items into a lower-dimensional one

Ingredients of Machine Learning

Training examples
Feature vectors (patterns)
Labels
Hyperparameters: free parameters not determined by the learning algorithm but specified as input to the learning algorithm
Validation sample: used for tuning the hyperparameters
Test sample
Hypothesis set: a set of functions mapping feature vectors to the set of labels
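How these ingredients fit together can be sketched with a toy example. Everything below is an assumption made for illustration: the synthetic one-dimensional data, the 120/40/40 split, and the choice of a k-nearest-neighbor classifier whose hyperparameter k is tuned on the validation sample. The test sample is touched only once, after k has been chosen.

```python
import random


def knn_predict(train, x, k):
    """Majority vote among the k training examples nearest to x."""
    neighbors = sorted(train, key=lambda example: abs(example[0] - x))[:k]
    votes_for_one = sum(label for _, label in neighbors)
    return 1 if 2 * votes_for_one > k else 0


def accuracy(train, sample, k):
    """Fraction of examples in `sample` that k-NN labels correctly."""
    hits = sum(knn_predict(train, x, k) == y for x, y in sample)
    return hits / len(sample)


# Synthetic labeled examples: feature x in [0, 1], label 1 if x > 0.5,
# with 10% of the labels flipped to simulate noise (illustrative only).
rng = random.Random(0)
data = []
for _ in range(200):
    x = rng.random()
    y = int(x > 0.5)
    if rng.random() < 0.1:
        y = 1 - y
    data.append((x, y))

# Training, validation, and test samples.
train, validation, test = data[:120], data[120:160], data[160:]

# The hyperparameter k is not learned from the training examples;
# it is selected by comparing accuracy on the validation sample.
candidate_ks = [1, 3, 5, 9, 15]
best_k = max(candidate_ks, key=lambda k: accuracy(train, validation, k))

# The test sample gives the final performance estimate.
test_accuracy = accuracy(train, test, best_k)
print(f"chosen k = {best_k}, test accuracy = {test_accuracy:.2f}")
```

Here the hypothesis set is the family of k-NN classifiers built on the training examples, one per candidate k.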

1.2 Biological Learning

(A) Insects: bee, fly, grasshopper
Behaviors: feeding, predator avoidance, aggression, social interactions, and sexual behavior.

(B) Animals: squirrel, bird, mouse

Hebbian learning: dog

(C) Human brain: cerebral cortex

Activities: sensation, perception, cognition, recognition, imagination, dream, consciousness

Recognition = re-cognition
Cognition = learning + representation + storing
Recognition = retrieval + matching + decision

Human Learning vs. Machine Learning

Perception <--> Unsupervised learning
Cognition <--> Reinforcement learning
Recognition <--> Supervised learning

1.3 Types of Machine Learning

Supervised learning
On-line learning
Unsupervised learning
Semi-supervised learning
Reinforcement learning
Active learning

Supervised Learning -- Learn a mapping from the input to an output using a set of labeled examples.

Ex.: Classification -- Given a set of labeled examples, learn a discriminant that separates unseen points with different labels, e.g.,
i) Credit scoring: differentiate between low-risk and high-risk customers.
ii) Pattern recognition: OCR, face recognition, speech recognition.

Ex.: Regression

Assume a model $y = g(x \mid \theta)$, where $\theta$ are the parameters to be estimated using a set of training examples $\{(x_i, y_i)\}_{i=1}^{n}$, e.g.,

Linear model: $y = w_1 x + w_0$; $\theta = (w_0, w_1)$
Quadratic model: $y = w_2 x^2 + w_1 x + w_0$; $\theta = (w_0, w_1, w_2)$
Gaussian model: $y = \frac{1}{\sqrt{2\pi}\,\sigma} \exp\left(-\frac{(x - \mu)^2}{2\sigma^2}\right)$; $\theta = (\mu, \sigma)$

i) Price of a car: assume the price model $y = g(x \mid \theta)$, where $x$: car attributes, $y$: price, $\theta$: parameters.
ii) Physics: (a) force model $f = m a$; (b) spring model $f = k l$.
iii) Kinematics of a robot arm: assume the angle models $\alpha_1 = g_1(x, y)$ and $\alpha_2 = g_2(x, y)$.
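As an illustration of estimating the parameters of the linear model from training examples, here is a minimal sketch using the closed-form least-squares solution for one input variable. The least-squares criterion is an assumption on my part (the slides do not say how the parameters are estimated), and the helper `fit_linear` and the five data points are hypothetical.

```python
def fit_linear(xs, ys):
    """Least-squares estimates (w0, w1) for the model y = w1 * x + w0."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    # Spread of x around its mean; zero means all x values are identical.
    sxx = sum((x - mean_x) ** 2 for x in xs)
    if sxx == 0:
        raise ValueError("all x values are identical; the slope is undefined")
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    w1 = sxy / sxx
    w0 = mean_y - w1 * mean_x
    return w0, w1


# Hypothetical training examples lying exactly on y = 2x + 1.
xs = [0.0, 1.0, 2.0, 3.0, 4.0]
ys = [1.0, 3.0, 5.0, 7.0, 9.0]

w0, w1 = fit_linear(xs, ys)
print(f"w0 = {w0:.2f}, w1 = {w1:.2f}")
```

The same idea extends to the quadratic model by treating x and x squared as two input features, at which point a linear-algebra library is usually more convenient than hand-written sums.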

Unsupervised Learning -- Find the regularities of data using a set of unlabeled examples, e.g., clustering, density estimation, dimensionality reduction, image segmentation.

Examples:

i) Clustering [figure: clustering and classification]
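Clustering of unlabeled points can be sketched with k-means. The slides do not name a specific clustering algorithm, so k-means is an assumption chosen for its simplicity, and the six points and the two initial centers below are hypothetical.

```python
def squared_distance(p, q):
    """Squared Euclidean distance between two points."""
    return sum((a - b) ** 2 for a, b in zip(p, q))


def nearest_center(point, centers):
    """Index of the center closest to `point`."""
    return min(range(len(centers)),
               key=lambda j: squared_distance(point, centers[j]))


def kmeans(points, initial_centers, iterations=10):
    """Alternate between assigning points and recomputing centers."""
    centers = [tuple(c) for c in initial_centers]
    for _ in range(iterations):
        groups = [[] for _ in centers]
        for p in points:
            groups[nearest_center(p, centers)].append(p)
        # Move each center to the mean of its group; keep it if the group is empty.
        centers = [
            tuple(sum(coords) / len(group) for coords in zip(*group))
            if group else center
            for group, center in zip(groups, centers)
        ]
    labels = [nearest_center(p, centers) for p in points]
    return centers, labels


# Hypothetical unlabeled 2-D points forming two visible groups.
points = [(1.0, 1.0), (1.0, 2.0), (2.0, 1.0),
          (8.0, 8.0), (9.0, 8.0), (8.0, 9.0)]

centers, labels = kmeans(points, initial_centers=[points[0], points[3]])
print(centers)
print(labels)
```

No labels are given to the algorithm; the cluster indices it returns are arbitrary names for the groups it finds, which is the key difference from classification.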

ii) Density estimation: data points $\{x_i\}_{i=1}^{n}$, $x_i \in \mathbb{R}^d$ → density estimation → density distribution
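One simple way to go from data points to a density is a Gaussian kernel density estimate. This is an assumed technique, not one named in the slides, and the sketch is restricted to the one-dimensional case d = 1; the sample values and the bandwidth of 0.3 are hypothetical.

```python
import math


def gaussian_kde(samples, bandwidth):
    """Return a density function built from one Gaussian bump per sample."""
    n = len(samples)
    norm = 1.0 / (n * bandwidth * math.sqrt(2.0 * math.pi))

    def density(x):
        return norm * sum(
            math.exp(-0.5 * ((x - s) / bandwidth) ** 2) for s in samples
        )

    return density


# Hypothetical 1-D data points with two clumps, near 1 and near 3.
samples = [1.0, 1.2, 0.8, 1.1, 3.0, 3.2, 2.9]

p = gaussian_kde(samples, bandwidth=0.3)
for x in (1.0, 2.0, 3.0):
    print(f"p({x}) = {p(x):.3f}")
```

The bandwidth plays the role of a hyperparameter: small values give a spiky estimate that follows individual points, large values smooth the clumps together.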

iii) Image segmentation: input image → segmentation

Semi-supervised Learning -- The training sample consists of both labeled and unlabeled data.

Reinforcement Learning -- During learning, the correct answers are not provided; only hints or delayed rewards are given, e.g., policy learning, game playing, multiple agents, robot navigation, credit assignment.

On-line Learning -- Training and production phases are intermixed.

Active Learning -- The learner adaptively or interactively collects training examples.
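The intermixing of training and production in on-line learning can be sketched with a perceptron that first predicts on each incoming example and only then learns from the revealed label. The perceptron is an assumed choice of learner, and the function `online_perceptron` and the six-example stream are hypothetical.

```python
def online_perceptron(stream, dim, learning_rate=1.0):
    """Process (x, y) pairs one at a time, with labels y in {-1, +1}."""
    weights = [0.0] * dim
    bias = 0.0
    mistakes = 0
    for x, y in stream:
        # Production step: predict before the true label is used.
        score = sum(w * xi for w, xi in zip(weights, x)) + bias
        prediction = 1 if score >= 0 else -1
        # Training step: update only when the prediction was wrong.
        if prediction != y:
            mistakes += 1
            weights = [w + learning_rate * y * xi for w, xi in zip(weights, x)]
            bias += learning_rate * y
    return weights, bias, mistakes


# Hypothetical stream of labeled 2-D examples arriving one by one.
stream = [
    ((2.0, 1.0), 1),
    ((-1.0, -2.0), -1),
    ((1.5, 2.0), 1),
    ((-2.0, -0.5), -1),
    ((3.0, 0.5), 1),
    ((-0.5, -3.0), -1),
]

weights, bias, mistakes = online_perceptron(stream, dim=2)
print(weights, bias, mistakes)
```

There is no separate test sample here; performance is naturally measured by the number of mistakes made while the stream is being processed.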

Summary

The widespread use of computers and communication leads to big data. Data preserves useful information. Machine learning automatically extracts useful information from data. It relates to
i) artificial intelligence,
ii) artificial neural networks,
iii) data mining (knowledge discovery in databases),
iv) generative models (which explain the observed data through the interaction of hidden factors),
v) clustering, classification, and pattern recognition.

Types of Machine Learning

Supervised Learning -- Learn a mapping from the input to an output using a set of labeled examples.

Unsupervised Learning -- Find the regularities of data using a set of unlabeled examples.

Semi-supervised Learning -- The training sample consists of both labeled and unlabeled data.

Reinforcement Learning -- During learning, the correct answers are not provided; only hints or delayed rewards are given.

On-line Learning -- Training and testing phases are intermixed.

Active Learning -- The learner adaptively or interactively collects training examples.