Machine Learning Approach for Thread Mapping in Transactional Memory Applications

Discover how a machine learning approach is used to optimize thread mapping in software transactional memory applications, aiming to predict the best mapping strategy for improved performance and efficiency.

Download Presentation

Please find below an Image/Link to download the presentation.

The content on the website is provided AS IS for your information and personal use only. It may not be sold, licensed, or shared on other websites without obtaining consent from the author. If you encounter any issues during the download, it is possible that the publisher has removed the file from their server.

You are allowed to download the files provided on this website for personal or commercial use, subject to the condition that they are used lawfully. All files are the property of their respective owners.

The content on the website is provided AS IS for your information and personal use only. It may not be sold, licensed, or shared on other websites without obtaining consent from the author.

E N D

Presentation Transcript

A Machine Learning Approach for Thread Mapping on Transactional Memory Applications > Source: 18th International Conference on High Performance Computing Authors: Castro, M.; Goes, L.F.W.; Ribeiro, C.P.; Cole, M.; Cintra, M.; Mehaut, J. > 100062512 , 100065801 > /34 1

Outline > Introduction > Software Transactional Memory (STM) > Thread Mapping > Machine Learning > The ID3 Algorithm > Result & Conclusion & Future Works /34 2

Part 1 Introduction /34 3

Transaction > a sequence of instructions that performs a single logical function. > The concept originally used in database systems. /34 4

Software Transactional Memory (STM) > Programmer can write the code as transactions. > Use the STM libraries to guarantee each transaction is executed atomically and in isolation regardless of eventual data races. /34 5

Cache Memory > When the data in the main memory is used, it s copied into the cache. > From the application perspective, it can be viewed as a way to share data efficiently. /34 6

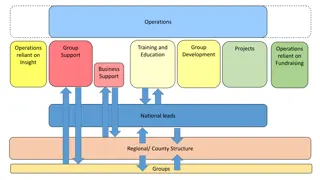

Thread Mapping > For example, you can put threads that communicate often on cores that share some level of cache. > By doing so, the high latency to access the main memory can be avoided. /34 7

Thread Mapping (contd) > Many strategies exist for mapping. > However, there is no single one that provides good performance for all different applications and platforms. /34 8

The Goal > Given an application, predict which mapping strategy is the best. /34 10

Part 2 Machine Learning /34 11

Machine Learning > Static phase: > The goal is to build up a predictor. > Here, the predictor is a decision tree. > Dynamic phase: > Use the predictor to decide which mapping strategy is going to be used. /34 12

Machine Learning (contd) /34 13

Decision Tree /34 14

Static Phase > Three steps: > Application profiling > Data pre-processing > Learning process /34 15

Application Profiling > Features: > Category A: the interaction between the application and the STM system. > Transaction time ratio > Abort ratio > Category B: STM mechanisms > Conflict detection: eager, lazy > Resolution strategy: suicide, backoff /34 16

Application Profiling (contd) > Features: > Category C: the interaction between the application and the platform > Last Level Cache Miss Ratio > Target Variable T: thread mapping strategies > Linux, Compact, Scatter, Round-Robin /34 17

Data Pre-Processing > Since we are building a decision tree, the features must be categorical or discrete. > Features in categories A & C are converted into: > Low (0.0; 0.33) > Medium (0.33; 0.66) > High (0.66l 1.0) /34 19

Part 3 The ID3 Algorithm /34 20

Using Game-Based Cooperative Learning to Improve Learning Motivation: A Study of Online Game Use in an Operating Systems Course IEEE TRANSACTIONS ON EDUCATION, VOL. 56, NO. 2, MAY 2013 /34 21

Decision Tree /34 22

ID3 (Iterative Dichotomiser 3) > Quinlan(1979) > Base on Shannon(1949) Information theory /34 23

ID3 (Iterative Dichotomiser 3) > Information theory: k , Pi (Entropy) Example 1: k=4 p1=0.25,p2=0.25,p3=0.25,p4=0.25 I=-(.25*log2(.25)*4)=2 Example 2: k=4 p1=0, p2=0.5, p3=0, p4=0.5 I=-(.5*log2(.5)*2)=1 > > /34 24

ID3 (Iterative Dichotomiser 3) > Calculate the entropy of every attribute using the data set > Split the set attribute for which entropy is minimum (or, equivalently, information gain is maximum) into subsets using the > Make a decision tree node containing that attribute > Recurse on subsets using remaining attributes /34 25

> Stop condition: > > > /34 26

Decision Tree /34 27

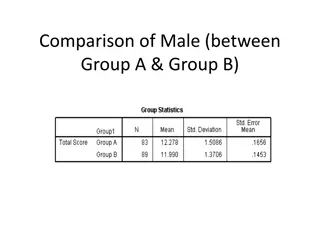

Cross Validation > Leave-one-out > The accuracies on SMP-24 and SMP-16 were 86% and 72%. /34 28

Prediction > The dynamic phase. > Three steps: > The application starts running with default thread mapping scheduling and is profiled during a initial warm-up interval. > Then use the profiled data to decide a mapping strategy. > Change the mapping strategy. /34 29

Part 4 Result & Conclusion & Future Works /34 30

Average Speedup /34 31

Result & Conclusion > Improve 11.35% and 18.46% compared to the worst case and 3.21% and 6.37% over Linux strategy. > ML-based approach is within 1% of the oracle performance. /34 32

Future work > Increase features to make more accuracies. > Automatically executed in an existing STM system. > Other algorithms like Neural networks or Support Vector Machines. /34 33

Thanks for your attention. /34 34