Managing GPU Concurrency in Heterogeneous Architectures

This study examines how to manage GPU concurrency in heterogeneous CPU-GPU architectures, where the last-level cache (LLC), memory, and network are shared resources. It proposes warp scheduler controls that adjust GPU thread-level parallelism (TLP) under two strategies: a CPU-centric strategy and a CPU-GPU balanced strategy. The reported results are +24% CPU and -11% GPU performance for the CPU-centric strategy, and +7% for both CPU and GPU with the balanced strategy.


Presentation Transcript

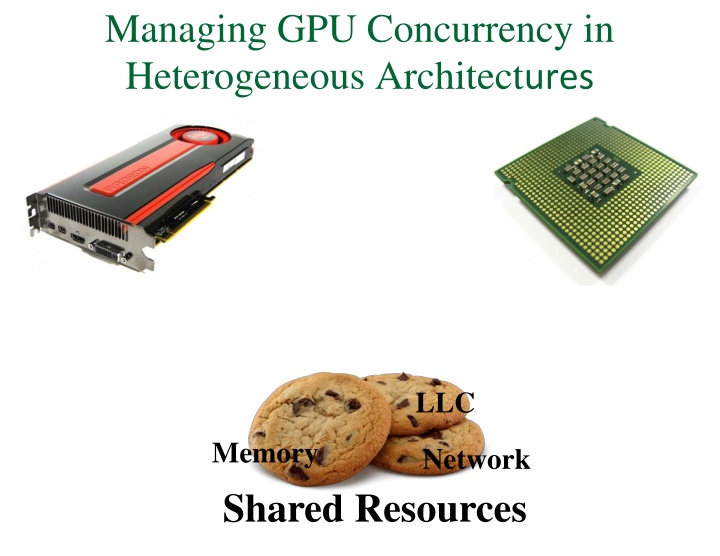

Managing GPU Concurrency in Heterogeneous Architectures. Shared resources: LLC, memory, network.


Our Proposal: the warp scheduler controls GPU thread-level parallelism (TLP), targeting improved GPU performance and improved CPU performance. Two strategies: a CPU-centric strategy, and a CPU-GPU balanced strategy that controls the trade-off.

Our Proposal, CPU-centric strategy: memory congestion affects CPU performance. IF memory congestion is high, THEN reduce GPU TLP. Results summary: +24% CPU and -11% GPU.

Our Proposal, CPU-GPU balanced strategy: memory congestion affects CPU performance, and GPU TLP affects GPU latency tolerance. IF memory congestion is high, THEN reduce GPU TLP; IF GPU latency tolerance is low, THEN increase GPU TLP. Results summary: +7% for both CPU and GPU.
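The IF rules on the strategy slides can be sketched as a small decision function. This is only an illustrative sketch, not the authors' implementation: the thresholds, the normalized 0..1 metrics, the step size of one warp, and every name below (`Sample`, `cpu_centric_step`, `balanced_step`) are assumptions. The slides do not say what happens when congestion is low, so raising TLP in that case is also an assumption.

```python
from dataclasses import dataclass

# Hypothetical values, chosen only for illustration (not from the slides).
CONGESTION_HIGH = 0.8        # congestion above this counts as "high"
CONGESTION_LOW = 0.4         # congestion below this counts as "low"
LATENCY_TOLERANCE_LOW = 0.3  # tolerance below this counts as "low"
MIN_TLP, MAX_TLP = 1, 48     # assumed bounds on active warps per core


@dataclass
class Sample:
    """One monitoring sample; both metrics are assumed normalized to 0..1."""
    memory_congestion: float
    latency_tolerance: float


def cpu_centric_step(tlp: int, s: Sample) -> int:
    """CPU-centric rule: high memory congestion lowers GPU TLP."""
    if s.memory_congestion > CONGESTION_HIGH:
        return max(MIN_TLP, tlp - 1)
    if s.memory_congestion < CONGESTION_LOW:
        # Assumption: let TLP recover when the memory system is idle.
        return min(MAX_TLP, tlp + 1)
    return tlp


def balanced_step(tlp: int, s: Sample) -> int:
    """Balanced rule: low GPU latency tolerance raises TLP first;
    otherwise fall back to the congestion-driven rule."""
    if s.latency_tolerance < LATENCY_TOLERANCE_LOW:
        return min(MAX_TLP, tlp + 1)
    return cpu_centric_step(tlp, s)


if __name__ == "__main__":
    congested = Sample(memory_congestion=0.9, latency_tolerance=0.1)
    print(cpu_centric_step(8, congested))  # congestion rule only
    print(balanced_step(8, congested))     # latency-tolerance rule wins
```

With the same congested sample, the CPU-centric rule lowers TLP while the balanced rule raises it because the GPU cannot hide latency. That difference is the trade-off the balanced strategy is meant to control.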

Managing GPU Concurrency in Heterogeneous Architectures
Onur Kayıran¹, Nachiappan CN¹, Adwait Jog¹, Rachata Ausavarungnirun², Mahmut T. Kandemir¹, Gabriel H. Loh³, Onur Mutlu², Chita R. Das¹
¹ Penn State, ² Carnegie Mellon, ³ AMD Research
Today: Session 1B, Main Auditorium, 3 pm