Matrix Factorization Techniques Overview

In this detailed content, various methods for matrix decomposition including eigenvector decomposition, Cholesky, LU decomposition, SVD, and more are discussed. The concepts of irreducible and reducible matrices, Perron-Frobenius Theorem, data matrix representation, choosing the dimension K, and the significance of non-negative decomposition are explained comprehensively.

Download Presentation

Please find below an Image/Link to download the presentation.

The content on the website is provided AS IS for your information and personal use only. It may not be sold, licensed, or shared on other websites without obtaining consent from the author.If you encounter any issues during the download, it is possible that the publisher has removed the file from their server.

You are allowed to download the files provided on this website for personal or commercial use, subject to the condition that they are used lawfully. All files are the property of their respective owners.

The content on the website is provided AS IS for your information and personal use only. It may not be sold, licensed, or shared on other websites without obtaining consent from the author.

E N D

Presentation Transcript

Matrix Factorization Presenter : Advisor :

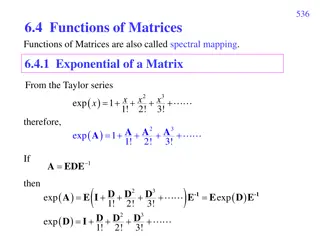

Pipeline Method for different matrix decomposition ? = ??? 1 ?? ?,?? ?,?? ? Eigenvector decomposition ? = ?? Principle component analysis (PCA) ?? ?,?? ?,?? ? Matrix factorization Fast algorithm: Cholesky : ? = ??? , ?? ?,?? ? LU : ? = ?? , ?? ?,?? ?, ?? ? Cholesky decomposition, LU, etc Singular value decomposition (SVD) ? = ???? , ?? ?,?? ?,?? ?,?? ? ? = ??, ?,? 0 ?? ?,?? ?,?? ? Negative matrix decomposition (NMD)

Matrix Factorization Irreducible matrix & reducible matrix <Thm 1>: Reducible matrix A? ? If ? and ??= ? 1, s.t. ???? =? ? ? , where ?? ?, ? 1 and ?(? ?) (? ?) ? , then A? ? is a reducible matrix <Thm 2>: Strongly connected graph A? ? is a irreducible matrix iff its directed graph is strongly connected graph. 0 0 ?12 0 0 0 0 0 0 0 0 0 0 0 0 0 0 ?12 0 0 0 ?23 0 0 0 ?23 0 A = ----- irreducible ?31 0 0 A = ----- reducible ?31 ?45 0 ?12 0 ;?23 0 ; ?31 0 ?54 ?12 0 ;?23 0 ; ?31 0 ; ?45 0 ; ?54 0 ?12 ?24 ?12 1 2 4 2 1 Strongly connected Weakly connected ?45 ?54 ?31 ?23 ?23 ?31 3 5 3

Matrix Factorization Perron-Frobenius Theorem : ? = [???] with the size ? ? is a non-negative matrix if Irreducible matrix ??? 0, ?=1,2, ,? ; ?=1,2, ,? Radius of spectrum : ? ? = ??? ? ?>?, s.t. ?? = ? ? ? and ?1= 1 Eigenvector ? corresponding to its eigenvalue ? is a non-negative vector > 0

Matrix Factorization Data matrix : ?? ?, each column vector represents each data. Basis (Dictionary, Patterns) : ?? ?, each column vector represents a basis. Regressors (activation coefficients) : ?? ?, each column vector represents the coefficients

Matrix Factorization How to choose the dimension K : ?? ? ?? ??? ? Data fitting A great K leads to a better data approximation. Model complexity A smaller K leads to a less complex model (easier to estimate, less parameters to transmit)

Matrix Factorization Why do we use non-negative decomposition for optimized problem ? Data is often non-negative by nature Pixel intensities Amplitude spectra Occurrence counts Energy consumption Users scores Stock market values

Non-negative matrix decomposition Optimized problem for NMD :

Non-negative matrix decomposition Criteria: Similarity between true data ? and estimated data ?? min ?,?>0? ?|?? where ? ?|?? = ? ?? ???| ???? ? ?|? is a scalar divergence which satisfies the following rule : ? ?|? is continuous over ? and ? ? ?|? 0 , ?,? 0 ? ?|? = 0 if ? = ?

Non-negative matrix decomposition NMD : ? ??, ? 0 ,? 0 Euclidean norm: ?????||?? = ? ??2= ? ???? ???? ??????||?? = ?2?????||? Kullback-leibler divergence: 2 ??? ???? ????||?? = ? ????log ?????||?? = ?????||? Itakura-Saito divergence: ??? ???? ??? ???? log ------ scale-invariant ??? ???? ????||?? = ? ? ?????||?? = ????||? it provides higher accuracy in the representation of data with large dynamic range, such as audio spectra. 1

Non-negative matrix decomposition Question : Which divergence to choose ? by intuition or from some prior knowledge of the application goal (e.g., NMF is used for predicting the unseen data while minimizing the mean squared error = EUC distance) or invariances (e.g., scale invariance for music analysis with IS divergence) from some probabilistic considerations. optimize the divergence (e.g. from some parametric family) on some development data within a particular application.

Non-negative matrix decomposition Statistical viewpoint : For many divergences a probabilistic formulation is possible: the divergence minimization becomes to a maximum likelihood (ML) criterion ? ?|| ? = log ? ?| ? + ? , ? = ?? Divergence ? ?|| ? PDF ? ?| ? Probability distribution 2 ???~G??????? ???,?2 Euclidean norm 2??2? ??? ??? 2 ??? ??? 1 2?2 ? ? ?,? ???~??????? ??? 1 ??? ???? Kullback- leibler divergence ???? ??? ??? ???log ??? ???? ???+ 1 ? ? ?,? ??? ??? ??? ???? ??? ???? 1 Itakura- Saito divergence 1 log 1 ???~??????????? ? ??? ??? ? ? ?,?

PCA vs NMD Principle component analysis (PCA): For the view of maximum variance ? data points : ?1, ?1, , ?? ; ?? ?, ?=1,2, ,? How to find a projection vector ? ?, ? ? such that the variance of data ? is maximized ? ??? ??? = ? ??? ???? Optimized problem: ??? ???? ?= ? ??(? ??) (? ??)?? = ????? Small variance ?? ????? max ? ?2 subjected to ??? = 1 ?1 ?3 By Lagrange multiplier : = ????? ?(??? 1) and let ? ? ??= 0 Large variance 2??? 2?? = ? ??? = ??, ? ? ? is eigenvalue of the auto-correlation matrix ?? in corresponding to its eigenvector ?

PCA vs NMD Principle component analysis (PCA): For the view of minimum square error ? original data points : ?1, ?1, , ?? ; ?? ?, ?=1,2, ,? ? reconstructed data points : ?1, ?2, , Reconstruction error ? = ? ?=1 ?? ?? Our goal : Dimension reduction and less distortion of reconstruction. ?? ?=1 ??,??? , where ??,?= ?????, ??is an orthonormal basis. ??; ?? ?, ?=1,2, ,? 1 ? 2 ? ? 1 ? ?=1 2 ?? ?? m?? ? subjected to ?????= ??,? The solution is ????= ????,?? ?

Application Face recognition

Application Face recognition PCA NMD

Application Application : Denoising

Application Application : Speech Processing

Application Application: Temporal segmentation can be achieved by thresholding the temporal activations relating to components of interest

Reference Kejun Huang, Nicholas D.Sidiropoulos, and Ananthram Swami, Non-negative matrix factorization revisited: unique and algorithm for symmetry decomposition, IEEE Trans. on Signal Proc. vol.62, issue 1, pp.211-224, Oct, 2013. Slim ESSID & Alexey OZEROV, NMF tutorial, Telecom. ParisTech, July, 2014. Jimmy Lude na-Choez and Ascensi on Gallardo-Antol n, Speech denoising using non- negative matrix factorization with kullback-leibler divergence and sparseness constraints, Comm. in Computer and Information Science. Pp.207-216, Nov. 2012. Daniel D.Lee, and H.Sebastian Seung, Algorithm for non-negative matrix factorization, NIPS, 2000 Tommy Huang, / : (Principal Component Analysis, PCA) , Medium, Apr, 2018.