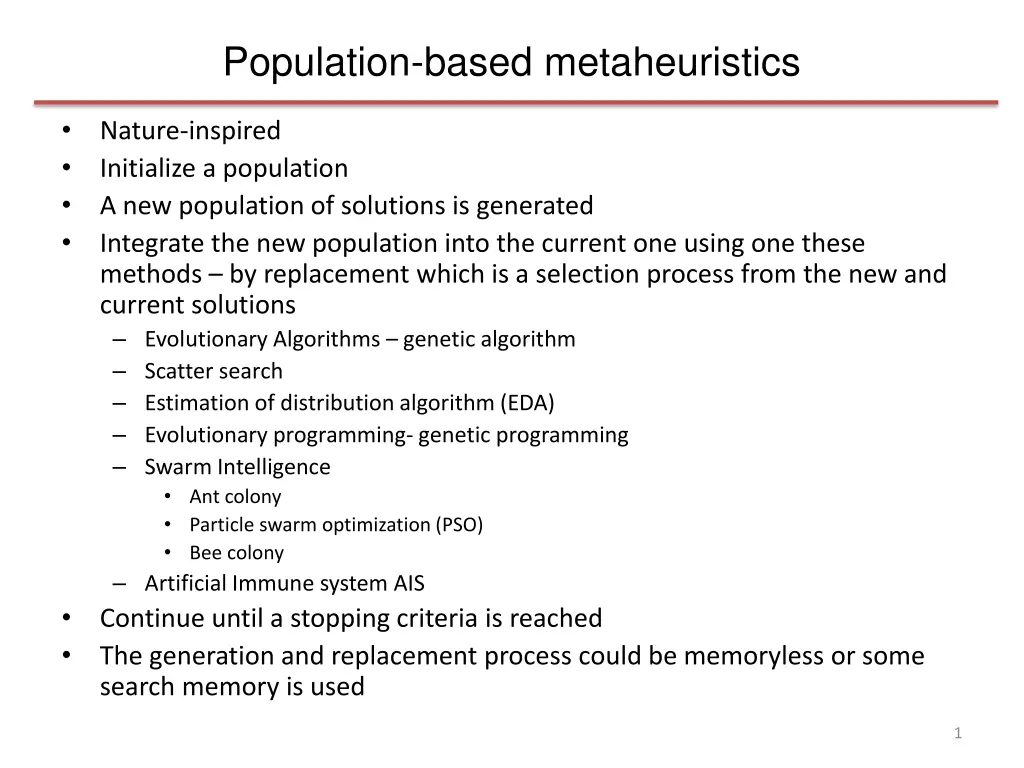

Metaheuristics in Evolutionary Algorithms and Genetic Programming

Discover the principles of population-based metaheuristics such as genetic algorithms and evolutionary programming. Explore common concepts in evolutionary algorithms and delve into genetic programming, a modern approach involving program-based solutions. Witness the evolution and application of genetic programming in tasks like symbolic regression.

Download Presentation

Please find below an Image/Link to download the presentation.

The content on the website is provided AS IS for your information and personal use only. It may not be sold, licensed, or shared on other websites without obtaining consent from the author. If you encounter any issues during the download, it is possible that the publisher has removed the file from their server.

You are allowed to download the files provided on this website for personal or commercial use, subject to the condition that they are used lawfully. All files are the property of their respective owners.

The content on the website is provided AS IS for your information and personal use only. It may not be sold, licensed, or shared on other websites without obtaining consent from the author.

E N D

Presentation Transcript

Population-based metaheuristics Nature-inspired Initialize a population A new population of solutions is generated Integrate the new population into the current one using one these methods by replacement which is a selection process from the new and current solutions Evolutionary Algorithms genetic algorithm Scatter search Estimation of distribution algorithm (EDA) Evolutionary programming- genetic programming Swarm Intelligence Ant colony Particle swarm optimization (PSO) Bee colony Artificial Immune system AIS Continue until a stopping criteria is reached The generation and replacement process could be memoryless or some search memory is used 1

Common concepts of Evolutionary Algorithm Main search components are Representation For Ex: in Genetic Algorithm GA, the encoded solution is called a chromosome. The decision variables within a solution are genes. The possible values of the genes are alleles and the position of the element (gene) within a chromosome is named locus. Ex TSP Chromosome = (_,_,_,_,_,_,_,_,_) Population Initialization Objective function, also called fitness in EA terminology. Selection strategy which parents are chosen for the next generation with the objective of better fitness Reproduction strategy mutation, crossover, merge, or from a shared memory Replacement strategy using the best of the old and new population survival of the fittest Stopping criteria 2

Genetic Programming More recent approach than GA (1992, Koza from MIT) Instead of fixed length strings as in GA, the individuals in GP are programs- nonlinear representation based on trees. Crossover is based on subtree exchange and mutation is based on random changes in the tree Parent selection is fitness proportional and replacement is generational. One of the issue is the uncontrolled growth of trees known as bloat Used in machine learning and data mining tasks such as prediction and classification. 3

Genetic Programming GP uses terminal (leaves of a tree) and function (interior nodes) sets The objective is to generate programs that perform a task The programs grow or shrink in size at each iteration as the search progresses 1) Generate an initial population of random compositions of the functions and terminals of the problem (computer programs). 2) Execute each program in the population and assign it a fitness value according to how well it solves the problem. 3) Create a new population of computer programs. i) Copy the best existing programs ii) Create new computer programs by mutation. iii) Create new computer programs by crossover 4) The best computer program that appeared in any generation, the best- so-far solution, is designated as the result of genetic programming 4

Genetic Programming Examples Symbolic regression problem (in statistics) Obj is to find a program that fits a given data set Discovers both the form of the model and its parameters. - - - the known value of the function Z for several set s of parameter x,y,z values. Minimize mean square error Functions (interior nodes) are math operators +,-, *,/ Terminals (leaves) are variables and constants Evaluate the program and check deviation from + + y * z x 5 * y x Z= (x*5)+(z+(x*y))+y 5

Genetic Programming Examples Artificial ant on the Santa Fe trail 32X32 grid Food pellets are mapped on some cells. Find a shortest path to maximize food intake (number of squares or dots) The if constraints are sensing functions for locating food. If food then do x or y. If no food then do m or n Terminal set would be forward, left or right movements Autonomous Navigation Control of UAVs Using Genetic Programming 6

Genetic Programming Examples A program to control flow of water through a network of water sprinklers There are several sprinklers on a golf course and several valves that can turn on/off to let water flow through them. The objective (fitness function) is to achieve correct amount of water output from each sprinkler and achieve uniform distribution (pressure). Measure fitness by placing measuring devices (e.g rain gauge) that record the amount of water collected then minimize the standard deviation among these devices. Opening too many valves at a time will drop the pressure in the system (some areas may not get water), and too few will result in excess flow due to high pressure. The terminal sets are sprinklers (leaves) and function sets interior nodes) are valves The solution is to generate programs that open and close valves to achieve the above objective. There are many solutions to evaluate 7

Swarm Intelligence Algorithms inspired by collective behavior of species Ants, bees, termites etc. Inspired from the social behavior of the species as they compete/forage for food Particles are Simple, non-sophisticated agents Agents cooperate by indirect communication Agents move around in the decision space. Non evolutionary, uses shared memory 8

Ant Colony Optimization Ants transport food and find shortest paths Use simple communicating methods Leave a chemical trail that is both olfactive and volatile The trail is also called a pheromone trail The trail guides the other ants toward the food Larger the amount of pheromone, larger the probability that a trail will be chosen The pheromone evaporates over time In optimization Initialize pheromone trails Construct solutions using the pheromone trails Pheromone update using generated solutions Evaporation reinforcement 9

Ant Colony Optimization The pheromone trails memorize the characteristics of good generated solutions, which guides the construction of new solutions The trails change dynamically to reflect acquired knowledge Evaporation phase: The trail decreases automatically. Each pheromone value is reduced by a fixed proportion The goal is to avoid a premature convergence to the good solution for all the ants Also helps in diversification Reinforcement Phase: the pheromone trail is updated according to the generated solution 10

ACO for TSP n cities, m ants G = (V,E) input graph dij is the distance between i and j For each ant randomly select an initial city i Set up the pheromone matrix tij for edge i,j tij represents the pheromone strength of an edge (i,j) in a tour (higher strength if more ants choose an edge) Initialize tij =1 Each ant will construct a tour in a stochastic way starting from city i An ant will select the next city j using the highest probability tik is the pheromone to another city k in S, and ???is desirability S is the set of not yet visited cities of the Graph G 0 ?represents influence of the pheromone 1 ? representing the influence of problem specific values such as the distance in TSP ??? = 1/dij (Desirability higher values are better) 11

ACO for TSP Continue until S is a null set for each of the m ants. Update the pheromone using the quality obj fnc f(?) of solution ? from each ant or a set of k best ants where k<m tij = tij + where is 1/ f(?) and (i, j ) are edges in solution ? Good tours emerge as tij is updated by the ants The pheromone is evaporated by tij = (1-?)tij where 0 < ?< 1 is the reduction rate of the pheromone Repeat the process several times with a set of new ants ACO is better than genetic algorithm because it is adaptable and can adapt to changes in dij Useful in network routing and transportation problems. 12

Particle Swarm Optimization Stochastic p-metaheuristics PSO does not use the gradient of the problem being optimized Mimics social behavior of swarms such as birds, fish that go after food A coordinated behavior using local movement emerges without any central control A swarm consists of N particles flying in D dimensional space (D refers to the # of iterations for the algorithm, t= 1, ..,t=D) Each particle i is a candidate solution and represented by a vector xi position in the decision space Each particle has a velocity vi that gives direction and a value (called step) that updates the position xi Each particle successfully adjusts its position toward the global optimum using the following two factors 13

PSO 1) the best position visited by itself pi = best of (xi(1), xi(2), xi(t-1), xi(t)), xi(D))) at a given t 2) the best position visited by the whole swarm pg = best of (xg(1), ..xg(t-1), xi(t)), xi(D))) at a given t Neighborhood- a topology is associated with the swarm Global the whole population complete graph Local The neighborhood is a set of directly connected particles, a ring formation where every particle is connected to two others At each iteration t in D, each particle updates Velocity vi(t) = w vi(t-1) + r1 C1 (pi - xi(t-1)) + r2 C2 (pg - xi(t-1)) where r1 , r2 are two random variables on [0,1]. Constant C1 is the cognitive learning factor that represents the attraction that a particle has towards its own success and C2 is the social learning factor that represents the attraction that a particle has towards the success of its neighbors. w is a weight on the previous velocity. A large w will encourage diversification and a small value will encourage intensification 14

PSO Position update at iteration t xi(t) = xi(t-1) + vi(t) Update the best found particles For a minimization problem If f(pg) < f(xi) < f(pi) then pi = xi If f(xi) < f(pg) then pg = xi 15