Monte Carlo Integration in Computer Graphics: Overview and Applications

Explore Monte Carlo Integration in computer graphics, from motivation to algorithms, advantages, and disadvantages. Learn about its application in rendering complex shading effects and how it provides robust solutions for irregular domains and high-dimensional integrals. Understand the basics of probability and sampling in Monte Carlo estimation of integrals.

Presentation Transcript

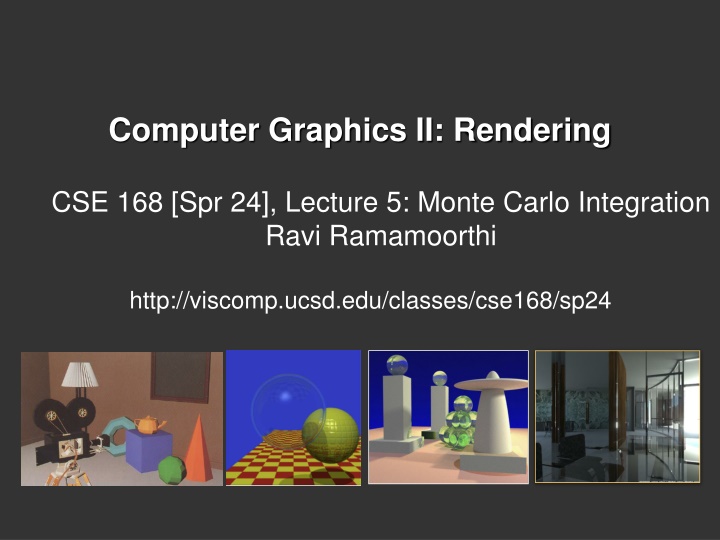

Computer Graphics II: Rendering
CSE 168 [Spr 24], Lecture 5: Monte Carlo Integration
Ravi Ramamoorthi
http://viscomp.ucsd.edu/classes/cse168/sp24

To Do
- Homework 2 (Direct Lighting) due Apr 25
- Assignment is on UCSD Online
- START EARLY (NOW)

Motivation
- Rendering = integration
  - Reflectance equation: integrate over incident illumination
  - Rendering equation: integral equation
- Many sophisticated shading effects involve integrals
  - Antialiasing
  - Soft shadows
  - Indirect illumination
  - Caustics

Monte Carlo
- Algorithms based on statistical sampling and random numbers
- Coined at the beginning of the 1940s; originally used for neutron transport and nuclear simulations
- Von Neumann, Ulam, Metropolis
- Canonical example: a 1D integral done numerically
  - Choose a set of random points at which to evaluate the function, then average (expectation or statistical average)

Monte Carlo Algorithms
- Advantages
  - Robust for complex integrals in computer graphics (irregular domains, shadow discontinuities, and so on)
  - Efficient for high-dimensional integrals (common in graphics: time, light source directions, and so on)
  - Quite simple to implement
  - Work for general scenes and surfaces
  - Easy to reason about (but care must be taken regarding statistical bias)
- Disadvantages
  - Noisy
  - Slow (many samples needed for convergence)
  - Not used if alternative analytic approaches exist (but those are rare)

Outline
- Motivation
- Overview, 1D integration
- Basic probability and sampling
- Monte Carlo estimation of integrals

Integration in 1D

$$\int_0^1 f(x)\,dx = \;?$$

[Figure labels: $f(x)$, $x = 1$]

Slide courtesy of Peter Shirley

We can approximate

$$\int_0^1 f(x)\,dx \approx \int_0^1 g(x)\,dx$$

Standard integration methods, like the trapezoidal rule and Simpson's rule, replace $f(x)$ with a simpler function $g(x)$.
- Advantages
  - Converges fast for smooth integrands
  - Deterministic
- Disadvantages
  - Exponential complexity in many dimensions
  - Slow convergence for discontinuities

[Figure labels: $g(x)$, $f(x)$, $x = 1$]

Slide courtesy of Peter Shirley
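
As a point of comparison for the deterministic approach, here is a minimal sketch of the composite trapezoidal rule. The function name `trapezoid` and the test integrand are illustrative choices, not taken from the lecture. In $d$ dimensions a tensor-product grid with $n$ points per axis needs $n^d$ evaluations, which is the exponential cost listed above.

```python
def trapezoid(f, a, b, n):
    """Composite trapezoidal rule with n equal-width panels on [a, b]."""
    h = (b - a) / n
    total = 0.5 * (f(a) + f(b))      # endpoints carry half weight
    for i in range(1, n):
        total += f(a + i * h)        # interior grid points carry full weight
    return h * total


if __name__ == "__main__":
    # Illustrative integrand: x^2 on [0, 1], whose exact integral is 1/3.
    print(trapezoid(lambda x: x * x, 0.0, 1.0, 1000))
```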

Or we can average

$$\int_0^1 f(x)\,dx = E(f(x))$$

[Figure labels: $f(x)$, $E(f(x))$, $x = 1$]

Slide courtesy of Peter Shirley

Estimating the average

$$\int_0^1 f(x)\,dx \approx \frac{1}{N}\sum_{i=1}^{N} f(x_i)$$

Monte Carlo methods (randomly choose samples)
- Advantages
  - Robust for discontinuities
  - Converges reasonably for large dimensions
  - Can handle complex geometry and integrals
  - Relatively simple to implement and reason about

[Figure labels: $f(x)$, $E(f(x))$, $x_1$, $x_N$]

Slide courtesy of Peter Shirley
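
The estimator on this slide can be sketched in a few lines of Python. The function name `mc_estimate_unit`, the optional `rng` argument, and the test integrand are assumptions made for illustration. Only the standard-library `random` module is used.

```python
import random


def mc_estimate_unit(f, n, rng=None):
    """Estimate the integral of f over [0, 1] by averaging f at n uniform random points."""
    if rng is None:
        rng = random.Random()
    total = 0.0
    for _ in range(n):
        x = rng.random()             # uniform sample in [0, 1)
        total += f(x)
    return total / n


if __name__ == "__main__":
    # Illustrative integrand: x^2 on [0, 1], whose exact integral is 1/3.
    print(mc_estimate_unit(lambda x: x * x, 100_000, random.Random(0)))
```

Passing a seeded `random.Random` makes a run repeatable, which helps when comparing estimators.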

Other Domains

$$\int_a^b f(x)\,dx \approx \frac{b-a}{N}\sum_{i=1}^{N} f(x_i)$$

[Figure labels: $f(x)$, $\langle f \rangle_{ab}$, $x = a$, $x = b$]

Slide courtesy of Peter Shirley
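
For a general interval the only change is the factor $(b-a)$, the length of the domain. A minimal sketch, where the name `mc_integrate` and the sine test case are illustrative assumptions:

```python
import math
import random


def mc_integrate(f, a, b, n, rng=None):
    """Estimate the integral of f over [a, b] as (b - a) times the sample mean of f."""
    if rng is None:
        rng = random.Random()
    total = 0.0
    for _ in range(n):
        total += f(rng.uniform(a, b))    # uniform sample on the interval
    return (b - a) * total / n


if __name__ == "__main__":
    # Illustrative check: the integral of sin(x) over [0, pi] is exactly 2.
    print(mc_integrate(math.sin, 0.0, math.pi, 100_000, random.Random(0)))
```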

Multidimensional Domains

Same ideas apply for integration over
- Pixel areas
- Surfaces
- Projected areas
- Directions
- Camera apertures
- Time
- Paths

$$\int_{\text{UGLY}} f(x)\,dx \approx \frac{1}{N}\sum_{i=1}^{N} f(x_i)$$

[Figure labels: Eye, Pixel, $x$, Surface]
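
As one hedged illustration of the pixel-area case, the sketch below estimates how much of a unit-square pixel is covered by a shape, the kind of quantity antialiasing needs. The names `pixel_coverage` and `in_quarter_disk`, and the choice of a unit disk centred on the pixel corner, are hypothetical and not from the lecture. The pixel has area 1, so no extra volume factor appears.

```python
import math
import random


def pixel_coverage(inside, n, rng=None):
    """Estimate the fraction of the unit-square pixel [0, 1]^2 where inside(x, y) is true."""
    if rng is None:
        rng = random.Random()
    hits = 0
    for _ in range(n):
        x, y = rng.random(), rng.random()    # uniform point in the pixel
        if inside(x, y):
            hits += 1
    return hits / n


def in_quarter_disk(x, y):
    # Hypothetical shape: unit disk centred on the pixel corner (0, 0).
    return x * x + y * y <= 1.0


if __name__ == "__main__":
    # The exact coverage for this shape is pi / 4.
    print(pixel_coverage(in_quarter_disk, 100_000, random.Random(1)), math.pi / 4)
```

The discontinuous integrand (0 or 1) is handled with no special treatment, which is the robustness the slides attribute to Monte Carlo.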

Outline
- Motivation
- Overview, 1D integration
- Basic probability and sampling
- Monte Carlo estimation of integrals

Random Variables
- Describe the possible outcomes of an experiment
- In the discrete case, e.g. the value of a die roll [x = 1-6]
- A probability p is associated with each x (1/6 for a die)
- The continuous case is the obvious extension

Expected Value

Expectation, discrete:

$$E(x) = \sum_{i=1}^{n} p_i x_i$$

Continuous:

$$E(x) = \int_0^1 p(x) f(x)\,dx$$

For the dice example:

$$E(x) = \sum_{i=1}^{n} \frac{1}{6}\, x_i = \frac{1}{6}\,(1+2+3+4+5+6) = 3.5$$

Sampling Techniques

Problem: how do we generate random points/directions during path tracing?
- Non-rectilinear domains
- Importance (BRDF)
- Stratified

[Figure labels: Eye, $x$, Surface]

Generating Random Points
- Uniform distribution: use a random number generator

[Figure: axis labelled Probability, from 0 to 1]

Generating Random Points
- Specific probability distribution:
  - Function inversion
  - Rejection
  - Metropolis

[Figure: axis labelled Probability, from 0 to 1]

Common Operations
- Want to sample probability distributions
  - Draw samples distributed according to probability
  - Useful for integration, picking important regions, etc.
- Common distributions
  - Disk or circle
  - Uniform
  - Upper hemisphere for visibility
  - Area luminaire
  - Complex lighting like an environment map
  - Complex reflectance like a BRDF
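
For the disk case in the list above, one common construction (a sketch, not necessarily the one used in the course) maps two uniform random numbers to polar coordinates. The function name `sample_unit_disk` is an assumption. Taking the square root of the first number is what keeps the density uniform over area; using it directly as the radius would cluster samples near the centre.

```python
import math
import random


def sample_unit_disk(rng):
    """Return a point (x, y) uniformly distributed over the unit disk."""
    u1, u2 = rng.random(), rng.random()
    r = math.sqrt(u1)                # sqrt makes the density uniform in area
    theta = 2.0 * math.pi * u2       # angle is uniform on [0, 2*pi)
    return r * math.cos(theta), r * math.sin(theta)


if __name__ == "__main__":
    rng = random.Random(2)
    points = [sample_unit_disk(rng) for _ in range(5)]
    print(points)
```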

Generating Random Points

[Figure: axis labelled Cumulative Probability, from 0 to 1]
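
The cumulative probability is what function inversion uses: draw a uniform number $u$ and solve $P(x) = u$ for $x$. A minimal sketch for the hypothetical density $p(x) = 2x$ on $[0, 1]$, whose cumulative distribution is $P(x) = x^2$ (both the density and the name `sample_linear` are illustrative assumptions):

```python
import math
import random


def sample_linear(rng):
    """Draw x in [0, 1] with density p(x) = 2x by inverting the CDF P(x) = x^2."""
    u = rng.random()          # uniform in [0, 1)
    return math.sqrt(u)       # solve P(x) = u for x


if __name__ == "__main__":
    rng = random.Random(0)
    samples = [sample_linear(rng) for _ in range(100_000)]
    # The mean of this density is 2/3.
    print(sum(samples) / len(samples))
```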

Rejection Sampling

[Figure: candidate points marked x scattered against an axis labelled Probability, from 0 to 1]
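
A minimal sketch of rejection sampling on $[0, 1]$: draw a candidate position and a uniform height, and keep the candidate only if the height falls under the density curve. The names `rejection_sample` and `beta22`, and the example density $6x(1-x)$, are illustrative assumptions. The caller must supply an upper bound `pdf_max` on the density.

```python
import random


def rejection_sample(pdf, pdf_max, rng):
    """Draw x in [0, 1] distributed according to pdf; pdf_max must bound pdf from above."""
    while True:
        x = rng.random()                 # candidate position
        y = rng.random() * pdf_max       # uniform height inside the bounding box
        if y < pdf(x):                   # accept only points under the curve
            return x


def beta22(x):
    # Hypothetical target density: 6x(1 - x), which peaks at 1.5 when x = 0.5.
    return 6.0 * x * (1.0 - x)


if __name__ == "__main__":
    rng = random.Random(3)
    samples = [rejection_sample(beta22, 1.5, rng) for _ in range(50_000)]
    # This density is symmetric about 0.5, so the mean should be near 0.5.
    print(sum(samples) / len(samples))
```

The tighter the bound, the fewer candidates are thrown away; here the acceptance rate is the area under the curve divided by the box area, 1/1.5.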

Outline
- Motivation
- Overview, 1D integration
- Basic probability and sampling
- Monte Carlo estimation of integrals

Monte Carlo Path Tracing
- Big diffuse light source, 20 minutes
- Motivation for rendering in graphics: covered in detail in next lecture

Jensen

Monte Carlo Path Tracing
- 1000 paths/pixel

Jensen

Estimating the average

$$\int_0^1 f(x)\,dx \approx \frac{1}{N}\sum_{i=1}^{N} f(x_i)$$

Monte Carlo methods (randomly choose samples)
- Advantages
  - Robust for discontinuities
  - Converges reasonably for large dimensions
  - Can handle complex geometry and integrals
  - Relatively simple to implement and reason about

[Figure labels: $f(x)$, $E(f(x))$, $x_1$, $x_N$]

Slide courtesy of Peter Shirley

Variance

$$\mathrm{Var}[f(x)] = \frac{1}{N}\sum_{i=1}^{N} \left[f(x_i) - E(f(x))\right]^2$$

[Figure labels: $E(f(x))$, $x_1$, $x_N$]

Variance for Dice Example?
- Work out on board (variance for a single dice roll)
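
The board work is not part of the transcript. A sketch of the standard calculation for one fair six-sided die, using the identity $\mathrm{Var}(x) = E(x^2) - E(x)^2$ and the mean of 3.5:

$$E(x^2) = \frac{1}{6}\,(1 + 4 + 9 + 16 + 25 + 36) = \frac{91}{6}$$

$$\mathrm{Var}(x) = \frac{91}{6} - 3.5^2 = \frac{182}{12} - \frac{147}{12} = \frac{35}{12} \approx 2.92$$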

Variance

$$\mathrm{Var}[E(f(x))] = \frac{1}{N}\,\mathrm{Var}[f(x)]$$

- Variance decreases as $1/N$
- Error decreases as $1/\sqrt{N}$

[Figure labels: $E(f(x))$, $x_1$, $x_N$]
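
The $1/N$ behaviour can be checked empirically by running the estimator many times and measuring the spread of the results. This is a minimal sketch; the names `mc_estimate` and `estimator_variance`, the integrand, and the sample counts are all illustrative assumptions. Quadrupling the number of samples should cut the variance by roughly a factor of four, and so halve the error.

```python
import random
import statistics


def mc_estimate(f, n, rng):
    """Plain Monte Carlo estimate of the integral of f over [0, 1]."""
    return sum(f(rng.random()) for _ in range(n)) / n


def estimator_variance(f, n, trials, rng):
    """Sample variance of the n-sample estimator over repeated independent runs."""
    estimates = [mc_estimate(f, n, rng) for _ in range(trials)]
    return statistics.variance(estimates)


if __name__ == "__main__":
    rng = random.Random(4)
    f = lambda x: x * x
    v_small = estimator_variance(f, 100, 1000, rng)
    v_large = estimator_variance(f, 400, 1000, rng)
    print(v_small / v_large)   # expected to be roughly 4, up to statistical noise
```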

Variance
- Problem: variance decreases only as $1/N$
- Increasing the number of samples removes noise slowly

[Figure labels: $E(f(x))$, $x_1$, $x_N$]

Variance Reduction Techniques
- Importance sampling
- Stratified sampling

$$\int_0^1 f(x)\,dx \approx \frac{1}{N}\sum_{i=1}^{N} f(x_i)$$

Importance Sampling

Put more samples where $f(x)$ is bigger:

$$\int_\Omega f(x)\,dx \approx \frac{1}{N}\sum_{i=1}^{N} Y_i, \qquad Y_i = \frac{f(x_i)}{p(x_i)}$$

[Figure labels: $E(f(x))$, $x_1$, $x_N$]

Importance Sampling

This is still unbiased:

$$E[Y_i] = \int_\Omega Y(x)\,p(x)\,dx = \int_\Omega \frac{f(x)}{p(x)}\,p(x)\,dx = \int_\Omega f(x)\,dx \quad \text{for all } N$$

[Figure labels: $E(f(x))$, $x_1$, $x_N$]

Importance Sampling

Zero variance if $p(x) \propto f(x)$:

$$p(x) = c\,f(x), \qquad Y_i = \frac{f(x_i)}{p(x_i)} = \frac{1}{c}, \qquad \mathrm{Var}(Y) = 0$$

Less variance with better importance sampling.

[Figure labels: $E(f(x))$, $x_1$, $x_N$]
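
A minimal sketch of the importance-sampled estimator $\frac{1}{N}\sum Y_i$ with $Y_i = f(x_i)/p(x_i)$. The integrand $3x^2$, the two densities, and all function names are hypothetical choices for illustration. With $p(x) = 2x$ the samples already favour the region where $f$ is large. With $p(x) = 3x^2 = f(x)$ (so $c = 1$), every $Y_i$ equals 1 and the variance vanishes.

```python
import random


def importance_estimate(f, pdf, sampler, n, rng):
    """Average Y_i = f(x_i) / p(x_i), with each x_i drawn from p via sampler(rng)."""
    total = 0.0
    for _ in range(n):
        x = sampler(rng)
        total += f(x) / pdf(x)
    return total / n


def f(x):
    return 3.0 * x * x            # hypothetical integrand; exact integral on [0, 1] is 1


def linear_pdf(x):
    return 2.0 * x                # p(x) = 2x, roughly follows the shape of f


def sample_linear(rng):
    u = 1.0 - rng.random()        # in (0, 1], so the pdf is never zero at a sample
    return u ** 0.5               # inverts the CDF x^2


def sample_proportional(rng):
    u = 1.0 - rng.random()
    return u ** (1.0 / 3.0)       # inverts the CDF x^3 of p(x) = 3x^2


if __name__ == "__main__":
    print(importance_estimate(f, linear_pdf, sample_linear, 20_000, random.Random(5)))
    print(importance_estimate(f, f, sample_proportional, 1_000, random.Random(6)))
```

In practice the zero-variance density is unavailable, since normalising it requires the very integral being estimated; the aim is a density that is easy to sample and close in shape to $f$.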

Stratified Sampling
- Estimate subdomains separately

[Figure labels: $E_k(f(x))$, $x_1$, $x_N$]

Arvo

Stratified Sampling

This is still unbiased:

$$F_N = \frac{1}{N}\sum_{i=1}^{N} f(x_i) = \frac{1}{N}\sum_{k=1}^{M} N_k F_k$$

[Figure labels: $E_k(f(x))$, $x_1$, $x_N$]

Stratified Sampling

Less overall variance if there is less variance in the subdomains:

$$\mathrm{Var}[F_N] = \frac{1}{N^2}\sum_{k=1}^{M} N_k\,\mathrm{Var}[F_k]$$

[Figure labels: $E_k(f(x))$, $x_1$, $x_N$]
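
A minimal sketch of stratified sampling on $[0, 1]$, assuming the simplest layout: $N$ equal-width strata with one jittered sample in each (so $M = N$ and every stratum holds a single sample). The function name `stratified_estimate` and the test integrand are illustrative assumptions.

```python
import random


def stratified_estimate(f, n, rng):
    """Estimate the integral of f over [0, 1] with one jittered sample per stratum."""
    total = 0.0
    for k in range(n):
        x = (k + rng.random()) / n    # uniform within stratum [k/n, (k+1)/n)
        total += f(x)
    return total / n


if __name__ == "__main__":
    # Illustrative integrand: x^2 on [0, 1], whose exact integral is 1/3.
    print(stratified_estimate(lambda x: x * x, 1000, random.Random(7)))
```

For a smooth integrand, each stratum sees very little variation in $f$, so the estimate is typically far closer to the exact value than plain random sampling with the same number of samples.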