Multiple Regression and Regularization Methods in Linear Regression

Explore evaluating the regression fit, validating assumptions, feature selection, (multi)collinearity, Lasso, Ridge, splines, and more in linear regression. Learn why predictors should not be considered separately and how Ridge regression mitigates multicollinearity.

Presentation Transcript

B4M36SAN: Linear regression III, Anh Vu Le

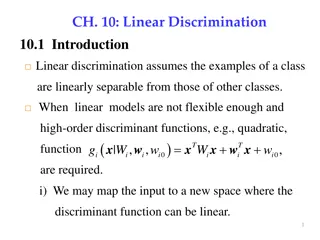

Outline. Two lectures ago: evaluating the regression fit from the summary (F-test, R²). Last time: assumptions of linear regression and their graphical validation; simple polynomial regression. Today: multiple regression and feature selection methods; regularization methods (Lasso, Ridge).
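For reference, these are the standard definitions behind the two fit statistics reported in the regression summary, written for n observations, p predictors, fitted values ŷᵢ and sample mean ȳ (this notation is assumed here, not taken from the slides):

$$
R^2 = 1 - \frac{\mathrm{RSS}}{\mathrm{TSS}}, \qquad
\mathrm{RSS} = \sum_{i=1}^{n} (y_i - \hat{y}_i)^2, \qquad
\mathrm{TSS} = \sum_{i=1}^{n} (y_i - \bar{y})^2
$$

$$
F = \frac{(\mathrm{TSS} - \mathrm{RSS})/p}{\mathrm{RSS}/(n - p - 1)} \sim F_{p,\, n-p-1} \quad \text{under } H_0: \beta_1 = \dots = \beta_p = 0
$$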

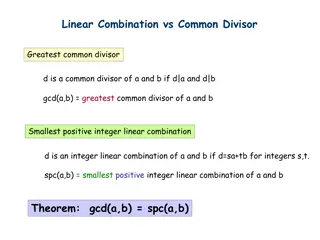

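Why we shouldn't consider predictors separately. Suppose that in reality black does not affect house prices (medv), but black correlates with lstat. You want to assess the importance of the predictors by fitting each one on its own: lm(medv ~ black) and lm(medv ~ lstat). Because black correlates with lstat, lm(medv ~ black) can show a clearly non-zero slope for black even though black has no effect: it simply picks up part of the effect of lstat. Interpretation of multiple regression coefficients: in lm(medv ~ lstat + black + ...), each coefficient is the expected change in medv per unit change in that predictor while the other predictors are held fixed, so the coefficient of black is no longer driven by lstat. (Multi)collinearity: to be continued.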
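The argument above can be checked on simulated data. The slides use R's `lm()`; this is a minimal NumPy sketch, assuming synthetic stand-ins named `lstat`, `black` and `medv` (not the real housing data; the coefficients and noise levels are arbitrary). By construction `black` never enters `medv`, yet the simple regression gives it a slope, while the multiple regression puts that effect back on `lstat`. The helper `ols` is hypothetical.

```python
import numpy as np

def ols(X, y):
    """Least-squares fit with an intercept column prepended.

    Returns [intercept, slope_1, slope_2, ...]."""
    X1 = np.column_stack([np.ones(len(y)), X])
    coef, *_ = np.linalg.lstsq(X1, y, rcond=None)
    return coef

rng = np.random.default_rng(0)
n = 500
lstat = rng.normal(12.0, 7.0, n)                     # synthetic stand-in for lstat
black = -2.0 * lstat + rng.normal(0.0, 5.0, n)       # correlated with lstat
medv = 35.0 - 1.0 * lstat + rng.normal(0.0, 3.0, n)  # black does NOT appear here

# Analogue of lm(medv ~ black): black looks important on its own
simple_black = ols(black, medv)[1]

# Analogue of lm(medv ~ lstat + black): black's slope collapses towards 0
multiple = ols(np.column_stack([lstat, black]), medv)

print(f"simple slope of black:   {simple_black:.3f}")
print(f"multiple slope of lstat: {multiple[1]:.3f}")
print(f"multiple slope of black: {multiple[2]:.3f}")
```

The simple slope of black is non-zero only because black is partly a noisy copy of lstat; holding lstat fixed removes that route.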

Image sources: "machine learning - R: Plotting lasso beta coefficients", Stack Overflow; https://xkcd.com/657/large/
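The Stack Overflow source above concerns plotting Lasso coefficient paths. To illustrate how Lasso performs feature selection, here is a minimal sketch of cyclic coordinate descent for the objective (1/(2n))·‖y − Xb‖² + λ‖b‖₁, assuming standardized columns of X and a centered y (so no intercept). The soft-thresholding step sets a coefficient exactly to zero whenever its correlation with the current residual is below λ, which is where the selection comes from. The function names, data, and λ grid are hypothetical; this is not the glmnet implementation.

```python
import numpy as np

def soft_threshold(z, t):
    """Shrink z towards 0 by t; return exactly 0 if |z| <= t."""
    return np.sign(z) * max(abs(z) - t, 0.0)

def lasso_cd(X, y, lam, n_iter=200):
    """Minimise (1/(2n))||y - X b||^2 + lam * ||b||_1 by cyclic coordinate descent.

    Assumes standardised columns of X and centred y (no intercept)."""
    n, p = X.shape
    b = np.zeros(p)
    col_sq = (X ** 2).sum(axis=0) / n   # equals 1 for standardised columns
    r = y - X @ b                       # current residual
    for _ in range(n_iter):
        for j in range(p):
            r += X[:, j] * b[j]         # remove feature j's contribution
            rho = X[:, j] @ r / n       # correlation of feature j with partial residual
            b[j] = soft_threshold(rho, lam) / col_sq[j]
            r -= X[:, j] * b[j]         # add it back with the updated value
    return b

rng = np.random.default_rng(1)
n, p = 200, 5
X = rng.normal(size=(n, p))
X = (X - X.mean(axis=0)) / X.std(axis=0)          # standardise columns
beta_true = np.array([3.0, -2.0, 0.0, 0.0, 0.0])  # only two relevant features
y = X @ beta_true + rng.normal(0.0, 1.0, n)
y = y - y.mean()                                  # centre response

# A crude coefficient path: larger lambda -> more coefficients exactly 0
for lam in [0.01, 0.5, 1.0, 2.5, 5.0]:
    print(lam, np.round(lasso_cd(X, y, lam), 3))
```

The trade-off is the choice of λ: a larger λ gives a sparser, more biased model with lower variance; in practice λ is usually chosen by cross-validation.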

Previously on (multi)collinearity: [Figure: panels with axes black and lstat]. How ridge regression treats multicollinearity (source: Driver.dvi, unica.it).
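A minimal sketch of why Ridge helps, assuming two synthetic, nearly collinear predictors (standardized, centered y, no intercept). Ridge minimizes ‖y − Xb‖² + λ‖b‖², whose solution is (XᵀX + λI)⁻¹Xᵀy. Near-collinearity makes one eigenvalue of XᵀX close to zero, so ordinary least squares (λ = 0) can return large coefficients of opposite sign; adding λI raises every eigenvalue by λ, which lowers the condition number and shrinks the unstable direction. The helper `ridge` and all data are hypothetical.

```python
import numpy as np

def ridge(X, y, lam):
    """Closed-form ridge: solve (X^T X + lam * I) b = X^T y.

    Assumes standardised X and centred y, so no intercept is fitted;
    lam = 0 gives ordinary least squares."""
    p = X.shape[1]
    return np.linalg.solve(X.T @ X + lam * np.eye(p), X.T @ y)

rng = np.random.default_rng(2)
n = 100
x1 = rng.normal(size=n)
x2 = x1 + rng.normal(scale=0.01, size=n)   # nearly collinear copy of x1
X = np.column_stack([x1, x2])
X = (X - X.mean(axis=0)) / X.std(axis=0)   # standardise columns
y = X[:, 0] + X[:, 1] + rng.normal(size=n)
y = y - y.mean()                           # centre response

b_ols = ridge(X, y, 0.0)
b_ridge = ridge(X, y, 10.0)
print("cond(X^T X):       ", np.linalg.cond(X.T @ X))
print("cond(X^T X + 10 I):", np.linalg.cond(X.T @ X + 10.0 * np.eye(2)))
print("OLS coefficients:  ", b_ols)
print("ridge coefficients:", b_ridge)
```

The ridge coefficients end up nearly equal, splitting the shared effect between the two correlated predictors instead of letting one cancel the other.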

Summary. How do the coefficients of multiple regression differ in meaning from those of simple regression? How does Lasso perform feature selection, and what trade-off needs to be discussed? What is multicollinearity and how does it manifest itself? How does Ridge help to mitigate these issues? What is the connection between splines and polynomial regression, and what are the differences?
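On the last question, a minimal sketch, assuming a cubic regression spline in the truncated power basis 1, x, x², x³, (x − k)³₊ for each knot k: the spline is still ordinary linear regression, only on a basis that extends the cubic polynomial basis with one local term per knot. A global polynomial changes everywhere when any coefficient changes; the spline adds flexibility only to the right of each knot while staying smooth (continuous up to the second derivative) at the knots. The knots, data, and helper names are hypothetical; R's `splines::bs()` uses a numerically better-behaved B-spline basis for the same kind of model.

```python
import numpy as np

def poly_basis(x, degree):
    """Columns 1, x, ..., x^degree (global polynomial regression)."""
    return np.column_stack([x ** d for d in range(degree + 1)])

def cubic_spline_basis(x, knots):
    """Truncated power basis: cubic polynomial plus (x - k)^3_+ for each knot."""
    trunc = [np.maximum(x - k, 0.0) ** 3 for k in knots]
    return np.column_stack([poly_basis(x, 3)] + trunc)

def fit_rss(B, y):
    """Least-squares fit on basis matrix B; return coefficients and RSS."""
    coef, *_ = np.linalg.lstsq(B, y, rcond=None)
    resid = y - B @ coef
    return coef, float(resid @ resid)

rng = np.random.default_rng(3)
x = np.sort(rng.uniform(0.0, 10.0, 300))
y = np.sin(x) + rng.normal(0.0, 0.3, x.size)

knots = [2.5, 5.0, 7.5]
_, rss_poly3 = fit_rss(poly_basis(x, 3), y)
_, rss_spline = fit_rss(cubic_spline_basis(x, knots), y)
_, rss_poly6 = fit_rss(poly_basis(x, 6), y)   # same number of columns (7) as the spline
print(f"cubic polynomial RSS:  {rss_poly3:.2f}")
print(f"cubic spline RSS:      {rss_spline:.2f}")
print(f"degree-6 polynomial:   {rss_poly6:.2f}")
```

Because the cubic polynomial basis is a subset of the spline basis, the spline's training RSS can never exceed that of the plain cubic fit; with no knots the two models coincide.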