Multiplication-Avoiding Variant of Power Iteration with Applications

This study introduces a novel approach called Multiplication-Avoiding Variant of Power Iteration (MAPI) and its applications in the context of Reproducing Kernel Hilbert Space. The method is designed to efficiently compute dominant eigenvectors without conventional matrix multiplications, utilizing sign and minimum calculations. The research presents experimental results and demonstrates the effectiveness of MAPI in energy-efficient power iteration compared to traditional methods.

Download Presentation

Please find below an Image/Link to download the presentation.

The content on the website is provided AS IS for your information and personal use only. It may not be sold, licensed, or shared on other websites without obtaining consent from the author.If you encounter any issues during the download, it is possible that the publisher has removed the file from their server.

You are allowed to download the files provided on this website for personal or commercial use, subject to the condition that they are used lawfully. All files are the property of their respective owners.

The content on the website is provided AS IS for your information and personal use only. It may not be sold, licensed, or shared on other websites without obtaining consent from the author.

E N D

Presentation Transcript

Multiplication-Avoiding Variant of Power Iteration with Applications Hongyi Pan*, Diaa Badawi, Runxuan Miao, Erdem Koyuncu, Ahmet Enis Cetin University of Illinois at Chicago *hpan21@uic.edu

Outline Introduction: What is power iteration? Multiplication-avoiding vector product (MAVP) Multiplication-avoiding power iteration (MAPI) Experimental results Conclusion

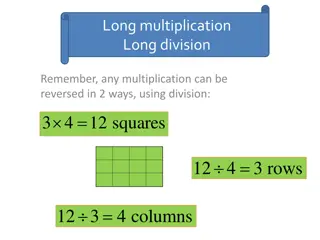

Introduction: What is power iteration? Regular Power Iteration: ?? ? ?? ?: a diagonalizable matrix, for example, covariance matrix ?: a vector, converges to the dominant eigenvector of ?

L1-Kernel Operator based on Sign and Minimum Let ? and ? are two real numbers, the min-1 operation is defined as ? ? = sign ? ? min ? , ? Multiplication-free (3 -1 = -1, 2 3=2) Vector form: ?? ? = sign ?? ??min ??, ?? ? Kernel: K ?,? =< ? ? ,? ? >= ?sign ?? ??min ??, ?? Reproducing Kernel Hilbert Space (RKHS) Theorem: ?? ?? a Mercer-type kernel (positive semi-definite Gram matrix) L1-norm Property: ?? ? = ?sign ?? ??min ??, ?? = ? 1

Another Mercer-type Kernel ? ? = sign ? ? min ? , ? Let ? and ? are two real numbers, the min-2 operation is defined as ? m? = min ? , ? , if sign ? = sign(?) otherwise 0, Vector form: ?? m? = ?(sign ?? = sign(??))min ??, ?? ? K(x,y)=< ??? ,??? >= ??(sign ?? = sign(??))min ??, ?? L1 norm Property: ?? ?? = ?min ??, ?? = ? 1

Matrix Form ??] and ? = [?1 ??]. Let ? = [?1 ? ?1 ?? ? ?? ?? ?1 ?1 , where { , m}. ?? ? = ? ?1 ? ?? Similar to the regular matrix product ??1 ?? ??? ?? ?1 ?1 ??? = ??1 ??? 1 ? 1??? Covariance matrix: ? = 1 ? 1? ??, where { , m}. Our covariance matrices: ? =

Multiplication-Avoiding Power Iteration (MAPI) in the Reproducing Kernel Hilbert Space Regular Power Iteration: ??? ??? ?t+1= Multiplication-Avoiding Power Iteration: ? ?? ? ??1, where { , m}. ?t+1= It converges to a pseudo-eigenvector in our simulation studies. Energy efficient power iteration because we perform one division in each iteration. We have only sign and minimum calculations.

Experiment 1: Image Reconstruction Min1: ? ? = sign ? ? min ? , ? Min2: ? m? = min ? , ? , if sign ? = sign(?) 0, otherwise

Experiment 2: Synthetic Dataset We perform eigen-decomposition and Min1-PI on a synthetic dataset. 2 ??1 ?? ?? Plot iteration time ? VS 1 . ??from PI, ?1from eig. After 100 iterations, ??= [0.1433,0.4171, 0.1166,0.3863,0.1285, 0.1315, 0.4330, 0.2097, 0.5770, 0.2106] from the regular power iteration (RPI) and ??= 0.1649,0.4166, 0.1371,0.3887,0.1480, 0.1508, 0.4306, 0.2359, 0.5362, 0.2370 from the multiplication-avoiding power iteration (MAPI). When we sort them from the largest to the smallest, both vectors produce the same ranks {2,4,5,3,6,1,8,10,7,9}.

Experiment 3: PageRank Algorithm We apply one L2-normalization after the final iteration for easier comparison. After 20 iterations, from the regular power iteration (RPI), ??= [0.6335,0.3452,0.4399,0.5348], and from the multiplication-avoiding power iteration (MAPI), ??= [0.5675,0.3486,0.5201,0.5347]. Consequently, though the weights are different, both index ranks are {1, 4, 3, 2}.

Experiment 3:PageRank Algorithm After 10 iterations, from Gnutella08, the top-10 ranks of the indices from the RPI are {367, 249, 145, 264, 266, 123, 127, 122, 1317, 5}, while the MAPI {266, 123, 367, 127, 424, 249, 145, 264, 427, 251}. On the other hand, from Gnutella09, the top-10 ranks of the indices from the RPI are {351, 563, 822, 534, 565, 825, 1389, 1126, 356, 530}, while the MAPI returns {351, 822, 51, 1389, 563, 565, 530, 825, 356, 1074}. 7 common top-10 ranks of indices {367, 249, 145, 265, 226, 123, 127} from the 6,301-node dataset Gnutella08 8 common top-10 ranks of indices {351, 563, 822, 565, 825, 1389, 356, 530} from the 8,114-node dataset Gnutella09.

Conclusion We introduced two Mercer-type kernels: Min-1 and Min-2. We proposed two multiplication-avoiding power iterations (MAPIs) based on Min-1 and Min-2 operations: Min1-PI and Min2-PI In our image reconstruction experiment, our Min2-PI returns the highest average PSNR. It overbeats the recursive L1-PCA and the regular power iteration. Our MAPI converges faster than the regular power iteration in the synthetic dataset experiment and the PageRank experiment. Thank you for your listening! Email: hpan21@uic.edu