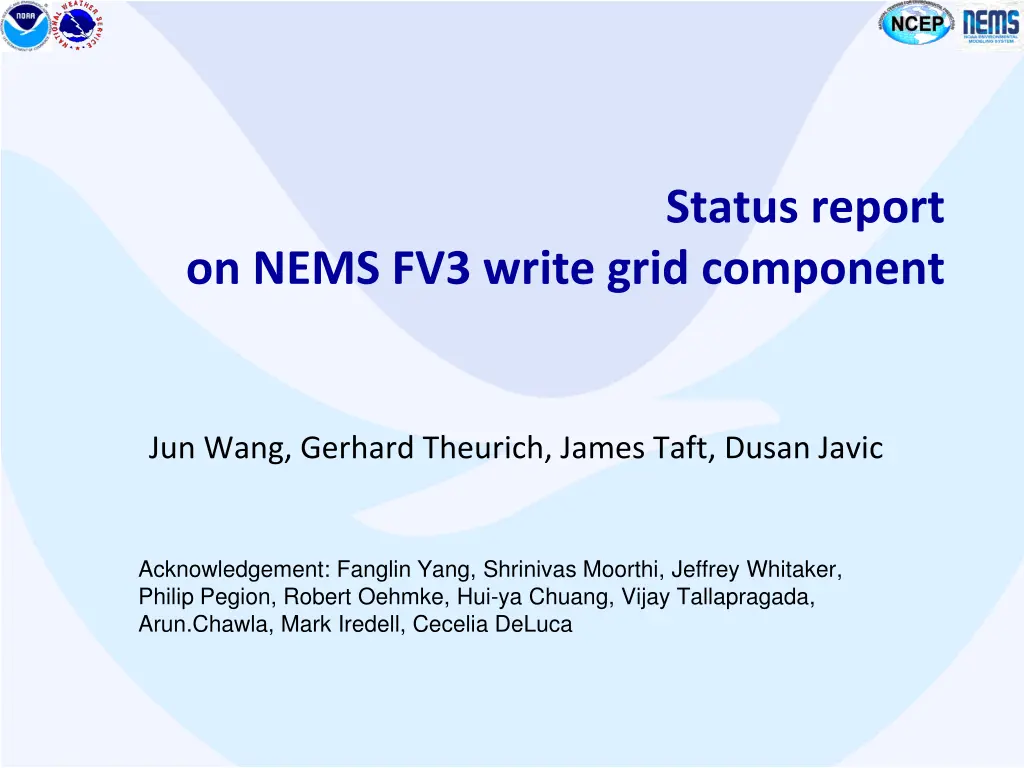

NEMS FV3 Write Grid Component Overview

Understand the role of the NEMS FV3 Write Grid Component in handling forecast data, processing results, and facilitating downstream processes. Learn about its structure, capabilities, and parallelization methods within the NEMSfv3gfs framework. Explore how it contributes to the Unified Earth Science System and supports both standalone and coupled modes of the FV3 model.


Presentation Transcript

Status report on NEMS FV3 write grid component

Jun Wang, Gerhard Theurich, James Taft, Dusan Javic

Acknowledgement: Fanglin Yang, Shrinivas Moorthi, Jeffrey Whitaker, Philip Pegion, Robert Oehmke, Hui-ya Chuang, Vijay Tallapragada, Arun Chawla, Mark Iredell, Cecelia DeLuca

NEMSfv3gfs

NEMSfv3gfs is built as the base of the unified earth science system, with FV3 as the atmosphere component. The FV3 CAP represents the atmosphere grid component. The FV3 model (including dynamics and physics) is implemented as the atmospheric forecast grid component, with FV3 dynamics, IPD and GFS physics. Output and I/O-related downstream processes are implemented as the write grid component. The output data are represented as ESMF fields carrying both metadata and data values, and these fields are stored in the export state of the forecast grid component. ESMF regridding is used to transfer the data from the forecast grid component to the write grid component on the desired grid. Downstream jobs such as post-processing can be run on the write grid component, where all the output data are available. This infrastructure supports the FV3 model in both standalone and coupled modes.
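A minimal Python sketch of that data flow, assuming a field is nothing more than a name, units, a grid label and a data array. `Field`, `forecast_export_state` and `write_component_consume` are hypothetical names for illustration, not the ESMF or NEMS API. The point is that each field carries its own metadata, so the write side can build its output without asking the forecast side anything.

```python
from dataclasses import dataclass, field
from typing import Dict, List


@dataclass
class Field:
    """Hypothetical self-describing field: metadata travels with the values."""
    name: str
    units: str
    grid: str
    data: List[float]
    attrs: Dict[str, str] = field(default_factory=dict)


def forecast_export_state() -> Dict[str, List[Field]]:
    """Forecast side: fill field bundles and attach them to an export state."""
    dyn = [Field("ugrd", "m/s", "cubed_sphere", [1.0, 2.0]),
           Field("tmp", "K", "cubed_sphere", [280.0, 281.5])]
    phy = [Field("prate", "kg/m**2/s", "cubed_sphere", [0.0, 1e-5])]
    return {"dyn": dyn, "phy": phy}


def write_component_consume(import_state: Dict[str, List[Field]]) -> List[str]:
    """Write side: describe each output record using only the metadata in the fields."""
    records = []
    for bundle_name, bundle in import_state.items():
        for f in bundle:
            records.append(f"{bundle_name}:{f.name} [{f.units}] on {f.grid}, n={len(f.data)}")
    return records


# The forecast export state plays the role of the write component's import state.
for line in write_component_consume(forecast_export_state()):
    print(line)
```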

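NEMSfv3gfs code structure

[Figure: NEMSfv3gfs code structure. Labels, roughly top to bottom: Program Main; NEMS description layer; NEMS grid comp; Earth grid comp (ensemble); NEMS NUOPC layer & mediator; OCN, FV3, sea ice, other and Mediator components; under FV3, the FCST (dyn, IPD, phys) and Wrt grid components. Key: ESMF grid component, NEMS infrastructure, user's code, Fortran code.]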

NEMS FV3 write grid component

The main tasks of the write grid component are to relieve the forecast tasks of I/O, to process forecast data, and to write out forecast results. The data transferred to the write grid component are ESMF fields, a self-describing data representation, which lets the write grid component process data independently, without querying the forecast component for information. The data transferred to the write grid component can be on a different grid from the forecast grid component. The regridding is done through an ESMF regridding call, and the regridding weights are computed once, in the initialization step. Besides writing history files, the write grid component can run inline post-processing and other downstream processes.
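To show why computing the weights once pays off, here is a minimal sketch, assuming 1-D linear interpolation in place of the real 2-D ESMF regridding: the (destination, source, weight) triples are built once at initialization, and each output time only needs a cheap sparse matrix-vector product. The function names are hypothetical; ESMF keeps its precomputed weights inside a route handle rather than a Python list.

```python
from bisect import bisect_right
from typing import List, Tuple

Weights = List[Tuple[int, int, float]]  # (destination index, source index, weight)


def compute_linear_weights(src_x: List[float], dst_x: List[float]) -> Weights:
    """Initialization step: 1-D linear interpolation weights.

    Assumes src_x is strictly increasing and every dst_x lies in [src_x[0], src_x[-1]].
    """
    weights: Weights = []
    for j, x in enumerate(dst_x):
        i = min(max(bisect_right(src_x, x) - 1, 0), len(src_x) - 2)
        t = (x - src_x[i]) / (src_x[i + 1] - src_x[i])
        weights.append((j, i, 1.0 - t))
        weights.append((j, i + 1, t))
    return weights


def apply_weights(weights: Weights, src: List[float], n_dst: int) -> List[float]:
    """Every output time: a sparse matrix-vector product, no geometry work."""
    dst = [0.0] * n_dst
    for j, i, w in weights:
        dst[j] += w * src[i]
    return dst


src_x = [0.0, 1.0, 2.0, 3.0]
dst_x = [0.5, 1.25, 3.0]
W = compute_linear_weights(src_x, dst_x)         # once, at initialization
for values in ([0.0, 10.0, 20.0, 30.0], [1.0, 1.0, 1.0, 1.0]):
    print(apply_weights(W, values, len(dst_x)))  # [5.0, 12.5, 30.0], then [1.0, 1.0, 1.0]
```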

Parallelization of NEMS FV3 write grid component

[Figure: the forecast grid component runs on its own parallel domain (PE1 ... PEn). Several write grid components, each with its own parallel domain (PE1 ... PEm), handle successive output times (FH=00, FH=03, ..., FH=x); for each output time, the write grid component writes the output files, e.g. nggps3df00, nggps2df00 and pgbf00 for FH=00.]
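One way to read the figure is that output times are handed to the write groups in turn. The sketch below assumes a simple round-robin policy (output time k goes to group k mod write_groups); the source does not state the exact assignment rule, and `assign_output_times` is a hypothetical helper.

```python
from typing import Dict, List


def assign_output_times(output_hours: List[int], write_groups: int) -> Dict[int, List[int]]:
    """Assumed round-robin policy: output time k goes to write group k % write_groups."""
    plan: Dict[int, List[int]] = {g: [] for g in range(write_groups)}
    for k, fh in enumerate(output_hours):
        plan[k % write_groups].append(fh)
    return plan


# Three-hourly output over 24 h, shared by 2 write groups.
print(assign_output_times(list(range(0, 25, 3)), 2))
```

With this layout, while one group is still writing FH=x, the forecast keeps integrating and the next output time goes to another group, so in this picture the forecast only has to wait when every write group is still busy.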

Current output capabilities in NEMSfv3gfs

Without the write grid component (GFDL write): output files are on the native cubed sphere grid in six tiles, one netCDF file per tile, each containing all output times.

With the write grid component:
- Output history files on the cubed sphere grid in six tiles, one netCDF file per tile at each output time
- Output history files on the global Gaussian grid, one global file at each output time
- Output in netCDF format
- Output in NEMSIO format
- Native domain direction: south->north and top->bottom
- Operational domain direction: north->south and bottom->top

Output history files in cubed sphere grid using write grid comp

Setting up the write grid comp:
- The forecast grid component is created. The cubed sphere grid is created in the forecast grid component, and ESMF field bundles are created and filled with ESMF fields in fv3 dynamics and gfsphysics. These field bundles are attached to the export state of the forecast grid component.
- The cubed sphere grid is defined in the write grid comp; the field bundles from the forecast grid comp are mirrored on the write grid and added to the import state of the write grid component.
- The field bundle route handles, which contain the data redistribution information and the regridding weights, are computed and stored once, in the fv3_cap initialization step.
- Data are transferred to the write grid component using ESMF regridding (only data redistribution in this case, since source and destination are the same cubed sphere grid).
- The 6-tile history files are written in netCDF format using ESMF FieldBundle write; the results are verified against the GFDL write.
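When source and destination grids are identical, "regridding" reduces to moving values from the forecast decomposition to the write decomposition. A minimal sketch, assuming a 1-D block decomposition of a flat index space instead of the real 6-tile, 2-D decomposition; the plan is built once and then applied as a pure copy, with no weights. All names are hypothetical.

```python
from typing import List, Tuple


def block_bounds(n: int, ntasks: int) -> List[Tuple[int, int]]:
    """[start, end) of each task's block in a simple 1-D block decomposition."""
    base, extra = divmod(n, ntasks)
    bounds, start = [], 0
    for t in range(ntasks):
        size = base + (1 if t < extra else 0)
        bounds.append((start, start + size))
        start += size
    return bounds


def owner(i: int, bounds: List[Tuple[int, int]]) -> Tuple[int, int]:
    """Task owning global index i, and i's local offset within that task."""
    for t, (lo, hi) in enumerate(bounds):
        if lo <= i < hi:
            return t, i - lo
    raise IndexError(i)


def build_route(n: int, n_src: int, n_dst: int):
    """Computed once: for each global point, (src task, src offset, dst task, dst offset)."""
    src_b, dst_b = block_bounds(n, n_src), block_bounds(n, n_dst)
    return [owner(i, src_b) + owner(i, dst_b) for i in range(n)], dst_b


def redistribute(route, dst_bounds, src_blocks):
    """Applied at every output time: copy values, no interpolation."""
    dst_blocks = [[0.0] * (hi - lo) for lo, hi in dst_bounds]
    for st, so, dt, do in route:
        dst_blocks[dt][do] = src_blocks[st][so]
    return dst_blocks


# 10 points: 4 "forecast" tasks -> 3 "write" tasks.
route, dst_b = build_route(10, 4, 3)
src = [[float(i) for i in range(lo, hi)] for lo, hi in block_bounds(10, 4)]
print(redistribute(route, dst_b, src))
```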

Timing comparison: GFDL write vs. write grid comp with native cubed sphere 6-tile output (FMS timer output from task [0]; C768, 1-day forecast with hourly output, 64+6 nodes, 2*96 for the write grid comp, on Theia)

| Block | GFDL write: total time (s) | Write grid comp, native 6-tile output: total time (s) |
|---|---|---|
| ATMOS_INIT | 11.8409 | 14.6361 |
| TOTAL | 1232.7345 | 1044.4040 |
| NGGPS_IC | 9.3757 | 10.4291 |
| COMM_TOTAL | 360.5388 | 321.4978 |
| FV_DIAG | 220.8266 | 20.6646 |

In both runs, user time equals total time, system time is 0.0000, and GID is 0 for every block.
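The differences can be read straight off the timing output above; this small calculation uses only those numbers. Positive values are time saved by the write grid comp, negative values mean it was slower for that block.

```python
# Total times (s) copied from the timing output above.
gfdl = {"ATMOS_INIT": 11.8409, "TOTAL": 1232.7345, "NGGPS_IC": 9.3757,
        "COMM_TOTAL": 360.5388, "FV_DIAG": 220.8266}
wrt = {"ATMOS_INIT": 14.6361, "TOTAL": 1044.4040, "NGGPS_IC": 10.4291,
       "COMM_TOTAL": 321.4978, "FV_DIAG": 20.6646}

for block in gfdl:
    saved = gfdl[block] - wrt[block]
    print(f"{block:10s} saved {saved:8.2f} s ({100 * saved / gfdl[block]:6.1f} %)")
```

TOTAL drops by about 188 s (roughly 15%), FV_DIAG by about 200 s (roughly 91%), while ATMOS_INIT and NGGPS_IC become a few seconds slower.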

Output history files in Gaussian grid

A Gaussian grid is set up in the write grid component; the field bundles from the forecast grid are now mirrored on the Gaussian grid in the write grid component and added to its import state. Data are transferred to the write grid component on the global domain using the ESMF regridding function. Writing the data to netCDF files with ESMF FieldBundle write is very slow (>30 minutes for 1 day of hourly output). A subroutine was created to write out nemsio files sequentially, which is much faster (1050 s for 1 day of hourly output).
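For readers unfamiliar with the target grid: a Gaussian grid is regular in longitude, and its latitudes are the arcsines of the Gauss-Legendre quadrature nodes (the roots of the Legendre polynomial of degree jmo). A minimal sketch using NumPy's `leggauss`, with the imo: 384, jmo: 190 values from the model_configure example in this report; it computes the grid coordinates only and is not the write grid comp's own grid setup.

```python
import numpy as np


def gaussian_grid(imo: int, jmo: int):
    """Regular longitudes and Gaussian latitudes (degrees, south to north), plus quadrature weights."""
    x, w = np.polynomial.legendre.leggauss(jmo)  # nodes in (-1, 1), ascending
    lats = np.degrees(np.arcsin(x))
    lons = np.arange(imo) * (360.0 / imo)
    return lons, lats, w


lons, lats, w = gaussian_grid(384, 190)
print(lats[:3], lats[-3:])   # southernmost and northernmost rows
```

The weights sum to 2, the length of [-1, 1], and the latitudes are symmetric about the equator with no row exactly at a pole.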

Output history files in Gaussian grid (Cont.)

James Taft tested the performance of the write grid comp nemsio output and found the I/O bottleneck. Following the sequential nemsio write, Dusan implemented a sequential netCDF write. Current operational NEMSGSM history nemsio files are in the north-to-south and bottom-to-top direction. To help current downstream jobs test FV3 results, a flip option was added to output nemsio files converted from the native south->north, top->bottom direction to the direction used in current operational nemsio files. Many fields present in operational nemsio files were missing from the NEMSfv3gfs code; those fields have been added.
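The flip itself is a reversal of two axes. A minimal NumPy sketch, assuming a (lev, lat, lon) array with level index 0 at the model top and latitude index 0 at the southernmost row; the target order follows the write_nemsioflip description (N->S and B->T). The function name is hypothetical.

```python
import numpy as np


def flip_to_operational(field: np.ndarray) -> np.ndarray:
    """Native S->N, top->bottom (lev, lat, lon) array -> N->S, bottom->top.

    Assumes axis 0 is the vertical level (index 0 = model top) and axis 1 is
    latitude (index 0 = southernmost row); longitude is left unchanged.
    """
    return np.ascontiguousarray(field[::-1, ::-1, :])


a = np.arange(2 * 3 * 4).reshape(2, 3, 4)
print(flip_to_operational(a)[0, 0])   # bottom level, northernmost row
```

`ascontiguousarray` turns the reversed view into a contiguous copy, which is convenient if the array is then handed to a sequential writer.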

Configuration for write grid component

The settings for the write grid component are in the model configuration file, model_configure:

    quilting:              .true.           ! turn on write grid component
    write_groups:          1                ! the number of write groups
    write_tasks_per_group: 6                ! write tasks in each write group
    num_files:             2                ! number of output files
    filename_base:         'dyn' 'phy'      ! output file names
    output_grid:           'gaussian_grid'  ! output grid
    write_nemsiofile:      .true.           ! output nemsio file; .false. -> netcdf
    write_nemsioflip:      .true.           ! flip to N->S and B->T
    imo:                   384              ! number of points in I direction for Gaussian/latlon grid
    jmo:                   190              ! number of points in J direction for Gaussian/latlon grid
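To make the key/value layout concrete, here is a minimal parser for lines of the form `key: value ! comment`. It is a hypothetical helper written for this report, not the configuration reader NEMS actually uses, and it assumes no `!` appears inside a value. It also shows that this example uses 1 x 6 = 6 write tasks in total.

```python
from typing import Dict, List, Union

Value = Union[bool, int, str, List[str]]

EXAMPLE = """
quilting:              .true.           ! turn on write grid component
write_groups:          1                ! the number of write groups
write_tasks_per_group: 6                ! write tasks in each write group
num_files:             2                ! number of output files
filename_base:         'dyn' 'phy'      ! output file names
output_grid:           'gaussian_grid'  ! output grid
write_nemsiofile:      .true.           ! output nemsio file; .false. -> netcdf
write_nemsioflip:      .true.           ! flip to N->S and B->T
imo:                   384
jmo:                   190
"""


def parse_model_configure(text: str) -> Dict[str, Value]:
    """Parse 'key: value ! comment' lines into Python values (illustrative only)."""
    config: Dict[str, Value] = {}
    for raw in text.splitlines():
        line = raw.split("!", 1)[0].strip()          # drop the "! comment" part
        if ":" not in line:
            continue
        key, value = (part.strip() for part in line.split(":", 1))
        if not value:
            continue
        if value.lower() in (".true.", ".false."):   # Fortran-style logicals
            config[key] = value.lower() == ".true."
        elif value.lstrip("-").isdigit():
            config[key] = int(value)
        else:                                        # one or more quoted strings
            words = [w.strip("'\"") for w in value.split()]
            config[key] = words if len(words) > 1 else words[0]
    return config


cfg = parse_model_configure(EXAMPLE)
print(cfg["filename_base"], cfg["write_groups"] * cfg["write_tasks_per_group"])
```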

Work plan for write grid comp

For the FV3 Q3FY2018 implementation, we propose to implement efficient global Gaussian grid files in nemsio format:
- Generating operational-like nemsio files allows minimal code changes in GFS downstream jobs such as GSI, post-processing, hurricane relocation, and regional models that use GFS as lateral boundary conditions.
- It will help downstream applications evaluate model results produced with appropriate ESMF regridding methods.
- An efficient netCDF write is under development; sample netCDF files will be provided to downstream applications so they can develop a netCDF interface.

For the Q2FY2019 implementation, efficient global Gaussian grid files in netCDF format will be used:
- All GFS downstream jobs will be able to ingest netCDF files.
- netCDF utility tools need to be installed on production machines.

Ongoing work
- Interpolation methods for regridding forecast fields from cubed sphere grid to Gaussian grid
- I/O bottleneck in the fv3 write grid component
- Create operational-like output files; add new fields from advanced microphysics
- Validation of sequential Gaussian grid netCDF write

Interpolation methods for regridding forecast fields from cubed sphere grid to Gaussian grid

The regridding interpolation method is critical to the quality of the output data. Currently, all fields from dynamics use bilinear interpolation, and all fields from physics use nearest-neighbor interpolation. Phil's presentation on 20170206 states:
- Handling the wind field as vectors fixes the initialization shock at the poles.
- Assuming a U.S. standard atmosphere lapse rate (6.5 °C/km) improved the surface pressure field around steep orography and reduced the associated initialization shock.
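A minimal sketch of why a lapse-rate-based pseudo surface pressure helps, assuming the pseudo field is surface pressure extrapolated to z = 0 with the 6.5 K/km standard-atmosphere lapse rate and the local surface temperature; the exact reference height and temperature used in NEMSfv3gfs are not given here, so treat this as an illustration. Between two columns at 0 m and 3000 m, interpolating surface pressure directly overestimates the value at a 1500 m target, while interpolating the pseudo field and recovering surface pressure at the target height does not.

```python
G = 9.80665                  # gravity, m s^-2
RD = 287.05                  # gas constant for dry air, J kg^-1 K^-1
GAMMA = 0.0065               # standard-atmosphere lapse rate, K m^-1 (6.5 °C/km)
EXPONENT = G / (RD * GAMMA)  # about 5.26


def to_pseudo_ps(ps: float, zs: float, ts: float) -> float:
    """Extrapolate surface pressure ps (Pa) at height zs (m), temperature ts (K), to z = 0."""
    t0 = ts + GAMMA * zs
    return ps * (t0 / ts) ** EXPONENT


def from_pseudo_ps(p0: float, zs: float, ts: float) -> float:
    """Inverse step: recover surface pressure at height zs from the z = 0 pseudo pressure."""
    t0 = ts + GAMMA * zs
    return p0 * (ts / t0) ** EXPONENT


# Two neighbouring standard-atmosphere columns at 0 m and 3000 m, target point at 1500 m.
ts_a, ts_b = 288.15, 288.15 - GAMMA * 3000.0
ps_a = 101325.0
ps_b = from_pseudo_ps(101325.0, 3000.0, ts_b)
zs_mid, ts_mid = 1500.0, 288.15 - GAMMA * 1500.0

naive = 0.5 * (ps_a + ps_b)                                   # interpolate ps directly
p0_mid = 0.5 * (to_pseudo_ps(ps_a, 0.0, ts_a) + to_pseudo_ps(ps_b, 3000.0, ts_b))
via_pseudo = from_pseudo_ps(p0_mid, zs_mid, ts_mid)           # recover at the target height
print(round(naive), round(via_pseudo))
```

Because pressure decreases convexly with height, the straight average of the two surface pressures is biased high wherever the orography changes steeply, which is the error the pseudo field avoids.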

Interpolation methods for regridding forecast fields from cubed sphere grid to Gaussian grid (cont.)

Current status:
- Surface pressure is implemented as a pseudo surface pressure field, computed with a constant lapse rate in the forecast grid comp, and then recovered as surface pressure on the write grid comp.
- The wind components are used to form the wind vector in 3D Cartesian coordinates; the 3D Cartesian wind vectors are regridded to the Gaussian grid, and each vector is then projected back onto the local directions on the new grid.
- Fields from the operational sfc file will be divided into two categories: fields using bilinear interpolation and fields using nearest-neighbor interpolation. Two field bundles will be created, but they will be output in a single physics file.
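The vector treatment of the wind can be sketched on the unit sphere: (u, v) is turned into an Earth-centred Cartesian vector using the local east and north unit vectors, the Cartesian components are interpolated, and the result is projected back onto the east and north directions at the target point. The helper names are hypothetical, and a plain two-point average stands in for the ESMF regridding step.

```python
import math
from typing import Tuple

Vec3 = Tuple[float, float, float]


def local_basis(lon_deg: float, lat_deg: float) -> Tuple[Vec3, Vec3]:
    """Unit east and north vectors at (lon, lat), in Earth-centred Cartesian coordinates."""
    lam, phi = math.radians(lon_deg), math.radians(lat_deg)
    east = (-math.sin(lam), math.cos(lam), 0.0)
    north = (-math.sin(phi) * math.cos(lam), -math.sin(phi) * math.sin(lam), math.cos(phi))
    return east, north


def to_cartesian(u: float, v: float, lon: float, lat: float) -> Vec3:
    e, n = local_basis(lon, lat)
    return tuple(u * ei + v * ni for ei, ni in zip(e, n))


def to_local(vec: Vec3, lon: float, lat: float) -> Tuple[float, float]:
    """Project a Cartesian wind onto the local east/north directions (drops any radial part)."""
    e, n = local_basis(lon, lat)
    return sum(a * b for a, b in zip(vec, e)), sum(a * b for a, b in zip(vec, n))


# One straight 10 m/s flow across the pole, seen at lon 0 (northward) and lon 180 (southward).
vec_a = to_cartesian(0.0, 10.0, 0.0, 89.5)
vec_b = to_cartesian(0.0, -10.0, 180.0, 89.5)
mean = tuple(0.5 * (a + b) for a, b in zip(vec_a, vec_b))
u, v = to_local(mean, 90.0, 89.5)
print("component-wise average:", (0.0, 0.5 * (10.0 - 10.0)))  # the flow vanishes
print("vector average:", (round(u, 3), round(v, 3)))          # about 10 m/s survives
```

Averaging u and v as scalars near the pole cancels a flow that is physically uniform, because the local east/north directions rotate rapidly there; averaging in Cartesian space keeps it.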

IO bottleneck in fv3 write grid component

James Taft identified the I/O bottleneck in the fv3 write grid component:
- The startup I/O time for FV3/NEMS nemsio output using 4x48 for I/O is significant.
- For the n=1536 configuration, it takes about 516 s of the 2173 s run time.
- It turns out that ESMF route handle generation takes most of the initialization time.

Current status:
- Jim is speeding up the nemsio library on Cray so that fewer write groups can be used.
- A proposal has been made to compute the route handle once and then copy it to the other write grid components, reducing route handle generation time.
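The 516 s out of 2173 s is roughly 24% of the run. The proposed fix amounts to "generate once, copy many times". A minimal sketch of that idea with four write groups, as in the 4x48 case; `RouteHandleCache` is hypothetical, a dict stands in for a route handle, and this is not how ESMF itself transfers route handles.

```python
import copy


class RouteHandleCache:
    """Generate an expensive route handle once; hand every write group its own copy."""

    def __init__(self, generate):
        self._generate = generate   # expensive step, e.g. computing regridding weights
        self._master = None
        self.generations = 0        # how many times the expensive step actually ran

    def for_group(self, group: int):
        if self._master is None:
            self._master = self._generate()
            self.generations += 1
        return copy.deepcopy(self._master)  # copying is assumed cheap relative to generation


cache = RouteHandleCache(lambda: {"weights": [(0, 0, 0.5), (0, 1, 0.5)]})
handles = [cache.for_group(g) for g in range(4)]   # 4 write groups
print(cache.generations)                           # generated only once
```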

Create operational-like output files, add new fields from advanced microphysics

The conservative interpolation method may need to be considered for radiation flux fields. Masks need to be applied properly to the masked fields. Advanced microphysics schemes have been added in NEMSfv3gfs, and a general way to identify those schemes and to output their related fields has been worked on. There is discussion on whether to output convective clouds in the physics file (currently all 2D fields), which would be used by radiance assimilation in GSI.
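Why conservative interpolation matters for fluxes can be shown in one dimension: each destination cell receives the overlap-weighted mean of the source cells it covers, so the integral of the field (value times cell width) is preserved. This is a 1-D first-order sketch; ESMF's conservative regridding on the sphere works with cell areas instead of lengths, and the function name is hypothetical.

```python
from typing import List


def conservative_remap(src_edges: List[float], src_vals: List[float],
                       dst_edges: List[float]) -> List[float]:
    """First-order conservative remap of cell averages between two 1-D grids.

    Each destination value is the overlap-weighted mean of the source cells it
    covers, so sum(value * width) is the same on both grids over a common span.
    """
    out = []
    for j in range(len(dst_edges) - 1):
        lo, hi = dst_edges[j], dst_edges[j + 1]
        acc = 0.0
        for i in range(len(src_vals)):
            overlap = min(hi, src_edges[i + 1]) - max(lo, src_edges[i])
            if overlap > 0:
                acc += overlap * src_vals[i]
        out.append(acc / (hi - lo))
    return out


src_edges, src_vals = [0.0, 1.0, 2.0, 3.0, 4.0], [100.0, 0.0, 300.0, 0.0]  # e.g. a flux in W m^-2
dst_edges = [0.0, 1.5, 4.0]
dst_vals = conservative_remap(src_edges, src_vals, dst_edges)
print(sum(v * (b - a) for v, a, b in zip(src_vals, src_edges, src_edges[1:])),
      sum(v * (b - a) for v, a, b in zip(dst_vals, dst_edges, dst_edges[1:])))
```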

Sequential Gaussian grid netCDF write

Following the example of the sequential nemsio write, Dusan created a subroutine to write out the Gaussian grid netCDF file sequentially. Tests at C768 on Cray show that the sequential write is significantly faster than the ESMF parallel write, and also faster than writing the nemsio file. The write grid comp needs to be updated to bring the code changes made in the current nemsio write back into the write grid comp. The netCDF file needs to be verified against the nemsio file so that GFS downstream jobs only need to develop a netCDF interface.
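The sequential pattern is: gather each write task's piece of the global field onto one task, then write the whole record with a single stream of I/O. A minimal sketch, assuming latitude-band pieces and a made-up little-endian record layout that stands in for the nemsio or netCDF library; in practice the gather would be an MPI collective such as MPI_Gatherv. The helper names are hypothetical.

```python
import io
import struct
from typing import List, Optional, Tuple


def gather_rows(pieces: List[Tuple[int, List[List[float]]]], nlat: int) -> List[List[float]]:
    """Root task: place each (start row, rows) latitude band into one global field."""
    glob: List[Optional[List[float]]] = [None] * nlat
    for start, rows in pieces:
        for k, row in enumerate(rows):
            glob[start + k] = row
    if any(r is None for r in glob):
        raise ValueError("missing latitude rows")
    return [r for r in glob if r is not None]


def write_sequential(stream, record_name: str, glob: List[List[float]]) -> None:
    """One task writes the whole global record: name, shape, then rows of float32."""
    name = record_name.encode("ascii")
    stream.write(struct.pack("<I", len(name)) + name)
    nlat, nlon = len(glob), len(glob[0])
    stream.write(struct.pack("<II", nlat, nlon))
    for row in glob:
        stream.write(struct.pack(f"<{nlon}f", *row))


# Three write tasks own bands of a 5 x 4 field and report out of order.
pieces = [(3, [[3.0] * 4, [4.0] * 4]), (0, [[0.0] * 4, [1.0] * 4]), (2, [[2.0] * 4])]
buf = io.BytesIO()
write_sequential(buf, "tmp", gather_rows(pieces, 5))
print(len(buf.getvalue()), "bytes written")
```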

Future work on write grid component
- Inline post and potentially other downstream jobs
- Change write grid resolution and frequency for forecast2
- Output Gaussian grid from stretched cubed sphere grid
- Output history files for regional nested grid
- Standalone regional model output
- Integrate FMS diag_manager functionality within NEMS