Neural Net Distributed Simulation: Energy and Speed of Embedded vs Server Nodes

This study compares the energy efficiency and processing speed of ARM Cortex-A15-based NVIDIA Jetson TK1 boards and Intel Xeon-based dual-socket nodes for distributed simulation using DPSNN. The research, conducted by Pier Stanislao Paolucci at INFN APE Lab, focuses on optimizing performance trade-offs between embedded and server nodes in a neural network simulation setup. By examining the energy-to-solution ratio and computational speed of dual-socket quad-core nodes, the study sheds light on resource utilization and performance outcomes in distributed simulations. Explore the findings and implications for enhancing simulation efficiency.

Download Presentation

Please find below an Image/Link to download the presentation.

The content on the website is provided AS IS for your information and personal use only. It may not be sold, licensed, or shared on other websites without obtaining consent from the author.If you encounter any issues during the download, it is possible that the publisher has removed the file from their server.

You are allowed to download the files provided on this website for personal or commercial use, subject to the condition that they are used lawfully. All files are the property of their respective owners.

The content on the website is provided AS IS for your information and personal use only. It may not be sold, licensed, or shared on other websites without obtaining consent from the author.

E N D

Presentation Transcript

WP5: Neural Net Distributed Simulation (DPSNN). Energy to solution and speed of embedded vs server dual-socket quad-core nodes. ARM Cortex-A15 in 2x NVIDIA Jetson TK1 boards vs Intel Xeon quad-core in dual-socket node Pier Stanislao Paolucci for INFN APE Lab: R. Ammendola, A. Biagioni, O. Frezza, F. Lo Cicero, A. Lonardo, M. Martinelli, P. S Paolucci, E. Pastorelli, F. Simula, P. Vicini

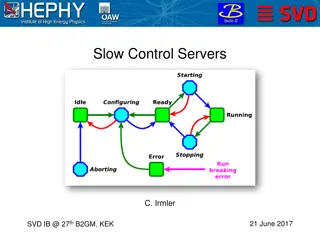

DPSNN STDP Status Distributed Simulator of Plastic Spiking Neural Networks with synaptic Spike Timing Dependent Plasticity State-of-the-art Application AND architectural benchmark: Designed in EURETILE EU Project 2011-2014: arXiv:1310.8478 Speed-up of CORTICONIC EU Project simulations (FIRST PART of this presentation): x420 speed-up on 4 servers and 100 MPI processes COSA: comparison of power/energy of distributed execution on embedded and server quad-cores: (SECOND PART of this presentation) arXiv:1505.03015 Benchmark in next-to-start EXANEST EU Project and in piCOLO proposal COSA Meeting - Roma - 2015 05 18

Acceleration (up to now, x 420) of CORTICONIC simulations through parallelization/distribution techniques on many-processor architecture Elena Pastorelli and Pier Stanislao Paolucci for INFN Roma - APE Lab* P. Del Giudice, M. Mattia - ISS Roma *INFN Roma APE Lab: R. Ammendola, A. Biagioni, O. Frezza, F. Lo Cicero, A. Lonardo, M. Martinelli, P. S Paolucci, E. Pastorelli, F. Simula, P. Vicini CORTICONIC 2015 04 28 - Barcellona

A Challenging Problem The simulation of the cortical field activity can be accelerated using parallel/distributed many-processor computers. However, there are several challenges, including: o Neural networks heavily interconnected at multiple distances, local activity rapidly produces effects at all distances Prototype of non-trivial parallelization problem o Each neural spike originates a cascade of synaptic events at multiple times: t + ts Complex data structures and synchronization. Mixed time-driven (delivery of spiking messages and neural dynamic) and event-driven (synaptic activity) o Multiple time-scales (neural, synaptic, long and short term plasticity models) Non-trivial synchronization at all scales o Gigantic synaptic data-base. A key issue for large scale simulations Clever parallel resource management required. CORTICONIC 28 April 2015 4

x 420 acceleration delivered 60 sec simulated activity of 2 M neuron, 3.5 G synapses Organized in a grid of 40x40 cortical columns Starting point (before parallelization) 1000h elapsed time required (single process running on a single processor) After application of our distribution/parallelization techniques now requires ~2h 20m elapsed time o x 420 acceleration on 4 servers on 100 processes o (dual socket Intel Xeon CPU E5-2650 0 @ 2.00GHz 8 hw core per socket) o 6.1 G syn fit on a 4 server cluster memory o for an introduction to our mixed time and event driven parallelization/distribution techniques, see arXiv:1310.8478 CORTICONIC 28 April 2015 5

Speed-up through parallelization Strong scaling (i.e. fixed problem size) of the 2M neuron, 3.5 G synapses configuration measured Parallelization Speed-up 2 M neu, 3.5 G syn 512 256 For larger problem sizes, good scaling expected using higher number of hardware resources and software processes 128 Speed-up 64 Speed-up 32 16 Exec. time of original non parallel code used as speed-up reference 8 8 16 Number of Processes 32 64 128 256 CORTICONIC 28 April 2015 6

Giga synapses configuration now run in a few hours CORTICONIC 28 April 2015 7

Validation The equivalence between the spiking activity simulated by the original (scalar) and the accelerated (parallel) code has been verified at all hierarchical levels: o Single neuron o Poissonian stimulus o Synaptic messaging o Sub-population o Cortical Module o Cortical Field Original (non parallel) code Parallelized code Main tool: analysis of power spectrum at different hierarchical levels CORTICONIC 28 April 2015 8

Conclusion/Future New parallel/distributed simulator of spiking neural networks relying on mixed time- and event-driven integration Accelerated CORTICONIC simulations (up to now, x 420), Reduced mem consumption large networks possible Future developments: CORTICONIC 28 April 2015 9

COSA: Jetson boards set-up details Initial standalone bootstrap of each board (no network) Ubuntu 14.04 LTS (GNU /Linux 3.10.24-g6a2d13a arm7v1) sudo apt-mark hold xserver-xorg-core cd {$HOME}/NVIDIA-INSTALLER; sudo ./installer.sh; reboot now Assign name to each board (e.g. tk00.ape and tk01.ape) (and modified /etc/hostname and /etc/hosts on each board) Use a Ethernet switch to interconnect the Jetson boards Install c++: sudo apt-get install g++ Install OpenMPI 1.8.1 on each Jetson board mkdir /opt/openmpi-1.8.1; cd ~; wget https://www.open-mpi.org/... tar xvf openmpi-1.8.1.tar.gz; cd openmpi-1.8.1; mkdir build; cd build ../configure -- prefix=/opt/openmpi-1.8.1; make; sudo make install Added to ~/.bashrc (just at the beginning, before the exit on non interactive login): export PATH= $PATH:/opt/openmpi-1.8.1/bin export LD_LIBRARY_PATH= $LD_LIBRARY_PATH:/opt/openmpi-1.8.1/bin mpirun np 8 host tk00.ape, tk01.ape hostname Prints 8 output lines tk00 tk01 tk01 COSA Meeting - Roma - 2015 05 18

COSA: same neural net sim. launched on embedded platform node : dual-socket node emulator: 2 x NVIDIA Jetson TK1 board (Tegra K1 chip): 2 x quad-core ARM Cortex-A15@2.3GHz, Ethernet interconnected (100 Mb mini-switch) DPSNN distributed on 8 MPI processes / node server platform node: dual-socket server SuperMicro X8DTG-D 1U: 2 x quad-core Intel Xeon CPUs (E5620@2.4GHz) 2 x HyperThreading DPSNN distributed on 16 MPI processes / node COSA Meeting - Roma - 2015 05 18

Energy to Solution, Speed and Power 2.2 micro-Joule per simulated synaptic event on the embedded dual socket node 4.4 times better than spent by server platform instantaneous power consumption: embedded 14.4 times better than server server platform 3.3 faster than embedded All inclusive, measured using amperometric clamp on 220V@50Hz power supply on: Details in arXiv:1505.03015 May 2015 COSA Meeting - Roma - 2015 05 18

DPSNN in COSA: possible next steps Accelerate tasks on embedded nodes, e.g.: Random numbers Interconnect optimizations Evaluate scaling, energy to solution, instantaneous power and speed on larger number of COSA embedded nodes N x 2 x Tegra Boards ( and from K1 to X1) ARM + FPGA + custom interconnet Compare scaling with large server platforms Key point: low memory / embedded board ? COSA Meeting - Roma - 2015 05 18